Learning LangChain

Building AI and LLM Applications with LangChain and LangGraph

Praise for Learning LangChain

With clear explanations and actionable techniques, this is the go-to resource for anyone looking to harness LangChain’s power for production-ready generative AI and agents. A must-read for developers aiming to push the boundaries of this platform.

Tom Taulli, IT consultant and author of AI-Assisted Programming

This comprehensive guide on LangChain covers everything from document retrieval and indexing to deploying and monitoring AI agents in production. With engaging examples, intuitive illustrations, and hands-on code, this book made learning LangChain interesting and fun!

Rajat K. Goel, senior software engineer, IBM

This book is a comprehensive LLM guide covering fundamentals to production, packed with technical insights, practical strategies, and robust AI patterns.

Gourav Singh Bais, senior data scientist and senior technical content writer, Allianz Services

Prototyping generative AI apps is easy—shipping them is hard. The strategies and tools in Learning LangChain make it possible to turn ideas into modern, production-ready applications.

James Spiteri, director of product management for security, Elastic

Learning LangChain provides a clear path for transforming how you build AIpowered applications. By breaking down flexible architectures and robust checkpointing, it offers a strong foundation for creating reliable, productionready AI agents at scale.

David O’Regan, engineering manager for AI/ML, GitLab

Learning LangChain helped us skip the boilerplate for debugging and monitoring. The many helpful patterns and tooling insights allowed us to move fast and deploy AI apps with confidence.

Chris Focke, chief AI scientist, AppFolio

Teaching LangChain through clear, actionable examples, this book is a gateway to agentic applications that are as inspiring as Asimov’s sci-fi novels.

Ilya Meyzin, SVP head of data science, Dun & Bradstreet

Learning LangChain

Building AI and LLM Applications with LangChain and LangGraph

Mayo Oshin and Nuno Campos

Learning LangChain

by Mayo Oshin and Nuno Campos

Copyright © 2025 Olumayowa “Mayo” Olufemi Oshin. All rights reserved.

Printed in the United States of America.

Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

- Acquisitions Editor: Nicole Butterfield •

- Development Editor: Corbin Collins •

- Production Editor: Clare Laylock •

- Copyeditor: nSight, Inc. •

- Proofreader: Helena Stirling •

- Indexer: Judith McConville •

- Interior Designer: David Futato •

- Cover Designer: Karen Montgomery •

- Illustrator: Kate Dullea •

- February 2025: First Edition •

Revision History for the First Edition

2024-02-13: First Release •

See http://oreilly.com/catalog/errata.csp?isbn=9781098167288 for release details.

The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Learning LangChain, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc.

The views expressed in this work are those of the authors and do not represent the publisher’s views. While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-098-16728-8

[LSI]

- Preface 1.

- 1. LLM Fundamentals with LangChain 2.

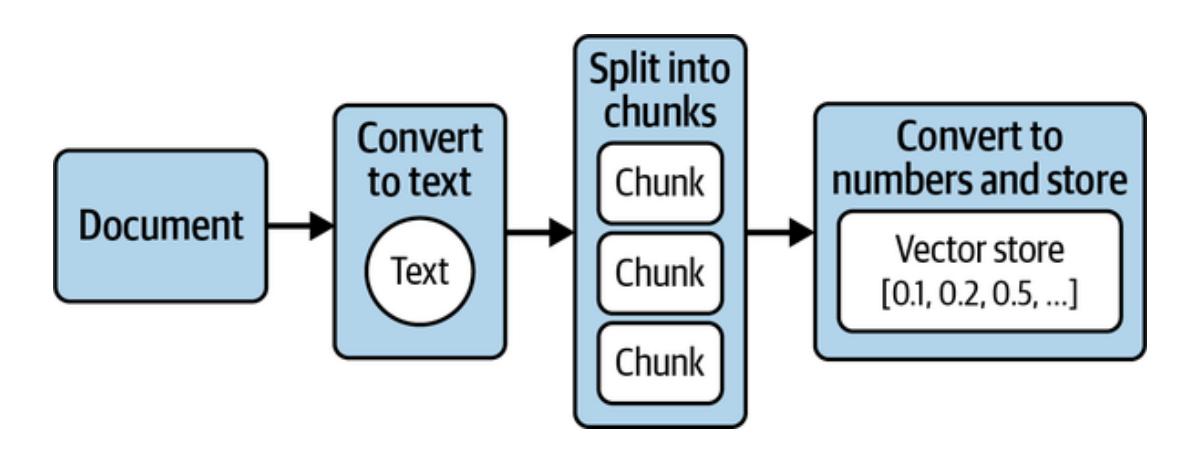

- 2. RAG Part I: Indexing Your Data 3.

- The Goal: Picking Relevant Context for LLMs 1.

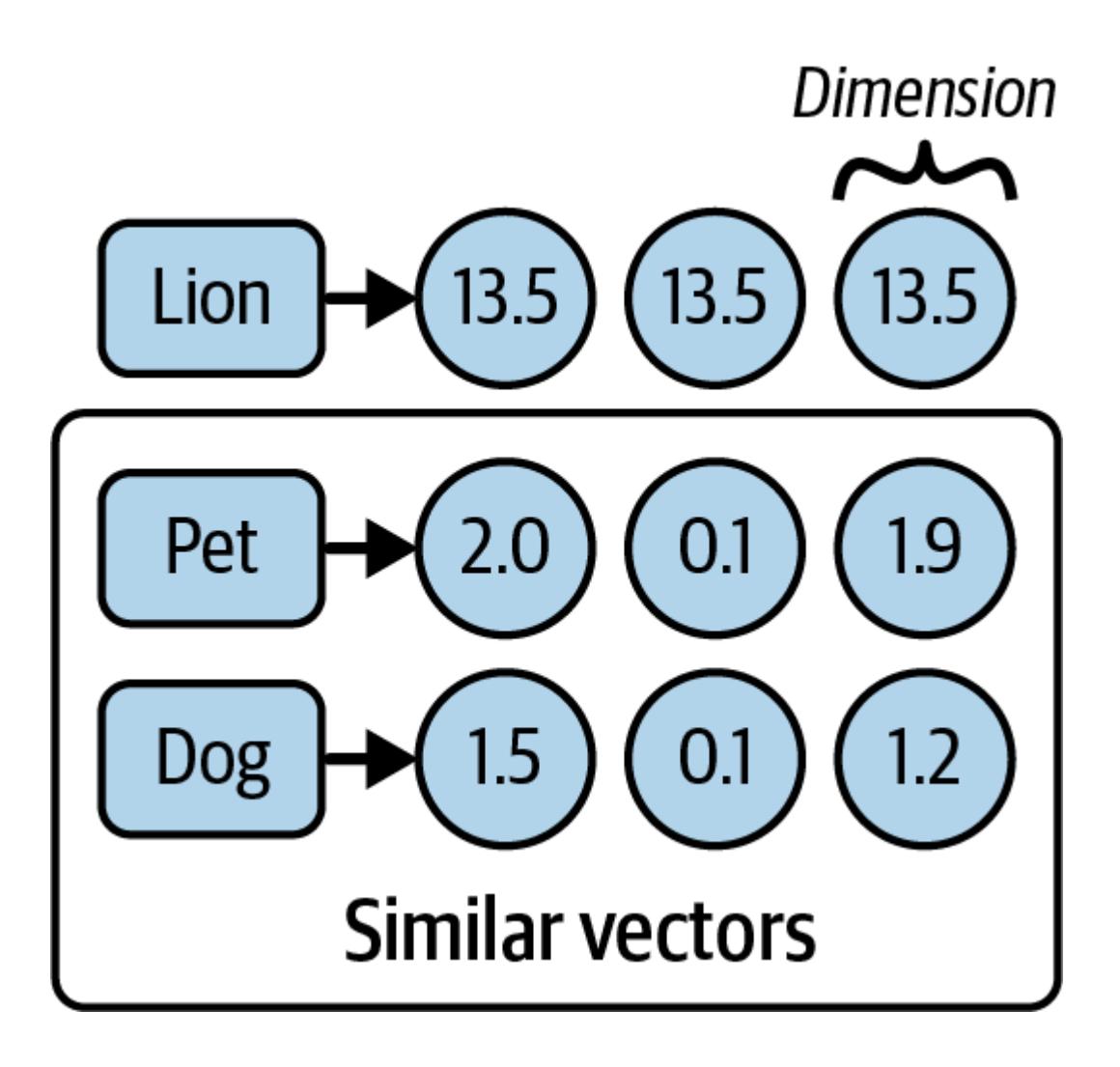

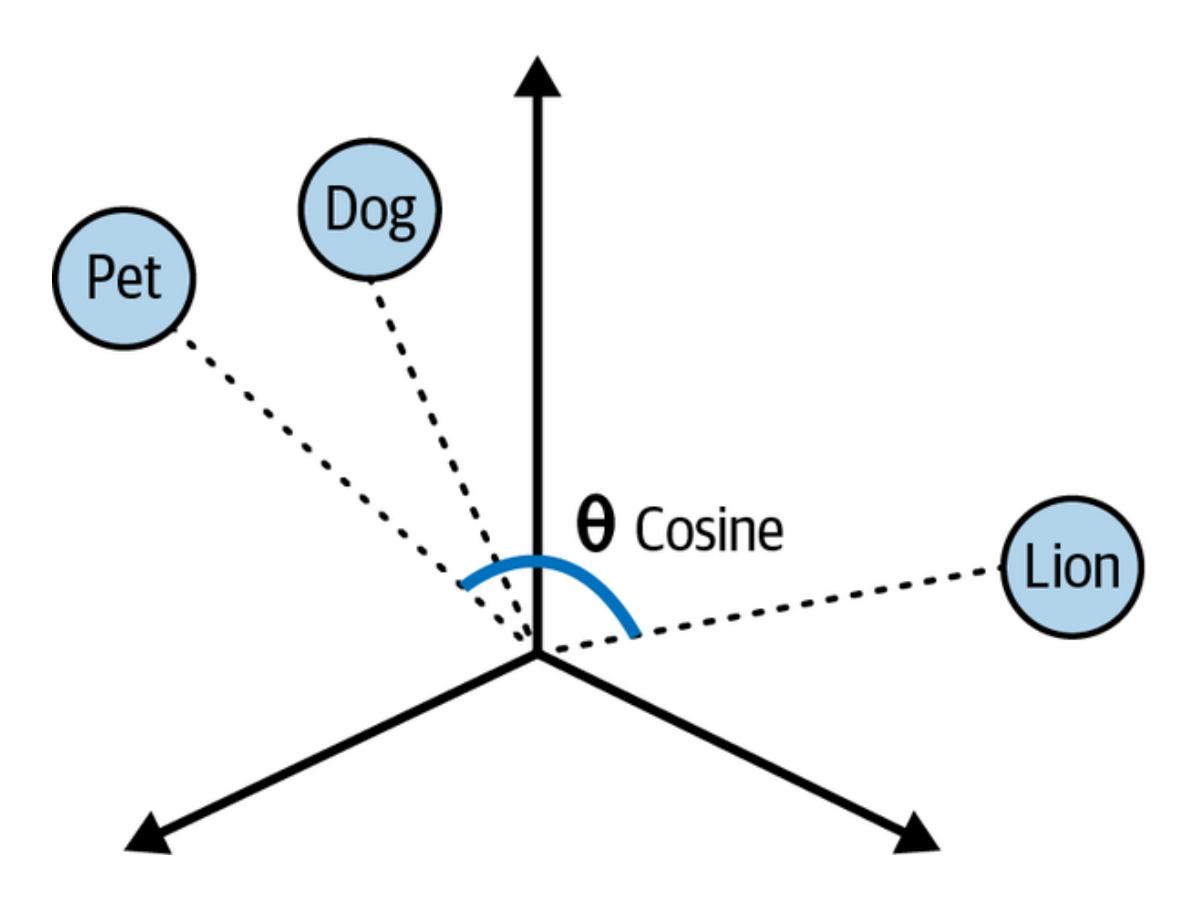

- Embeddings: Converting Text to Numbers 2.

- Converting Your Documents into Text 3.

- Splitting Your Text into Chunks 4.

- Generating Text Embeddings 5.

- Storing Embeddings in a Vector Store 6.

- Tracking Changes to Your Documents 7.

- Indexing Optimization 8.

- Summary 9.

- 3. RAG Part II: Chatting with Your Data 4.

- 4. Using LangGraph to Add Memory to Your Chatbot 5.

- Modifying Chat History 5.

- Summary 6.

- 5. Cognitive Architectures with LangGraph 6.

- 6. Agent Architecture 7.

- 7. Agents II 8.

- 8. Patterns to Make the Most of LLMs 9.

- 9. Deployment: Launching Your AI Application into Production 10.

- 10. Testing: Evaluation, Monitoring, and Continuous Improvement 11.

- The Preproduction Stage 3.

- Production 4.

- Summary 5.

- 11. Building with LLMs 12.

- Index 13.

- About the Authors 14.

Preface

On November 30, 2022, San Francisco–based firm OpenAI publicly released ChatGPT—the viral AI chatbot that can generate content, answer questions, and solve problems like a human. Within two months of its launch, ChatGPT attracted over 100 million monthly active users, the fastest adoption rate of a new consumer technology application (so far). ChatGPT is a chatbot experience powered by an instruction and dialogue-tuned version of OpenAI’s GPT-3.5 family of large language models (LLMs). We’ll get to definitions of these concepts very shortly.

Note

Building LLM applications with or without LangChain requires the use of an LLM. In this book we will be making use of the OpenAI API as the LLM provider we use in the code examples (pricing is listed on its platform). One of the benefits of working with LangChain is that you can follow along with all of these examples using either OpenAI or alternative commercial or open source LLM providers.

Three months later, OpenAI released the ChatGPT API, giving developers access to the chat and speech-to-text capabilities. This kickstarted an uncountable number of new applications and technical developments under the loose umbrella term of generative AI.

Before we define generative AI and LLMs, let’s touch on the concept of machine learning (ML). Some computer algorithms (imagine a repeatable recipe for achievement of some predefined task, such as sorting a deck of cards) are directly written by a software engineer. Other computer algorithms are instead learned from vast amounts of training examples—the job of the software engineer shifts from writing the algorithm itself to writing the training logic that creates the algorithm. A lot of attention in the ML field went into developing algorithms for predicting any number of things, from tomorrow’s weather to the most efficient delivery route for an Amazon driver.

With the advent of LLMs and other generative models (such as diffusion models for generating images, which we don’t cover in this book), those same ML techniques are now applied to the problem of generating new content, such as a new paragraph of text or drawing, that is at the same time unique and informed by examples in the training data. LLMs in particular are generative models dedicated to generating text.

LLMs have two other differences from previous ML algorithms:

- They are trained on much larger amounts of data; training one of these models from scratch would be very costly. •

- They are more versatile. •

The same text generation model can be used for summarization, translation, classification, and so forth, whereas previous ML models were usually trained and used for a specific task.

These two differences conspire to make the job of the software engineer shift once more, with increasing amounts of time dedicated to working out how to get an LLM to work for their use case. And that’s what LangChain is all about.

By the end of 2023, competing LLMs emerged, including Anthropic’s Claude and Google’s Bard (later renamed Gemini), providing even wider access to these new capabilities. And subsequently, thousands of successful startups and major enterprises have incorporated generative AI APIs to build applications for various use cases, ranging from customer support chatbots to writing and debugging code.

On October 22, 2022, Harrison Chase published the first commit on GitHub for the LangChain open source library. LangChain started from the realization that the most interesting LLM applications needed to use LLMs together with “other sources of computation or knowledge”. For instance, you can try to get an LLM to generate the answer to this question:

How many balls are left after splitting 1,234 balls evenly among 123 people?

You’ll likely be disappointed by its math prowess. However, if you pair it up with a calculator function, you can instead instruct the LLM to reword the question into an input that a calculator could handle:

1,234 % 123

Then you can pass that to a calculator function and get an accurate answer to your original question. LangChain was the first (and, at the time of writing, the largest) library to provide such building blocks and the tooling to reliably combine them into larger applications. Before discussing what it takes to build compelling applications with these new tools, let’s get more familiar with LLMs and LangChain.

Brief Primer on LLMs

In layman’s terms, LLMs are trained algorithms that receive text input and predict and generate humanlike text output. Essentially, they behave like the familiar autocomplete feature found on many smartphones, but taken to an extreme.

Let’s break down the term large language model:

Large refers to the size of these models in terms of training data and parameters used during the learning process. For example, OpenAI’s GPT-3 model contains 175 billion •

parameters, which were learned from training on 45 terabytes of text data.1 Parameters in a neural network model are made up of the numbers that control the output of each neuron and the relative weight of its connections with its neighboring neurons. (Exactly which neurons are connected to which other neurons varies for each neural network architecture and is beyond the scope of this book.)

Language model refers to a computer algorithm trained to receive written text (in English or other languages) and produce output also as written text (in the same language or a different one). These are neural networks, a type of ML model which resembles a stylized conception of the human brain, with the final output resulting from the combination of the individual outputs of many simple mathematical functions, called neurons, and their interconnections. If many of these neurons are organized in specific ways, with the right training process and the right training data, this produces •

a model that is capable of interpreting the meaning of individual words and sentences, which makes it possible to use them for generating plausible, readable, written text.

Because of the prevalence of English in the training data, most models are better at English than they are at other languages with a smaller number of speakers. By “better” we mean it is easier to get them to produce desired outputs in English. There are LLMs designed for multilingual output, such as BLOOM, that use a larger proportion of training data in other languages. Curiously, the difference in performance between languages isn’t as large as might be expected, even in LLMs trained on a predominantly English training corpus. Researchers have found that LLMs are able to transfer some of their semantic understanding to other languages.2

Put together, large language models are instances of big, general-purpose language models that are trained on vast amounts of text. In other words, these models have learned from patterns in large datasets of text—books, articles, forums, and other publicly available sources—to perform general text-related tasks. These tasks include text generation. summarization, translation, classification, and more.

Let’s say we instruct an LLM to complete the following sentence:

The capital of England is ______.

The LLM will take that input text and predict the correct output answer as London. This looks like magic. but it’s not. Under the hood, the LLM estimates the probability of a sequence of word(s) given a previous sequence of words.

Tip

Technically speaking, the model makes predictions based on tokens, not words. A token represents an atomic unit of text. Tokens can represent individual characters, words, subwords, or even larger linguistic units, depending on the specific tokenization approach used. For example, using GPT-3.5’s tokenizer (called cl100k), the phrase good morning dearest friend would consist of five tokens (using to show the space character):

\(G\) ood

With token ID 19045

morning

With token ID 6693

\(de\)

With token ID 409

arest

With token ID 15795

friend

With token ID 4333

Usually tokenizers are trained with the objective of having the most common words encoded into a single token, for example, the word morning is encoded as the token 6693. Less common words, or words in other languages (usually tokenizers are trained on English text). require several tokens to encode them. For example, the word dearest is encoded as tokens 409, 15795. One token spans on average four characters of text for common English text, or roughly three quarters of a word.

The driving engine behind LLMs’ predictive power is known as the transformer neural network architecture.3 The transformer architecture enables models to handle sequences of data, such as sentences or lines of code, and make predictions about the likeliest next

word(s) in the sequence. Transformers are designed to understand the context of each word in a sentence by considering it in relation to every other word. This allows the model to build a comprehensive understanding of the meaning of a sentence, paragraph, and so on (in other words, a sequence of words) as the joint meaning of its parts in relation to each other.

So, when the model sees the sequence of words the capital of England is, it makes a prediction based on similar examples it saw during its training. In the model’s training corpus the word England (or the token(s) that represent it) would have often shown up in sentences in similar places to words like France, United States, China. The word capital would figure in the training data in many sentences also containing words like England, France, and US, and words like London, Paris, Washington. This repetition during the model’s training resulted in the capacity to correctly predict that the next word in the sequence should be London.

The instructions and input text you provide to the model is called a prompt. Prompting can have a significant impact on the quality of output from the LLM. There are several best practices for prompt design or prompt engineering, including providing clear and concise instructions with contextual examples, which we discuss later in this book. Before we go further into prompting, let’s look at some different types of LLMs available for you to use.

The base type, from which all the others derive, is commonly known as a pretrained LLM: it has been trained on very large amounts of text (found on the internet and in books, newspapers, code, video transcripts, and so forth) in a self-supervised fashion. This means that—unlike in supervised ML, where prior to training the researcher needs to assemble a dataset of pairs of input to expected output—for LLMs those pairs are inferred from the training data. In fact, the only feasible way to use datasets that are so large is to assemble those pairs from the training data automatically. Two techniques to do this involve having the model do the following:

Predict the next word

Remove the last word from each sentence in the training data, and that yields a pair of input and expected output, such as The capital of England is ___ and London.

Predict a missing word

Similarly, if you take each sentence and omit a word from the middle, you now have other pairs of input and expected output, such as The ___ of England is London and capital.

These models are quite difficult to use as is, they require you to prime the response with a suitable prefix. For instance, if you want to know the capital of England, you might get a response by prompting the model with The capital of England is, but not with the more natural What is the capital of England?

Instruction-Tuned LLMs

Researchers have made pretrained LLMs easier to use by further training (additional training applied on top of the long and costly training described in the previous section), also known as fine-tuning them on the following:

Task-specific datasets

These are datasets of pairs of questions/answers manually assembled by researchers, providing examples of desirable responses to common questions that end users might prompt the model with. For example, the dataset might contain the following pair: Q: What is the capital of England? A: The capital of England is London. Unlike the pretraining datasets, these are manually assembled, so they are by necessity much smaller:

Reinforcement learning from human feedback (RLHF)

Through the use of RLHF methods, those manually assembled datasets are augmented with user feedback received on output produced by the model. For example, user A

preferred The capital of England is London to London is the capital of England as an answer to the earlier question.

Instruction-tuning has been key to broadening the number of people who can build applications with LLMs, as they can now be prompted with instructions, often in the form of questions such as, What is the capital of England?, as opposed to The capital of England is.

Dialogue-Tuned LLMs

Models tailored for dialogue or chat purposes are a further enhancement of instruction-tuned LLMs. Different providers of LLMs use different techniques, so this is not necessarily true of all chat models, but usually this is done via the following:

Dialogue datasets

The manually assembled fine-tuning datasets are extended to include more examples of multiturn dialogue interactions, that is, sequences of prompt-reply pairs.

Chat format

The input and output formats of the model are given a layer of structure over freeform text, which divides text into parts associated with a role (and optionally other metadata like a name). Usually, the roles available are system (for instructions and framing of the task), user (the actual task or question), and assistant (for the outputs of the model). This method evolved from early prompt engineering techniques and makes it easier to tailor the model’s output while making it harder for models to confuse user input with instructions. Confusing user input with prior instructions is also known as jailbreaking, which can, for instance, lead to carefully crafted prompts, possibly including trade secrets, being exposed to end users.

Fine-Tuned LLMs

Fine-tuned LLMs are created by taking base LLMs and further training them on a proprietary dataset for a specific task. Technically, instruction-tuned and dialogue-tuned LLMs are finetuned LLMs, but the term “fine-tuned LLM” is usually used to describe LLMs that are tuned by the developer for their specific task. For example, a model can be fine-tuned to accurately extract the sentiment, risk factors, and key financial figures from a public company’s annual report. Usually, fine-tuned models have improved performance on the chosen task at the expense of a loss of generality. That is, they become less capable of answering queries on unrelated tasks.

Throughout the rest of this book, when we use the term LLM, we mean instruction-tuned LLMs, and for chat model we mean dialogue-instructed LLMs, as defined earlier in this section. These should be your workhorses when using LLMs—the first tools you reach for when starting a new LLM application.

Now let’s quickly discuss some common LLM prompting techniques before diving into LangChain.

Brief Primer on Prompting

As we touched on earlier, the main task of the software engineer working with LLMs is not to train an LLM, or even to fine-tune one (usually), but rather to take an existing LLM and work out how to get it to accomplish the task you need for your application. There are commercial providers of LLMs, like OpenAI, Anthropic, and Google, as well as open source LLMs (Llama, Gemma, and others), released free-of-charge for others to build upon. Adapting an existing LLM for your task is called prompt engineering.

Many prompting techniques have been developed in the past two years, and in a broad sense, this is a book about how to do prompt engineering with LangChain—how to use LangChain to get LLMs to do what you have in mind. But before we get into LangChain

proper, it helps to go over some of these techniques first (and we apologize in advance if your favorite prompting technique isn’t listed here; there are too many to cover).

To follow along with this section we recommend copying these prompts to the OpenAI Playground to try them yourself:

- Create an account for the OpenAI API at http://platform.openai.com, which will let you use OpenAI LLMs programmatically, that is, using the API from your Python or JavaScript code. It will also give you access to the OpenAI Playground, where you can experiment with prompts from your web browser. 1.

- If necessary, add payment details for your new OpenAI account. OpenAI is a commercial provider of LLMs and charges a fee for each time you use their models through OpenAI’s API or through Playground. You can find the latest pricing on their website. Over the past two years, the price for using OpenAI’s models has come down significantly as new capabilities and optimizations are introduced. 2.

- Head on over to the OpenAI Playground and you’re ready to try out the following prompts for yourself. We’ll make use of the OpenAI API throughout this book. 3.

- Once you’ve navigated to the Playground, you will see a panel of presets on the right side of the screen, including your model of choice. If you look further down the panel, you will see Temperature under the “Model configuration” title. Move the Temperature toggle from middle to left until the number shows 0.00. Essentially, temperature controls the randomness of LLM output. The lower the temperature, the more deterministic the model output. 4.

Now on to the prompts!

Zero-Shot Prompting

The first and most straightforward prompting technique consists of simply instructing the LLM to perform the desired task:

How old was the 30th president of the United States when his wife’s mother died?

This is typically what you should try first, and it will usually work for simple questions, especially when the answer to it was likely present in some of the training data. If we prompt OpenAI’s gpt-3.5-turbo with the preceding prompt, the following is returned:

The 30th president of the United States, Calvin Coolidge, was 48 years old when his wife’s mother passed away in 1926.

Note

You may get a different result from what we get. There is an element of randomness to how LLMs generate responses, and OpenAI may have updated the model by the time you try it.

While the model did correctly identify the 30th president, the answer isn’t quite right. Often you’ll have to iterate on prompts and responses to get a reliable system. The next sections expand on how.

Chain-of-Thought

A very useful iteration is to further instruct the model to take the time to think. This technique has been found to increase performance on a variety of tasks. Curiously, a more

recent paper4 suggests that this technique can also reduce LLM performance on tasks where thinking reduces human performance. Called chain-of-thought (CoT) prompting, this is usually done by prepending the prompt with instructions for the LLM to describe how it could arrive at the answer:

Think step by step.

How old was the 30th president of the United States when his wife’s mother died?

And now let’s see what the same model produces for this new prompt:

First, find out who the 30th president of the United States was. This can be done by researching a list of US presidents or by searching for “30th president of the United States” online. You will find that the 30th president was Calvin Coolidge.

Next, find out the year when Calvin Coolidge was the president. This can also be found by researching online or in a history book. You will find that he was the president from 1923-1929.

Now, you need to determine the year when Calvin Coolidge’s wife’s mother died. This can be done by researching the life and death of his wife, Grace Goodhue Coolidge. You will find that she lived from 1879-1957.

Calculate the age of Calvin Coolidge’s wife’s mother when she died. She was 78 years old.

Determine the age of Calvin Coolidge when his wife’s mother died. Subtract the year of his birth, 1872, from the year his mother-in-law died, 1957. This means that Calvin Coolidge was 85 years old when his wife’s mother passed away.

Notice how the only change in the prompt was the addition of Think step by step, and how much of a difference that made in the model’s output. Now the model outputs a sequence of steps you could go through when trying to answer this question. Unfortunately, while the steps are very reasonable, the final output is still incorrect, given it got some facts wrong, namely it listed the wrong birth and death years for President Coolidge’s wife’s mother. Let’s see how to improve on this.

Retrieval-Augmented Generation

Retrieval-augmented generation (RAG) consists of finding relevant pieces of text, also known as context, such as facts you’d find in an encyclopedia and including that context in the prompt. The RAG technique can (and in real applications should) be combined with CoT, but for simplicity we’ll use these techniques one at a time here. Here’s the prompt including RAG:

Context:

Calvin Coolidge (born John Calvin Coolidge Jr.; /ˈkuːlɪdʒ/; July 4, 1872 – January 5, 1933) was an American attorney and politician who served as the 30th president of the United States from 1923 to 1929.

Grace Anna Coolidge (née Goodhue; January 3, 1879 – July 8, 1957) was the wife of the 30th president of the United States, Calvin Coolidge.

Grace Anna Goodhue was born on January 3, 1879, in Burlington, Vermont, the only child of Andrew Issachar Goodhue and Lemira Barrett Goodhue.

Lemira A. Goodhue (Barrett) ; Birthdate: April 26, 1849 ; Birthplace: Burlington, Chittenden County, VT, United States ; Death: October 24, 1929.

How old was the 30th president of the United States when his wife’s mother died?

And the output from the model:

The 30th president of the United States, Calvin Coolidge, was 54 years old when his wife’s mother, Lemira A. Goodhue, died on October 24, 1929.

Now we’re a lot closer to the correct answer, but as we touched on earlier, LLMs aren’t great at out-of-the-box math. In this case, the final result of 54 years old is off by 3. Let’s see how we can improve on this.

Tool Calling

The tool calling technique consists of prepending the prompt with a list of external functions the LLM can make use of, along with descriptions of what each is good for and instructions on how to signal in the output that it wants to use one (or more) of these functions. Finally, you—the developer of the application—should parse the output and call the appropriate functions. Here’s one way to do this:

Tools:

calculator: This tool accepts math expressions and returns their result.

search: This tool accepts search engine queries and returns the first search result.

If you want to use tools to arrive at the answer, output the list of tools and inputs in CSV format, with this header row tool,input.

How old was the 30th president of the United States when his wife’s mother died?

And this is the output you might get:

tool,input

calculator,2023-1892

search,“What age was Calvin Coolidge when his mother-in-law died?”

While the LLM correctly followed the output format instructions, the tools and inputs selected aren’t the most appropriate for this question. This gets at one of the most important things to keep in mind when prompting LLMs: each prompting technique is most useful when used in combination with (some of) the others. For instance, here we could improve on this by combining tool calling, chain-of-thought, and RAG into a prompt that uses all three. Let’s see what that looks like:

Context:

Calvin Coolidge (born John Calvin Coolidge Jr.; /ˈkuːlɪdʒ/; July 4, 1872 – January 5, 1933) was an American attorney and politician who served as the 30th president of the United States from 1923 to 1929.

Grace Anna Coolidge (née Goodhue; January 3, 1879 – July 8, 1957) was the wife of the 30th president of the United States, Calvin Coolidge.

Grace Anna Goodhue was born on January 3, 1879, in Burlington, Vermont, the only child of Andrew Issachar Goodhue and Lemira Barrett Goodhue.

Lemira A. Goodhue (Barrett) ; Birthdate: April 26, 1849 ; Birthplace: Burlington, Chittenden County, VT, United States ; Death: October 24, 1929.

Tools:

- calculator: This tool accepts math expressions and returns their result.

If you want to use tools to arrive at the answer, output the list of tools and inputs in CSV format, with this header row tool,input.

Think step by step.

How old was the 30th president of the United States when his wife’s mother died?

And with this prompt, maybe after a few tries, we might get this output:

tool,input

calculator,1929 - 1872

If we parse that CSV output, and have a calculator function execute the operation 1929 - 1827, we finally get the right answer: 57 years.

As per the previous example, by combining RAG with chain-of-thought and tool calling, you can retrieve the most relevant data to ground your model’s output, then guide it step by step to ensure it uses that context effectively.

Few-Shot Prompting

Finally, we come to another very useful prompting technique: few-shot prompting. This consists of providing the LLM with examples of other questions and the correct answers, which enables the LLM to learn how to perform a new task without going through additional training or fine-tuning. When compared to fine-tuning, few-shot prompting is more flexible you can do it on the fly at query time—but less powerful, and you might achieve better performance with fine-tuning. That said, you should usually always try few-shot prompting before fine-tuning:

Static few-shot prompting

The most basic version of few-shot prompting is to assemble a predetermined list of a small number of examples that you include in the prompt.

Dynamic few-shot prompting

If you assemble a dataset of many examples, you can instead pick the most relevant examples for each new query.

The next section covers using LangChain to build applications using LLMs and these prompting techniques.

LangChain and Why It’s Important

LangChain was one of the earliest open source libraries to provide LLM and prompting building blocks and the tooling to reliably combine them into larger applications. As of writing, LangChain has amassed over 28 million monthly downloads, 99,000 GitHub stars, and the largest developer community in generative AI (72,000+ strong). It has enabled software engineers who don’t have an ML background to utilize the power of LLMs to build a variety of apps, ranging from AI chatbots to AI agents that can reason and take action responsibly.

LangChain builds on the idea stressed in the preceding section: that prompting techniques are most useful when used together. To make that easier, LangChain provides simple abstractions for each major prompting technique. By abstraction we mean Python and JavaScript functions and classes that encapsulate the ideas of those techniques into easy-touse wrappers. These abstractions are designed to play well together and to be combined into a larger LLM application.

First of all, LangChain provides integrations with the major LLM providers, both commercial (OpenAI, Anthropic, Google, and more) and open source (Llama, Gemma, and others). These integrations share a common interface, making it very easy to try out new LLMs as they’re announced and letting you avoid being locked-in to a single provider. We’ll use these in Chapter 1.

LangChain also provides prompt template abstractions, which enable you to reuse prompts more than once, separating static text in the prompt from placeholders that will be different for each time you send it to the LLM to get a completion generated. We’ll talk more about these also in Chapter 1. LangChain prompts can also be stored in the LangChain Hub for sharing with teammates.

LangChain contains many integrations with third-party services (such as Google Sheets, Wolfram Alpha, Zapier, just to name a few) exposed as tools, which is a standard interface for functions to be used in the tool-calling technique.

For RAG, LangChain provides integrations with the major embedding models (language models designed to output a numeric representation, the embedding, of the meaning of a sentence, paragraph, and so on), vector stores (databases dedicated to storing embeddings), and vector indexes (regular databases with vector-storing capabilities). You’ll learn a lot more about these in Chapters 2 and 3.

For CoT, LangChain (through the LangGraph library) provides agent abstractions that combine chain-of-thought reasoning and tool calling, first popularized by the ReAct paper. This enables building LLM applications that do the following:

- Reason about the steps to take. 1.

- Translate those steps into external tool calls. 2.

- Receive the output of those tool calls. 3.

- Repeat until the task is accomplished. 4.

We cover these in Chapters 5 through 8.

For chatbot use cases, it becomes useful to keep track of previous interactions and use them when generating the response to a future interaction. This is called memory, and Chapter 4 discusses using it in LangChain.

Finally, LangChain provides the tools to compose these building blocks into cohesive applications. Chapters 1 through 6 talk more about this.

In addition to this library, LangChain provides LangSmith—a platform to help debug, test, deploy, and monitor AI workflows—and LangGraph Platform—a platform for deploying and scaling LangGraph agents. We cover these in Chapters 9 and 10.

What to Expect from This Book

With this book, we hope to convey the excitement and possibility of adding LLMs to your software engineering toolbelt.

We got into programming because we like building things, getting to the end of a project, looking at the final product and realizing there’s something new out there, and we built it. Programming with LLMs is so exciting to us because it expands the set of things we can build, it makes previously hard things easy (for example, extracting relevant numbers from a long text) and previously impossible things possible—try building an automated assistant a year ago and you end up with the phone tree hell we all know and love from calling up customer support numbers.

Now with LLMs and LangChain, you can actually build pleasant assistants (or myriad other applications) that chat with you and understand your intent to a very reasonable degree. The difference is night and day! If that sounds exciting to you (as it does to us) then you’ve come to the right place.

In this Preface, we’ve given you a refresher on what makes LLMs tick and why exactly that gives you “thing-building” superpowers. Having these very large ML models that understand language and can output answers written in conversational English (or some other language) gives you a programmable (through prompt engineering), versatile language-generation tool. By the end of the book, we hope you’ll see just how powerful that can be.

We’ll begin with an AI chatbot customized by, for the most part, plain English instructions. That alone should be an eye-opener: you can now “program” part of the behavior of your application without code.

Then comes the next capability: giving your chatbot access to your own documents, which takes it from a generic assistant to one that’s knowledgeable about any area of human knowledge for which you can find a library of written text. This will allow you to have the chatbot answer questions or summarize documents you wrote, for instance.

After that, we’ll make the chatbot remember your previous conversations. This will improve it in two ways: It will feel a lot more natural to have a conversation with a chatbot that remembers what you have previously chatted about, and over time the chatbot can be personalized to the preferences of each of its users individually.

Next, we’ll use chain-of-thought and tool-calling techniques to give the chatbot the ability to plan and act on those plans, iteratively. This will enable it to work toward more complicated requests, such as writing a research report about a subject of your choice.

As you use your chatbot for more complicated tasks, you’ll feel the need to give it the tools to collaborate with you. This encompasses both giving you the ability to interrupt or authorize actions before they are taken, as well as providing the chatbot with the ability to ask for more information or clarification before acting.

Finally, we’ll show you how to deploy your chatbot to production and discuss what you need to consider before and after taking that step, including latency, reliability, and security. Then we’ll show you how to monitor your chatbot in production and continue to improve it as it is used.

Along the way, we’ll teach you the ins and outs of each of these techniques, so that when you finish the book, you will have truly added a new tool (or two) to your software engineering toolbelt.

Conventions Used in This Book

The following typographical conventions are used in this book:

Italic

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant width

Used for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

Tip

This element signifies a tip or suggestion.

Note

This element signifies a general note.

Using Code Examples

Supplemental material (code examples, exercises, etc.) is available for download at https:// oreil.ly/supp-LearningLangChain.

If you have a technical question or a problem using the code examples, please send email to support@oreilly.com.

This book is here to help you get your job done. In general, if example code is offered with this book, you may use it in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but generally do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Learning LangChain by Mayo Oshin and Nuno Campos (O’Reilly). Copyright 2025 Olumayowa”Mayo” Olufemi Oshin, 978-1-098-16728-8.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

O’Reilly Online Learning

Note

For more than 40 years, O’Reilly Media has provided technology and business training, knowledge, and insight to help companies succeed.

Our unique network of experts and innovators share their knowledge and expertise through books, articles, and our online learning platform. O’Reilly’s online learning platform gives you on-demand access to live training courses, in-depth learning paths, interactive coding environments, and a vast collection of text and video from O’Reilly and 200+ other publishers. For more information, visit https://oreilly.com.

How to Contact Us

Please address comments and questions concerning this book to the publisher:

- O’Reilly Media, Inc. •

- 1005 Gravenstein Highway North •

- Sebastopol, CA 95472 •

- 800-889-8969 (in the United States or Canada) •

- 707-827-7019 (international or local) •

- 707-829-0104 (fax) •

- support@oreilly.com •

- https://oreilly.com/about/contact.html •

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at https://oreil.ly/learning-langchain.

For news and information about our books and courses, visit https://oreilly.com.

Find us on LinkedIn: https://linkedin.com/company/oreilly-media.

Watch us on YouTube: https://youtube.com/oreillymedia.

Acknowledgments

We would like to express our gratitude and appreciation to the reviewers—Rajat Kant Goel, Douglas Bailley, Tom Taulli, Gourav Bais, and Jacob Lee—for providing valuable technical feedback on improving this book.

1 Tom B. Brown et al., “Language Models Are Few-Shot Learners”, arXiv, July 22, 2020. 2 Xiang Zhang et al., “Don’t Trust ChatGPT When Your Question Is Not in English: A Study of Multilingual Abilities and Types of LLMs”, Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, December 6–10, 2023.

3 For more information, see Ashish Vaswani et al., “Attention Is All You Need”, arXiv, June 12, 2017.

4 Ryan Liu et al. “Mind Your Step (by Step): Chain-of-Thought Can Reduce Performance on Tasks Where Thinking Makes Humans Worse”, arXiv, November 8, 2024.

Chapter 1. LLM Fundamentals with LangChain

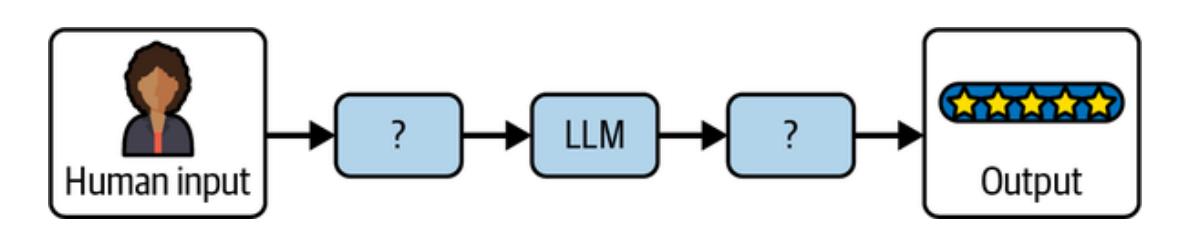

The Preface gave you a taste of the power of LLM prompting, where we saw firsthand the impact that different prompting techniques can have on what you get out of LLMs, especially when judiciously combined. The challenge in building good LLM applications is, in fact, in how to effectively construct the prompt sent to the model and process the model’s prediction to return an accurate output (see Figure 1-1).

Figure 1-1. The challenge in making LLMs a useful part of your application

If you can solve this problem, you are well on your way to building LLM applications, simple and complex alike. In this chapter, you’ll learn more about how LangChain’s building blocks map to LLM concepts and how, when combined effectively, they enable you to build LLM applications. But first, the sidebar “Why LangChain?” is a brief primer on why we think it useful to use LangChain to build LLM applications.

Why LangChain?

You can of course build LLM applications without LangChain. The most obvious alternative is to use the software development kit (SDK)—the package exposing the methods of their HTTP API as functions in the programming language of your choice—of the LLM provider you tried first (for example, OpenAI). We think learning LangChain will pay off in the short term and over the long run because of the following factors:

Prebuilt common patterns

LangChain comes with reference implementations of the most common LLM application patterns (we mentioned some of these in the Preface: chain-of-thought, tool calling, and others). This is the quickest way to get started with LLMs and might often be all you need. We’d suggest starting any new application from these and checking whether the results out of the box are good enough for your use case. If not, then see the next item for the other half of the LangChain libraries.

Interchangeable building blocks

These are components that can be easily swapped out for alternatives. Every component (an LLM, chat model, output parser, and so on—more on these shortly) follows a shared specification, which makes your application future-proof. As new capabilities are released by model providers and as your needs change, you can evolve your application without rewriting it each time.

Throughout this book we make use of the following major components in the code examples:

- LLM/chat model: OpenAI •

- Embeddings: OpenAI •

- Vector store: PGVector •

You can swap out each of these for any of the alternatives listed on the following pages:

Chat models

See the LangChain documentation. If you don’t want to use OpenAI (a commercial API) we suggest Anthropic as a commercial alternative or Ollama as an open source one.

Embeddings

See the LangChain documentation. If you don’t want to use OpenAI (a commercial API) we suggest Cohere as a commercial alternative or Ollama as an open source one.

Vector stores

See the LangChain documentation. If you don’t want to use PGVector (an open source extension to the popular SQL database Postgres) we suggest using either Weaviate (a dedicated vector store) or OpenSearch (vector search features that are part of a popular search database).

This effort goes beyond, for instance, all LLMs having the same methods, with similar arguments and return values. Let’s look at the example of chat models and two popular LLM providers, OpenAI and Anthropic. Both have a chat API which receives chat messages (loosely defined as objects with a type string and a content string) and returns a new message generated by the model. But if you try to use both models in the same conversation, you’ll immediately run into issues, as their chat message formats are subtly incompatible. LangChain abstracts away these differences to enable building applications that are truly independent of a particular provider. For instance, with LangChain, a chatbot conversation where you use both OpenAI and Anthropic models works.

Finally, as you build out your LLM applications with several of these components, we’ve found it useful to have the orchestration capabilities of LangChain:

- All major components are instrumented by the callbacks system for observability (more on this in Chapter 8). •

- All major components implement the same interface (more on this toward the end of this chapter). •

- Long-running LLM applications can be interrupted, resumed, or retried (more on this in Chapter 6). •

Getting Set Up with LangChain

To follow along with the rest of the chapter, and the chapters to come, we recommend setting up LangChain on your computer first.

See the instructions in the Preface regarding setting up an OpenAI account and complete these if you haven’t yet. If you prefer using a different LLM provider, see “Why LangChain?” for alternatives.

Then head over to the API Keys page on the OpenAI website (after logging in to your OpenAI account), create an API key, and save it—you’ll need it soon.

In this book, we’ll show code examples in both Python and JavaScript (JS). LangChain offers the same functionality in both languages, so just pick the one you’re most comfortable with and follow the respective code snippets throughout the book (the code examples for each language are equivalent).

First, some setup instructions for readers using Python:

- Ensure that you have Python installed. See the instructions for your operating system. 1.

- Install Jupyter if you want to run the examples in a notebook environment. You can do this by running pip install notebook in your terminal. 2.

- Install the LangChain library by running the following commands in your terminal: 3.

pip install langchain langchain-openai langchain-community pip install langchain-text-splitters langchain-postgres

Take the OpenAI API key you generated at the beginning of this section and make it available in your terminal environment. You can do this by running the following: 4.

export OPENAI_API_KEY=your-key

- Don’t forget to replace your-key with the API key you generated previously. 5.

- Open a Jupyter notebook by running this command: 6.

jupyter notebook

You’re now ready to follow along with the Python code examples.

Here are the instructions for readers using JavaScript:

Take the OpenAI API key you generated at the beginning of this section and make it available in your terminal environment. You can do this by running the following: 1.

export OPENAI_API_KEY=your-key

- Don’t forget to replace your-key with the API key you generated previously. 2.

- If you want to run the examples as Node.js scripts, install Node by following the instructions. 3.

- Install the LangChain libraries by running the following commands in your terminal: 4.

npm install langchain @langchain/openai @langchain/community npm install @langchain/core pg

Take each example, save it as a .js file and run it with node ./file.js. 5.

Using LLMs in LangChain

To recap, LLMs are the driving engine behind most generative AI applications. LangChain provides two simple interfaces to interact with any LLM API provider:

- Chat models •

- LLMs •

The LLM interface simply takes a string prompt as input, sends the input to the model provider, and then returns the model prediction as output.

Let’s import LangChain’s OpenAI LLM wrapper to invoke a model prediction using a simple prompt:

Note

Python

from langchain_openai.llms import OpenAI model = OpenAI(model=“gpt-3.5-turbo”) model.invoke(“The sky is”) JavaScript import { OpenAI } from “@langchain/openai”; const model = new OpenAI({ model: “gpt-3.5-turbo” }); await model.invoke(“The sky is”); The output: Blue!

Tip

Notice the parameter model passed to OpenAI. This is the most common parameter to configure when using an LLM or chat model, the underlying model to use, as most providers offer several models with different trade-offs in capability and cost (usually larger models are more capable, but also more expensive and slower). See OpenAI’s overview of the models they offer.

Other useful parameters to configure include the following, offered by most providers.

temperature

This controls the sampling algorithm used to generate output. Lower values produce more predictable outputs (for example, 0.1), while higher values generate more creative, or unexpected, results (such as 0.9). Different tasks will need different values for this parameter. For instance, producing structured output usually benefits from a lower temperature, whereas creative writing tasks do better with a higher value:

max_tokens

This limits the size (and cost) of the output. A lower value may cause the LLM to stop generating the output before getting to a natural end, so it may appear to have been truncated.

Beyond these, each provider exposes a different set of parameters. We recommend looking at the documentation for the one you choose. For an example, refer to OpenAI’s platform.

Alternatively, the chat model interface enables back and forth conversations between the user and model. The reason why it’s a separate interface is because popular LLM providers like OpenAI differentiate messages sent to and from the model into user, assistant, and system roles (here role denotes the type of content the message contains):

System role

Used for instructions the model should use to answer a user question

User role

Used for the user’s query and any other content produced by the user

Assistant role

Used for content generated by the model

The chat model’s interface makes it easier to configure and manage conversions in your AI chatbot application. Here’s an example utilizing LangChain’s ChatOpenAI model:

Python

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.messages import HumanMessage

model = ChatOpenAI()

prompt = [HumanMessage("What is the capital of France?")]

model.invoke(prompt)

JavaScript

import { ChatOpenAI } from '@langchain/openai'

import { HumanMessage } from '@langchain/core/messages'

const model = new ChatOpenAI()

const prompt = [new HumanMessage('What is the capital of France?')]

await model.invoke(prompt)The output:

AIMessage(content=‘The capital of France is Paris.’)

Instead of a single prompt string, chat models make use of different types of chat message interfaces associated with each role mentioned previously. These include the following:

HumanMessage

A message sent from the perspective of the human, with the user role

AIMessage

A message sent from the perspective of the AI that the human is interacting with, with the assistant role

SystemMessage

A message setting the instructions the AI should follow, with the system role

ChatMessage

A message allowing for arbitrary setting of role

Let’s incorporate a SystemMessage instruction in our example:

from langchain_core.messages import HumanMessage, SystemMessage

from langchain_openai.chat_models import ChatOpenAI

model = ChatOpenAI()

system_msg = SystemMessage(

'''You are a helpful assistant that responds to questions with three

exclamation marks.'''

)

human_msg = HumanMessage('What is the capital of France?')

model.invoke([system_msg, human_msg])

JavaScriptimport { ChatOpenAI } from "@langchain/openai";

import { HumanMessage, SystemMessage } from "@langchain/core/messages";

const model = new ChatOpenAI();

const prompt = [

new SystemMessage(

`You are a helpful assistant that responds to questions with three

exclamation marks.`,

),

new HumanMessage("What is the capital of France?"),

];

await model.invoke(prompt);

The output:AIMessage(‘Paris!!!’)

As you can see, the model obeyed the instruction provided in the SystemMessage even though it wasn’t present in the user’s question. This enables you to preconfigure your AI application to respond in a relatively predictable manner based on the user’s input.

Making LLM Prompts Reusable

The previous section showed how the prompt instruction significantly influences the model’s output. Prompts help the model understand context and generate relevant answers to queries.

Here is an example of a detailed prompt:

Answer the question based on the context below. If the question cannot be answered using the information provided, answer with “I don’t know”.

Context: The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face’s transformers library, or by utilizing OpenAI and Cohere’s offerings through the openai and cohere libraries, respectively.

Question: Which model providers offer LLMs?

Answer:

Although the prompt looks like a simple string, the challenge is figuring out what the text should contain and how it should vary based on the user’s input. In this example, the Context and Question values are hardcoded, but what if we wanted to pass these in dynamically?

Fortunately, LangChain provides prompt template interfaces that make it easy to construct prompts with dynamic inputs:

Python

from langchain_core.prompts import PromptTemplate

template = PromptTemplate.from_template(“““Answer the question based on the context below. If the question cannot be answered using the information provided, answer with”I don’t know”.

Context: {context}

Question: {question}

Answer: """)

template.invoke({

"context": """The most recent advancements in NLP are being driven by Large

Language Models (LLMs). These models outperform their smaller

counterparts and have become invaluable for developers who are creating

applications with NLP capabilities. Developers can tap into these

models through Hugging Face's `transformers` library, or by utilizing

OpenAI and Cohere's offerings through the `openai` and `cohere`

libraries, respectively.""",

"question": "Which model providers offer LLMs?"

})

JavaScript

import { PromptTemplate } from '@langchain/core/prompts'

const template = PromptTemplate.fromTemplate(`Answer the question based on the

context below. If the question cannot be answered using the information

provided, answer with "I don't know".

Context: {context}

Question: {question}

Answer: `)

await template.invoke({

context: `The most recent advancements in NLP are being driven by Large

Language Models (LLMs). These models outperform their smaller

counterparts and have become invaluable for developers who are creating

applications with NLP capabilities. Developers can tap into these models

through Hugging Face's \`transformers\` library, or by utilizing OpenAI

and Cohere's offerings through the \`openai\` and \`cohere\` libraries,

respectively.`,

question: "Which model providers offer LLMs?"

})

The output:StringPromptValue(text='Answer the question based on the context below. If the

question cannot be answered using the information provided, answer with "I

don\'t know".\n\nContext: The most recent advancements in NLP are being

driven by Large Language Models (LLMs). These models outperform their

smaller counterparts and have become invaluable for developers who are

creating applications with NLP capabilities. Developers can tap into these

models through Hugging Face\'s `transformers` library, or by utilizing

OpenAI and Cohere\'s offerings through the `openai` and `cohere` libraries,

respectively.\n\nQuestion: Which model providers offer LLMs?\n\nAnswer: ')This example takes the static prompt from the previous block and makes it dynamic. The template contains the structure of the final prompt alongside the definition of where the dynamic inputs will be inserted.

As such, the template can be used as a recipe to build multiple static, specific prompts. When you format the prompt with some specific values—in this case, context and question —you get a static prompt ready to be passed in to an LLM.

As you can see, the question argument is passed dynamically via the invoke function. By default, LangChain prompts follow Python’s f-string syntax for defining dynamic parameters—any word surrounded by curly braces, such as {question}, are placeholders for values passed in at runtime. In the previous example, {question} was replaced by “Which model providers offer LLMs?”

Let’s see how we’d feed this into an LLM OpenAI model using LangChain:

Python from langchain_openai.llms import OpenAI from langchain_core.prompts import PromptTemplate # both template and model can be reused many times template = PromptTemplate.from_template(“““Answer the question based on the context below. If the question cannot be answered using the information provided, answer with”I don’t know”. Context: {context} Question: {question} Answer: “““) model = OpenAI() # prompt and completion are the results of using template and model once prompt = template.invoke({”context”: “““The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face’s transformers library, or by utilizing OpenAI and Cohere’s offerings through the openai and cohere libraries, respectively.”““,”question”: “Which model providers offer LLMs?” }) completion = model.invoke(prompt) JavaScript import { PromptTemplate } from ‘@langchain/core/prompts’ import { OpenAI } from ‘@langchain/openai’ const model = new OpenAI() const template = PromptTemplate.fromTemplate(Answer the question based on the context below. If the question cannot be answered using the information provided, answer with "I don't know". Context: {context} Question: {question} Answer:) const prompt = await template.invoke({ context: The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's \transformers` library, or by utilizing OpenAI and Cohere’s offerings through the `openai` and `cohere` libraries,

respectively.`,question: “Which model providers offer LLMs?” }) await model.invoke(prompt) The output: Hugging Face’s transformers library, OpenAI using the openai library, and Cohere using the cohere library offer LLMs. If you’re looking to build an AI chat application, the ChatPromptTemplate can be used instead to provide dynamic inputs based on the role of the chat message: Python from langchain_core.prompts import ChatPromptTemplate template = ChatPromptTemplate.from_messages([ (‘system’, ’‘’Answer the question based on the context below. If the question cannot be answered using the information provided, answer with “I don't know”.’’‘), (’human’, ‘Context: {context}’), (‘human’, ‘Question: {question}’), ]) template.invoke({ “context”: “““The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face’s transformers library, or by utilizing OpenAI and Cohere’s offerings through the openai and cohere libraries, respectively.”““,”question”: “Which model providers offer LLMs?” }) JavaScript import { ChatPromptTemplate } from ‘@langchain/core/prompts’ const template = ChatPromptTemplate.fromMessages([ [‘system’, Answer the question based on the context below. If the question cannot be answered using the information provided, answer with "I don\'t know".], [‘human’, ‘Context: {context}’], [‘human’, ‘Question: {question}’], ]) await template.invoke({ context: The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's \transformers` library, or by utilizing OpenAI and Cohere’s offerings through the `openai` and `cohere` libraries, respectively.`, question: “Which model providers offer LLMs?” })

The output:

ChatPromptValue(messages=[SystemMessage(content=‘Answer the question based on the context below. If the question cannot be answered using the information provided, answer with “I don't know”.’), HumanMessage(content=“Context:

The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's transformers library, or by utilizing OpenAI and Cohere's offerings through the openai and cohere libraries, respectively.”), HumanMessage (content=‘Question: Which model providers offer LLMs?’)])

Notice how the prompt contains instructions in a SystemMessage and two instances of HumanMessage that contain dynamic context and question variables. You can still format the template in the same way and get back a static prompt that you can pass to a large language model for a prediction output:

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

# both `template` and `model` can be reused many times

template = ChatPromptTemplate.from_messages([

('system', '''Answer the question based on the context below. If the

question cannot be answered using the information provided, answer

with "I don\'t know".'''),

('human', 'Context: {context}'),

('human', 'Question: {question}'),

])

model = ChatOpenAI()

# `prompt` and `completion` are the results of using template and model once

prompt = template.invoke({

"context": """The most recent advancements in NLP are being driven by

Large Language Models (LLMs). These models outperform their smaller

counterparts and have become invaluable for developers who are creating

applications with NLP capabilities. Developers can tap into these

models through Hugging Face's `transformers` library, or by utilizing

OpenAI and Cohere's offerings through the `openai` and `cohere`

libraries, respectively.""",

"question": "Which model providers offer LLMs?"

})

model.invoke(prompt)

JavaScript

import { ChatPromptTemplate } from '@langchain/core/prompts'

import { ChatOpenAI } from '@langchain/openai'

const model = new ChatOpenAI()

const template = ChatPromptTemplate.fromMessages([

['system', `Answer the question based on the context below. If the question

cannot be answered using the information provided, answer with "I

don\'t know".`],

['human', 'Context: {context}'],

['human', 'Question: {question}'],

])

const prompt = await template.invoke({

context: `The most recent advancements in NLP are being driven by Large

Language Models (LLMs). These models outperform their smaller

counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models

through Hugging Face's \`transformers\` library, or by utilizing OpenAI

and Cohere's offerings through the \`openai\` and \`cohere\` libraries,

respectively.`,

question: "Which model providers offer LLMs?"})

await model.invoke(prompt)

The output:

AIMessage(content="Hugging Face's `transformers` library, OpenAI using the

`openai` library, and Cohere using the `cohere` library offer LLMs.")Getting Specific Formats out of LLMs

Plain text outputs are useful, but there may be use cases where you need the LLM to generate a structured output—that is, output in a machine-readable format, such as JSON, XML, CSV, or even in a programming language such as Python or JavaScript. This is very useful when you intend to hand that output off to some other piece of code, making an LLM play a part in your larger application.

JSON Output

The most common format to generate with LLMs is JSON. JSON outputs can (for example) be sent over the wire to your frontend code or be saved to a database.

When generating JSON, the first task is to define the schema you want the LLM to respect when producing the output. Then, you should include that schema in the prompt, along with the text you want to use as the source. Let’s see an example:

from langchain_openai import ChatOpenAI

from langchain_core.pydantic_v1 import BaseModel

class AnswerWithJustification(BaseModel):

'''An answer to the user's question along with justification for the

answer.'''

answer: str

'''The answer to the user's question'''

justification: str

'''Justification for the answer'''

llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

structured_llm = llm.with_structured_output(AnswerWithJustification)

structured_llm.invoke("""What weighs more, a pound of bricks or a pound

of feathers""")

JavaScript

import { ChatOpenAI } from '@langchain/openai'

import { z } from "zod";

const answerSchema = z

.object({

answer: z.string().describe("The answer to the user's question"),

justification: z.string().describe(`Justification for the

answer`),

})

.describe(`An answer to the user's question along with justification for the answer.`);

const model = new ChatOpenAI({

model: "gpt-3.5-turbo",

temperature: 0,

}).withStructuredOutput(answerSchema)

await model.invoke("What weighs more, a pound of bricks or a pound of feathers")

The output:

{

answer: "They weigh the same",

justification: "Both a pound of bricks and a pound of feathers weigh one pound.

The weight is the same, but the volu"... 42 more characters}So, first define a schema. In Python, this is easiest to do with Pydantic (a library used for validating data against schemas). In JS, this is easiest to do with Zod (an equivalent library). The method with_structured_output will use that schema for two things:

- The schema will be converted to a JSONSchema object (a JSON format used to describe the shape [types, names, descriptions] of JSON data), which will be sent to the LLM. For each LLM, LangChain picks the best method to do this, usually function calling or prompting. •

- The schema will also be used to validate the output returned by the LLM before returning it; this ensures the output produced respects the schema you passed in exactly. •

Other Machine-Readable Formats with Output Parsers

You can also use an LLM or chat model to produce output in other formats, such as CSV or XML. This is where output parsers come in handy. Output parsers are classes that help you structure large language model responses. They serve two functions:

Providing format instructions

Output parsers can be used to inject some additional instructions in the prompt that will help guide the LLM to output text in the format it knows how to parse.

Validating and parsing output

The main function is to take the textual output of the LLM or chat model and render it to a more structured format, such as a list, XML, or other format. This can include removing extraneous information, correcting incomplete output, and validating the parsed values.

Here’s an example of how an output parser works:

Python

from langchain_core.output_parsers import CommaSeparatedListOutputParser

parser = CommaSeparatedListOutputParser()

items = parser.invoke("apple, banana, cherry")JavaScript

import { CommaSeparatedListOutputParser } from '@langchain/core/output_parsers'const parser = new CommaSeparatedListOutputParser()

await parser.invoke(“apple, banana, cherry”)

The output:

[‘apple’, ‘banana’, ‘cherry’]

LangChain provides a variety of output parsers for various use cases, including CSV, XML, and more. We’ll see how to combine output parsers with models and prompts in the next section.

Assembling the Many Pieces of an LLM Application

The key components you’ve learned about so far are essential building blocks of the LangChain framework. Which brings us to the critical question: How do you combine them effectively to build your LLM application?

Using the Runnable Interface

As you may have noticed, all the code examples used so far utilize a similar interface and the invoke() method to generate outputs from the model (or prompt template, or output parser). All components have the following:

- There is a common interface with these methods: •

- invoke: transforms a single input into an output ◦

- batch: efficiently transforms multiple inputs into multiple outputs ◦

- stream: streams output from a single input as it’s produced ◦

- There are built-in utilities for retries, fallbacks, schemas, and runtime configurability. •

- In Python, each of the three methods have asyncio equivalents. •

As such, all components behave the same way, and the interface learned for one of them applies to all:

from langchain_openai.llms import ChatOpenAI

model = ChatOpenAI()

completion = model.invoke('Hi there!')

# Hi!

completions = model.batch(['Hi there!', 'Bye!'])

# ['Hi!', 'See you!']

for token in model.stream('Bye!'):

print(token)

# Good

# bye

# !

JavaScript

import { ChatOpenAI } from '@langchain/openai'

const model = new ChatOpenAI()

const completion = await model.invoke('Hi there!')

// Hi!

const completions = await model.batch(['Hi there!', 'Bye!'])

// ['Hi!', 'See you!']for await (const token of await model.stream('Bye!')) {

console.log(token)

// Good

// bye

// !

}In this example, you see how the three main methods work:

- invoke() takes a single input and returns a single output. •

- batch() takes a list of outputs and returns a list of outputs. •

- stream() takes a single input and returns an iterator of parts of the output as they become available. •

In some cases, where the underlying component doesn’t support iterative output, there will be a single part containing all output.

You can combine these components in two ways:

Imperative

Call your components directly, for example, with model.invoke(…)

Declarative

Use LangChain Expression Language (LCEL), as covered in an upcoming section

Table 1-1 summarizes their differences, and we’ll see each in action next.

| Table 1-1. The main differences between imperative and declarative composition. | ||

|---|---|---|

| – | ——————————————————————————— | – |

| Imperative | Declarative | |

|---|---|---|

| Syntax | All of Python or JavaScript | LCEL |

| Parallel execution | Python: with threads or coroutines JavaScript: with Promise.all |

Automatic |

| Streaming | With yield keyword | Automatic |

| Async execution | With async functions | Automatic |

Imperative Composition

Imperative composition is just a fancy name for writing the code you’re used to writing, composing these components into functions and classes. Here’s an example combining prompts, models, and output parsers:

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables import chain

# the building blocks

template = ChatPromptTemplate.from_messages([

('system', 'You are a helpful assistant.'),

('human', '{question}'),])

model = ChatOpenAI()

# combine them in a function

# @chain decorator adds the same Runnable interface for any function you write

@chain

def chatbot(values):

prompt = template.invoke(values)

return model.invoke(prompt)

# use it

chatbot.invoke({"question": "Which model providers offer LLMs?"})

JavaScript

import {ChatOpenAI} from '@langchain/openai'

import {ChatPromptTemplate} from '@langchain/core/prompts'

import {RunnableLambda} from '@langchain/core/runnables'

// the building blocks

const template = ChatPromptTemplate.fromMessages([

['system', 'You are a helpful assistant.'],

['human', '{question}'],

])

const model = new ChatOpenAI()

// combine them in a function

// RunnableLambda adds the same Runnable interface for any function you write

const chatbot = RunnableLambda.from(async values => {

const prompt = await template.invoke(values)

return await model.invoke(prompt)

})

// use it

await chatbot.invoke({

"question": "Which model providers offer LLMs?"

})

The output:

AIMessage(content="Hugging Face's `transformers` library, OpenAI using the

`openai` library, and Cohere using the `cohere` library offer LLMs.")

The preceding is a complete example of a chatbot, using a prompt and chat model. As you

can see, it uses familiar Python syntax and supports any custom logic you might want to add

in that function.

On the other hand, if you want to enable streaming or async support, you'd have to modify

your function to support it. For example, streaming support can be added as follows:

Python@chain

def chatbot(values):

prompt = template.invoke(values)

for token in model.stream(prompt):yield token

for part in chatbot.stream({

"question": "Which model providers offer LLMs?"

}):

print(part)

JavaScript

const chatbot = RunnableLambda.from(async function* (values) {

const prompt = await template.invoke(values)

for await (const token of await model.stream(prompt)) {

yield token

}

})

for await (const token of await chatbot.stream({

"question": "Which model providers offer LLMs?"

})) {

console.log(token)

}

The output:

AIMessageChunk(content="Hugging")

AIMessageChunk(content=" Face's")So, either in JS or Python, you can enable streaming for your custom function by yielding the values you want to stream and then calling it with stream.

For asynchronous execution, you’d rewrite your function like this:

AIMessageChunk(content=” transformers“)

Python

…

@chain

async def chatbot(values):

prompt = await template.ainvoke(values)

return await model.ainvoke(prompt)

await chatbot.ainvoke({"question": "Which model providers offer LLMs?"})

# > AIMessage(content="""Hugging Face's `transformers` library, OpenAI using

the `openai` library, and Cohere using the `cohere` library offer LLMs.""")This one applies to Python only, as asynchronous execution is the only option in JavaScript.

Declarative Composition

LCEL is a declarative language for composing LangChain components. LangChain compiles LCEL compositions to an optimized execution plan, with automatic parallelization, streaming, tracing, and async support.

Let’s see the same example using LCEL:

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate# the building blockstemplate = ChatPromptTemplate.from_messages([('system', 'You are a helpful assistant.'),

('human', '{question}'),

])

model = ChatOpenAI()