Generative AI with LangChain

Build production-ready LLM applications and advanced agents using Python, LangChain, and LangGraph

Chapter 6: Advanced Applications and Multi-Agent Systems

In the previous chapter, we defined what an agent is. But how do we design and build a high-performing agent? Unlike the prompt engineering techniques we’ve previously explored, developing effective agents involves several distinct design patterns every developer should be familiar with. In this chapter, we’re going to discuss key architectural patterns behind agentic AI. We’ll look into multi-agentic architectures and the ways to organize communication between agents. We will develop an advanced agent with self-reflection that uses tools to answer complex exam questions. We will learn about additional LangChain and LangGraph APIs that are useful when implementing agentic architectures, such as details about LangGraph streaming and ways to implement handoff as part of advanced control flows.

Then, we’ll briefly touch on the LangGraph platform and discuss how to develop adaptive systems, by including humans in the loop, and what kind of prebuilt building blocks LangGraph offers for this. We will also look into the Tree-of-Thoughts (ToT) pattern and develop a ToT agent ourselves, discussing further ways to improve it by implementing advanced trimming mechanisms. Finally, we’ll learn about advanced long-term memory mechanisms on LangChain and LangGraph, such as caches and stores.

In all, we’ll touch on the following topics in this chapter:

- Agentic architectures

- Multi-agent architectures

- Building adaptive systems

- Exploring reasoning paths

- Agent memory

Agentic architectures

As we learned in Chapter 5, agents help humans solve tasks. Building an agent involves balancing two elements. On one side, it’s very similar to application development in the sense that you’re combining APIs (including calling foundational models) with production-ready quality. On the other side, you’re helping LLMs think and solve a task.

As we discussed in Chapter 5, agents don’t have a specific algorithm to follow. We give an LLM partial control over the execution flow, but to guide it, we use various tricks that help us as humans to reason, solve tasks, and think clearly. We should not assume that an LLM can magically figure everything out itself; at the current stage, we should guide it by creating reasoning workflows. Let’s recall the ReACT agent we learned about in Chapter 5, an example of a tool-calling pattern:

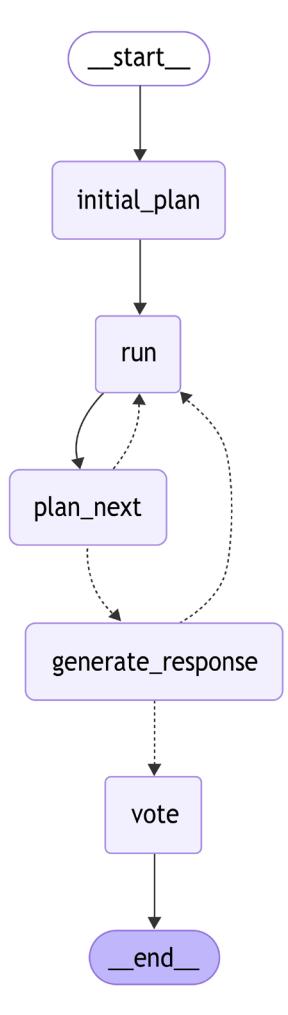

Figure 6.1: A prebuilt REACT workflow on LangGraph

Let’s look at a few relatively simple design patterns that help with building well-performing agents. You will see these patterns in various combinations across different domains and agentic architectures:

- Tool calling: LLMs are trained to do controlled generation via tool calling. Hence, wrap your problem as a tool-calling problem when appropriate instead of creating complex prompts. Keep in mind that tools should have clear descriptions and property names, and experimenting with them is part of the prompt engineering exercise. We discussed this pattern in Chapter 5.

- Task decomposition: Keep your prompts relatively simple. Provide specific instructions with few-shot examples and split complex tasks into smaller steps. You can give an LLM partial control over the task decomposition and planning process, managing the flow by an external orchestrator. We used this pattern in Chapter 5 when we built a plan-andsolve agent.

- Cooperation and diversity: Final outputs on complex tasks can be improved if you introduce cooperation between multiple instances of LLM-enabled agents. Communicating, debating, and sharing different perspectives helps, and you can also benefit from various skill sets by initiating your agents with different system prompts, available toolsets, etc. Natural language is a native way for such agents to communicate since LLMs were trained on natural language tasks.

- Reflection and adaptation: Adding implicit loops of reflection generally improves the quality of end-to-end reasoning on complex tasks. LLMs get feedback from the external environment by calling the tools (and these calls might fail or produce unexpected results), but at the same time, LLMs can continue iterating and self-recover from their mistakes. As an exaggeration, remember that we often use the same LLM-as-a-judge, so adding a loop when we ask an LLM to evaluate its own reasoning and find errors often helps it to recover. We will learn how to build adaptive systems later in this chapter.

- Models are nondeterministic and can generate multiple candidates: Do not focus on a single output; explore different reasoning paths by expanding the dimension of potential options to try out when an LLM interacts with the external environment when looking for the solution. We will investigate this pattern in more detail in the section below when we discuss ToT and Language Agent Tree Search (LATS) examples.

• Code-centric problem framing: Writing code is very natural for an LLM, so try to frame the problem as a code-writing problem if possible. This might become a very powerful way of solving the task, especially if you wrap it with a code-executing sandbox, a loop for improvement based on the output, access to various powerful libraries for data analysis or visualization, and a generation step afterward. We will go into more detail in Chapter 7.

Two important comments: first, develop your agents aligned with the best software development practices, and make them agile, modular, and easily configurable. That would allow you to put multiple specialized agents together, and give users the opportunity to easily tune each agent based on their specific task.

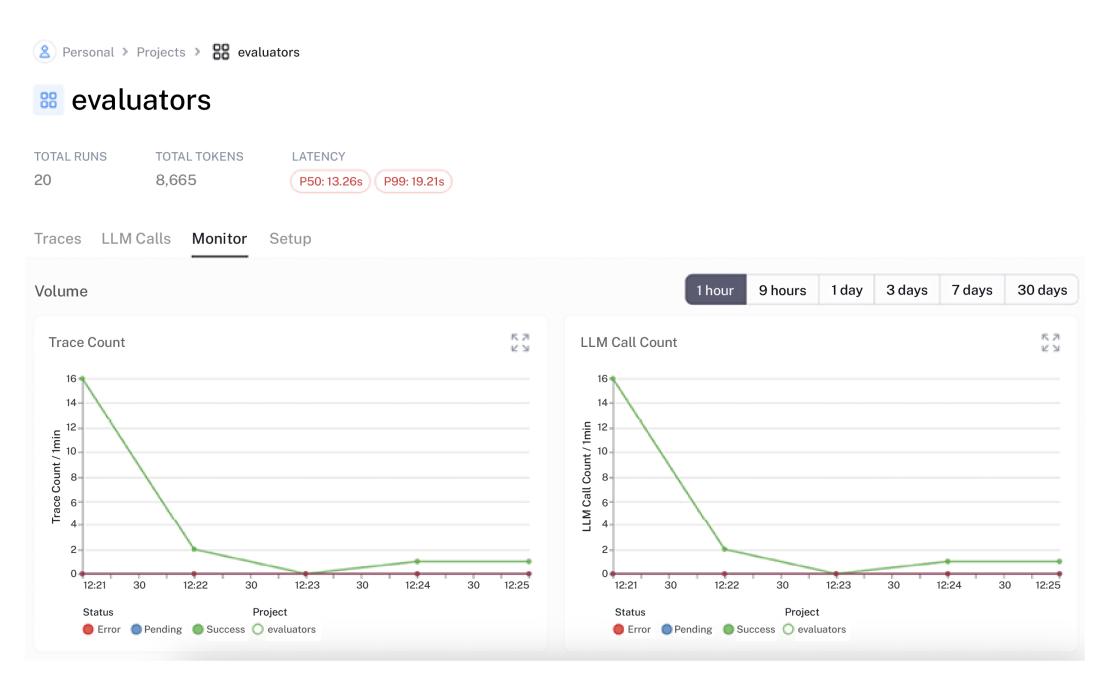

Second, we want to emphasize (once again!) the importance of evaluation and experimentation. We will talk about evaluation in more detail in Chapter 9. But it’s important to keep in mind that there is no clear path to success. Different patterns work better on different types of tasks. Try things, experiment, iterate, and don’t forget to evaluate the results of your work. Data, such as tasks and expected outputs, and simulators, a safe way for LLMs to interact with tools, are key to building really complex and effective agents.

Now that we have created a mental map of various design patterns, we’ll look deeper into these principles by discussing various agentic architectures and looking at examples. We will start by enhancing the RAG architecture we discussed in Chapter 4 with an agentic approach.

Agentic RAG

LLMs enable the development of intelligent agents capable of tackling complex, non-repetitive tasks that defy description as deterministic workflows. By splitting reasoning into steps in different ways and orchestrating them in a relatively simple way, agents can demonstrate a significantly higher task completion rate on complex open tasks.

This agent-based approach can be applied across numerous domains, including RAG systems, which we discussed in Chapter 4. As a reminder, what exactly is agentic RAG? Remember, a classic pattern for a RAG system is to retrieve chunks given the query, combine them into the context, and ask an LLM to generate an answer given a system prompt, combined context, and the question.

We can improve each of these steps using the principles discussed above (decomposition, tool calling, and adaptation):

• Dynamic retrieval hands over the retrieval query generation to the LLM. It can decide itself whether to use sparse embeddings, hybrid methods, keyword search, or web search. You can wrap retrievals as tools and orchestrate them as a LangGraph graph.

- • Query expansion tasks an LLM to generate multiple queries based on initial ones, and then you combine search outputs based on reciprocal fusion or another technique.

- Decomposition of reasoning on retrieved chunks allows you to ask an LLM to evaluate each individual chunk given the question (and filter it out if it’s irrelevant) to compensate for retrieval inaccuracies. Or you can ask an LLM to summarize each chunk by keeping only information given for the input question. Anyway, instead of throwing a huge piece of context in front of an LLM, you perform many smaller reasoning steps in parallel first. This can not only improve the RAG quality by itself but also increase the amount of initially retrieved chunks (by decreasing the relevance threshold) or expand each individual chunk with its neighbors. In other words, you can overcome some retrieval challenges with LLM reasoning. It might increase the overall performance of your application, but of course, it comes with latency and potential cost implications.

- Reflection steps and iterations task LLMs to dynamically iterate on retrieval and query expansion by evaluating the outputs after each iteration. You can also use additional grounding and attribution tools as a separate step in your workflow and, based on that, reason whether you need to continue working on the answer or the answer can be returned to the user.

Based on our definition from the previous chapters, RAG becomes agentic RAG when you have shared partial control with the LLM over the execution flow. For example, if the LLM decides how to retrieve, reflects on retrieved chunks, and adapts based on the first version of the answer, it becomes agentic RAG. From our perspective, at this point, it starts making sense to migrate to LangGraph since it’s designed specifically for building such applications, but of course, you can stay with LangChain or any other framework you prefer (compare how we implemented map-reduce video summarization with LangChain and LangGraph separately in Chapter 3).

Multi-agent architectures

In Chapter 5, we learned that decomposing a complex task into simpler subtasks typically increases LLM performance. We built a plan-and-solve agent that goes a step further than CoT and encourages the LLM to generate a plan and follow it. To a certain extent, this architecture was a multi-agent one since the research agent (which was responsible for generating and following the plan) invoked another agent that focused on a different type of task – solving very specific tasks with provided tools. Multi-agentic workflows orchestrate multiple agents, allowing them to enhance each other and at the same time keep agents modular (which makes it easier to test and reuse them).

We will look into a few core agentic architectures in the remainder of this chapter, and introduce some important LangGraph interfaces (such as streaming details and handoffs) that are useful to develop agents. If you’re interested, you can find more examples and tutorials on the LangChain documentation page at https://langchain-ai.github.io/langgraph/tutorials/#agentarchitectures. We’ll begin with discussing the importance of specialization in multi-agentic systems, including what the consensus mechanism is and the different consensus mechanisms.

Agent roles and specialization

When working on a complex task, we as humans know that usually, it’s beneficial to have a team with diverse skills and backgrounds. There is much evidence from research and experiments that suggests this is also true for generative AI agents. In fact, developing specialized agents offers several advantages for complex AI systems.

First, specialization improves performance on specific tasks. This allows you to:

- Select the optimal set of tools for each task type.

- Craft tailored prompts and workflows.

- Fine-tune hyperparameters such as temperature for specific contexts.

Second, specialized agents help manage complexity. Current LLMs struggle when handling too many tools at once. As a best practice, limit each agent to 5-15 different tools, rather than overloading a single agent with all available tools. How to group tools is still an open question; typically, grouping them into toolkits to create coherent specialized agents helps.

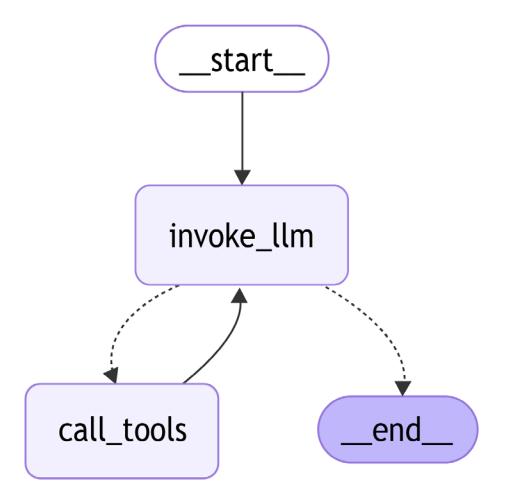

Figure 6.2: A supervisor pattern

Besides becoming specialized, keep your agents modular. It becomes easier to maintain and improve such agents. Also, by working on enterprise assistant use cases, you will eventually end up with many different agents available for users and developers within your organization that can be composed together. Hence, keep in mind that you should make such specialized agents configurable.

LangGraph allows you to easily compose graphs by including them as a subgraph in a larger graph. There are two ways of doing this:

• Compile an agent as a graph and pass it as a callable when defining a node of another agent:

builder.add_node("pay", payments_agent)• Wrap the child agent’s invocation with a Python function and use it within the definition of the parent’s node:

def _run_payment(state):

result = payments_agent.invoke({"client_id"; state["client_id"]})

return {"payment status": ...}

...

builder.add_node("pay", _run_payment)Note, that your agents might have different schemas (since they perform different tasks). In the first case, the parent agent would pass the same keys in schemas with the child agent when invoking it. In turn, when the child agent finishes, it would update the parent’s state and send back the values corresponding to matching keys in both schemas. At the same time, the second option gives you full control over how you construct a state that is passed to the child agent, and how the state of the parent agent should be updated as a result. For more information, take a look at the documentation at https://langchain-ai.github.io/langgraph/how-tos/subgraph/.

Consensus mechanism

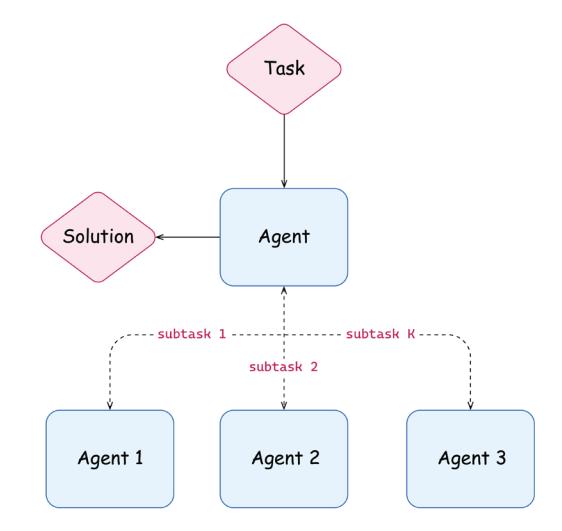

We can let multiple agents work on the same tasks in parallel as well. These agents might have a different “personality” (introduced by their system prompts; for example, some of them might be more curious and explorative, and others might be more strict and heavily grounded) or even varying architectures. Each of them independently works on getting a solution for the problem, and then you use a consensus mechanism to choose the best solution from a few drafts.

Figure 6.3: A parallel execution of the task with a final consensus step

We saw an example of implementing a consensus mechanism based on majority voting in Chapter 3. You can wrap it as a separate LangGraph node, and there are alternative ways of coming to a consensus across multiple agents:

- Let each agent see other solutions and score each of them on a scale of 0 to 1, and then take the solution with the maximum score.

- Use an alternative voting mechanism.

- Use majority voting. It typically works for classification or similar tasks, but it might be difficult to implement majority voting if you have a free-text output. This is the fastest and the cheapest (in terms of token consumption) mechanism since you don’t need to run any additional prompts.

- Use an external oracle if it exists. For instance, when solving a mathematical equation, you can easily verify if the solution is feasible. Computational costs depend on the problem but typically are low.

- Use another (maybe more powerful) LLM as a judge to pick the best solution. You can ask an LLM to come up with a score for each solution, or you can task it with a multi-class classification problem by presenting all of them and asking it to pick the best one.

- Develop another agent that excels at the task of selecting the best solution for a general task from a set of solutions.

It’s worth mentioning that a consensus mechanism has certain latency and cost implications, but typically they’re negligible relative to the costs of solving a task itself. If you task N agents with the same task, your token consumption increases N times, and the consensus mechanism adds a relatively small overhead on top of that difference.

You can also implement your own consensus mechanism. When you do this, consider the following:

- Use few-shot prompting when using an LLM as a judge.

- Add examples demonstrating how to score different input-output pairs.

- Consider including scoring rubrics for different types of responses.

- Test the mechanism on diverse outputs to ensure consistency.

One important note on parallelization – when you let LangGraph execute nodes in parallel, updates are applied to the main state in the same order as you’ve added nodes to your graph.

Communication protocols

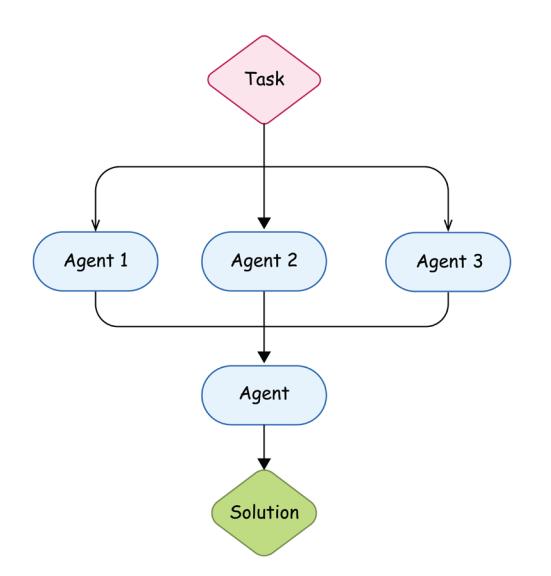

The third architecture option is to let agents communicate and work collaboratively on a task. For example, the agents might benefit from various personalities configured through system prompts. Decomposition of a complex task into smaller subtasks also helps you retain control over your application and how your agents communicate.

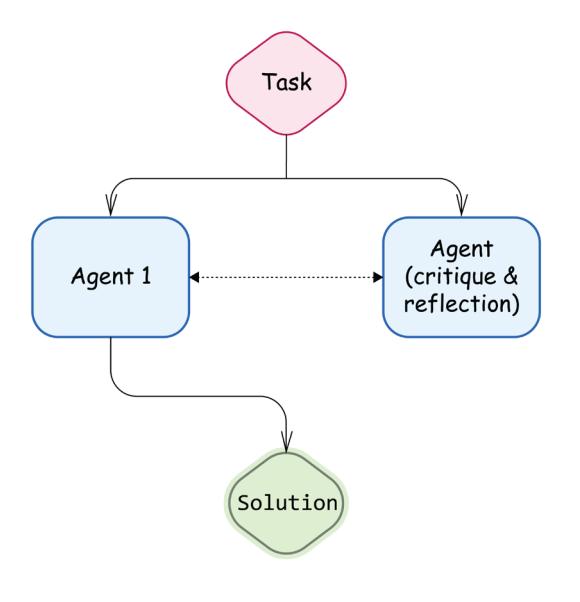

Figure 6.4: Reflection pattern

Agents can work collaboratively on a task by providing critique and reflection. There are multiple reflection patterns starting from self-reflection, when the agent analyzes its own steps and identifies areas for improvements (but as mentioned above, you might initiate the reflecting agent with a slightly different system prompt); cross-reflection, when you use another agent (for example, using another foundational model); or even reflection, which includes Human-in-the-Loop (HIL) on critical checkpoints (we’ll see in the next section how to build adaptive systems of this kind).

You can keep one agent as a supervisor, allow agents to communicate in a network (allowing them to decide which agent to send a message or a task), introduce a certain hierarchy, or develop more complex flows (for inspiration, take a look at some diagrams on the LangGraph documentation page at https://langchain-ai.github.io/langgraph/concepts/multi\_agent/).

Designing multi-agent workflows is still an open area of research and experimentation, and you need to answer a lot of questions:

- What and how many agents should we include in our system?

- What roles should we assign to these agents?

- What tools should each agent have access to?

- How should agents interact with each other and through which mechanism?

- What specific parts of the workflow should we automate?

- How do we evaluate our automation and how can we collect data for this evaluation? Additionally, what are our success criteria?

Now that we’ve examined some core considerations and open questions around multi-agent communication, let’s explore two practical mechanisms to structure and facilitate agent interactions: semantic routing, which directs tasks intelligently based on their content, and organizing interaction, detailing the specific formats and structures that agents can use to effectively exchange information.

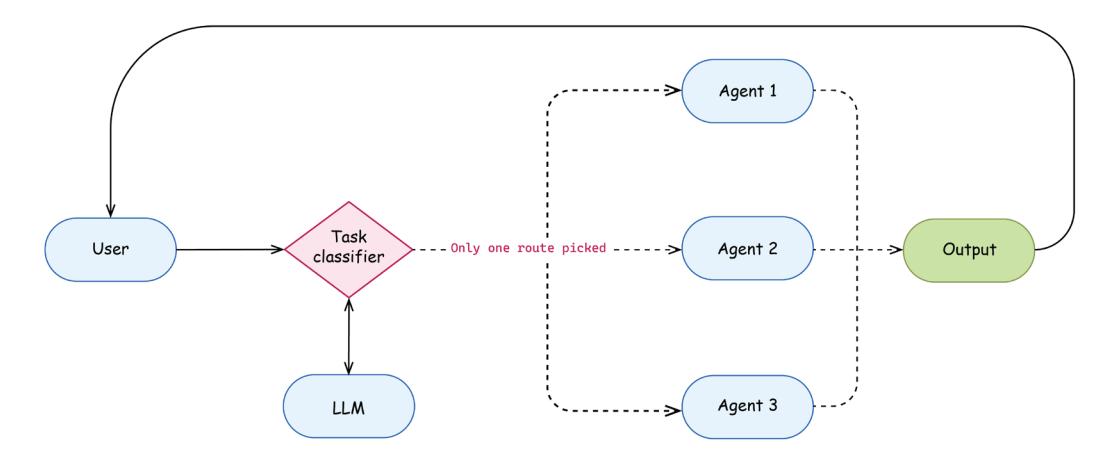

Semantic router

Among many different ways to organize communication between agents in a true multi-agent setup, an important one is a semantic router. Imagine developing an enterprise assistant. Typically it becomes more and more complex because it starts dealing with various types of questions – general questions (requiring public data and general knowledge), questions about the company (requiring access to the proprietary company-wide data sources), and questions specific to the user (requiring access to the data provided by the user itself). Maintaining such an application as a single agent becomes very difficult very soon. Again, we can apply our design patterns – decomposition and collaboration!

Imagine we have implemented three types of agents – one answering general questions grounded on public data, another one grounded on a company-wide dataset and knowing about company specifics, and the third one specialized on working with a small source of user-provided documents. Such specialization helps us to use patterns such as few-shot prompting and controlled generation. Now we can add a semantic router – the first layer that asks an LLM to classify the question and routes it to the corresponding agent based on classification results. Each agent (or some of them) might even use a self-consistency approach, as we learned in Chapter 3, to increase the LLM classification accuracy.

Figure 6.5: Semantic router pattern

It’s worth mentioning that a task might fall into two or more categories – for example, I can ask, “What is X and how can I do Y?” This might not be such a common use case in an assistant setting, and you can decide what to do in that case. First of all, you might just educate the user by replying with an explanation that they should task your application with a single problem per turn. Sometimes developers tend to be too focused on trying to solve everything programmatically. But some product features are relatively easy to solve via the UI, and users (especially in the enterprise setup) are ready to provide their input. Maybe, instead of solving a classification problem on the prompt, just add a simple checkbox in the UI, or let the system double-check if the level of confidence is low.

You can also use tool calling or other controlled generation techniques we’ve learned about to extract both goals and route the execution to two specialized agents with different tasks.

Another important aspect of semantic routing is that the performance of your application depends a lot on classification accuracy. You can use all the techniques we have discussed in the book to improve it – few-shot prompting (including dynamic one), incorporating user feedback, sampling, and others.

Organizing interactions

There are two ways to organize communication in multi-agent systems:

- Agents communicate via specific structures that force them to put their thoughts and reasoning traces in a specific form, as we saw in the plan-and-solve example in the previous chapter. We saw how our planning node communicated with the ReACT agent via a Pydantic model with a well-structured plan (which, in turn, was a result of an LLM’s controlled generation).

- On the other hand, LLMs were trained to take natural language as input and produce an output in the same format. Hence, it’s a very natural way for them to communicate via messages, and you can implement a communication mechanism by applying messages from different agents to the shared list of messages!.

When communicating with messages, you can share all messages via a so-called scratchpad – a shared list of messages. In that case, your context can grow relatively quickly and you might need to use some of the mechanisms to trim the chat memory (like preparing running summaries) that we discussed in Chapter 3. But as general advice, if you need to filter or prioritize messages in the history of communication between multiple agents, go with the first approach and let them communicate through a controlled output. It would give you more control of the state of your workflow at any given point in time. Also, you might end up with a situation where you have a complicated sequence of messages, for example, [SystemMessage, HumanMessage, AIMessage, ToolMessage, AIMessage, AIMessage, SystemMessage, …]. Depending on the foundational model you’re using, double-check that the model’s provider supports such sequences, since previously, many providers supported only relatively simple sequences – SystemMessages followed by alternating HumanMessage and AIMessage (maybe with a ToolMessage instead of a human one if a tool invocation was decided).

Another alternative is to share only the final results of each execution. This keeps the list of messages relatively short.

Now it’s time to look at a practical example. Let’s develop a research agent that uses tools to answer complex multiple-choice questions based on the public MMLU dataset (we’ll use high school geography questions). First, we need to grab a dataset from Hugging Face:

from datasets import load_dataset

ds = load_dataset("cais/mmlu", "high_school_geography")

ds_dict = ds["test"].take(2).to_dict()

print(ds_dict["question"][0])>> The main factor preventing subsistence economies from advancing

economically is the lack ofThese are our answer options:

print(ds_dict["choices"][0])>> ['a currency.', 'a well-connected transportation infrastructure.',

'government activity.', 'a banking service.']Let’s start with a ReACT agent, but let’s deviate from a default system prompt and write our own prompt. Let’s focus this agent on being creative and working on an evidence-based solution (please note that we used elements of CoT prompting, which we discussed in Chapter 3):

from langchain.agents import load_tools

from langgraph.prebuilt import create_react_agent

research_tools = load_tools(

tool_names=["ddg-search", "arxiv", "wikipedia"],

llm=llm)

system_prompt = (

"You're a hard-working, curious and creative student. "

"You're preparing an answer to an exam quesion. "

"Work hard, think step by step."

"Always provide an argumentation for your answer. "

"Do not assume anything, use available tools to search "

"for evidence and supporting statements."

)Now, let’s create the agent itself. Since we have a custom prompt for the agent, we need a prompt template that includes a system message, a template that formats the first user message based on a question and answers provided, and a placeholder for further messages to be added to the graph’s state. We also redefine the default agent’s state by inheriting from AgentState and adding additional keys to it:

from langchain_core.prompts import ChatPromptTemplate, PromptTemplate

from langgraph.graph import MessagesState

from langgraph.prebuilt.chat_agent_executor import AgentState

raw_prompt_template = (

"Answer the following multiple-choice question. "

"\nQUESTION:\n{question}\n\nANSWER OPTIONS:\n{option}\n"

)

prompt = ChatPromptTemplate.from_messages(

[("system", system_prompt),

("user", raw_prompt_template),

("placeholder", "{messages}")

]

)

class MyAgentState(AgentState):

question: str

options: str

research_agent = create_react_agent(

model=llm_small, tools=research_tools, state_schema=MyAgentState,

prompt=prompt)We could have stopped here, but let’s go further. We used a specialized research agent based on the ReACT pattern (and we slightly adjusted its default configuration). Now let’s add a reflection step to it, and use another role profile for an agent who will actionably criticize our “student’s” work:

reflection_prompt = (

"You are a university professor and you're supervising a student who is

"

"working on multiple-choice exam question. "

"nQUESTION: {question}.\nANSWER OPTIONS:\n{options}\n."

"STUDENT'S ANSWER:\n{answer}\n" "Reflect on the answer and provide a feedback whether the answer "

"is right or wrong. If you think the final answer is correct, reply

with "

"the final answer. Only provide critique if you think the answer might

"

"be incorrect or there are reasoning flaws. Do not assume anything, "

"evaluate only the reasoning the student provided and whether there is

"

"enough evidence for their answer."

)

class Response(BaseModel):

"""A final response to the user."""

answer: Optional[str] = Field(

description="The final answer. It should be empty if critique has

been provided.",

default=None,

)

critique: Optional[str] = Field(

description="A critique of the initial answer. If you think it

might be incorrect, provide an actionable feedback",

default=None,

)

reflection_chain = PromptTemplate.from_template(reflection_prompt) | llm.

with_structured_output(Response)Now we need another research agent that takes not only question and answer options but also the previous answer and the feedback. The research agent is tasked with using tools to improve the answer and address the critique. We created a simplistic and illustrative example. You can always improve it by adding error handling, Pydantic validation (for example, checking that either an answer or critique is provided), or handling conflicting or ambiguous feedback (for example, structure prompts that help the agent prioritize feedback points when there are multiple criticisms).

Note that we use a less capable LLM for our ReACT agents, just to demonstrate the power of the reflection approach (otherwise the graph might finish in a single iteration since the agent would figure out the correct answer with the first attempt):

raw_prompt_template_with_critique = (

"You tried to answer the exam question and you get feedback from your "

"professor. Work on improving your answer and incorporating the

feedback. "

"\nQUESTION:\n{question}\n\nANSWER OPTIONS:\n{options}\n\n"

"INITIAL ANSWER:\n{answer}\n\nFEEDBACK:\n{feedback}"

)

prompt = ChatPromptTemplate.from_messages(

[("system", system_prompt),

("user", raw_prompt_template_with_critique),

("placeholder", "{messages}")

]

)

class ReflectionState(ResearchState):

answer: str

feedback: str

research_agent_with_critique = create_react_agent(model=llm_small,

tools=research_tools, state_schema=ReflectionState, prompt=prompt)When defining the state of our graph, we need to keep track of the question and answer options, the current answer, and the critique. Also note that we track the amount of interaction between a student and a professor (to avoid infinite cycles between them) and we use a custom reducer for that (which summarizes old steps and new steps on each run). Let’s define the full state, nodes, and conditional edges:

from typing import Annotated, Literal, TypedDict

from langchain_core.runnables.config import RunnableConfig

from operator import add

from langchain_core.output_parsers import StrOutputParser

class ReflectionAgentState(TypedDict):

question: str options: str

answer: str

steps: Annotated[int, add]

response: Response

def _should_end(state: AgentState, config: RunnableConfig) ->

Literal["research", END]:

max_reasoning_steps = config["configurable"].get("max_reasoning_steps",

10)

if state.get("response") and state["response"].answer:

return END

if state.get("steps", 1) > max_reasoning_steps:

return END

return "research"

reflection_chain = PromptTemplate.from_template(reflection_prompt) | llm.

with_structured_output(Response)

def _reflection_step(state):

result = reflection_chain.invoke(state)

return {"response": result, "steps": 1}

def _research_start(state):

answer = research_agent.invoke(state)

return {"answer": answer["messages"][-1].content}

def _research(state):

agent_state = {

"answer": state["answer"],

"question": state["question"],

"options": state["options"],

"feedback": state["response"].critique

}

answer = research_agent_with_critique.invoke(agent_state)

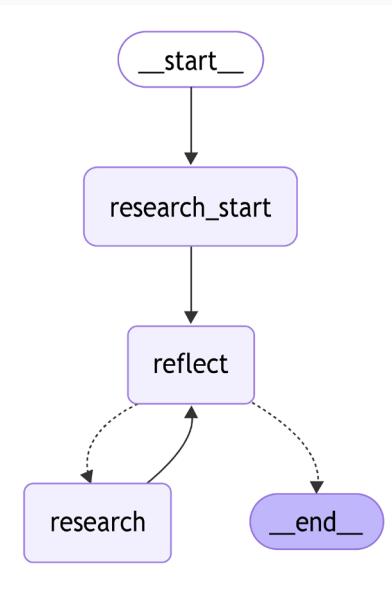

return {"answer": answer["messages"][-1].content}Let’s put it all together and create our graph:

builder = StateGraph(ReflectionAgentState)

builder.add_node("research_start", _research_start)

builder.add_node("research", _research)

builder.add_node("reflect", _reflection_step)

builder.add_edge(START, "research_start")

builder.add_edge("research_start", "reflect")

builder.add_edge("research", "reflect")

builder.add_conditional_edges("reflect", _should_end)

graph = builder.compile()display(Image(graph.get_graph().draw_mermaid_png()))

Figure 6.6: A research agent with reflection

Let’s run it and inspect what’s happening:

question = ds_dict["question"][0]

options = "\n".join(

[f"{i}. {a}" for i, a in enumerate(ds_dict["choices"][0])])

async for _, event in graph.astream({"question": question, "options":

options}, stream_mode=["updates"]):

print(event)We have omitted the full output here (you’re welcome to take the code from our GitHub repository and experiment with it yourself), but the first answer was wrong:

Based on the DuckDuckGo search results, none of the provided statements are entirely true. The searches reveal that while there has been significant progress in women’s labor force participation globally, it hasn’t reached a point where most women work in agriculture, nor has there been a worldwide decline in participation. Furthermore, the information about working hours suggests that it’s not universally true that women work longer hours than men in most regions. Therefore, there is no correct answer among the options provided.

After five iterations, the weaker LLM was able to figure out the correct answer (keep in mind that the “professor” only evaluated the reasoning itself and it didn’t use external tools or its own knowledge). Note that, technically speaking, we implemented cross-reflection and not self-reflection (since we’ve used a different LLM for reflection than the one we used for the reasoning). Here’s an example of the feedback provided during the first round:

The student’s reasoning relies on outside search results which are not provided, making it difficult to assess the accuracy of their claims. The student states that none of the answers are entirely true, but multiplechoice questions often have one best answer even if it requires nuance. To properly evaluate the answer, the search results need to be provided, and each option should be evaluated against those results to identify the most accurate choice, rather than dismissing them all. It is possible one of the options is more correct than the others, even if not perfectly true. Without the search results, it’s impossible to determine if the student’s conclusion that no answer is correct is valid. Additionally, the student should explicitly state what the search results were.

Next, let’s discuss an alternative communication style for a multi-agent setup, via a shared list of messages. But before that, we should discuss the LangGraph handoff mechanism and dive into some details of streaming with LangGraph.

LangGraph streaming

LangGraph streaming might sometimes be a source of confusion. Each graph has not only a stream and a corresponding asynchronous astream method, but also an astream_events. Let’s dive into the difference.

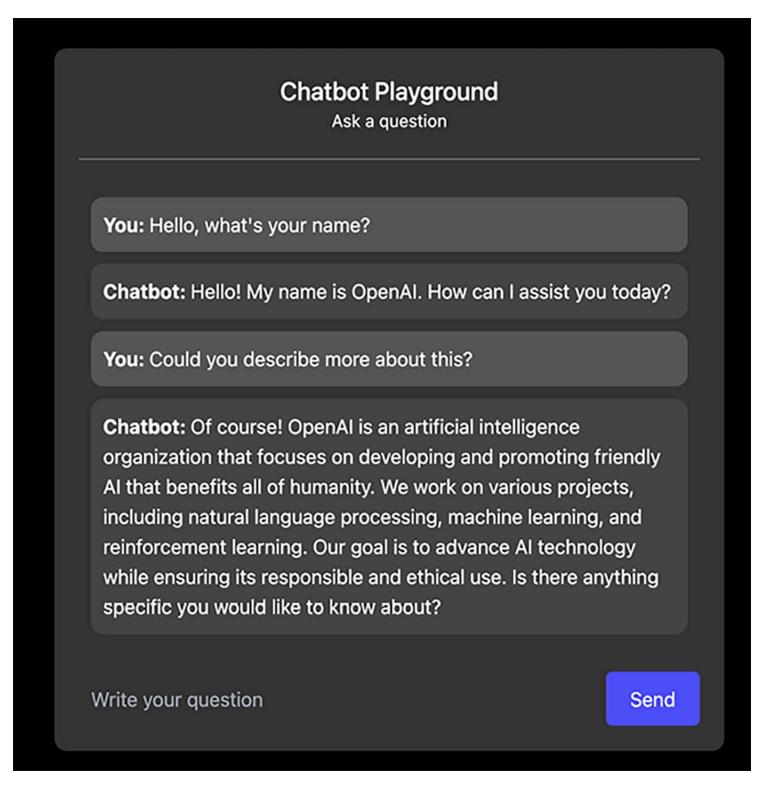

The Stream method allows you to stream changes to the graph’s state after each super-step. Remember, we discussed what a super-step is in Chapter 3, but to keep it short, it’s a single iteration over the graph where parallel nodes belong to a single super-step while sequential nodes belong to different super-steps. If you need actual streaming behavior (like in a chatbot, so that users feel like something is happening and the model is actually thinking), you should use astream with messages mode.

| Mode | Description | Output |

|---|---|---|

| updates | Streams only updates to the graph | A dictionary where each node name |

| produced by the node | maps to its corresponding state update) | |

| values | Streams the full state of the graph after | A dictionary with the entire graph’s |

| each super-step | state | |

| debug | Attempts to stream as much information | A dictionary with a timestamp, |

| as possible in the debug mode | task_type, and all the corresponding | |

| information for every event | ||

| custom | Streams events emitted by the node | A dictionary that was written from the |

| using a StreamWriter | node to a custom writer | |

| messages | Streams full events (for example, | A tuple with token or message segment |

| ToolMessages) or its chunks in a | and a dictionary containing metadata | |

| streaming node if possible (e.g., AI | from the node | |

| Messages) |

You have five modes with stream/astream methods (of course, you can combine multiple modes):

Table 6.1: Different streaming modes for LangGraph

Let’s look at an example. If we take the ReACT agent we used in the section above and stream with the values mode, we’ll get the full state returned after every super-step (you can see that the total number of messages is always increasing):

async for _, event in research_agent.astream({"question": question,

"options": options}, stream_mode=["values"]):

print(len(event["messages"]))4

If we switch to the update mode, we’ll get a dictionary where the key is the node’s name (remember that parallel nodes can be called within a single super-step) and a corresponding update to the state sent by this node:

async for _, event in research_agent.astream({"question": question,

"options": options}, stream_mode=["updates"]):

node = list(event.keys())[0]

print(node, len(event[node].get("messages", [])))

>> agent 1tools 2 agent 1

LangGraph stream always emits a tuple where the first value is a stream mode (since you can pass multiple modes by adding them to the list).

Then you need an astream_events method that streams back events happening within the nodes – not just tokens generated by the LLM but any event available for a callback:

seen_events = set([])

async for event in research_agent.astream_events({"question": question,

"options": options}, version="v1"):

if event["event"] not in seen_events:

seen_events.add(event["event"])

print(seen_events)

>> {'on_chat_model_end', 'on_chat_model_stream', 'on_chain_end', 'on_

prompt_end', 'on_tool_start', 'on_chain_stream', 'on_chain_start', 'on_

prompt_start', 'on_chat_model_start', 'on_tool_end'}You can find a full list of the events at https://python.langchain.com/docs/concepts/ callbacks/#callback-events.

Handoffs

So far, we have learned that a node in LangGraph does a chunk of work and sends updates to a common state, and an edge controls the flow – it decides which node to invoke next (in a deterministic manner or based on the current state). When implementing multi-agent architectures, your nodes can be not only functions but other agents, or subgraphs (with their own state). You might need to combine state updates and flow controls.

LangGraph allows you to do that with a Command – you can update your graph’s state and at the same time invoke another agent by passing a custom state to it. This is called a handoff – since an agent hands off control to another one. You need to pass an update – a dictionary with an update of the current state to be sent to your graph – and goto – a name (or list of names) of the nodes to hand off control to:

from langgraph.types import Command

def _make_payment(state):

...

if ...:

return Command(

update={"payment_id": payment_id},

goto="refresh_balance"

)

...A destination agent can be a node from the current or a parent (Command.PARENT) graph. In other words, you can change the control flow only within the current graph, or you can pass it back to the workflow that initiated this one (for example, you can’t pass control to any random workflow by ID). You can also invoke a Command from a tool, or wrap a Command as a tool, and then an LLM can decide to hand off control to a specific agent. In Chapter 3, we discussed the map-reduce pattern and the Send class, which allowed us to invoke a node in the graph by passing a specific input state to it. We can use Command together with Send (in this example, the destination agent belongs to the parent graph):

from langgraph.types import Send

def _make_payment(state):

...

if ...:

return Command(

update={"payment_id": payment_id},

goto=[Send("refresh_balance", {"payment_id": payment_id}, ...],

graph=Command.PARENT

)

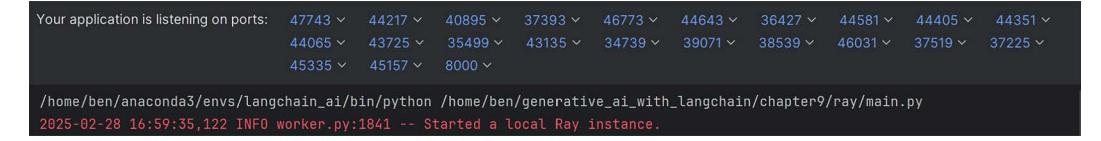

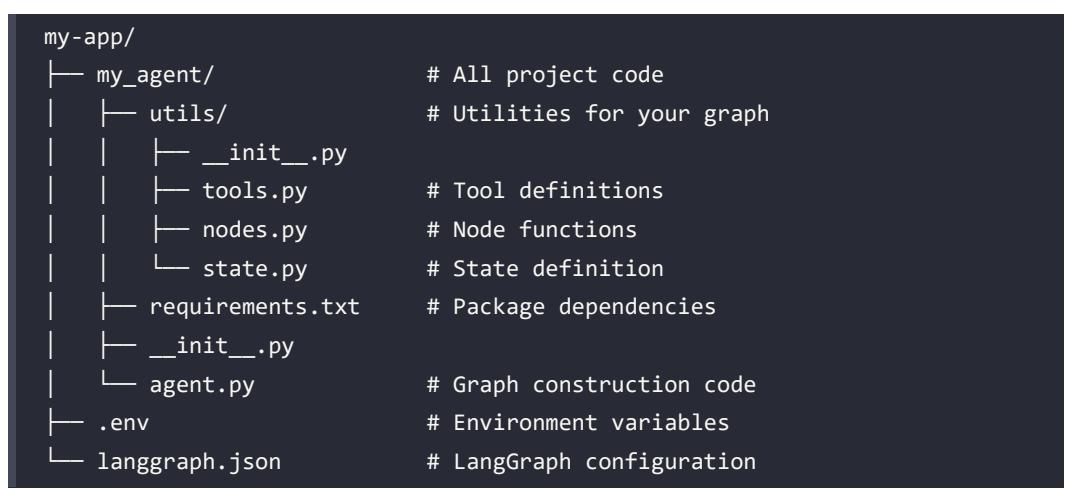

...LangGraph platform

LangGraph and LangChain, as you know, are open-source frameworks, but LangChain as a company offers the LangGraph platform – a commercial solution that helps you develop, manage, and deploy agentic applications. One component of the LangGraph platform is LangGraph Studio – an IDE that helps you visualize and debug your agents – and another is LangGraph Server.

You can read more about the LangGraph platform at the official website (https://langchain-ai. github.io/langgraph/concepts/#langgraph-platform), but let’s discuss a few key concepts for a better understanding of what it means to develop an agent.

After you’ve developed an agent, you can wrap it as an HTTP API (using Flask, FastAPI, or any other web framework). The LangGraph platform offers you a native way to deploy agents, and it wraps them with a unified API (which makes it easier for your applications to use these agents). When you’ve built your agent as a LangGraph graph object, you deploy an assistant – a specific deployment that includes an instance of your graph coupled together with a configuration. You can easily version and configure assistants in the UI, but it’s important to keep parameters configurable (and pass them as RunnableConfig to your nodes and tools).

Another important concept is a thread. Don’t be confused, a LangGraph thread is a different concept from a Python thread (and when you pass a thread_id in your RunnableConfig, you’re passing a LangGraph thread ID). When you think about LangGraph threads, think about conversation or Reddit threads. A thread represents a session between your assistant (a graph with a specific configuration) and a user. You can add per-thread persistence using the checkpointing mechanism we discussed in Chapter 3.

A run is an invocation of an assistant. In most cases, runs are executed on a thread (for persistence). LangGraph Server also allows you to schedule stateless runs – they are not assigned to any thread, and because of that, the history of interactions is not persisted. LangGraph Server allows you to schedule long-running runs, scheduled runs (a.k.a. crons), etc., and it also offers a rich mechanism for webhooks attached to runs and polling results back to the user.

We’re not going to discuss the LangGraph Server API in this book. Please take a look at the documentation instead.

Building adaptive systems

Adaptability is a great attribute of agents. They should adapt to external and user feedback and correct their actions accordingly. As we discussed in Chapter 5, generative AI agents are adaptive through:

- Tool interaction: They incorporate feedback from previous tool calls and their outputs (by including ToolMessages that represent tool-calling results) when planning the next steps (like our ReACT agent adjusting based on search results).

- Explicit reflection: They can be instructed to analyze current results and deliberately adjust their behavior.

- Human feedback: They can incorporate user input at critical decision points.

Dynamic behavior adjustment

We saw how to add a reflection step to our plan-and-solve agent. Given the initial plan, and the output of the steps performed so far, we’ll ask the LLM to reflect on the plan and adjust it. Again, we continue reiterating the key idea – such reflection might not happen naturally; you might add it as a separate task (decomposition), and you keep partial control over the execution flow by designing its generic components.

Human-in-the-loop

Additionally, when developing agents with complex reasoning trajectories, it might be beneficial to incorporate human feedback at a certain point. An agent can ask a human to approve or reject certain actions (for example, when it’s invoking a tool that is irreversible, like a tool that makes a payment), provide additional context to the agent, or give a specific input by modifying the graph’s state.

Imagine we’re developing an agent that searches for job postings, generates an application, and sends this application. We might want to ask the user before submitting an application, or the logic might be more complex – the agent might be collecting data about the user, and for some job postings, it might be missing relevant context about past job experience. It should ask the user and persist this knowledge in long-term memory for better long-term adaptation.

LangGraph has a special interrupt function to implement HIL-type interactions. You should include this function in the node, and by the first execution, it would throw a GraphInterrupt exception (the value of which would be presented to the user). To resume the execution of the graph, a client should use the Command class, which we discussed earlier in this chapter. LangGraph would start from the same node, re-execute it, and return corresponding values as a result of the node invoking the interrupt function (if there are multiple interrupts in your node, LangGraph would keep an ordering). You can also use Command to route to different nodes based on the user’s input. Of course, you can use interrupt only when a checkpointer is provided to the graph since its state should be persisted.

Let’s construct a very simple graph with only the node that asks a user for their home address:

from langgraph.types import interrupt, Command

class State(MessagesState):

home_address: Optional[str]

def _human_input(state: State):

address = interrupt("What is your address?")

return {"home_address": address}

builder = StateGraph(State)

builder.add_node("human_input", _human_input)

builder.add_edge(START, "human_input")

checkpointer = MemorySaver()

graph = builder.compile(checkpointer=checkpointer)

config = {"configurable": {"thread_id": "1"}}

for chunk in graph.stream({"messages": [("human", "What is weather

today?")]}, config):

print(chunk)

>> {'__interrupt__': (Interrupt(value='What is your address?',

resumable=True, ns=['human_input:b7e8a744-b404-0a60-7967-ddb8d30b11e3'],The graph returns us a special __interrupt__ state and stops. Now our application (the client) should ask the user this question, and then we can resume. Please note that we’re providing the same thread_id to restore from the checkpoint:

for chunk in graph.stream(Command(resume="Munich"), config):

print(chunk){‘human_input’: {‘home_address’: ‘Munich’}}

Note that the graph continued to execute the human_input node, but this time the interrupt function returned the result, and the graph’s state was updated.

So far, we’ve discussed a few architectural patterns on how to develop an agent. Now let’s take a look at another interesting one that allows LLMs to run multiple simulations while they’re looking for a solution.

Exploring reasoning paths

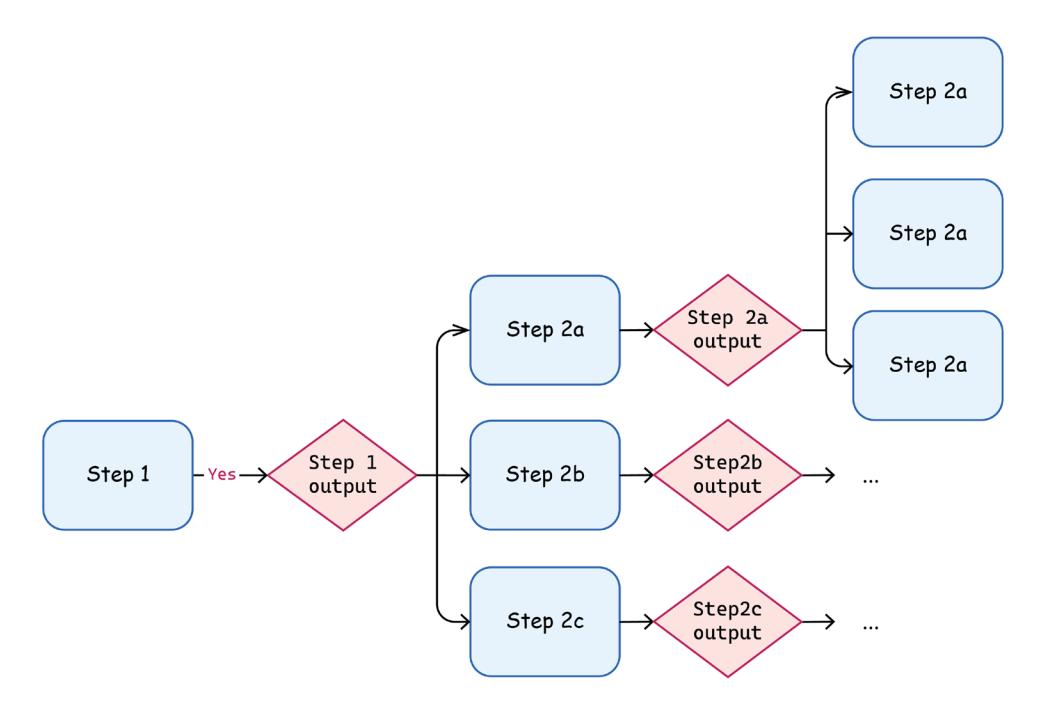

In Chapter 3, we discussed CoT prompting. But with CoT prompting, the LLM creates a reasoning path within a single turn. What if we combine the decomposition pattern and the adaptation pattern by splitting this reasoning into pieces?

Tree of Thoughts

Researchers from Google DeepMind and Princeton University introduced the ToT technique in December 2023. They generalize the CoT pattern and use thoughts as intermediate steps in the exploration process toward the global solution.

Let’s return to the plan-and-solve agent we built in the previous chapter. Let’s use the non-deterministic nature of LLMs to improve it. We can generate multiple candidates for the next action in the plan on every step (we might need to increase the temperature of the underlying LLM). That would help the agent to be more adaptive since the next plan generated will take into account the outputs of the previous step.

Now we can build a tree of various options and explore this tree with the depth-for-search or breadth-for-search method. At the end, we’ll get multiple solutions, and we’ll use some of the consensus mechanisms discussed above to pick the best one (for example, LLM-as-a-judge).

Figure 6.7: Solution path exploration with ToT

Please note that the model’s provider should support the generation of multiple candidates in the response (not all providers support this feature).

We would like to highlight (and we’re not tired of doing this repeatedly in this chapter) that there’s nothing entirely new in the ToT pattern. You take what algorithms and patterns have been used already in other areas, and you use them to build capable agents.

Now it’s time to do some coding. We’ll take the same components of the plan-and-solve agents we developed in Chapter 5 – a planner that creates an initial plan and execution_agent, which is a research agent with access to tools and works on a specific step in the plan. We can make our execution agent simpler since we don’t need a custom state:

execution_agent = prompt_template | create_react_agent(model=llm,

tools=tools)We also need a replanner component, which will take care of adjusting the plan based on previous observations and generating multiple candidates for the next action:

from langchain_core.prompts import ChatPromptTemplate

class ReplanStep(BaseModel):

"""Replanned next step in the plan."""

steps: list[str] = Field(

description="different options of the proposed next step"

)

llm_replanner = llm.with_structured_output(ReplanStep)

replanner_prompt_template = (

"Suggest next action in the plan. Do not add any superfluous steps.\n"

"If you think no actions are needed, just return an empty list of

steps. "

"TASK: {task}\n PREVIOUS STEPS WITH OUTPUTS: {current_plan}"

)

replanner_prompt = ChatPromptTemplate.from_messages(

[("system", "You're a helpful assistant. You goal is to help with

planning actions to solve the task. Do not solve the task itself."),

("user", replanner_prompt_template)

]

)

replanner = replanner_prompt | llm_replannerThis replanner component is crucial for our ToT approach. It takes the current plan state and generates multiple potential next steps, encouraging exploration of different solution paths rather than following a single linear sequence.

To track our exploration path, we need a tree data structure. The TreeNode class below helps us maintain it:

class TreeNode:

def __init__( self,

node_id: int,

step: str,

step_output: Optional[str] = None,

parent: Optional["TreeNode"] = None,

):

self.node_id = node_id

self.step = step

self.step_output = step_output

self.parent = parent

self.children = []

self.final_response = None

def __repr__(self):

parent_id = self.parent.node_id if self.parent else "None"

return f"Node_id: {self.node_id}, parent: {parent_id}, {len(self.

children)} children."

def get_full_plan(self) -> str:

"""Returns formatted plan with step numbers and past results."""

steps = []

node = self

while node.parent:

steps.append((node.step, node.step_output))

node = node.parent

full_plan = []

for i, (step, result) in enumerate(steps[::-1]):

if result:

full_plan.append(f"# {i+1}. Planned step: {step}\nResult:

{result}\n")

return "\n".join(full_plan)Each TreeNode tracks its identity, current step, output, parent relationship, and children. We also created a method to get a formatted full plan (we’ll substitute it in place of the prompt’s template), and just to make debugging more convenient, we overrode a __repr__ method that returns a readable description of the node.

Now we need to implement the core logic of our agent. We will explore our tree of actions in a depth-for-search mode. This is where the real power of the ToT pattern comes into play:

async def _run_node(state: PlanState, config: RunnableConfig):

node = state.get("next_node")

visited_ids = state.get("visited_ids", set())

queue = state["queue"]

if node is None:

while queue and not node:

node = state["queue"].popleft()

if node.node_id in visited_ids:

node = None

if not node:

return Command(goto="vote", update={})

step = await execution_agent.ainvoke({

"previous_steps": node.get_full_plan(),

"step": node.step,

"task": state["task"]})

node.step_output = step["messages"][-1].content

visited_ids.add(node.node_id)

return {"current_node": node, "queue": queue, "visited_ids": visited_ids,

"next_node": None}

async def _plan_next(state: PlanState, config: RunnableConfig) ->

PlanState:

max_candidates = config["configurable"].get("max_candidates", 1)

node = state["current_node"]

next_step = await replanner.ainvoke({"task": state["task"], "current_

plan": node.get_full_plan()})

if not next_step.steps:

return {"is_current_node_final": True}

max_id = state["max_id"]

for step in next_step.steps[:max_candidates]:

child = TreeNode(node_id=max_id+1, step=step, parent=node)

max_id += 1

node.children.append(child)

state["queue"].append(child)return {"is_current_node_final": False, "next_node": child, "max_id":

max_id}

async def _get_final_response(state: PlanState) -> PlanState:

node = state["current_node"]

final_response = await responder.ainvoke({"task": state["task"], "plan":

node.get_full_plan()})

node.final_response = final_response

return {"paths_explored": 1, "candidates": [final_response]}The _run_node function executes the current step, while _plan_next generates new candidate steps and adds them to our exploration queue. When we reach a final node (one where no further steps are needed), _get_final_response generates a final solution by picking the best one from multiple candidates (originating from different solution paths explored). Hence, in our agent’s state, we should keep track of the root node, the next node, the queue of nodes to be explored, and the nodes we’ve already explored:

import operator

from collections import deque

from typing import Annotated

class PlanState(TypedDict):

task: str

root: TreeNode

queue: deque[TreeNode]

current_node: TreeNode

next_node: TreeNode

is_current_node_final: bool

paths_explored: Annotated[int, operator.add]

visited_ids: set[int]

max_id: int

candidates: Annotated[list[str], operator.add]

best_candidate: strThis state structure keeps track of everything we need: the original task, our tree structure, exploration queue, path metadata, and candidate solutions. Note the special Annotated types that use custom reducers (like operator.add) to handle merging state values properly.

One important thing to keep in mind is that LangGraph doesn’t allow you to modify state directly. In other words, if we execute something like the following within a node, it won’t have an effect on the actual queue in the agent’s state:

def my_node(state):

queue = state["queue"]

node = queue.pop()

...

queue.append(another_node)

return {"key": "value"}If we want to modify the queue that belongs to the state itself, we should either use a custom reducer (as we discussed in Chapter 3) or return the queue object to be replaced (since under the hood, LangGraph always created deep copies of the state before passing it to the node).

We need to define the final step now – the consensus mechanism to choose the final answer based on multiple generated candidates:

prompt_voting = PromptTemplate.from_template(

"Pick the best solution for a given task. "

"\nTASK:{task}\n\nSOLUTIONS:\n{candidates}\n"

)

def _vote_for_the_best_option(state):

candidates = state.get("candidates", [])

if not candidates:

return {"best_response": None}

all_candidates = []

for i, candidate in enumerate(candidates):

all_candidates.append(f"OPTION {i+1}: {candidate}")

response_schema = {

"type": "STRING",

"enum": [str(i+1) for i in range(len(all_candidates))]}

llm_enum = ChatVertexAI(

model_name="gemini-2.0-flash-001", response_mime_type="text/x.enum",

response_schema=response_schema)

result = (prompt_voting | llm_enum | StrOutputParser()).invoke(

{"candidates": "\n".join(all_candidates), "task": state["task"]})

return {"best_candidate": candidates[int(result)-1]}This voting mechanism presents all candidate solutions to the model and asks it to select the best one, leveraging the model’s ability to evaluate and compare options.

Now let’s add the remaining nodes and edges of the agent. We need two nodes – the one that creates an initial plan and another that evaluates the final output. Alongside these, we define two corresponding edges that evaluate whether the agent should continue on its exploration and whether it’s ready to provide a final response to the user:

from typing import Literal

from langgraph.graph import StateGraph, START, END

from langchain_core.runnables import RunnableConfig

from langchain_core.output_parsers import StrOutputParser

from langgraph.types import Command

final_prompt = PromptTemplate.from_template(

"You're a helpful assistant that has executed on a plan."

"Given the results of the execution, prepare the final response.\n"

"Don't assume anything\nTASK:\n{task}\n\nPLAN WITH RESUlTS:\n{plan}\n"

"FINAL RESPONSE:\n"

)

responder = final_prompt | llm | StrOutputParser()

async def _build_initial_plan(state: PlanState) -> PlanState:

plan = await planner.ainvoke(state["task"])

queue = deque()

root = TreeNode(step=plan.steps[0], node_id=1)

queue.append(root)

current_root = root

for i, step in enumerate(plan.steps[1:]):

child = TreeNode(node_id=i+2, step=step, parent=current_root)

current_root.children.append(child)

queue.append(child)

current_root = child

return {"root": root, "queue": queue, "max_id": i+2}async def _get_final_response(state: PlanState) -> PlanState:

node = state["current_node"]

final_response = await responder.ainvoke({"task": state["task"], "plan":

node.get_full_plan()})

node.final_response = final_response

return {"paths_explored": 1, "candidates": [final_response]}

def _should_create_final_response(state: PlanState) -> Literal["run",

"generate_response"]:

return "generate_response" if state["is_current_node_final"] else "run"

def _should_continue(state: PlanState, config: RunnableConfig) ->

Literal["run", "vote"]:

max_paths = config["configurable"].get("max_paths", 30)

if state.get("paths_explored", 1) > max_paths:

return "vote"

if state["queue"] or state.get("next_node"):

return "run"

return "vote"These functions round out our implementation by defining the initial plan creation, final response generation, and flow control logic. The _should_create_final_response and _should_continue functions determine when to generate a final response and when to continue exploration. With all the components in place, we construct the final state graph:

builder = StateGraph(PlanState)

builder.add_node("initial_plan", _build_initial_plan)

builder.add_node("run", _run_node)

builder.add_node("plan_next", _plan_next)

builder.add_node("generate_response", _get_final_response)

builder.add_node("vote", _vote_for_the_best_option)

builder.add_edge(START, "initial_plan")

builder.add_edge("initial_plan", "run")

builder.add_edge("run", "plan_next")

builder.add_conditional_edges("plan_next", _should_create_final_response)

builder.add_conditional_edges("generate_response", _should_continue)

builder.add_edge("vote", END)graph = builder.compile()

from IPython.display import Image, display

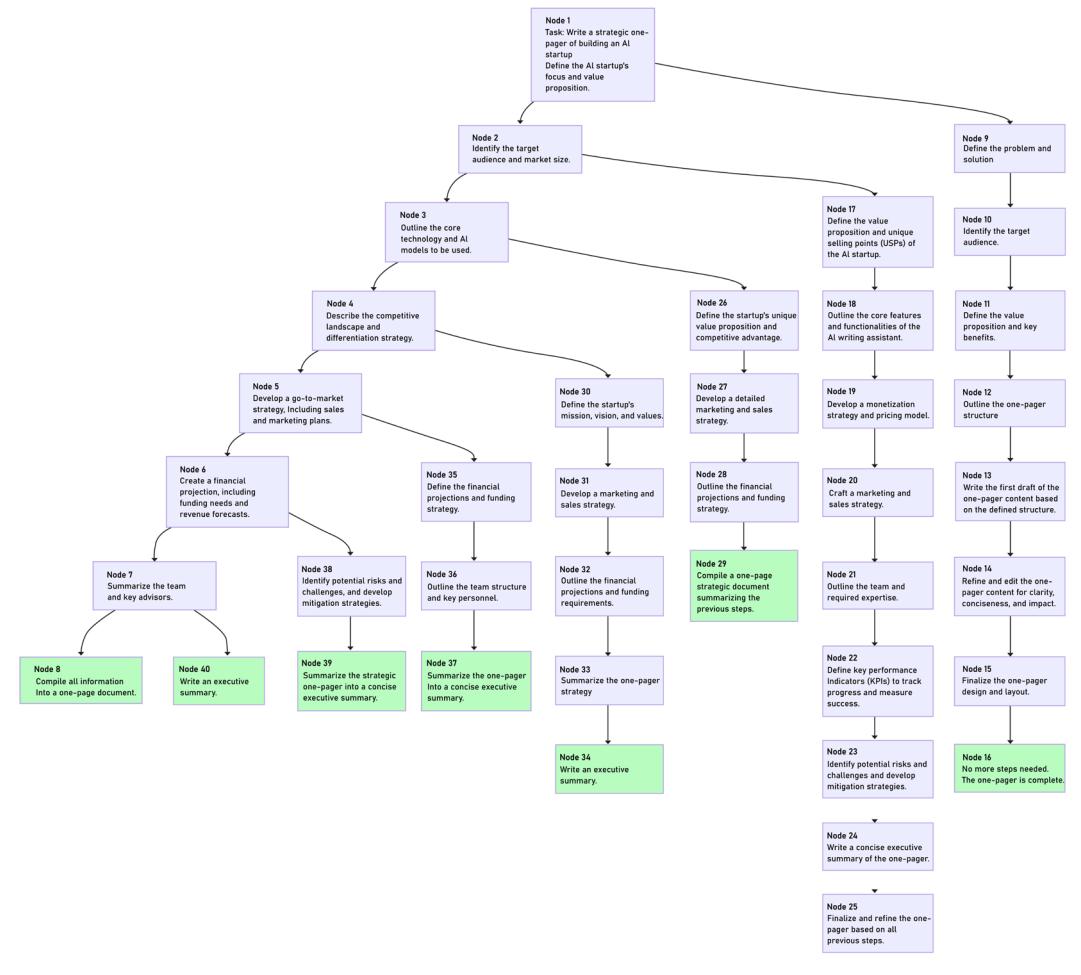

display(Image(graph.get_graph().draw_mermaid_png()))This creates our finished agent with a complete execution flow. The graph begins with initial planning, proceeds through execution and replanning steps, generates responses for completed paths, and finally selects the best solution through voting. We can visualize the flow using the Mermaid diagram generator, giving us a clear picture of our agent’s decision-making process:

Figure 6.8: LATS agent

We can control the maximum number of super-steps, the maximum number of paths in the tree to be explored (in particular, the maximum number of candidates for the final solution to be generated), and the number of candidates per step. Potentially, we could extend our config and control the maximum depth of the tree. Let’s run our graph:

task = "Write a strategic one-pager of building an AI startup"

result = await graph.ainvoke({"task": task}, config={"recursion_limit":

10000, "configurable": {"max_paths": 10}})

print(len(result["candidates"]))

print(result["best_candidate"])We can also visualize the explored tree:

Figure 6.9: Example of an explored execution tree

We limited the number of candidates, but we can potentially increase it and add additional pruning logic (which will prune the leaves that are not promising). We can use the same LLM-as-a-judge approach, or use some other heuristic for pruning. We can also explore more advanced pruning strategies; we’ll talk about one of them in the next section.

Trimming ToT with MCTS

Some of you might remember AlphaGo – the first computer program that defeated humans in a game of Go. Google DeepMind developed it back in 2015, and it used Monte Carlo Tree Search (MCTS) as the core decision-making algorithm. Here’s a simple idea of how it works. Before taking the next move in a game, the algorithm builds a decision tree with potential future moves, with nodes representing your moves and your opponent’s potential responses (this tree expands quickly, as you can imagine). To keep the tree from expanding too fast, they used MCTS to search only through the most promising paths that lead to a better state in the game.

Now, coming back to the ToT pattern we learned about in the previous chapter. Think about the fact that the dimensionality of the ToT we’ve been building in the previous section might grow really fast. If, on every step, we’re generating 3 candidates and there are only 5 steps in the workflow, we’ll end up with 35 =243 steps to evaluate. That incurs a lot of cost and time. We can trim the dimensionality in different ways, for example, by using MCTS. It includes selection and simulation components:

- Selection helps you pick the next node when analyzing the tree. You do that by balancing exploration and exploitation (you estimate the most promising node but add some randomness to this process).

- After you expand the tree by adding a new child to it, if it’s not a terminal node, you need to simulate the consequences of it. This might be done just by randomly playing all the next moves until the end, or using more sophisticated simulation approaches. After evaluating the child, you backpropagate the results to all the parent nodes by adjusting their probability scores for the next round of selection.

We’re not aiming to go into the details and teach you MCTS. We only want to demonstrate how you apply already-existing algorithms to agentic workflows to increase their performance. One such example is a LATS approach suggested by Andy Zhou and colleagues in June 2024 in their paper Language Agent Tree Search Unifies Reasoning, Acting, and Planning in Language Models. Without going into too much detail (you’re welcome to look at the original paper or the corresponding tutorials), the authors added MCTS on top of ToT, and they demonstrated an increased performance on complex tasks by getting number 1 on the HumanEval benchmark.

The key idea was that instead of exploring the whole tree, they use an LLM to evaluate the quality of the solution you get at every step (by looking at the sequence of all the steps on these specific reasoning steps and the outputs you’ve got so far).

Now, as we’ve discussed some more advanced architectures that allow us to build better agents, there’s one last component to briefly touch on – memory. Helping agents to retain and retrieve relevant information from long-term interactions helps us to develop more advanced and helpful agents.

Agent memory

We discussed memory mechanisms in Chapter 3. To recap, LangGraph has the notion of short-term memory via the Checkpointer mechanism, which saves checkpoints to persistent storage. This is the so-called per-thread persistence (remember, we discussed earlier in this chapter that the notion of a thread in LangGraph is similar to a conversation). In other words, the agent remembers our interactions within a given session, but it starts from scratch each time.

As you can imagine, for complex agents, this memory mechanism might be inefficient for two reasons. First, you might lose important information about the user. Second, during the exploration phase when looking for a solution, an agent might learn something important about the environment that it forgets each time – and it doesn’t look efficient. That’s why there’s the concept of long-term memory, which helps an agent to accumulate knowledge and gain from historical experiences, and enables its continuous improvement on the long horizon.

How to design and use long-term memory in practice is still an open question. First, you need to extract useful information (keeping in mind privacy requirements too; more about that in Chapter 9) that you want to store during the runtime and then you need to extract it during the next execution. Extraction is close to the retrieval problem we discussed while talking about RAG since we need to extract only knowledge relevant to the given context. The last component is the compaction of memory – you probably want to periodically self-reflect on what you have learned, optimize it, and forget irrelevant facts.

These are key considerations to take into account, but we haven’t seen any great practical implementations of long-term memory for agentic workflows yet. In practice, these days people typically use two components – a built-in cache (a mechanism to cache LLMs responses), a built-in store (a persistent key-value store), and a custom cache or database. Use the custom option when:

- You need additional flexibility for how you organize memory for example, you would like to keep track of all memory states.

- You need advanced read or write access patterns when working with this memory.

• You need to keep the memory distributed and across multiple workers, and you’d like to use a database other than PostgreSQL.

Cache

Caching allows you to save and retrieve key values. Imagine you’re working on an enterprise question-answering assistance application, and in the UI, you ask a user whether they like the answer. If the answer is positive, or if you have a curated dataset of question-answer pairs for the most important topics, you can store these in a cache. When the same (or a similar) question is asked later, the system can quickly return the cached response instead of regenerating it from scratch.

LangChain allows you to set a global cache for LLM responses in the following way (after you have initialized the cache, the LLM’s response will be added to the cache, as we’ll see below):

from langchain_core.caches import InMemoryCache

from langchain_core.globals import set_llm_cache

cache = InMemoryCache()

set_llm_cache(cache)

llm = ChatVertexAI(model="gemini-2.0-flash-001", temperature=0.5)

llm.invoke("What is the capital of UK?")Caching with LangChain works as follows: Each vendor’s implementation of a ChatModel inherits from the base class, and the base class first tries to look up a value in the cache during generation. cache is a global variable that we can expect (of course, only after it has been initialized). It caches responses based on the key that consists of a string representation of the prompt and the string representation of the LLM instance (produced by the llm._get_llm_string method).

This means the LLM’s generation parameters (such as stop_words or temperature) are included in the cache key:

import langchain

print(langchain.llm_cache._cache)LangChain supports in-memory and SQLite caches out of the box (they form part of langchain_ core.caches), and there are also many vendor integrations – available through the langchain_ community.cache subpackage at https://python.langchain.com/api\_reference/community/ cache.html or through specific vendor integrations (for example, langchain-mongodb offers cache integration for MongoDB: https://langchain-mongodb.readthedocs.io/en/latest/ langchain\_mongodb/api\_docs.html).

We recommend introducing a separate LangGraph node instead that hits an actual cache (based on Redis or another database), since it allows you to control whether you’d like to search for similar questions using the embedding mechanism we discussed in Chapter 4 when we were talking about RAG.

Store

As we have learned before, a Checkpointer mechanism allows you to enhance your workflows with a thread-level persistent memory; by thread-level, we mean a conversation-level persistence. Each conversation can be started where it stops, and the workflow executes the previously collected context.

A BaseStore is a persistent key-value storage system that organizes your values by namespace (hierarchical tuples of string paths, similar to folders. It supports standard operations such as put, delete and get operations, as well as a search method that implements different semantic search capabilities (typically, based on the embedding mechanism) and accounts for a hierarchical nature of namespaces.

Let’s initialize a store and add some values to it:

from langgraph.store.memory import InMemoryStore

in_memory_store = InMemoryStore()

in_memory_store.put(namespace=("users", "user1"), key="fact1",

value={"message1": "My name is John."})

in_memory_store.put(namespace=("users", "user1", "conv1"), key="address",

value={"message": "I live in Berlin."})We can easily query the value:

in_memory_store.get(namespace=("users", "user1", "conv1"), key="address")Item(namespace=[‘users’, ‘user1’], key=‘fact1’, value={‘message1’: ‘My name is John.’}, created_at=‘2025-03-18T14:25:23.305405+00:00’, updated_ at=‘2025-03-18T14:25:23.305408+00:00’)

If we query it by a partial path of the namespace, we won’t get any results (we need a full matching namespace). The following would return no results:

in_memory_store.get(namespace=("users", "user1"), key="conv1")On the other side, when using search, we can use a partial namespace path:

print(len(in_memory_store.search(("users", "user1", "conv1"),

query="name")))

print(len(in_memory_store.search(("users", "user1"), query="name")))

>> 1

2As you can see, we were able to retrieve all relevant facts stored in memory by using a partial search.

LangGraph has built-in InMemoryStore and PostgresStore implementations. Agentic memory mechanisms are still evolving. You can build your own implementation from available components, but we should see a lot of progress in the coming years or even months.

Summary

In this chapter, we dived deep into advanced applications of LLMs and the architectural patterns that enable them, leveraging LangChain and LangGraph. The key takeaway is that effectively building complex AI systems goes beyond simply prompting an LLM; it requires careful architectural design of the workflow itself, tool usage, and giving an LLM partial control over the workflow. We also discussed different agentic AI design patterns and how to develop agents that leverage LLMs’ tool-calling abilities to solve complex tasks.

We explored how LangGraph streaming works and how to control what information is streamed back during execution. We discussed the difference between streaming state updates and partial streaming answer tokens, learned about the Command interface as a way to hand off execution to a specific node within or outside the current LangGraph workflow, looked at the LangGraph platform and its main capabilities, and discussed how to implement HIL with LangGraph. We discussed how a thread on LangGraph differs from a traditional Pythonic definition (a thread is somewhat similar to a conversation instance), and we learned how to add memory to our workflow per-thread and with cross-thread persistence. Finally, we learned how to expand beyond basic LLM applications and build robust, adaptive, and intelligent systems by leveraging the advanced capabilities of LangChain and LangGraph.

In the next chapter, we’ll take a look at how generative AI transforms the software engineering industry by assisting in code development and data analysis.

Questions

- Name at least three design patterns to consider when building generative AI agents.

- Explain the concept of “dynamic retrieval” in the context of agentic RAG.

- How can cooperation between agents improve the outputs of complex tasks? How can you increase the diversity of cooperating agents, and what impact on performance might it have?

- Describe examples of reaching consensus across multiple agents’ outputs.

- What are the two main ways to organize communication in a multi-agent system with LangGraph?

- Explain the differences between stream, astream, and astream_events in LangGraph.

- What is a command in LangGraph, and how is it related to handoffs?

- Explain the concept of a thread in the LangGraph platform. How is it different from Pythonic threads?

- Explain the core idea behind the Tree of Thoughts (ToT) technique. How is ToT related to the decomposition pattern?

- Describe the difference between short-term and long-term memory in the context of agentic systems.

Subscribe to our weekly newsletter

Subscribe to AI_Distilled, the go-to newsletter for AI professionals, researchers, and innovators, at https://packt.link/Q5UyU.

Chapter 7: Software Development and Data Analysis Agents

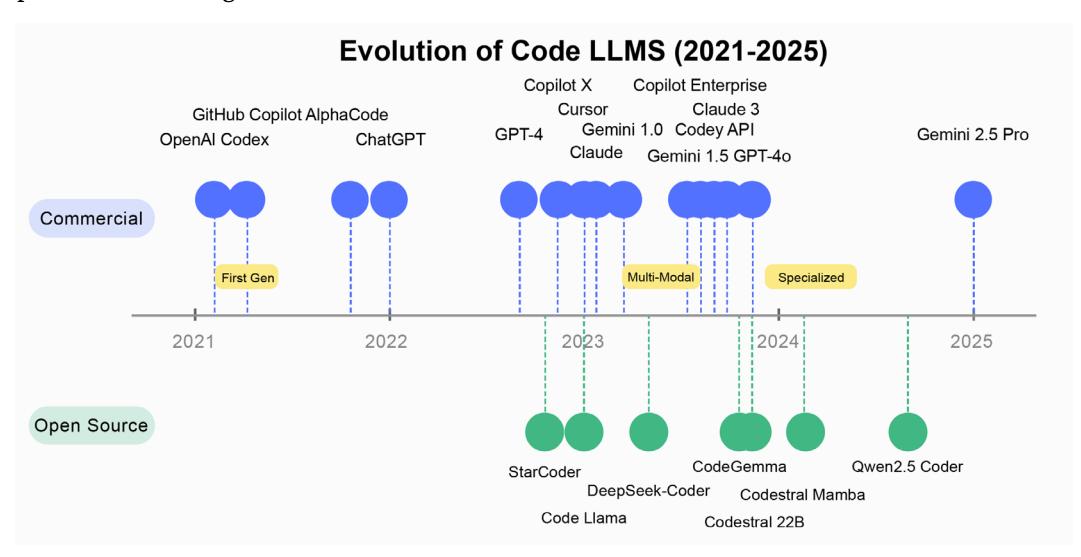

This chapter explores how natural language—our everyday English or whatever language you prefer to interact in with an LLM—has emerged as a powerful interface for programming, a paradigm shift that, when taken to its extreme, is called vibe coding. Instead of learning acquiring new programming languages or frameworks, developers can now articulate their intent in natural language, leaving it to advanced LLMs and frameworks such as LangChain to translate these ideas into robust, production-ready code. Moreover, while traditional programming languages remain essential for production systems, LLMs are creating new workflows that complement existing practices and potentially increase accessibility This evolution represents a significant shift from earlier attempts at code generation and automation.

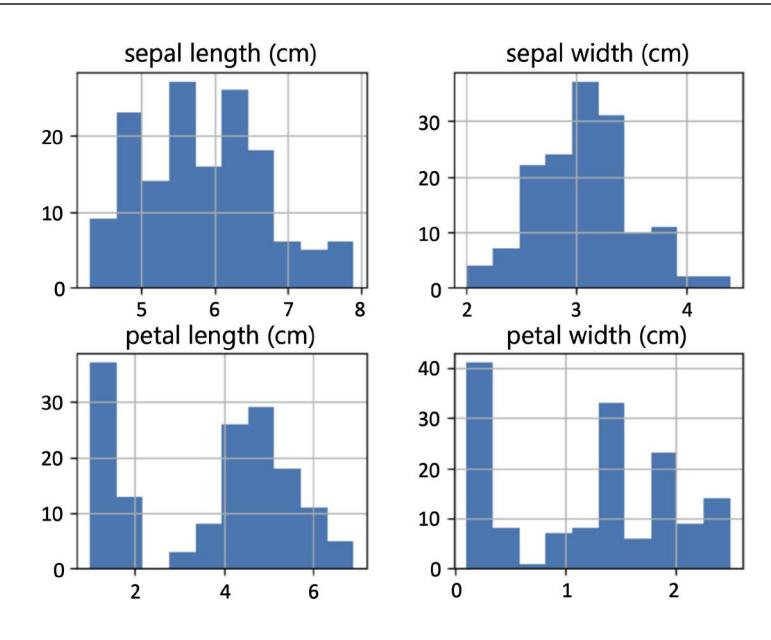

We’ll specifically discuss LLMs’ place in software development and the state of the art of performance, models, and applications. We’ll see how to use LLM chains and agents to help in code generation and data analysis, training ML models, and extracting predictions. We’ll cover writing code with LLMs, giving examples with different models be it on Google’s generative AI services, Hugging Face, or Anthropic. After this, we’ll move on to more advanced approaches with agents and RAG for documentation or a code repository.

We’ll also be applying LLM agents to data science: we’ll first train a model on a dataset, then we’ll analyze and visualize a dataset. Whether you’re a developer, a data scientist, or a technical decision-maker, this chapter will equip you with a clear understanding of how LLMs are reshaping software development and data analysis while maintaining the essential role of conventional programming languages.

The following topics will be covered in this chapter:

- LLMs in software development

- Writing code with LLMs

- Applying LLM agents for data science

LLMs in software development

The relationship between natural language and programming is undergoing a significant transformation. Traditional programming languages remain essential in software development—C++ and Rust for performance-critical applications, Java and C# for enterprise systems, and Python for rapid development, data analysis, and ML workflows. However, natural language, particularly English, now serves as a powerful interface to streamline software development and data science tasks, complementing rather than replacing these specialized programming tools.

Advanced AI assistants let you build software by simply staying “in the vibe” of what you want, without ever writing or even picturing a line of code. This style of development, known as vibe coding, was popularized by Andrej Karpathy in early 2025. Instead of framing tasks in programming terms or wrestling with syntax, you describe desired behaviors, user flows or outcomes in plain conversation. The model then orchestrates data structures, logic and integration behind the scenes. With vibe coding you don’t debug—you re-vibe. This means, you iterate by restating or refining requirements in natural language, and let the assistant reshape the system. The result is a pure, intuitive design-first workflow that completely abstracts away all coding details.

Tools such as Cursor, Windsurf (formerly Codeium), OpenHands, and Amazon Q Developer have emerged to support this development approach, each offering different capabilities for AI-assisted coding. In practice, these interfaces are democratizing software creation while freeing experienced engineers from repetitive tasks. However, balancing speed with code quality and security remains critical, especially for production systems.

The software development landscape has long sought to make programming more accessible through various abstraction layers. Early efforts included fourth-generation languages that aimed to simplify syntax, allowing developers to express logic with fewer lines of code. This evolution continued with modern low-code platforms, which introduced visual programming with prebuilt components to democratize application development beyond traditional coding experts. The latest and perhaps most transformative evolution features natural language programming through LLMs, which interpret human intentions expressed in plain language and translate them into functional code.