Data Analysis with LLMs

Text, tables, images and sound

Immanuel Trummer

Overview of Mini-Projects

Text, tables, images and sound

Immanuel Trummer

For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact

Special Sales Department Manning Publications Co. 20 Baldwin Road PO Box 761 Shelter Island, NY 11964 Email:orders@manning.com

©2025 by Manning Publications Co. All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps.

∞ Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources of our planet, Manning books are printed on paper that is at least 15 percent recycled and processed without the use of elemental chlorine.

Manning Publications Co. 20 Baldwin Road PO Box 761 Shelter Island, NY 11964

Development editor: Dustin Archibald Technical editor: Timothy Andrew Roberts Review editor: Kishor Rit Production editor: Keri Hales Copy editor: Tiffany Taylor Proofreader: Melody Dolab Technical proofreader: Karsten Strøbaek Typesetter: Ammar Taha Mohamedy Cover designer: Marija Tudor

ISBN 9781633437647 Printed in the United States of America

To my beloved family

preface

Using a large language model for the first time is an almost magical experience. I still remember my first chat with GPT-3 (nowadays an outdated model). For the first time, it seemed to me that my computer actually understood me and could react appropriately to a wide range of complex inputs. What’s more, I gave it various tasks, ranging from text analysis to coding, and the model was able to solve them based on my instructions alone! I was used to a world in which neural networks had to be trained for highly specialized tasks using large amounts of task-specific training data that had to be labeled tediously by hand, so this was an absolute game-changer that opened a world of new and exciting possibilities.

I was hooked, and since then I have dedicated a large portion of my professional career to exploiting the amazing capabilities of language models. Coming from a data-analysis background, it was natural for me to look at language models from a data-analysis perspective. How can we use language models to make the most of our data sets? Since I started using language models, a big change has been the types of data to which language models can be applied. Starting with text analysis, modern models have expanded their scope to multimodal inputs including images, audio, video, and text. This makes them an invaluable tool for any kind of data science, allowing users to build complex analysis pipelines with just a few lines of Python code along with instructions for the model in natural language describing the task to solve.

In my work, I regularly meet data scientists and data workers who could benefit tremendously from the possibilities offered by language models. However, getting into this new area can be challenging.

I had to rely on blog posts and online tutorials to piece together the information I needed to use language models for various data-analysis tasks. This is the book I wish I’d had when I started my journey. I hope you will find the book useful and enjoyable!

acknowledgments

Thanks to the editorial staff at Manning, as well as to the behind-the-scenes production staff who helped shepherd this book into its final format. In addition, thanks to Timothy Andrew Roberts, the technical editor for this book.

Also, thanks to all the reviewers: Al Pezewski, Amitabh Premraj Cheekoth, Anindita Nath, Anto Aravinth, Brendan O’Hara, Clemens Baader, Darrin Bishop, Dotan Cohen, Eli Mayost, George E. Carter, Giri Swaminathan, Harcharan Kabbay, Ikechukwu Okonkwo, Jaume Valls Altadil, Jeremy Chen, John Guthrie, John V. McCarthy, John Williams, Krzysztof J drzejewski, Lex Drennan, Marcio Francisco Nogueira, Marjorie Roswell, Marvin Schwarze, Paul Silisteanu, Rahul Jain, Robert Rozploch, Sumit Bhattacharyya, Swapna Yeleswarapu, Thiago Britto Borges, Todd Cook, Tony Holdroyd, Vatsal Desai, Vinoth Nageshwaran, and Walter Alexander Mata López. Your suggestions helped make this a better book.

about this book

This book was written to help developers build applications for multimodal data analysis using state-of-the-art language models. It introduces language models and the most important libraries for using them in Python. Via a series of mini projects, it showcases how to use language models to analyze text, tabular data, graph data, images, videos, and audio files. By discussing topics such as prompt engineering, fine-tuning, and advanced software frameworks, the book will enable you to quickly build complex data-analysis applications with language models that are effective and cost-efficient.

Who should read this book?

Whether you are a software developer, data scientist, or hobbyist interested in data analysis, this book is for you if you want to exploit the powerful abilities of large language models to perform various types of data analysis. Prior experience with language models is unnecessary, as the book covers all the basics. However, experience with Python is helpful, at least at a beginner’s level, as this book uses Python to interact with language models.

How this book is organized: A road map

This book has 10 chapters in three parts. Part 1 introduces language models and gives a first impression of their benefits for data analysis:

- Chapter 1 introduces language models and explains how they can be used for data analysis.

- Chapter 2 guides you through a chat with ChatGPT, illustrating the analysis of text and tabular data in the ChatGPT web interface.

Part 2 introduces OpenAI’s Python library and shows how to analyze various types of data using language models directly from Python:

- Chapter 3 introduces OpenAI’s Python library, enabling users to send requests to language models and configure their behavior in various ways.

- Chapter 4 shows how to use language models to process text data: for example, to classify text documents or extract specific information.

- Chapter 5 demonstrates how to build natural language query interfaces using language models, translating questions in natural language to formal queries referring to data tables or graphs.

- Chapter 6 describes how to use multimodal language models to process images or video data for tasks such as object detection, question-answering, and captioning.

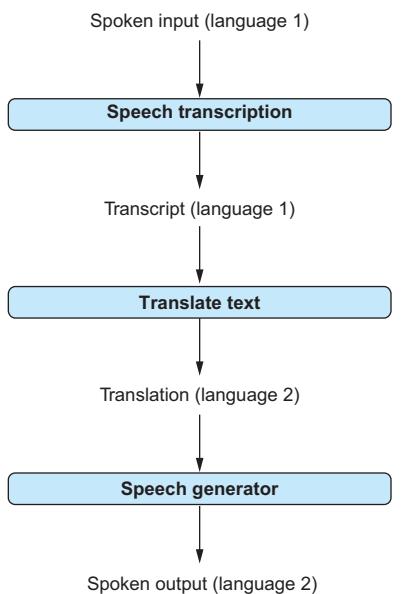

- Chapter 7 illustrates multiple use cases for language models in analyzing audio data: for instance, transcribing audio recordings, realizing voice query interfaces, or translating spoken input to other languages.

Part 3 covers advanced topics, enabling you to optimize your choice of models, configurations, and frameworks:

- Chapter 8 discusses different providers of large language models and gives a short overview of the models they offer and the corresponding Python libraries.

- Chapter 9 demonstrates methods that can be used to minimize processing fees and maximize output quality when working with language models, including optimizing model choices and parameter settings and fine-tuning.

- Chapter 10 discusses several software frameworks, particularly LangChain and LlamaIndex, that can be used to build complex applications on top of large language models with lower implementation overheads.

It is recommended that you start by reading chapter 1, which introduces important terms and concepts. You can skip chapter 2 if you have already used language models via web interfaces. Most of the remaining chapters are based on OpenAI’s Python library. It is therefore a good idea to read chapter 3 before diving into any later chapters. Chapters 4 to 7 focus on different data types and can be read in any order. Similarly, chapters 8 to 10 are independent, and you can study them in any order.

About the code

This book contains various code samples in numbered and unnumbered listings. All code in numbered listings is available for download from the book’s companion website at www.dataanalysiswithllms.com. Code, as well as suitable test data, is categorized by book chapter. Code files are named using the number of the corresponding listing in the book. The entire code and data repository can also be downloaded from the publisher’s website at www.manning.com/books/data-analysis-with-llms.

The source code is formatted in a fixed-width font like this to separate it from ordinary text. In many cases, the original source code has been reformatted; we’ve added line breaks and reworked indentation to accommodate the available page space in the book. Additionally, comments in the source code have often been removed from the listings when the code is described in the text. Code annotations accompany many of the listings, highlighting important concepts.

liveBook discussion forum

Purchase of Data Analysis with LLMs includes free access to liveBook, Manning’s online reading platform. Using liveBook’s exclusive discussion features, you can attach comments to the book globally or to specific sections or paragraphs. It’s a snap to make notes for yourself, ask and answer technical questions, and receive help from the author and other users. To access the forum, go to https://livebook.manning.com/book/ data-analysis-with-llms/discussion. You can also learn more about Manning’s forums and the rules of conduct at https://livebook.manning.com/discussion.

Manning’s commitment to our readers is to provide a venue where a meaningful dialogue between individual readers and between readers and the author can take place. It is not a commitment to any specific amount of participation on the part of the author, whose contribution to the forum remains voluntary (and unpaid). We suggest you try asking the author some challenging questions lest his interest stray! The forum and the archives of previous discussions will be accessible from the publisher’s website as long as the book is in print.

about the cover illustration

The figure on the cover of Data Analysis with LLMs, titled “Le Spéculateur,” or “The Speculator,”is taken from a book by Louis Curmer published in 1841. Each illustration is finely drawn and colored by hand.

In those days, it was easy to identify where people lived and what their trade or station in life was just by their dress. Manning celebrates the inventiveness and initiative of the computer business with book covers based on the rich diversity of regional culture centuries ago, brought back to life by pictures from collections such as this one.

Part 1 Introducing language models

So what are language models, exactly? And how can we use them for data analysis? This part of the book answers both those questions.

In chapter 1, we discuss the principles underlying language models and what makes them special. We also discuss all the different ways in which language models can be used for data analysis, covering options to use them directly on data as well as the possibility of using them as interfaces to more specialized data-analysis tools.

In chapter 2, we have a “chat” with ChatGPT: that is, we interact with a popular language model by OpenAI. We witness the flexibility of ChatGPT when performing a variety of tasks on text, ranging from text classification to extracting specific pieces of information from text based on a concise task description. We also see that ChatGPT does well when translating questions about data, formulated in natural language, to formal query languages such as SQL.

After reading this part, you should have a good understanding of what language models are and how you can use them for data analysis.

1 Analyzing data with large language models

This chapter covers

- An introduction to language models

- Data analysis with language models

- Using language models efficiently

Language models are powerful neural networks that can be used for various dataprocessing tasks. This chapter introduces language models and shows how and why to use them for data analysis.

1.1 What can language models do?

We will start this section with a little poem and an associated picture (figure 1.1) connecting the two main topics of this book, data analysis and large language models:

In the silent hum of the server’s light, Data flows through the veins of night. Rows and columns, a structured sea, With stories hidden, waiting to be free. Each number sings of pasts untold, Trends and truths in patterns bold. And here arrives a curious friend, A language model, eager to comprehend.

It listens close, with circuits keen, To turn raw facts into insight unseen. From scatter plots to sentences clear, Data’s language is all it can hear.

The figures dance, the texts reply, As code meets meaning under AI’s eye. They merge their worlds, a seamless blend, Where logic and language have no end.

For in this bond, both deep and wide, Data’s essence finds a guide. And in the neural net’s embrace, Data analysis gains a poetic grace.

Figure 1.1 Illustration by GPT-4o, connecting the topics “data analysis” and “large language models”

The poem and the picture were generated by GPT-4o (“o” for “omni”), a language model by OpenAI that processes multimodal data, based solely on the instructions “Write a poem connecting data analysis and large language models!” followed by “Now draw a corresponding picture!” Both the picture and the poem seem to relate to the requested topics. Although the poem may not win any literature awards, its text is coherent, it is structured as we would expect from a poem, and it rhymes! Perhaps most importantly, all it took to generate the poem and the picture were short instructions expressed in natural language. Whereas prior machine learning methods relied on large amounts of task-specific training data, this requirement is now obsolete. And, of course, the task is specific enough to convince us that the language model is not copying existing solutions from the web and generates original content instead.

Writing poems and generating pictures are only two of many possible use cases (albeit possibly the most entertaining ones). Models like GPT-4o can solve various tasks, such as summarizing text documents, writing program code, and answering questions about pictures. In this book, you will learn how to use language models to accomplish a plethora of data-analysis tasks ranging from extracting information from large collections of text documents to writing code for data analysis. After reading this book, you will be able to quickly build data-analysis pipelines that are based on language models and extract useful insights from a variety of data formats.

What does GPT stand for?

GPT stands for Generative Pretrained Transformer.

Generative: GPT is a large neural network that generates content (e.g., text or code) in response to input text. This fact distinguishes it from other neural networks that, for example, can only classify input text into a fixed set of predefined categories.

Pretrained: GPT is pretrained on large amounts of data, solving generic tasks such as predicting the next word in text. Typically, the pretraining task is different from the tasks it is primarily used for. However, pretraining helps it learn more specialized tasks faster.

The Transformer is a new neural network architecture that is particularly useful for learning tasks that involve variable-length input or output (such as text documents). It is currently the dominant architecture for generative AI approaches.

1.2 What you will learn

This book is about using language models for data analysis. We can categorize dataanalysis tasks by the type of data we’re analyzing and by the type of analysis. This book covers a wide range of data types and analysis tasks.

We focus on multimodal data analysis: that is, we use language models to analyze various types of data. More precisely, we cover the following data types in this book:

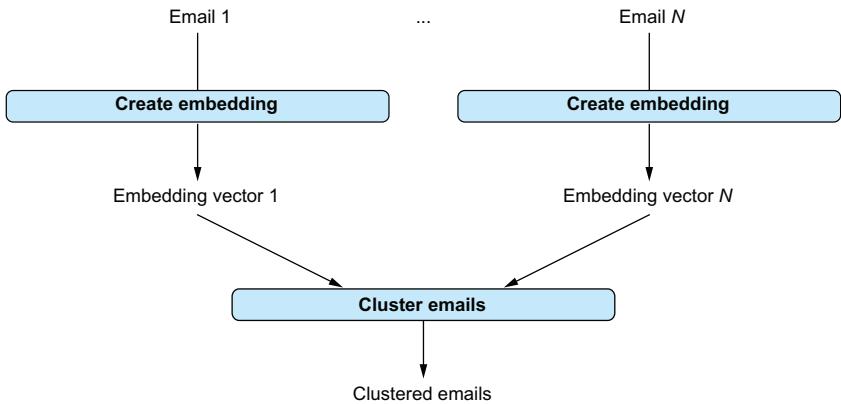

Text—Think of emails, newspaper articles, and comments on a web forum. Text data is ubiquitous and contains valuable information. In this book, we will see how to use language models to automatically classify text documents based on their content, how to extract specific pieces of information from text, and how to group text documents about related topics.

- Images—A picture is worth a thousand words, as they say. Images help us to understand complex concepts, capture fond memories of our last holiday, and illustrate current events. Language models can easily extract information from pictures. For instance, we will use language models to answer arbitrary questions about images or identify people who appear in pictures based on a database of profiles.

- Videos—A large percentage of the data on the web is video data. Even on your smartphone, video data is probably taking up a significant part of your phone’s total storage capacity. In this book, we will see that language models can be applied to analyze videos as well: for instance, to generate suitable video titles based on the video content.

- Audio—To many people, speech is the most natural form of communication. Audio recordings capture speeches and conversations and complement videos. In this book, we will see how to transcribe audio recordings, how to translate spoken language into other languages, and how to build a query interface that answers spoken questions about data.

- Tables—Imagine a data set containing information about customers. It is natural to represent that data as a table, featuring columns for the customer’s address, phone number, and credit card information, while different rows store information about different customers. In this book, we will see how to use language models to write code that performs complex operations on such tabular data.

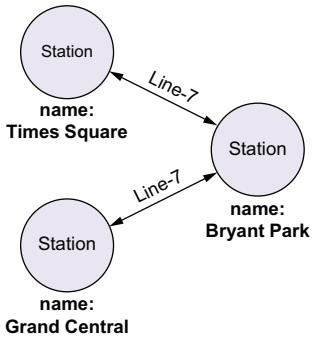

- Graphs—From social networks to metro networks, many data sets are conveniently represented as graphs, modeling entities (such as people or metro stations) and their connections (representing friendships or metro connections). We will see how we can use language models to generate code that analyzes large graphs in various ways.

Structured vs. unstructured data

Data types are often categorized into two groups: structured and unstructured data. Structured data has a structure that facilitates efficient data processing via specialized tools. Examples of structured data include tables and graph data. For such data, we typically use the language model as an interface to specialized dataprocessing tools. Unstructured data, including text, images, videos, and audio files, does not have a structure that can be easily exploited for efficient processing. So, for unstructured data, we typically need to use the language model directly on the data.

For most of this book, we will use OpenAI models via OpenAI’s Python library. Toward the end of the book, we will also discuss language models from other providers. As libraries from different providers tend to offer similar functionality, getting used to other models shouldn’t take long.

Typically, using language models incurs monetary fees proportional to the amount of data being processed. The fees depend on the language model used, the model configuration, and the way in which the input to the language model is formulated. In this book, not only will you learn to solve various data-analysis tasks via language models, but we will discuss how to do so with minimal costs.

1.3 How to use language models

State-of-the-art language models are used via a method called prompting. We discuss prompting next, followed by the interfaces we can use for prompting.

1.3.1 Prompting

Until a few years ago, machine learning models were trained for one specific task. For instance, we might have a model trained to classify the text of a review as either “positive” (i.e., the review author is satisfied) or “negative” (i.e., the author is dissatisfied). To use that model, we only need the review text as input. There’s no need to describe the task (classifying the review) as part of the input because the model has been specialized to do that task and that task only.

This has changed in recent years with the emergence of large language models such as GPT. Such models are no longer trained for specific tasks. Instead, they are intended to serve as universal task solvers that can, in principle, solve any task the user desires. When using such a model, it is up to the user to describe to the model in precise terms what the model should do.

The prompt is the input to the language model. The prompt can contain multimodal data: for example, text and images. At a minimum, to get the language model to solve a specific task, the prompt should contain a text instructing the model on what to do. Beyond those instructions, the prompt should contain all relevant context. For instance, if the instructions ask the model to determine whether a car is visible in a picture, the prompt must also contain the picture. The instructions in the prompt should be specific and clarify, for instance, the expected output format. For example, if we want the model to output “1” if a car is present and “0” otherwise, enabling us to easily add the numbers generated by the model to count cars, we need to explicitly clarify that in the prompt (otherwise, the model might answer “Yes, there is a car in the picture,” which makes it harder to count in the post-processing stage). Besides instructions and context, the prompt may contain examples to help the language model understand the task.

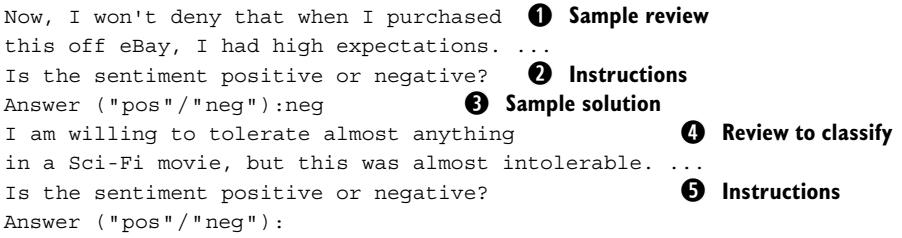

Few-shot vs. zero-shot learning

We can help the language model better understand a task by providing examples as part of the prompt. Those examples are similar to the task we want the model

to solve and specify the input and desired output. This approach is sometimes called few-shot learning, as the model learns the task based on a few samples. On the other hand, we can use zero-shot learning, meaning the model learns the task without any (zero) samples based only on the task description.

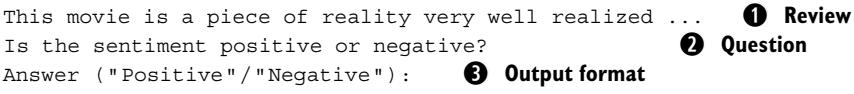

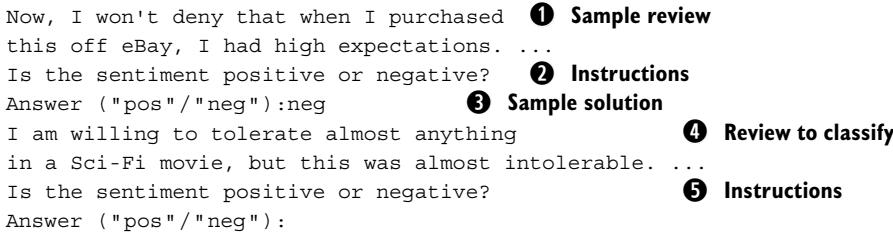

1.3.2 Example prompt

Let’s illustrate prompts with an example. A classical use case for language models is analyzing product reviews to determine the sentiment underlying the review: whether the review is positive (i.e., the customer recommends the product) or negative (i.e., the customer is unhappy with the product). Assume that we have a review to classify as positive or negative. If we have a specialized model trained for review classification for the specific product category we’re interested in, all it takes is to send our review to that model. As the model is specialized to the target problem, it already “knows” what to do with the input and the required output format. However, because we use large language models, we have to provide a bit more context along with the review.

Our prompt should contain all relevant information for the model, describing the task to solve and all context. In the example scenario, we probably want to include the following pieces of information:

- Review text—The text of the review we want to classify.

- Task description—A description of the task to solve.

- Output formats—What is the required output format?

- Relevant context—For example, are we reviewing laptops or lawn mowers?

Optionally, we can include a few example reviews with their associated correct classification. This may help the model classify reviews more accurately.

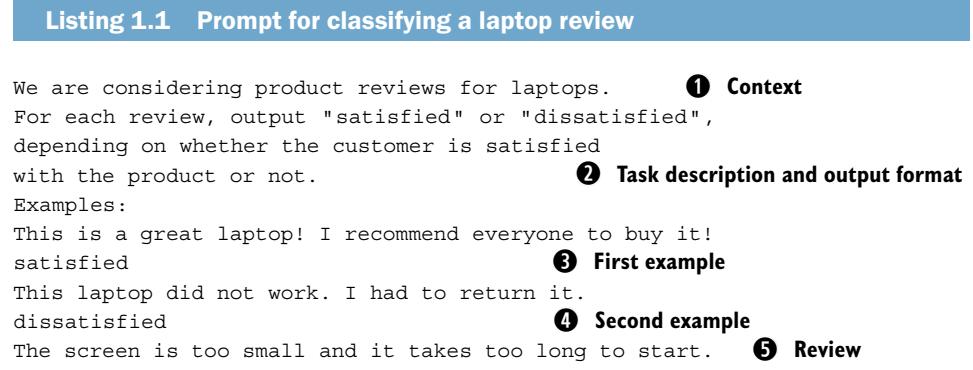

The following prompt includes all the relevant pieces of information for an example review.

This prompt starts with a description of relevant context (①). Customers are reviewing laptops, so, for example, if they label items as “heavy,” that’s probably a bad sign (unlike analyzing reviews for, let’s say, steamrollers). The task description (②) tells

the model what to do with the reviews and specifies the desired output format (output “satisfied” or “dissatisfied”) as well. Next, we have a list of examples. Strictly speaking, adding examples in the prompt may not be necessary for this simple task. However, adding examples in the prompt can sometimes increase the accuracy of the output. Here, we add two example reviews (③and ④), together with the desired output for those reviews. Finally, we add the review (⑤) that we want the model to classify. Given the preceding prompt, state-of-the-art language models are likely to output “dissatisfied” when sent this prompt as input. That, of course, is indeed the desired output.

1.3.3 Interfaces

So how can we send prompts to a language model? Providers such as OpenAI typically offer web interfaces, enabling users to send single prompts to their language models. In chapter 2, we will use OpenAI’s web interface to send prompts instructing the model to analyze text or to write code for data processing.

The web interface works well as long as we send only a few prompts. However, analyzing a large collection of text documents would require sending many prompts (one per text document). Clearly, we don’t want to enter thousands of prompts by hand. This is where OpenAI’s Python library comes in handy. Using this library enables us to send prompts to OpenAI’s models directly from Python and to process the model’s answer in Python. This enables us to automate data loading, prompt generation, and any kind of post-processing we need to do on the model’s answers. It also allows us to integrate language models with other useful tools: for example, to use the language model to write code for data processing and immediately execute that code using other tools.

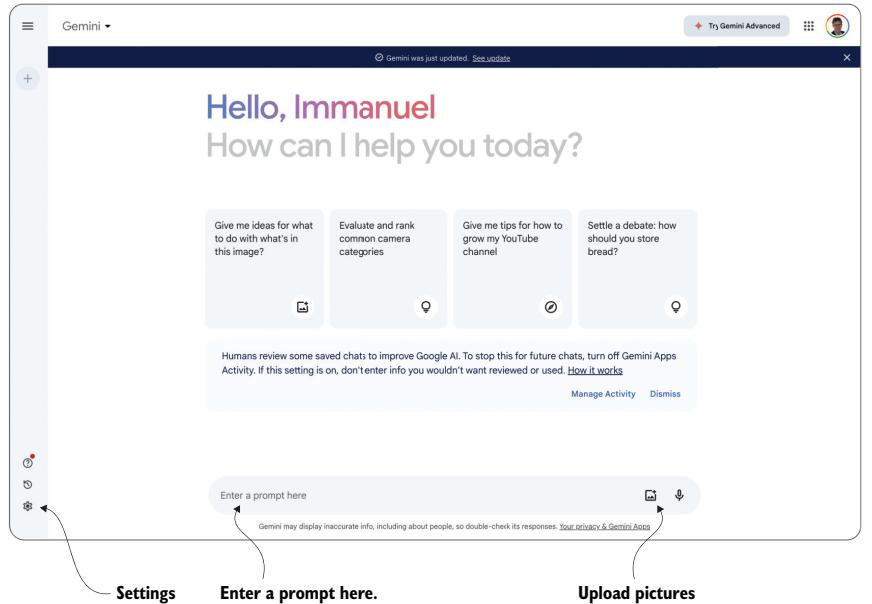

We will review OpenAI’s Python library in chapter 3. We will use this library throughout most of this book. Other providers of language models, including Google, Anthropic, and Cohere, offer similar Python libraries to send prompts to their language models. We will discuss those libraries in more detail in chapter 8.

1.4 Using language models for data analysis

So how do we use language models specifically for data analysis? This book considers two possibilities. First, we can use the language model directly on the data. This means the language model receives the data we want to analyze as part of the prompt (along with instructions on which analysis to perform). Second, we can use the language model indirectly to analyze data. Here, the language model does not directly “see” the data: that is, we do not include the data in its entirety in the prompt. Instead, we use the language model to write code for data processing, executed in specialized data-processing tools. Which approach to use depends on the data properties and the task. Let’s have a closer look at both methods.

1.4.1 Using language models directly on data

The most natural approach to analyzing data with language models is to put the data directly into the prompt. This is what we did in section 1.3.2: to analyze a review, we include the review text in the prompt, along with instructions on what to do with the text. We can use the same approach for other types of data besides text. For example, when using multimodal models such as GPT-4o, we can simply include the pictures to analyze, together with analysis instructions, in the prompt.

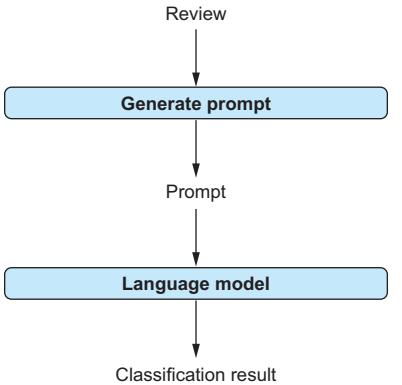

Typically, we do not want to analyze a single picture or review but a whole collection of them. For instance, assume that we want to classify an entire collection of reviews, determining for each of them whether the review is positive or negative. In such cases, we generally take the following approach, implemented in Python using OpenAI’s Python library (or an equivalent library allowing users to send prompts to other providers’ models). We load the reviews to classify and generate one prompt for each review. Then, we send those prompts to the language model, extract the classification result from the answer generated by the model for each review, and save the results in a file on disk.

In this scenario, we want to solve the same task (review classification) for multiple text documents (i.e., reviews). As you can imagine, the prompts for different reviews should therefore bear some similarity. Although the text of the review to classify changes each time, the task description and other parts of the prompt remain the same.

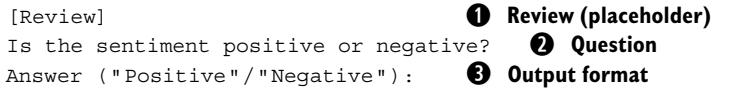

To generate prompts in Python, we use a prompt template. A prompt template specifies a prompt associated with a specific task to solve. In our example, we would use a prompt template to classify reviews as positive or negative. A prompt template contains placeholders to represent parts of the prompt that change depending on the input data. Considering our prompt template for review classification, we should probably include a placeholder for the review text. Then, when generating prompts in Python, we replace that placeholder with the text of the current review to classify.

For instance, we can use the following prompt template to classify reviews.

Listing 1.2 Prompt template for classifying laptop reviews

We are considering product reviews for laptops. ① **Context**

For each review, output ''satisfied'' or ''dissatisfied'',

depending on whether the customer is satisfied

with the product or not. ② **Task description and output format**

Examples:

This is a great laptop! I recommend everyone to buy it!

satisfied ③ **First example**

This laptop did not work. I had to return it.

dissatisfied ④ **Second example**

[ReviewText] ⑤ **Placeholder for review text**This prompt template generalizes the prompt we saw for classifying one specific review (have a look at listing 1.1 in section 1.3.2). Again, we provide context (the fact that we’re classifying laptop reviews) (①) and instructions describing the task to solve, as well as the output format (②). We also provide a few example reviews with associated classification results (③and④). Although the review to classify changes, depending on the input, we do not need to change the example reviews. Those reviews merely illustrate what task the language model needs to solve. Finally (⑤), we have a placeholder for the review text. When iterating over different reviews, we generate a prompt for each of them by substituting the review text for this placeholder.

The example prompt template has only a single placeholder. In general, several parts of the prompt may change depending on the input data. If so, we introduce placeholders for each of those parts and substitute all of them to generate prompts.

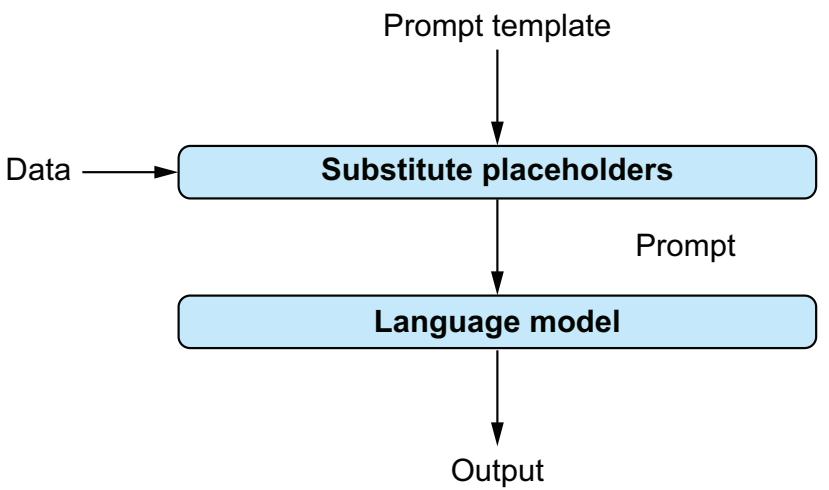

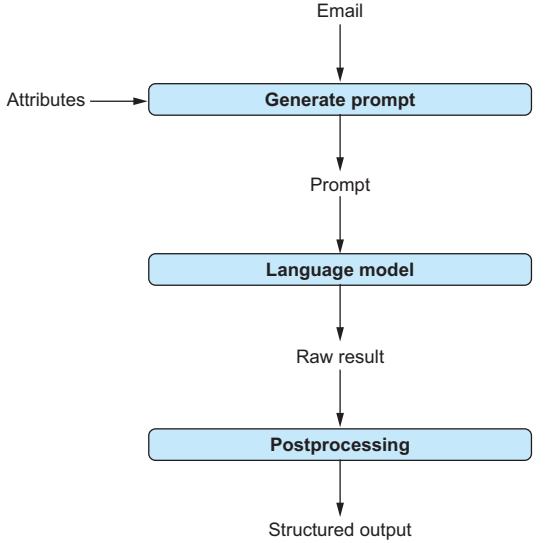

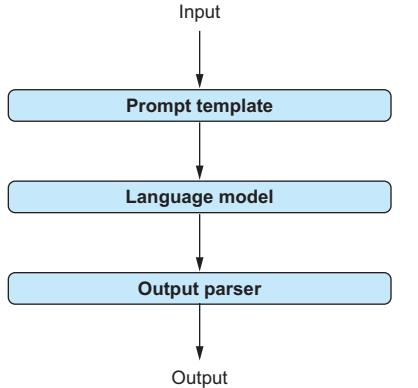

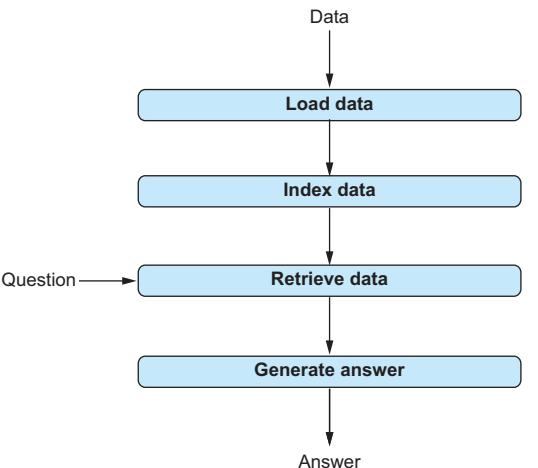

Figure 1.2 summarizes how we use prompt templates when analyzing data directly with language models. For each data item (e.g., a review to classify), we substitute for placeholders in the prompt template to generate a prompt (we can also say that we instantiate a prompt). We then send this prompt to the language model to solve the data-analysis task we’re interested in.

Figure 1.2 Using language models directly for data analysis. A prompt template describes the analysis task. It contains placeholders that are replaced with data to analyze. After substituting for the placeholders, the resulting prompt is submitted to the language model to produce output.

1.4.2 Data analysis via external tools

Putting data directly into the prompt is not always the most efficient approach. For some types of data, specialized tools are available that process certain operations on that data very efficiently. In those cases, it is often more efficient to use the language model to write code for data processing (rather than analyzing the data directly). The code generated by the language model can then be executed by the specialized tool.

We will apply this approach to structured data. For structured data such as data tables and graphs, specialized data-processing tools are available that support a wide range of analysis operations. Those operations, such as filtering and aggregating data, can be performed very efficiently on structured data. Even if it was possible to perform the same operations reliably with language models (which is not the case), we would not want to do it because the fees we pay to providers like OpenAI are proportional to the size of the input data. Processing large structured data sets (such as tables with millions of rows) using language models is prohibitively expensive. In the following chapters, we discuss the following types of tools for structured data processing:

- Relational database management system—Stores and processes relational data: that is, collections of data tables. Most relational database management systems support SQL, the Structured Query Language. We will use language models to translate questions about data to queries in SQL.

- Graph data management system—Handles graph data representing entities and the relationships between them. Different graph data management systems support different query languages. In chapter 5, we see how to use language models to translate questions about data into queries in the Cypher language, supported by the Neo4j graph data management system.

For instance, let’s assume we want to enable lay users to analyze a relational database: that is, a collection of data tables. Perhaps a table contains the results of a survey, and we want to let users aggregate answers from different groups of respondents. The survey results are stored in a relational database management system (the most suitable type of tool for this data type). Using language models, we can enable users to ask questions about the data in natural language (that is, in plain English). The language model takes care of translating those questions into formal queries. More precisely, given that the data is stored in a relational database management system, we want to translate those questions into SQL queries.

Again, we introduce a prompt template for the task we’re interested in. Here, we’re interested in text-to-SQL translation, meaning we want to use the language model to translate questions in natural language to SQL queries. Although the task (text-to-SQL translation) and the data (the database containing survey results) remain fixed, the user’s questions will change over time. Therefore, we introduce a placeholder for the user question in our prompt template. In principle, the following prompt template should enable us to translate questions on our survey data into SQL queries.

Listing 1.3 Prompt template for translating questions to SQL

Database: ① Description of database

The database contains the results of a survey, stored

in a table called ''SurveyResults'' with the following

columns: ...

Question: ② Question to translate

[Question]

Translate the question to SQL! ③ Task descriptionFirst the prompt describes the structure of our data (①). This is required to enable the system to write correct queries (e.g., queries that refer to the correct names of tables and columns in those tables). The description in the example template is abbreviated. We will see how to accurately describe the structure of a relational database in later chapters. Next, the prompt template contains the question to translate (②). This is a placeholder to enable users to ask different questions using the same prompt template. Finally, the prompt template contains a (concise) task description (③): we want to translate questions to SQL queries!

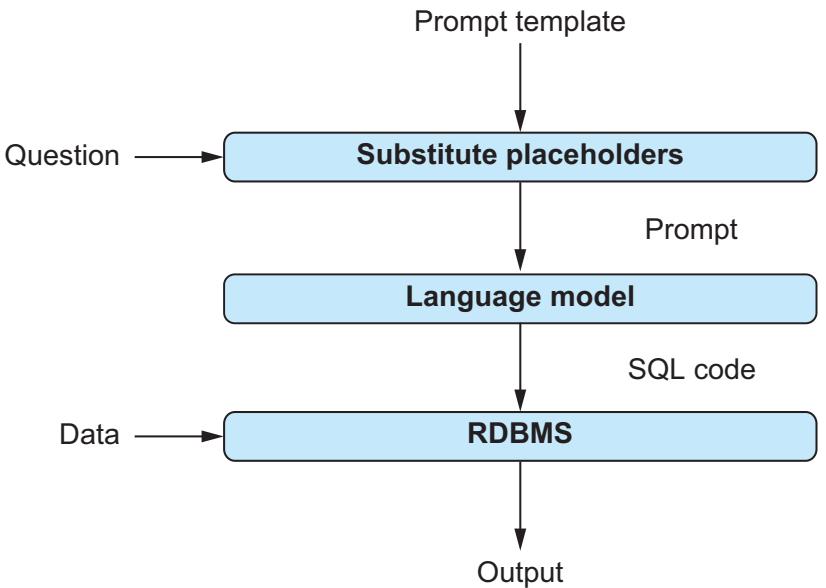

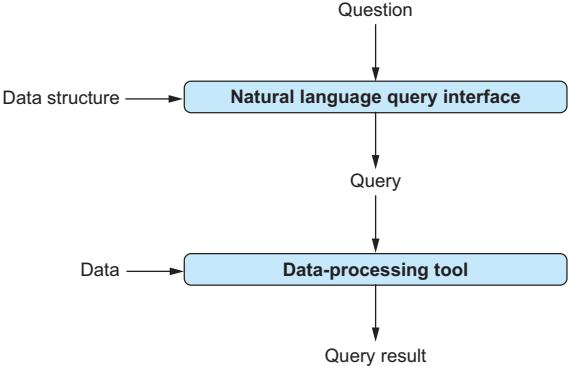

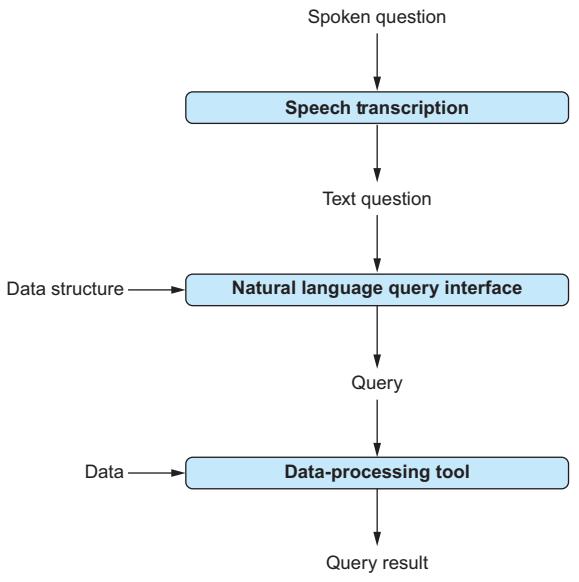

Figure 1.3 summarizes the process for text-to-SQL translation. Given a corresponding prompt template, we substitute the user question for the placeholder, translate the question to an SQL query via the language model, and finally execute the query in a relational database management system. The query result is shown to the user.

Figure 1.3 Using language models indirectly to build a natural language interface for tabular data. The prompt template contains placeholders for questions about data. After substituting for placeholders, the resulting prompt is used as input for the language model. The model translates the question into an SQL query that is executed via a relational database management system.

1.5 Minimizing costs

When processing data with language models, we typically pay fees to a model provider. The larger the amount of data we process, the higher the fees. Before analyzing large amounts of data, we want to make sure we’re not overpaying. For instance, using larger language models (the neural network implementing the language model has

more “neurons,” so to speak) is often more expensive, but for complex tasks, it may pay off with higher-quality results. But if the large model is not needed to solve our current task well, we should save the money and use a smaller model. Fortunately, there are quite a few ways in which we can optimize the tradeoff between processing costs and result quality. We discuss the different options next. All of them are covered in more detail in later book chapters.

1.5.1 Picking the best model

OpenAI offers many different versions of the GPT model, ranging from relatively small models to giant models like GPT-4. At the time of writing, using GPT-4 is over 100 times more expensive, per input token, than using the cheapest version.

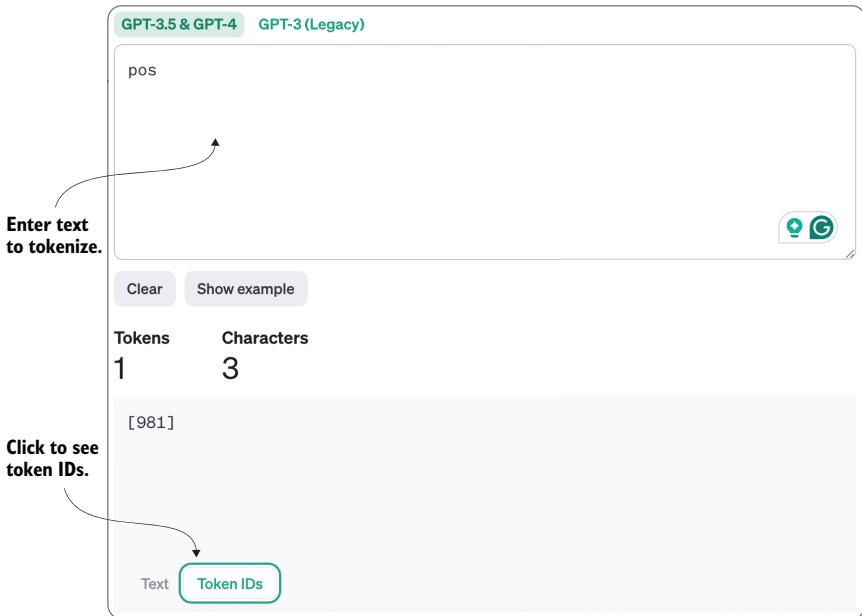

What are tokens?

The processing fees for language models like GPT-4 are proportional to the number of tokens read and generated by the model. A token is the atomic unit at which the language model represents text internally. Typically, one token corresponds to approximately four characters.

Given those price differences, it is clearly a good idea to think hard about which specific model satisfies our needs. For instance, for a simple task like review classification, we probably don’t need to use OpenAI’s most expensive model. But if we want to use the model to write complex code for data processing, using the most expensive version may be worth it.

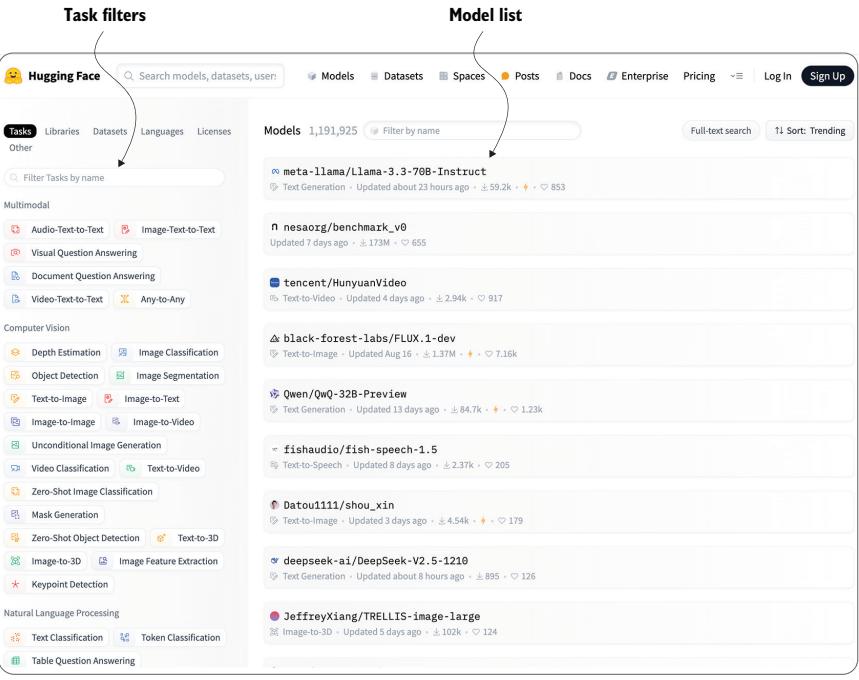

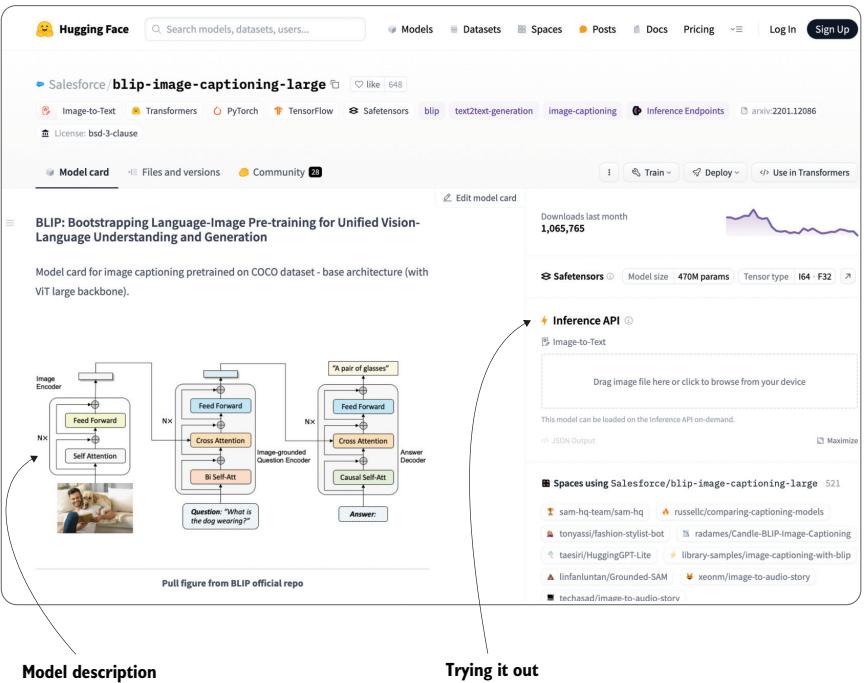

Of course, we don’t need to restrict ourselves to models offered by OpenAI. Language models are offered by many providers, including Google, Anthropic, and Cohere. In principle, we might even choose to host our own model, using models that are publicly available: for example, on the Hugging Face platform. Some of those models are generic (similar to OpenAI’s GPT models), whereas others are trained for more specific tasks. If we happen to be interested in tasks for which specialized models exist, we may want to use one of them. We discuss models from other providers in more detail in chapter 8.

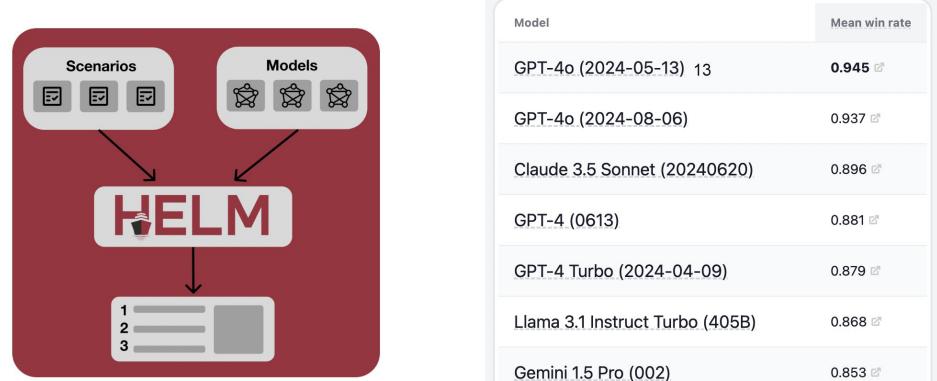

Picking the right model for your needs is not an easy task. As a first step, you might want to look at benchmarks such as Stanford’s Holistic Evaluation of Language Models (HELM, https://crfm.stanford.edu/helm/; see figure 1.4). This benchmark compares the quality of results produced by different language models on different types of tasks. Ultimately, you may have to try a few models on your task and a data sample to ensure that you choose the optimal one. In chapter 9, we will see how to benchmark different models systematically for an example task.

Figure 1.4 Holistic Evaluation of Language Models (HELM): comparing language models offered by different providers according to various metrics

1.5.2 Optimally configuring models

The OpenAI Python library offers a variety of tuning parameters to influence model behavior. For instance, we can influence the probability that certain words appear in the output of a model. This can be useful, for instance, when classifying reviews. If the output of the model should be one of only a few possible choices (such as “positive” and “negative”), it makes sense to restrict possible outputs to those choices. That way, we avoid cases in which the model generates output that does not correspond to any of the class names. To take another example, we can fine-tune the criteria used to decide when the model stops generating output. For instance, if we know that the output should consist of a single token (e.g., the name of a class when classifying reviews), we can explicitly limit the output length to a single token. This prevents the model from generating more output than necessary (saving us money in the process, as costs depend on the amount of output generated).

We will discuss those and many other tuning parameters in more detail in chapter 3. In chapter 9, we will see how to use those tuning parameters to get better performance from our language models.

Another option to configure models is to fine-tune them. This means, essentially, that we’re creating our own variant of an existing model. By training the model with a small amount of task-specific training data, we get a model that potentially performs better at our task than the vanilla version. For instance, if we want to classify reviews, we might train the model with a few hundred example reviews and associated classification results. This may enable us to use a much smaller and cheaper model, fine-tuned for our specific task, that performs as well on this task as a much larger model that has not been fine-tuned.

Of course, fine-tuning also costs money, and it may not be immediately clear whether it is worth it for a specific task. We discuss fine-tuning and the associated tradeoffs in more detail in chapter 9.

1.5.3 Prompt engineering

The prompt template can significantly affect the quality of the results produced by the language model. A good prompt template clearly specifies the task to solve and provides all relevant context. We will see how to map various tasks to suitable prompt templates throughout the following chapters, covering a variety of data types. After working through those examples, you should be able to design your own prompt templates for novel tasks, following the same principles.

Similar to the model choice, it can be hard to pick the best prompt template for a given task without doing any testing. In chapter 9, we will test prompt templates in an example scenario and illustrate how different prompt templates lead to different outcomes. In some cases, investing a little time in finding the best prompt template may enable you to get satisfactory performance with fairly cheap models (whereas working with the unoptimized prompt template may make a more expensive model necessary).

Where to get prompt templates

Finding a good prompt template for a new task may take some time. If you do not want to spend that time, have somebody else do it for you! More precisely, you can find platforms on the web that enable users to buy and sell prompt templates. One of them is PromptBase (https://promptbase.com). Say you want to translate English questions into SQL queries. By entering corresponding keywords, you will find not one but multiple alternative prompt templates on that platform. If the prompt template seems like a good match based on the associated description, you can buy it and use it for your data-analysis needs.

1.6 Advanced software frameworks and agents

Throughout most of this book, we will use OpenAI’s Python library and similar libraries from other providers. For instance, these libraries enable you to send prompts to language models and receive the models’ answers. Although they are entirely sufficient for many use cases, you may want to consider more advanced software frameworks when developing complex applications that are based on language models.

In this book, we discuss two advanced software frameworks for working with language models: LangChain (https://langchain.com) and LlamaIndex (www.llamain dex.ai). Both make it easier to develop Python applications for data analysis with language models.

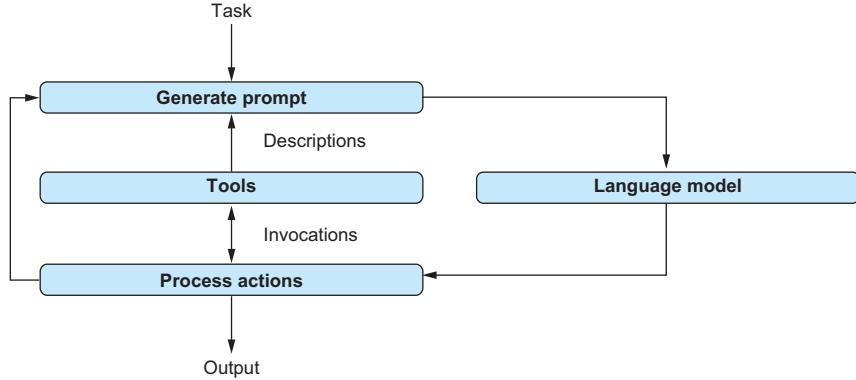

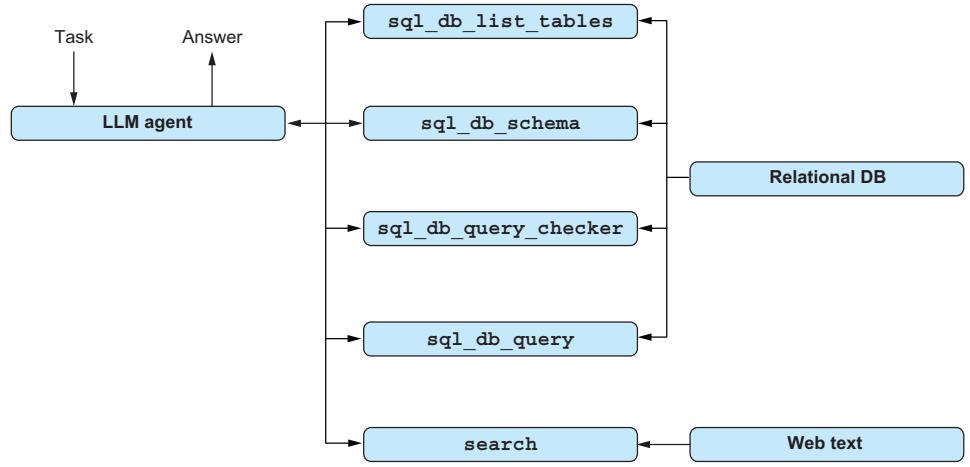

Besides many other features, these frameworks make it easy to create agents that use language models. This approach is useful for complex data-analysis tasks requiring, for instance, combining data from multiple sources. For most of this book, we solve data-analysis tasks with a single invocation of the language model, whether it is analyzing a text document or translating a question about data to a formal query. If the task requires multiple steps, such as performing preprocessing before calling the language model or post-processing on the model’s answer, we must hard-code the corresponding processing logic.

This approach works as long as we can reliably predict the sequence of steps required for data processing. However, in some cases, it can be difficult to predict which steps are required. For instance, we may get questions from users that refer either to a text document or to a relational database. So, depending on the question, we need to either write an SQL query or extract information from text documents. Or perhaps we might need information from both the text and the relational database, extracting information related to the question from the text and then using the information we obtain to formulate an SQL query.

In such cases, it is not possible to hard-code all possible sequences of steps in advance. Instead, we want to design an approach that is flexible enough to decide independently what step is required next. This can be done using agents and language models. With this approach, the language model is used to decompose complex analysis tasks into subproblems. Furthermore, the language model may choose to invoke tools: arbitrary functions whose interfaces are described in natural language. Such tools can, for instance, encapsulate the invocation of an SQL query on a relational database. After invoking a corresponding tool, the language model is given access to the invocation result (e.g., the query result) and can use that result to plan the next steps. We will see how to use agents to solve complex data-analysis tasks where it is unclear, a priori, which data sources and processing methods are required to solve them.

Summary

- Language models can solve novel tasks without specialized training.

- The prompt is the input to the language model.

- Prompts may combine text with other types of data, such as images.

- A prompt contains a task description, context, and (optionally) examples.

- Language models can analyze certain types of data directly.

- When analyzing data directly, the data must appear in the prompt.

- Prompt templates contain placeholders: for example, to represent data items.

- By substituting for placeholders in a prompt template, we obtain a prompt.

- Language models can also help to analyze data via external tools.

- Language models can instruct other tools on how to process data.

- Models are available in many different sizes with significant cost differences.

- Models can be configured using various configuration parameters.

- LangChain and LlamaIndex help to develop complex applications.

- Agents use language models to solve complex problems.

2 Chatting with ChatGPT

This chapter covers

- Accessing the ChatGPT web interface

- Using ChatGPT directly for data processing

- Using ChatGPT indirectly for data processing

Time to meet ChatGPT! In this chapter, we will have a chat with ChatGPT and start using it for data analysis. If you have never used ChatGPT, this chapter will teach you how to access it and give you a first impression of its capabilities (as well as its limitations). If you have used ChatGPT but have not yet done so for data analysis, this chapter will show you some of the many ways you can exploit ChatGPT in this context.

We will first discuss a web interface that will give you access to OpenAI’s ChatGPT. We will go over the OpenAI registration process, discuss the main functions offered by the interface, and use it to have a first dialogue with ChatGPT. After that, we will start using ChatGPT to analyze data in a few example scenarios. We will see two different ways to exploit ChatGPT for data analysis: directly and indirectly. When using ChatGPT directly, we have it do the actual data processing given data and a task description as input. This works for data types that ChatGPT processes natively (such as text data).

On the other hand, we can also use ChatGPT to analyze data indirectly. Here, ChatGPT merely serves as a translator, translating descriptions of analysis tasks into formal languages that are understood by external data-processing tools. The actual data processing is then handled by those external tools. In this chapter, you will see that ChatGPT is useful in both scenarios.

2.1 Accessing the web interface

Open your web browser, and type https://chat.openai.com/ into the address bar. You will create an OpenAI account that enables you to use ChatGPT. If you already have an account, you can skip the following steps, log in to your account, and proceed with the next section.

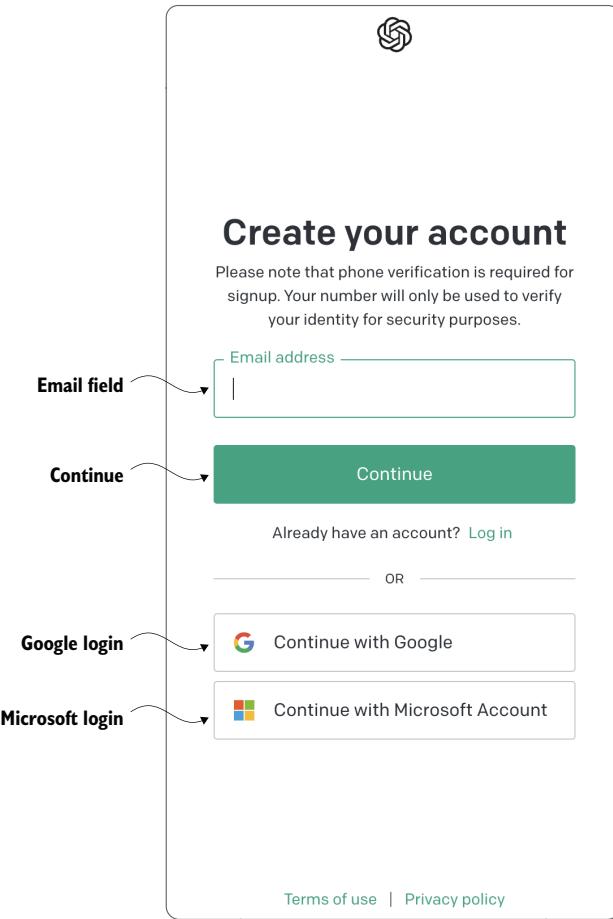

To create an account, click the Signup button. This brings you to the screen shown in figure 2.1.

Figure 2.1 Signup page for an OpenAI account. Enter your email address, and click the Continue button, or sign up using a Google account or a Microsoft account.

You have several options when signing up for an OpenAI account:

- Sign up using a Google account (by clicking Continue with Google).

- Sign up using a Microsoft account (by clicking Continue with Microsoft Account).

- Sign up with an arbitrary email address by entering that address into the Email Address field and then clicking Continue. After that, follow the instructions given on the screen.

After creating your account using any of these options, log in to your newly created account, and continue with the steps outlined in the next section.

OpenAI subscriptions

OpenAI offers different types of accounts; some are free, and others come with a monthly fee. For the following examples, a free account is sufficient. You may still choose to sign up for the paid subscription to gain access to more models and get faster answers from ChatGPT. Depending on which subscription you choose, your screens may look slightly different from the screenshots in this chapter.

2.2 Making introductions

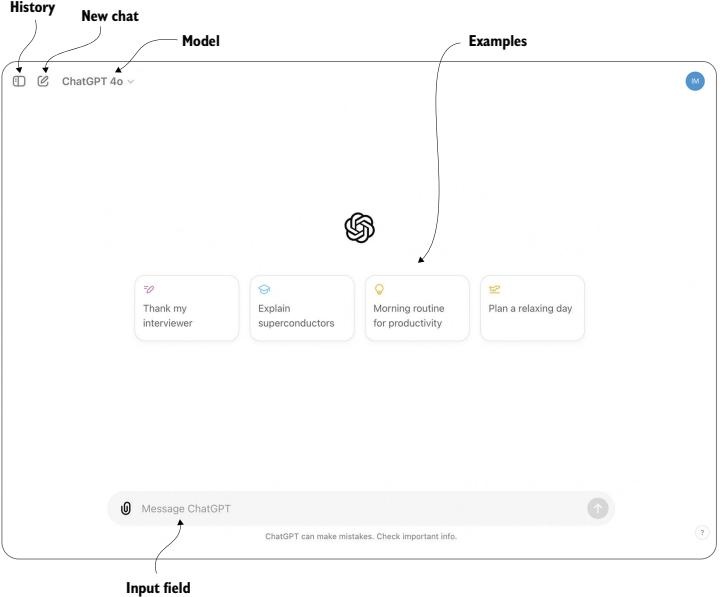

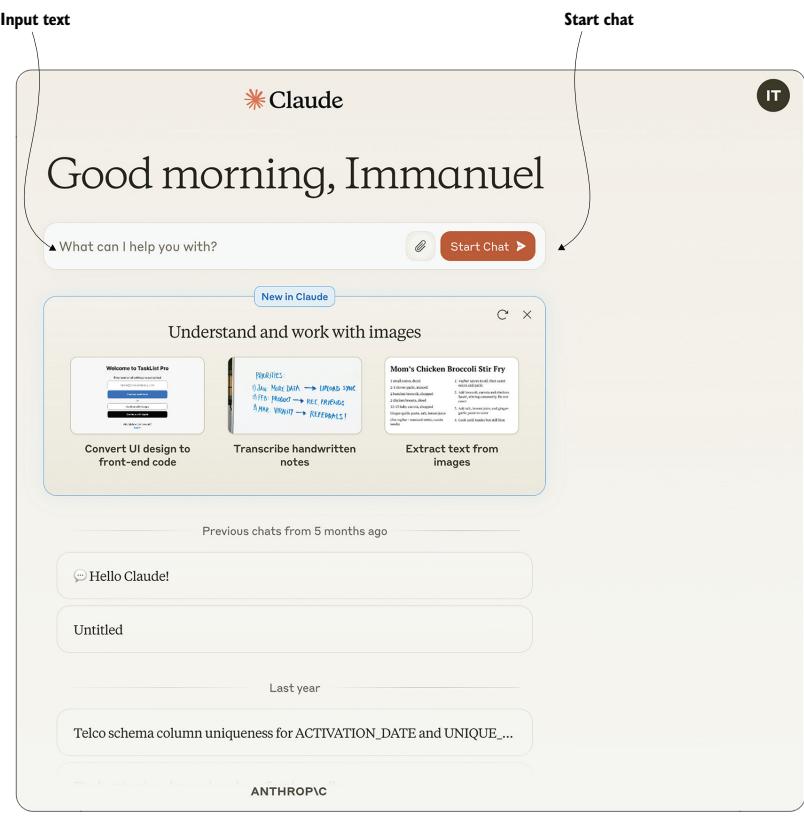

After logging in to your OpenAI account, you should see the interface shown in figure 2.2.

Figure 2.2 ChatGPT web interface. Interact with ChatGPT by clicking predefined example inputs or entering arbitrary text into the input field. Click the New Chat button to reset the conversation. This interface enables you to have a dialogue with ChatGPT by submitting text input and receiving answers. Let’s take a moment to understand the most important interface components in figure 2.2. First, you have a couple of predefined input examples. You can simply click any of those examples to get the conversation started. Besides predefined examples, you have the option to enter arbitrary text into a text field. We will refer to this interface element as the input field in the rest of this chapter. Finally, you can start a new conversation at any point via the New Chat button. Doing so erases ChatGPT’s memory of all prior dialogue steps.

Which model should I choose?

Clicking the button labeled Model in figure 2.2 enables you to choose between different language models. ChatGPT supports several models from OpenAI’s GPT model series. The examples discussed next should, in principle, work with any of the available models. You might try a few different models to see how the output differs. Depending on your subscription, the number of requests you can send to specific models may be limited.

Time to say hello! Click the input field, and say hello to ChatGPT. You can type anything. ChatGPT has been trained to conduct dialogues with human users and should be able to answer most inputs in a reasonable manner. For instance, tell ChatGPT a little about yourself! Ask for opinions or help with upcoming tasks! Or, perhaps, ask it to write a poem about a topic of your choice! You may want to spend a few minutes chatting with ChatGPT to get a better sense of its capabilities as well as its limitations.

Note that ChatGPT can refer back to prior inputs. For instance, if you are not satisfied with a prior reply, you may ask ChatGPT to correct it or to change it. No need to repeat the original request. Also, if you are not satisfied with an answer but do not want to provide further clarifications, try the button labeled Regenerate in figure 2.3.

Figure 2.3 After ChatGPT generates an answer, the Regenerate button appears below the generated response. Click this button to receive an alternative answer to your last input.

Clicking that button will cause ChatGPT to regenerate its last answer. As ChatGPT uses a certain degree of randomization while generating output, chances are good that the second output will be different (and possibly better) than the first version.

Typically, all text you enter is part of the same conversation. If at any time you want to start a new chat (essentially erasing ChatGPT’s “memory” of the prior conversation), simply click the button labeled New Chat in figure 2.2. Note that prior conversations are

not lost, even when you start a new one. Instead, OpenAI stores past conversations and enables users to go back and study them (or continue the conversation from where it ended last time). You can access a history of prior conversations by clicking the button labeled History in figure 2.2. Each conversation is assigned a short title, generated automatically based on the conversation content.

Using ChatGPT for the first time is often an impressive experience. ChatGPT generates polished and reasonable answers for a variety of topics. This may mislead users into putting too much faith in the information it provides. It’s important to avoid losing sight of the various limitations that apply to the current generation of language models. In general, always verify the output of the language model before relying on it.

What are hallucinations?

The term hallucination in language models refers to situations in which a language model invents new content in the absence of information and integrates it into answers. Often the result sounds convincing, and it can be hard to recognize instances of hallucination. Ongoing research [1] explores methods to reduce the chances of hallucinations. However, at the time of writing, no reliable strategies to avoid hallucinations are known. So don’t blindly trust information from language models, and always use alternative sources for corroboration.

2.3 Processing text with ChatGPT

Let’s start using ChatGPT for data processing! In this section, we will use ChatGPT to analyze text, a common type of unstructured data. At the same time, text is ChatGPT’s native input and output format. This means we can use ChatGPT directly to analyze our (text) data without involving external analysis tools. That helps keep things simple, and that’s what we want for our first steps toward ChatGPT-supported data analysis.

Imagine the following situation: you’re an employee of Banana, a producer of various consumer electronics, including laptops and smartphones. The Banana website enables users to post free-form text comments. It turns out that many users comment on their satisfaction or dissatisfaction with specific Banana products. Users often point out specific aspects of these products that stand out or need improvement. In aggregate, all those reviews should be very helpful to inform the design of future Banana products! But to make that possible, you first need to analyze all of them and extract common points. Your boss asks you to do just that: prepare statistics that summarize reviews for different products and product categories. Also, while you’re reading through reviews, your boss encourages you to write a few nice replies to the product reviews. Ideally, you want to avoid spending the next weeks reading and aggregating reviews. Can’t you automate that? Perhaps ChatGPT can help.

Next, we’ll use the ChatGPT web interface to analyze an example review. If that works well, it may be possible to scale up the process to automatically analyze all of them! If you have started a dialogue previously, first click the button labeled New Chat in figure 2.2 to start a new conversation. This will make sure that the topics you discussed with ChatGPT previously won’t interfere with the analysis examples we try next.

Let’s start by telling ChatGPT about the review that we want to analyze. Click the input field, enter the text after the User marker in the following dialogue, and then press Enter.

User : Have a look at the following product review:

“I bought the BananaBook a year ago and I’m super happy with this product! The screen is awesome and allows me to easily read content, even in bright sunshine. It’s fast enough for everything I want to do with it, e.g., coding, office work. The only drawback is its weight, I wish it were a little lighter as I have to carry it around quite a bit. But, for that price, it’s a great offer.”

GPT : Based on the review, the BananaBook is a product that the reviewer purchased a year ago and is still satisfied with. The screen of the BananaBook is impressive, allowing the user to read content easily, even in bright sunshine. The device is also fast enough for the user’s needs, including coding and office work. However, the reviewer did mention that the BananaBook is a bit heavy, which can be an inconvenience when carrying it around. Overall, the reviewer seems to think that the BananaBook is a great value for its price.

The text after the GPT marker is the answer generated by ChatGPT during a sample session. In this case, ChatGPT simply repeats the review, albeit with slightly different wording, demonstrating that it has accepted and “understood” your input.

Why am I getting different output?

ChatGPT uses a certain degree of randomization when generating output. This means you generally won’t see the same output, even when you submit exactly the same input twice. For that reason, the chances of you seeing the precise output shown here are small. However, you are likely to get output of similar quality.

Let’s start our text analysis with a simple classification, mapping the review to a category. Specifically, we will try to classify the review into one of three product categories: mobile phones, personal computers, and game consoles.

WARNING Do not click the New Chat button before entering the next text! The purpose of the New Chat button is to erase ChatGPT’s memory of prior conversation steps. But that’s not what we want here at all. All of the following analyses refer to the same review that we entered initially. This works only if ChatGPT remembers that review.

- User : Which product category does the review refer to (mobile phones, personal computers, game consoles):

- GPT : Based on the information provided in the review, it is not clear which product category the BananaBook belongs to. The reviewer did not specify whether it is a mobile phone, personal computer, or game console. However, based on the fact that the reviewer mentioned using the device for coding and office work, it is more likely that the BananaBook is a personal computer or a laptop rather than a mobile phone or a game console.

Not bad! ChatGPT has clearly understood the task and provides a detailed reply. The BananaBook is a fictitious device, and it’s not immediately clear what type of product we’re talking about. However, as ChatGPT rightfully points out, coding and office work are most typically associated with personal computers (more so than with mobile phones or game consoles). Despite the uncertainty, ChatGPT can come up with a reasonable answer and justify it thoroughly. In this instance, the answer from ChatGPT is even more fine-grained than required: although ChatGPT links the review to one of the three provided categories, it additionally hypothesizes that the review refers to a laptop (a subcategory of personal computers). This assumption seems reasonable in this context, given that the user carries the computer regularly (typically associated with laptops rather than desktop machines).

The previous answer is reasonable but maybe a bit lengthy. In fact, its length is similar to the original review. No point in using ChatGPT for text analysis if the resulting answer is as long as the source text. Let’s ask ChatGPT to be a little more concise. There’s no need to provide a detailed justification; rather, we want to get only the name of the most likely category for the review.

- User : Answer concisely, only write the name of the most likely product category (mobile phones, personal computers, game consoles):

- GPT : Personal computers.

That’s much better! Clearly, ChatGPT was able to understand our instructions and adapt its prior answer accordingly. Besides being faster to read, the concise output has another advantage. If, ultimately, we want to analyze many reviews as opposed to a single one, it is important to have homogeneous output across reviews. For instance, having homogeneous output makes it easier to count the number of reviews in each of the three categories.

Let’s classify the review according to different categories. This time, we will directly hint at the desired output format (note that our specification “answer concisely” refers back to the previous instructions).

- User : Which product category out of food, cosmetics, electronics does the review refer to? Answer concisely:

- GPT : Electronics.

Perfect! That is the right category, and the output format is as expected. So far, we have seen that ChatGPT is able to classify reviews according to different criteria. This seems useful, for instance, for creating statistics by counting the number of reviews in each category. But ideally, we also want to analyze the content of those reviews. Which products or product categories need improvement most urgently? To determine that, we need to know whether reviews are positive or negative: that is, whether the user is satisfied or dissatisfied with the product. This is yet another way to categorize the review text. However, this time, our classification refers not to the product being reviewed but to the review content.

- User : Is the customer satisfied or dissatisfied with the product? Answer with “satisfied” or “dissatisfied”:

- GPT : Satisfied.

Yes, that’s correct! Using the combination of the capabilities seen so far, we could already use ChatGPT to count the number of positive and negative reviews for different product categories. This gives us some information about which product categories urgently need improvements, but that may not be specific enough. For instance, it may be interesting to find out what aspects of a product users are most happy or unhappy with. Let’s try using ChatGPT to extract this information as well.

- User : Which positive aspects of the product does the review mention? Answer with a numbered list, each aspect is described by maximally three words:

- GPT : 1. Impressive screen

- Fast performance

- Suitable for coding

- Great value

Correct again! ChatGPT extracts the most salient product advantages mentioned in the review. Note that the output format complies again with the corresponding instructions: it’s a numbered list, and each entry has at most three words. How about the drawbacks mentioned in the review?

User : Which negative aspects of the product does the review mention? Answer with a numbered list, each aspect is described by maximally three words:

GPT : 1. Heavy weight

Indeed, the only drawback of the BananaBook is the weight, according to the user. Note that ChatGPT sticks to the requested output format (a numbered list) even though the result contains only a single entry.

Classifying reviews and extracting specific strengths and weaknesses works great if we want to aggregate reviews into statistics. However, doing so may discard information: for example, a more detailed justification for why users do or do not like specific aspects of a product. Sometimes we may just want to read a review sample. But to avoid spending time reading overly long reviews, it would be great to summarize those reviews first. Let’s use ChatGPT to do so.

User : Summarize the review in three sentences:

GPT : The reviewer purchased the BananaBook electronics product a year ago and remains satisfied with it. The BananaBook has an impressive screen that allows for easy reading in bright sunlight and is fast enough for coding and office work. The only drawback is its heavy weight, but for the price, the BananaBook is a great value.

The result is a reasonable summary that uses precisely the number of sentences requested in the instructions. Can we get it even more concisely?

- User : Summarize the review in one sentence:

- GPT : The reviewer is happy with the BananaBook electronics product due to its impressive screen, fast performance, and great value, despite it being a bit heavy.

Yes, that works as well! Indeed, after initial testing, it seems that ChatGPT may help automate various analysis tasks. Finally, let’s see whether we can exploit it for tasks beyond mere analysis. Maintaining good customer relationships is a priority for Banana! Hence the question from your boss, asking you to write friendly answers to the user comments wherever possible. But doing that takes time as well. Can’t we use ChatGPT to automate that step too? Let’s see …

- User : Write a friendly reply to the review from the company producing Banana-Book!

- GPT : Sure, here’s a friendly reply to the review:

Dear valued customer,

We are thrilled to hear that you are still happy with your BananaBook electronics product a year after your purchase! We designed the BananaBook to offer an impressive screen and fast performance for our users, and we are glad to see that these features meet your needs for coding and office work. We appreciate your feedback on the weight of the device and will take it into consideration for future improvements. Thank you for choosing BananaBook, and we hope to continue exceeding your expectations!

Best regards,

[Company Name]

Pretty good! ChatGPT answers with a friendly message to the customer, referencing specific points in the review in the appropriate manner (confirming positive points mentioned by the customer and promising improvements with regard to the weaknesses). Note that ChatGPT leaves a placeholder for the name of the corresponding company (marked by square brackets). So far, we haven’t told ChatGPT the name of our company (Banana) yet. Let’s fix that.

User : The name of the company is Banana - replace the corresponding placeholder in your answer.

GPT : I apologize for the mistake in my previous response. Here’s an updated reply with the correct company name:

Dear valued customer,

We are thrilled to hear that you are still happy with your BananaBook electronics product a year after your purchase! We designed the Banana-Book to offer an impressive screen and fast performance for our users, and we are glad to see that these features meet your needs for coding and office work. We appreciate your feedback on the weight of the device and will take it into consideration for future improvements. Thank you for choosing Banana, and we hope to continue exceeding your expectations!

Best regards, The Banana Team

Okay, that works! Based on the additional information, ChatGPT was able to substitute the name of the company for the placeholder in the previous answer.

WARNING Language models like ChatGPT may occasionally generate harmful content, even though recent models have significantly decreased the chance of such occurrences. It is recommended to check the text generated by Chat-GPT before posting it on public forums. Hence, automatically writing answers to customer reviews is not a good use case without some degree of human oversight.

We have seen that we can use ChatGPT for various tasks in text processing. We have used ChatGPT for categorizing text based on different criteria and using custom categories (classification according to review target and according to review content). We have also used it to extract specific pieces of information from a text and to summarize documents (i.e., reviews). Finally, we have used ChatGPT to generate text answering a customer review. In all cases, ChatGPT was able to follow instructions about the task and the desired output format. If you want, try writing a different review, and make sure ChatGPT can still solve all of these tasks.

Until recently, each of the different text-processing tasks we discussed in this section would have required a specialized language model. The latest generation of language models is flexible enough to solve a variety of tasks based on a description of the task in plain English (as well as other natural languages).

Note that we have processed only one short review so far. Here, using ChatGPT does not really provide a benefit. We could have classified or summarized the review manually and done it much more quickly than with the help of ChatGPT. Of course, the goal of automation is to scale processing up to a large number of (possibly longer) reviews. If we’re talking about hundreds, perhaps thousands, of reviews, manual analysis will take much longer than setting up ChatGPT to do the task. But a crucial component is still missing: How do we inform ChatGPT about all the review

text? Can we simply copy and paste the whole collection of reviews into the ChatGPT web interface?

That approach won’t work. Language models generally come with restrictions regarding the amount of information they can process at once. Hence, we need a mechanism that automatically “feeds” single reviews (or small collections of reviews) to ChatGPT for processing. We will discuss corresponding approaches in the following chapters. For now, we just want to verify that ChatGPT can be used, in principle, to perform a diverse range of analysis tasks on text documents.

2.4 Processing tables with ChatGPT

In the last section, we used ChatGPT directly for data processing, providing ChatGPT with data as well as a task description as input. This approach is reasonable as long as we’re processing text, the “native” input and output format of ChatGPT. For other types of data, it is much more efficient to use specialized, data type–specific tools for data processing. You may be wondering: If we process data with external tools, how can ChatGPT still be helpful in this context?

There are many tools for processing data, specialized for different types of data, processing, and hardware or software platforms. To use such tools, users often need to express the desired analysis operations in tool-specific formal languages. Writing code to analyze data can be tedious for experts and even more so for users with a limited IT background. Here, language models like ChatGPT can help because they understand natural language as well as the formal languages used by data-analysis tools. This means we can use ChatGPT as a sort of “translator,” translating our questions about the data, formulated in plain English, into code in various languages to be executed via external tools. This is what we will do next.

You’re back at Banana and have successfully used ChatGPT to analyze all the various reviews submitted by users. It is natural to represent this information as a data table. Each row corresponds to one review, and the columns represent the different types of information extracted from the review. Table 2.1 shows the first few rows.

Table 2.1 Example table with three columns representing the review ID, a flag indicating whether the reviewer is satisfied with a product, and the product category

| ReviewID | Satisfied | Category |

|---|---|---|

| 1 | 1 | Laptops |

| 2 | 0 | Phones |

| 2 | 1 | Gaming |

To keep the example simple, we use a table with a few columns corresponding to a subset of the analyses described in the previous section. The first table column contains the review ID. The second column contains a flag indicating whether the reviewer is satisfied with the product (1) or dissatisfied with the product (0). The final column contains the category. For this example, we consider only three categories: laptops, phones, and gaming.

This table is already a much more concise representation of the original reviews. But the full table has many rows (because we started with many reviews), and reading the raw table data doesn’t provide very much insight. Ideally, we want to aggregate data in interesting ways and present only the high-level trends to our boss. Which tools can we use to do that?

2.4.1 Processing tables in the web interface

The first option is using the OpenAI web interface directly to analyze tabular data. First, let’s download an example table with the review analysis results. Search for the link named Review Table on the book’s companion website (www.dataanalysiswith llms.com), and download the associated file. It contains a table with the structure shown in table 2.1 in .csv format.

What is the .csv format?

CSV stands for comma-separated values. It designates a specific format used to represent tabular data. Each table row is stored in a separate line, and values for different columns in the same row are separated by commas.

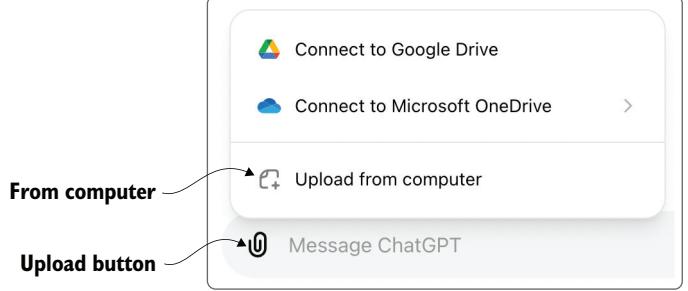

To analyze such data directly in the ChatGPT web interface, we first need to upload it. Click the Upload button shown in figure 2.4 (before doing so, you may also want to start a new chat). Choose the Upload From Computer option, and select the file you just downloaded (reviews_table.csv).

Figure 2.4 Click the Upload button, and choose the Upload From Computer option to upload files on disk.

After uploading the file, it should appear next to the input text field. You can now enter arbitrary questions about the data into the input text field. When generating its answers, ChatGPT will take into account and analyze the data you provided. For instance, let’s ask about the number of reviews for each product category.

User : How many reviews do we have for each product category?

You should see output like that shown in figure 2.5. ChatGPT shows a table containing the answer to your question (each row counts the number of reviews for one product category) and accompanying text.

| Show analysis |

|---|

Figure 2.5 Answer generated by ChatGPT for a question about the input table. Click the Show Analysis button to see how ChatGPT determined the answer.

How did ChatGPT calculate the answer? Did it read the entire table to generate the reply directly? Not quite. In the background, ChatGPT generates and executes Python code on OpenAI’s platform that analyzes the input data (in this scenario, the Python execution engine is the external tool we referred to initially). You can see the generated code by clicking the button labeled Show Analysis in figure 2.5. In fact, it is highly recommended to check the generated code instead of relying blindly on an answer. After all, despite their amazing capabilities, language models do regularly make mistakes.

Try a few more questions, check the generated code, and possibly even try a few different data sets (you can upload any tabular data on your computer, such as in Excel format). You will find that ChatGPT can handle a variety of data sets and requests.

This seems to work pretty well! Why do we need anything else? Well, there are a couple of reasons why we would like to explore other external tools for data analysis. First, there are strict limits on the size of files we can upload (512 MB at the time of writing). Uploading large data sets is not possible. Second, you may have noticed that data analysis with Python can take a few seconds, even for moderately sized data sets (e.g., the table with reviews has only 10,000 rows, which is small according to today’s standards). Analyzing large data sets takes prohibitive amounts of time. Finally, uploading data to OpenAI may not be acceptable for each use case. To preserve privacy for sensitive data, users may prefer analyzing data on their own platforms. In the next section, we will see how we can use ChatGPT to analyze data outside of OpenAI’s web interface.

2.4.2 Processing tables on your platform

In some scenarios, analyzing data directly in OpenAI’s web interface is not an option. Instead, ChatGPT can help us use various other tools for data analysis that are directly under our control. Next, we will use a relational database management system (RDBMS). Such systems are specialized for handling data of the type we’re interested in and tend to achieve high processing efficiency. This is just an example: the proposed approach generalizes to various other types of data-analysis systems.

Relational database management systems

A relational database management system is specialized for handling relational data: that is, data sets that contain one or multiple tables of the type in table 2.1. Most of them support variants of Structured Query Language (SQL), a language used to describe data and operations on data. For the following examples, we assume that you are familiar with the basics of SQL and RDBMSs. If you aren’t, you can find a short introduction to SQL in chapter 5. To get more details, consider reading the book Database Management Systems by Gehrke and Ramakrishnan [2], or try the online course by this book’s author at www.databaselecture.com.

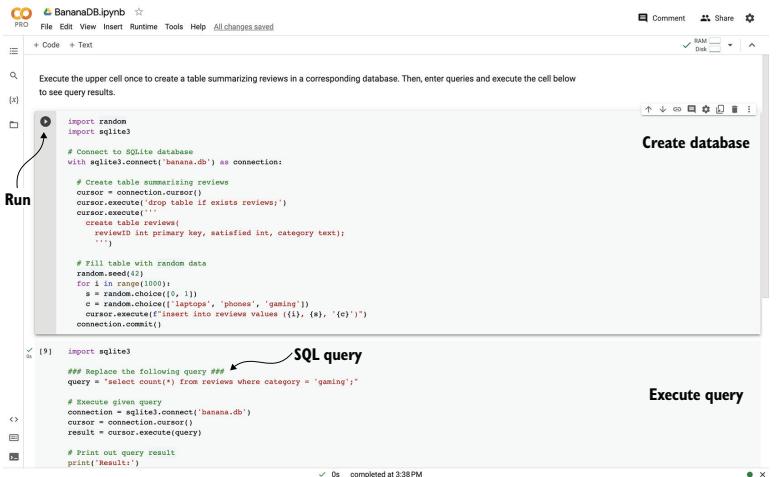

We will use SQLite, one of the most popular RDBMSs. To save you the headaches of installing and configuring that system on your machine, go to the book’s companion website, and follow the link to the BananaDB resource. It will lead you to a Google Colab notebook that you can use for the following steps. Figure 2.6 shows the notebook you should see after following the link.

Figure 2.6 Google Colab notebook allowing you to query the BananaDB database via SQLite. Execute the upper cell (Create Database) to create the database, replace the given SQL query (SQL Query) with a query of your choice, and then execute the lower cell (Execute Query) to see the query result. The arrow marks the Run button to execute the upper cell.

Google Colab notebooks