Causal Inference and Discovery in Python

Machine Learning and Pearlian Perspective

Causal Inference and Discovery in Python – Machine Learning and Pearlian Perspective

Unlock the secrets of modern causal machine learning with DoWhy, EconML, PyTorch, and more

Aleksander Molak

BIRMINGHAM—MUMBAI

Causal Inference and Discovery in Python – Machine Learning and Pearlian Perspective

Copyright © 2023 Packt Publishing

All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews.

Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book.

Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

Group Product Manager: Ali Abidi Publishing Product Manager: Dinesh Chaudhary Senior Editor: Tazeen Shaikh Technical Editor: Rahul Limbachiya Copy Editor: Safis Editing Project Coordinator: Farheen Fathima Proofreader: Safis Editing Indexer: Pratik Shirodkar Production Designer: Shankar Kalbhor Marketing Coordinators: Shifa Ansari and Vinishka Kalra

First published: June 2023

Production reference: 1290523

Published by Packt Publishing Ltd.

Livery Place

35 Livery Street

Birmingham

B3 2PB, UK.

ISBN 978-1-80461-298-9

To my wife, Katia. You cause me to smile. I am grateful for every day we spend together.

Foreword

I have been following Aleksander Molak’s work on causality for a while.

I have been using libraries for causal inference, such as DoWhy, in my teaching at the University of Oxford, and causality is one of the key topics I teach in my course.

Based on the discussions with Aleksander, I have invited him to present a session at Oxford in our course in Fall 23.

Hence, I am pleased to write the foreword for Aleksander’s new book, Causal Inference and Discovery in Python.

Despite causality becoming a key topic for AI and increasingly also for generative AI, most developers are not familiar with concepts such as causal graphs and counterfactual queries.

Aleksander’s book makes the journey into the world of causality easier for developers. The book spans both technical concepts and code and provides recommendations for the choice of approaches and algorithms to address specific causal scenarios.

This book is comprehensive yet accessible. Machine learning engineers, data scientists, and machine learning researchers who want to extend their data science toolkit to include causal machine learning will find this book most useful.

Looking to the future of AI, I find the sections on causal machine learning and LLMs especially relevant to both readers and our work.

Ajit Jaokar

Visiting Fellow, Department of Engineering Science, University of Oxford, and Course Director, Artificial Intelligence: Cloud and Edge Implementations, University of Oxford

Contributors

About the reviewers

Nicole Königstein is an experienced data scientist and quantitative researcher, currently working as data science and technology lead at impactvise, an ESG analytics company, and as a technology lead and head quantitative researcher at Quantmate, an innovative FinTech start-up focused on alternative data in predictive modeling. As a guest lecturer, she shares her expertise in Python, machine learning, and deep learning at various universities. Nicole is a regular speaker at renowned conferences, where she conducts workshops and educational sessions. She also serves as a regular reviewer of books in her field, further contributing to the community. Nicole is the author of the well-received online course Math for Machine Learning, and the author of the book Transformers in Action.

Mike Hankin is a data scientist and statistician, with a B.S. from Columbia University and a Ph.D. from the University of Southern California (dissertation topic: sequential testing of multiple hypotheses). He spent 5 years at Google working on a wide variety of causal inference projects. In addition to causal inference, he works on Bayesian models, non-parametric statistics, and deep learning (including contributing to TensorFlow/Keras). In 2021, he took a principal data scientist role at VideoAmp, where he works as a high-level tech lead, overseeing all methodology development. On the side, he volunteers with a schizophrenia lab at the Veterans Administration, working on experiment design and multimodal data analysis.

Amit Sharma is a principal researcher at Microsoft Research India. His work bridges causal inference techniques with machine learning to enhance the generalization, explainability, and avoidance of hidden biases in machine learning models. To achieve these goals, Amit has co-led the development of the open-source DoWhy library for causal inference and the DiCE library for counterfactual explanations. The broader theme of his work revolves around leveraging machine learning for improved decisionmaking. Amit received his Ph.D. in computer science from Cornell University and his B.Tech. in computer science and engineering from the Indian Institute of Technology (IIT) Kharagpur.

Acknowledgments

There’s only one name listed on the front cover of this book, but this book would not exist without many other people whose names you won’t find on the cover.

I want to thank my wife, Katia, for the love, support, and understanding that she provided me with throughout the year-long process of working on this book.

I want to thank Shailesh Jain, who was the first person at Packt with whom I shared the idea about this book.

The wonderful team at Packt made writing this book a much less challenging experience than it would have been otherwise. I thank Dinesh Chaudhary for managing the process, being open to non-standard ideas, and making the entire journey so smooth.

I want to thank my editor, Tazeen Shaikh, and my project manager, Kirti Pisat. Your support, patience, amazing energy, and willingness to go the extra mile are hard to overstate. I am grateful that I had an opportunity to work with you!

Three technical reviewers provided me with invaluable feedback that made this book a better version of itself. I am immensely grateful to Amit Sharma (Microsoft Research), Nicole Königstein (impactvise), and Mike Hankin (VideoAmp) for their comments and questions that gave me valuable hints, sometimes challenged me, and – most importantly – gave me an opportunity to see this book through their eyes.

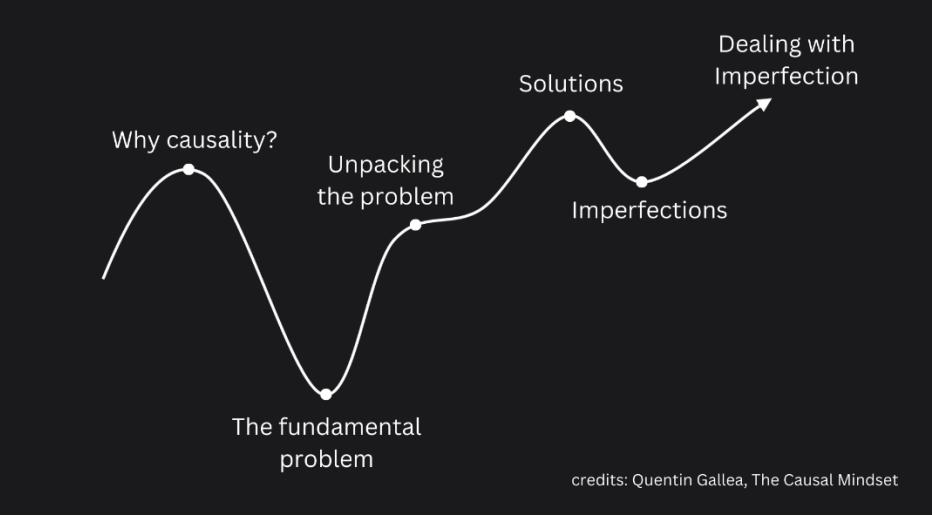

I want to thank all the people, who provided me with clarifications, and additional information, agreed to include their materials in the book, or provided valuable feedback regarding parts of this book outside of the formal review process: Kevin Hillstrom, Matheus Facure, Rob Donnelly, Mehmet Süzen, Ph.D., Piotr Migdał, Ph.D., Quentin Gallea, Ph.D., Uri Itai, Ph.D., prof. Judea Pearl, Alicia Curth.

I want to thank my friends, Uri Itai, Natan Katz, and Leah Bar, with whom we analyzed and discussed some of the papers mentioned in this book.

Additionally, I want to thank Prof. Frank Harrell and Prof. Stephen Senn for valuable exchanges on Twitter that gave me many insights into experimentation and causal modeling as seen through the lens of biostatistics and medical statistics.

I am grateful to the CausalPython.io community members who shared their feedback regarding the contents of this book: Marcio Minicz; Elie Kawerk, Ph.D.; Dr. Tony Diana; David Jensen; and Michael Wexler.

I received a significant amount of support from causalpython.io members and people on LinkedIn and Twitter who shared their ideas, questions, and excitement, or expressed their support for me writing this book by following me or liking and sharing the content related to this book. Thank you!

Finally, I want to thank Rahul Limbachiya, Vinishka Kalra, Farheen Fathima, Shankar Kalbhor, and the entire Packt team for their engagement and great work on this project, and the team at Safis Editing, for their helpful suggestions.

I did my best not to miss anyone from this list. Nonetheless, if I missed your name, the next line is for you.

Thank you!

I also want to thank you for buying this book.

Congratulations on starting your causal journey today!

Table of Contents

Preface xix

Part 1: Causality – an Introduction

1

| Causality – Hey, We Have Machine Learning, So Why Even Bother? | 3 | ||

|---|---|---|---|

| A brief history of causality | 4 | A marketer’s dilemma | 9 |

| Why causality? Ask babies! | 5 | Let’s play doctor! Associations in the wild |

10 |

| Interacting with the world | 5 | 12 | |

| Confounding – relationships that are not real | 6 | Wrapping it up | 12 |

| How not to lose money… and | References | 12 | |

| human lives | 9 |

2

| Judea Pearl and the Ladder of Causation | 15 | ||

|---|---|---|---|

| From associations to logic and | The fundamental problem of causal inference | 30 | |

| imagination – the Ladder | Computing counterfactuals | 30 | |

| of Causation | 15 | Time to code! | 32 |

| Associations | 18 | Extra – is all machine learning | |

| Let’s practice! | 20 | causally the same? | 33 |

| What are interventions? | 23 | Causality and reinforcement learning | 33 |

| Changing the world | 24 | Causality and semi-supervised and unsupervised learning |

|

| Correlation and causation | 26 | 34 | |

| What are counterfactuals? | 28 | Wrapping it up | 34 |

| Let’s get weird (but formal)! | 28 | References | 35 |

| Regression, Observations, and Interventions | 37 | ||

|---|---|---|---|

| If you don’t know where you’re going, you might end up somewhere else |

45 | ||

| Get involved! | 48 | ||

| 41 | To control or not to control? | 48 | |

| 42 | 49 | ||

| 42 | SCMs | 49 | |

| Linear regression versus SCMs | 49 | ||

| Finding the link | 49 | ||

| 45 | Regression and causal effects | 51 | |

| Wrapping it up | 53 | ||

| References | 53 | ||

| 37 37 44 |

Regression and structural models |

4

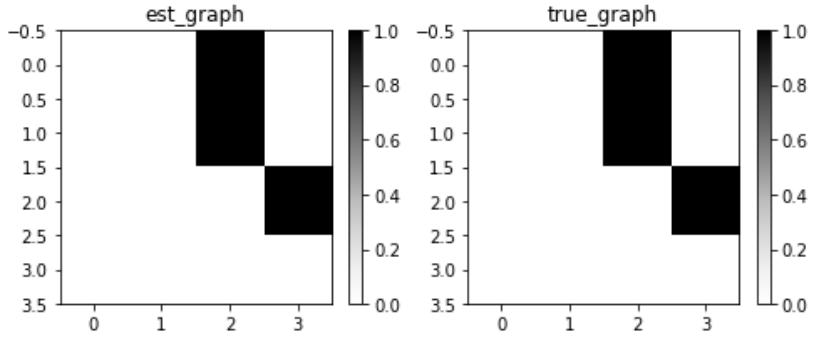

Graphical Models 55

| Graphs, graphs, graphs | 55 | Sources of causal graphs in the | |

|---|---|---|---|

| Types of graphs | 56 | real world | 66 |

| Graph representations | 58 | Causal discovery | 67 |

| Graphs in Python | 60 | Expert knowledge | 67 |

| What is a graphical model? | 63 | Combining causal discovery and expert knowledge |

67 |

| DAG your pardon? Directed acyclic graphs in the causal wonderland |

64 | Extra – is there causality | |

| Definitions of causality | 64 | beyond DAGs? | 67 |

| DAGs and causality | 65 | Dynamical systems | 67 |

| Let’s get formal! | 65 | Cyclic SCMs | 68 |

| Limitations of DAGs | 66 | Wrapping it up | 68 |

| References | 69 |

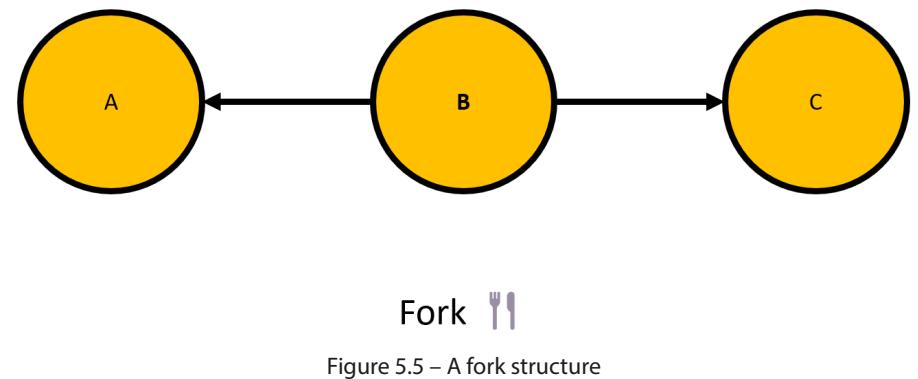

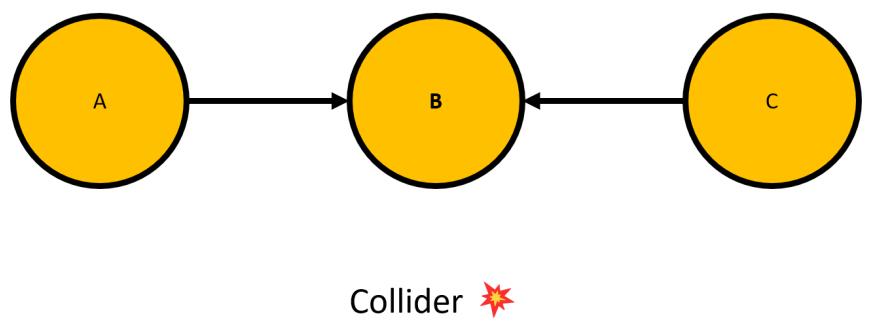

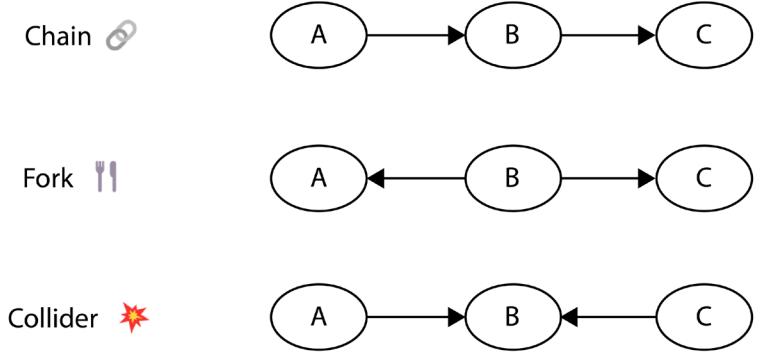

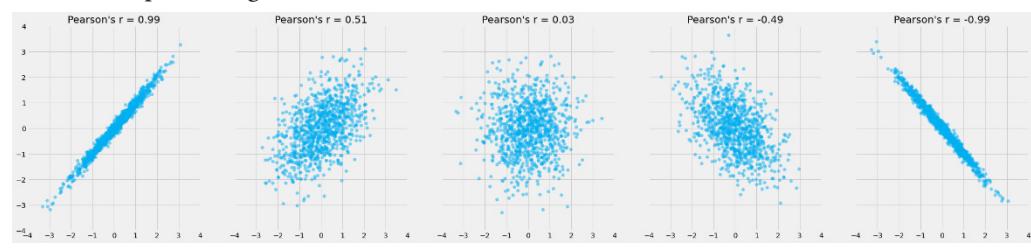

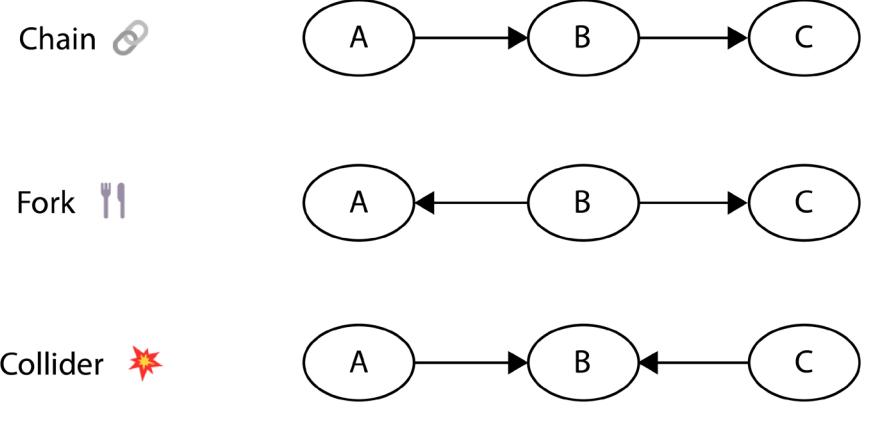

Forks, Chains, and Immoralities 71

| Graphs and distributions and how to | |

|---|---|

| map between them | 71 |

| How to talk about independence | 72 |

| Choosing the right direction | 73 |

| Conditions and assumptions | 74 |

| Chains, forks, and colliders or… | |

| immoralities | 78 |

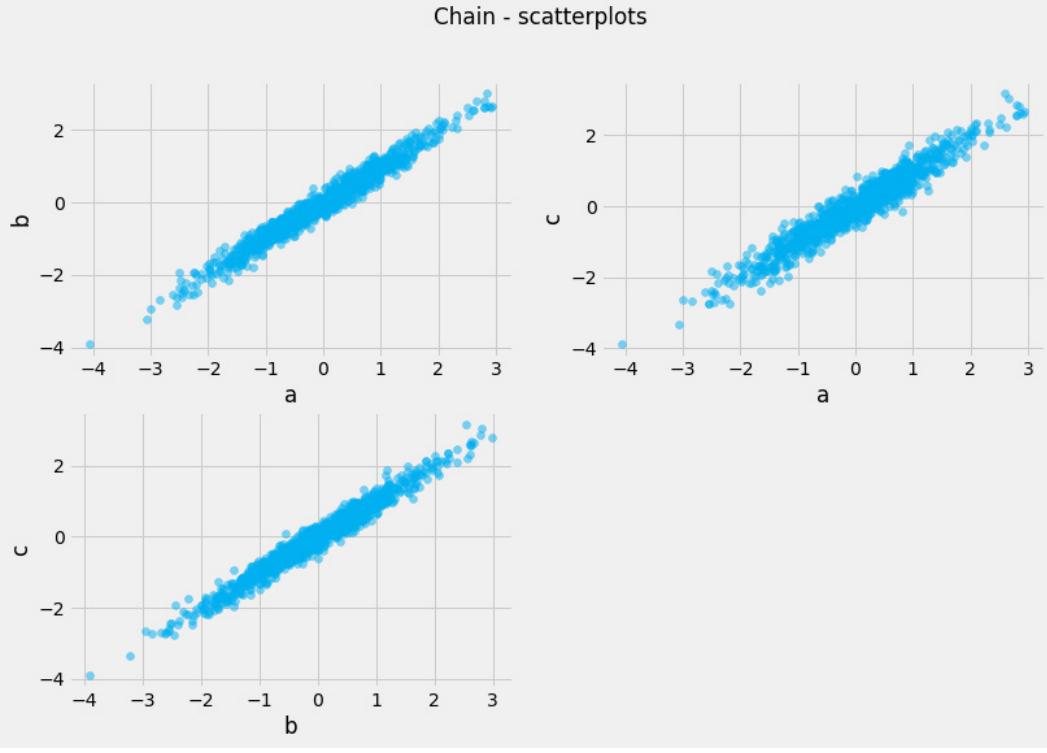

| A chain of events | 78 |

| Chains | 79 |

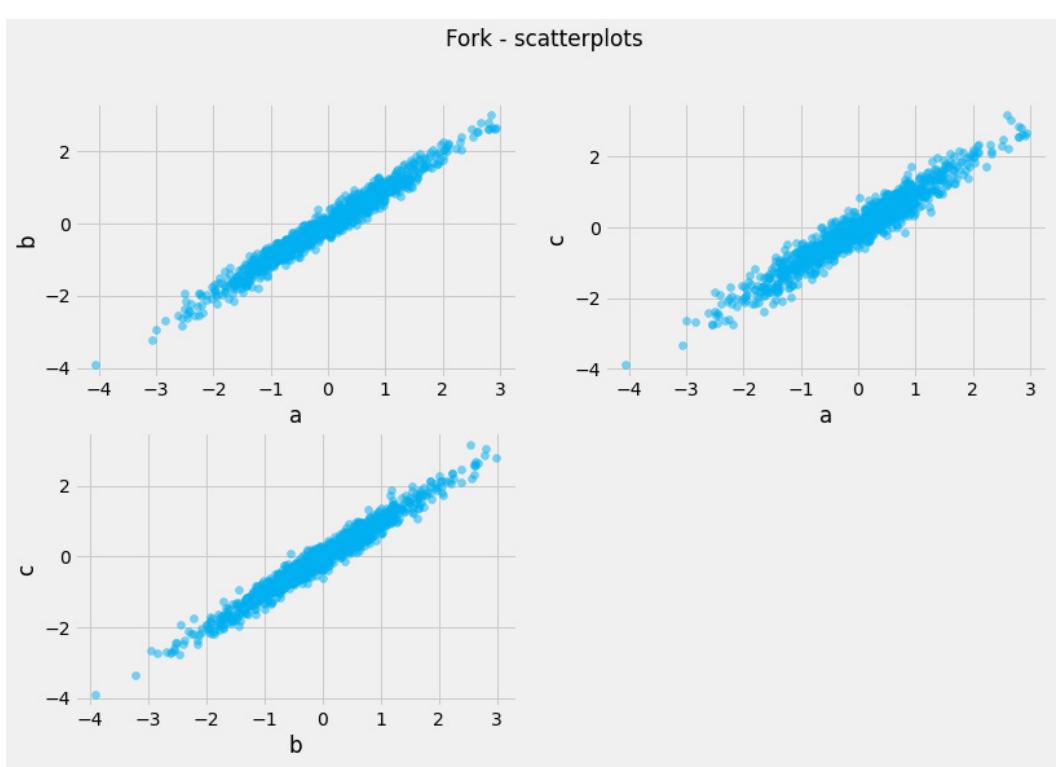

| Forks | 80 |

| 82 |

|---|

| 84 |

| 85 |

| 87 |

| 88 |

| 89 |

| 90 |

| 93 |

| 93 |

Part 2: Causal Inference

6

| Nodes, Edges, and Statistical (In)dependence | 97 | ||

|---|---|---|---|

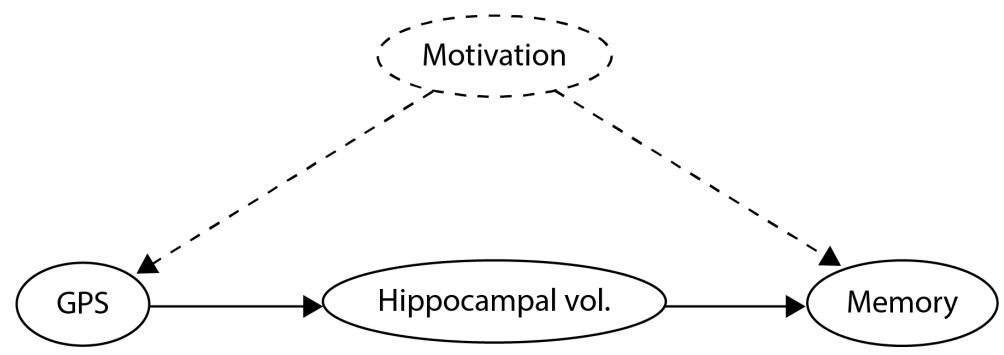

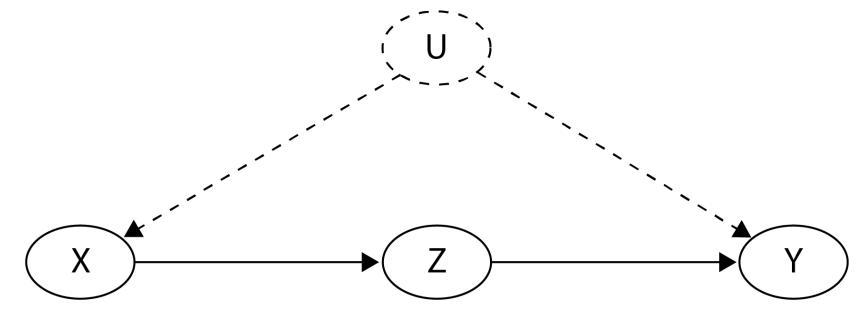

| You’re gonna keep ’em d-separated | 98 | London cabbies and the magic pebble | 109 |

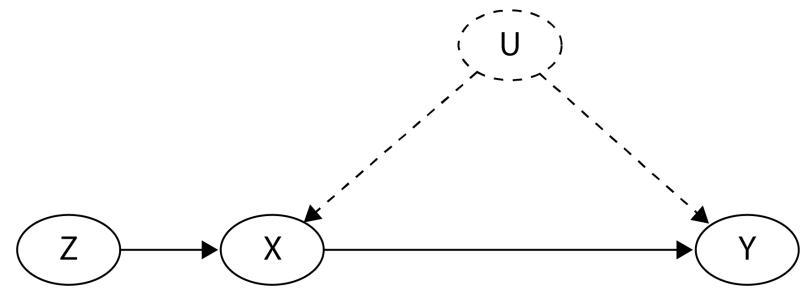

| Practice makes perfect – d-separation | 99 | Opening the front door | 110 |

| Estimand first! | 102 | Three simple steps toward the front door Front-door in practice |

111 112 |

| We live in a world of estimators So, what is an estimand? |

102 102 |

Are there other criteria out there? | |

| The back-door criterion | 104 | Let’s do-calculus! The three rules of do-calculus |

118 119 |

| What is the back-door criterion? | 105 | Instrumental variables | 120 |

| Back-door and equivalent estimands | 105 | Wrapping it up | 122 |

| The front-door criterion | 107 | Answer | 122 |

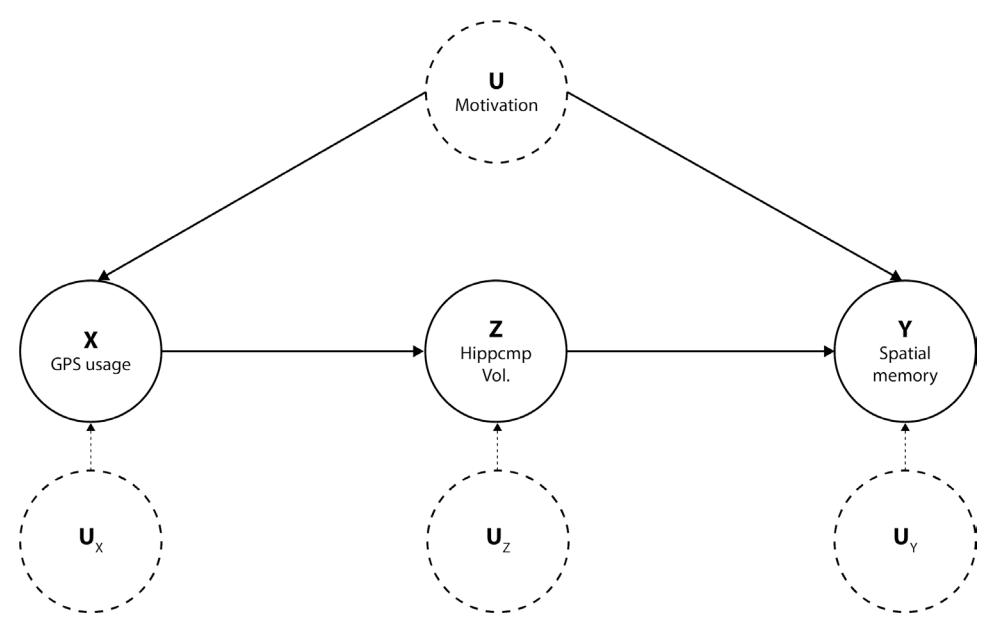

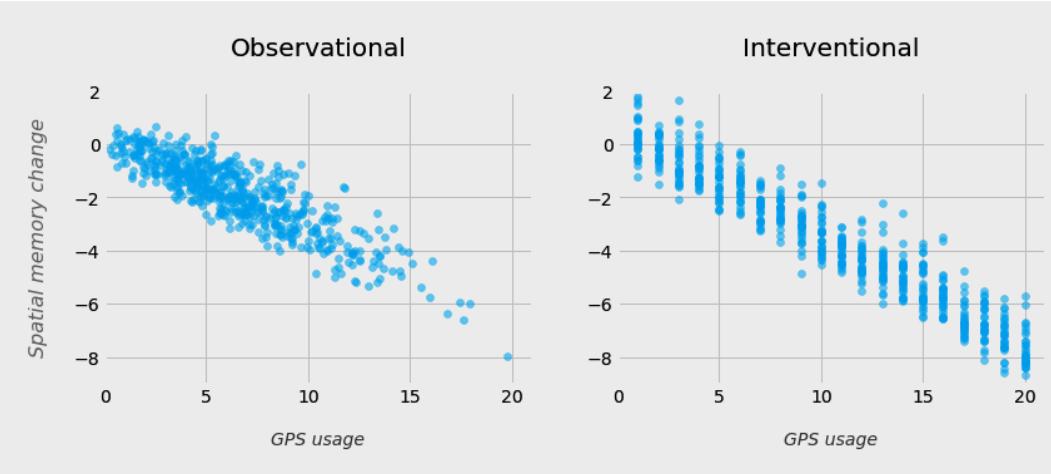

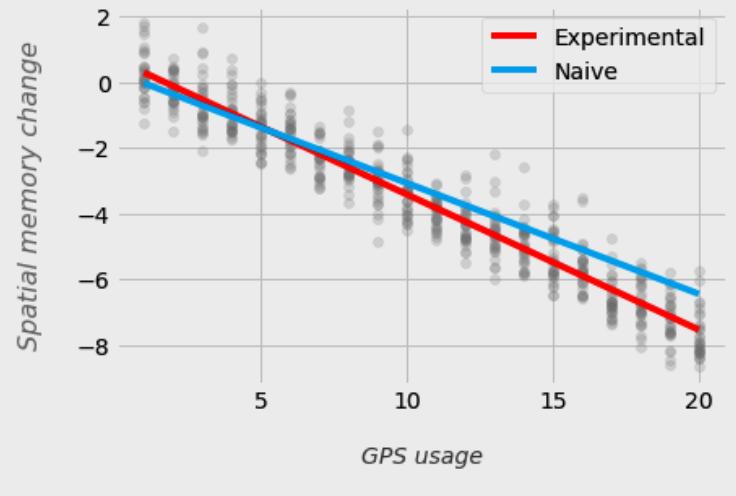

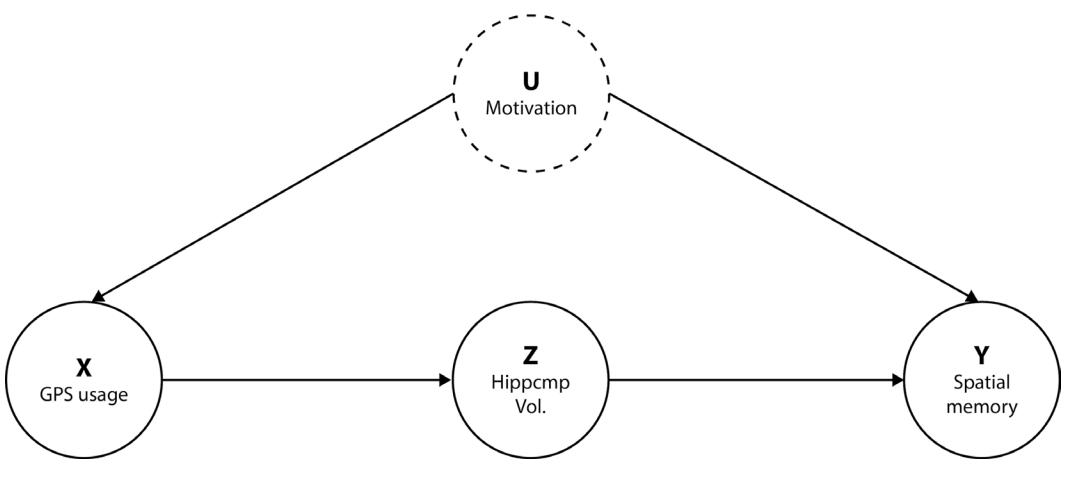

| Can GPS lead us astray? | 108 | References | 123 |

| The Four-Step Process of Causal Inference | 125 |

|---|---|

| ——————————————- | —– |

| Introduction to DoWhy and EconML |

126 | Step 4 – where’s my validation set? Refutation tests |

135 |

|---|---|---|---|

| Python causal ecosystem | 126 | How to validate causal models | 135 |

| Why DoWhy? | 128 | Introduction to refutation tests | 137 |

| Oui, mon ami, but what is DoWhy? | 128 | ||

| How about EconML? | 129 | Full example Step 1 – encode the assumptions |

139 140 |

| Step 1 – modeling the problem | 130 | Step 2 – getting the estimand | 142 |

| Creating the graph | 130 | Step 3 – estimate! | 142 |

| Building a CausalModel object | 132 | Step 4 – refute them! | 144 |

| Step 2 – identifying the estimand(s) | 133 | Wrapping it up | 149 |

| Step 3 – obtaining estimates | 134 | References | 149 |

8

| Causal Models – Assumptions and Challenges | 151 | ||

|---|---|---|---|

| I am the king of the world! But am I? | 152 | …and more | 162 |

| In between | 152 | Modularity | 162 |

| Identifiability | 153 | SUTVA | 164 |

| Lack of causal graphs | 153 | Consistency | 164 |

| Not enough data | 154 | Call me names – spurious | |

| Unverifiable assumptions | 156 | relationships in the wild | 165 |

| An elephant in the room – hopeful or hopeless? Let’s eat the elephant |

156 156 |

Names, names, names Should I ask you or someone who’s not here? |

165 166 |

| Positivity | 157 | DAG them! More selection bias |

166 168 |

| Exchangeability | 161 | Wrapping it up | 169 |

| Exchangeable subjects | 161 | ||

| Exchangeability versus confounding | 161 | References | 170 |

Causal Inference and Machine Learning – from Matching to Meta-Learners 173

| The basics I – matching | 174 | Jokes aside, say hi to the | |

|---|---|---|---|

| Types of matching | 174 | heterogeneous crowd | 190 |

| Treatment effects – ATE versus ATT/ATC | 175 | Waving the assumptions flag | 192 |

| Matching estimators | 176 | You’re the only one – modeling with | |

| Implementing matching | 178 | S-Learner | 192 |

| Small data | 198 | ||

| The basics II – propensity scores | 183 | S-Learner’s vulnerabilities | 199 |

| Matching in the wild | 183 | T-Learner – together we can do more | 200 |

| Reducing the dimensionality with | |||

| propensity scores | 185 | Forcing the split on treatment | 200 |

| Propensity score matching (PSM) | 185 | T-Learner in four steps and a formula | 201 |

| Inverse probability weighting (IPW) | 186 | Implementing T-Learner | 202 |

| Many faces of propensity scores | 186 | X-Learner – a step further | 204 |

| Formalizing IPW | 187 | Squeezing the lemon | 204 |

| Implementing IPW | 187 | Reconstructing the X-Learner | 205 |

| IPW – practical considerations | 188 | X-Learner – an alternative formulation | 207 |

| Implementing X-Learner | 208 | ||

| S-Learner – the Lone Ranger | 188 | ||

| The devil’s in the detail | 189 | Wrapping it up | 212 |

| Mom, Dad, meet CATE | 190 | References | 213 |

10

Causal Inference and Machine Learning – Advanced Estimators, Experiments, Evaluations, and More 215

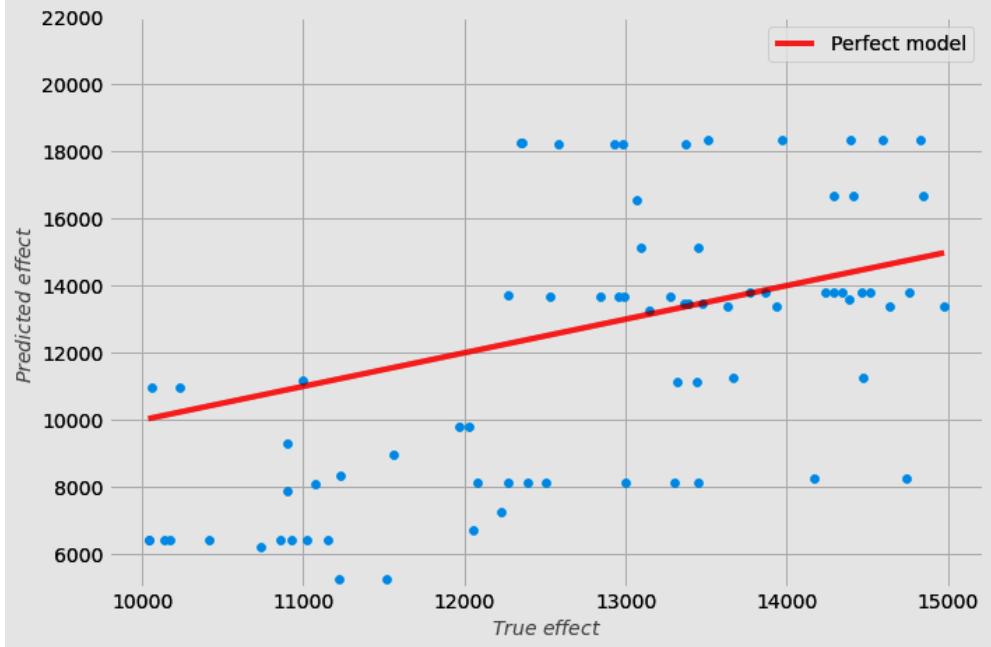

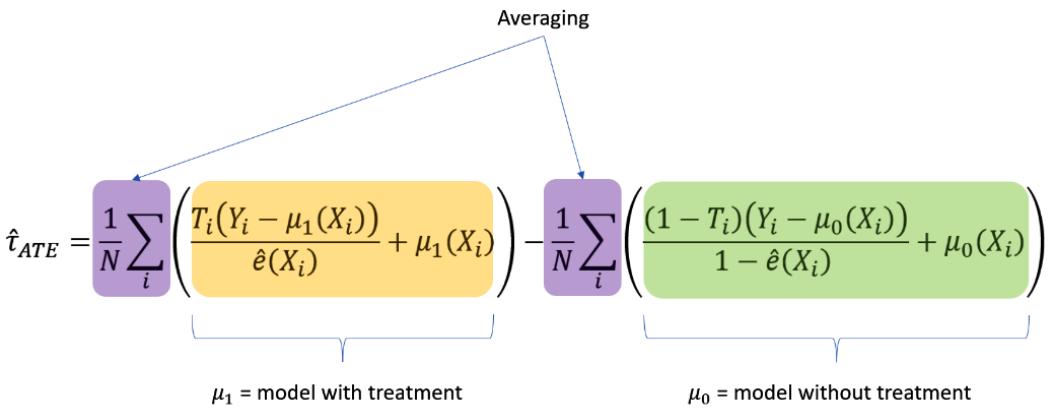

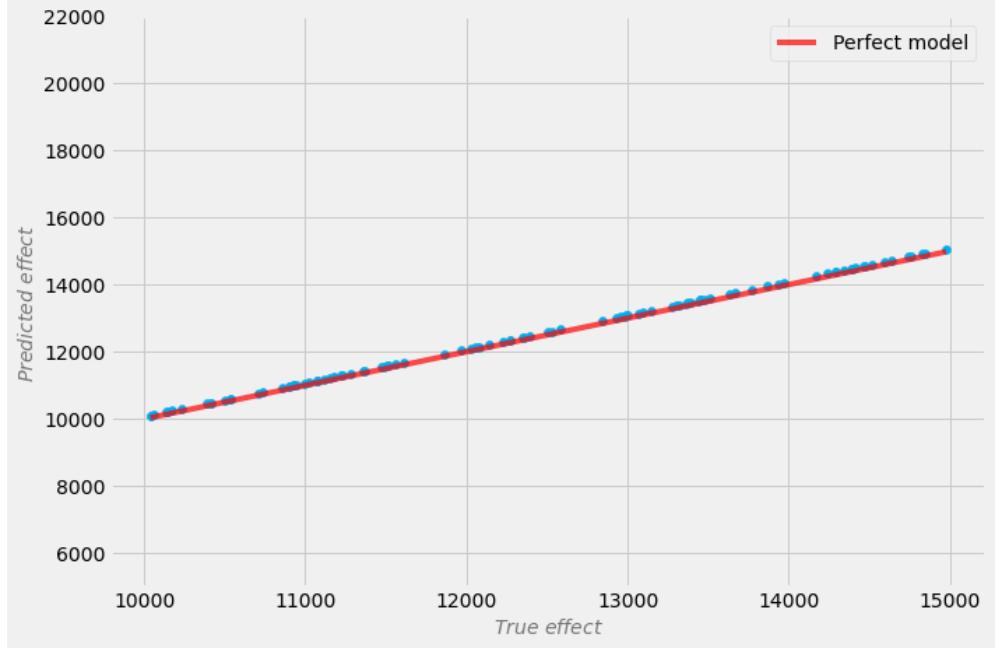

| Doubly robust methods – let’s get | 216 | DR-Learners – more options | 224 |

|---|---|---|---|

| more! | Targeted maximum likelihood estimator | 224 | |

| Do we need another thing? Doubly robust is not equal to bulletproof… |

216 218 |

If machine learning is cool, how | |

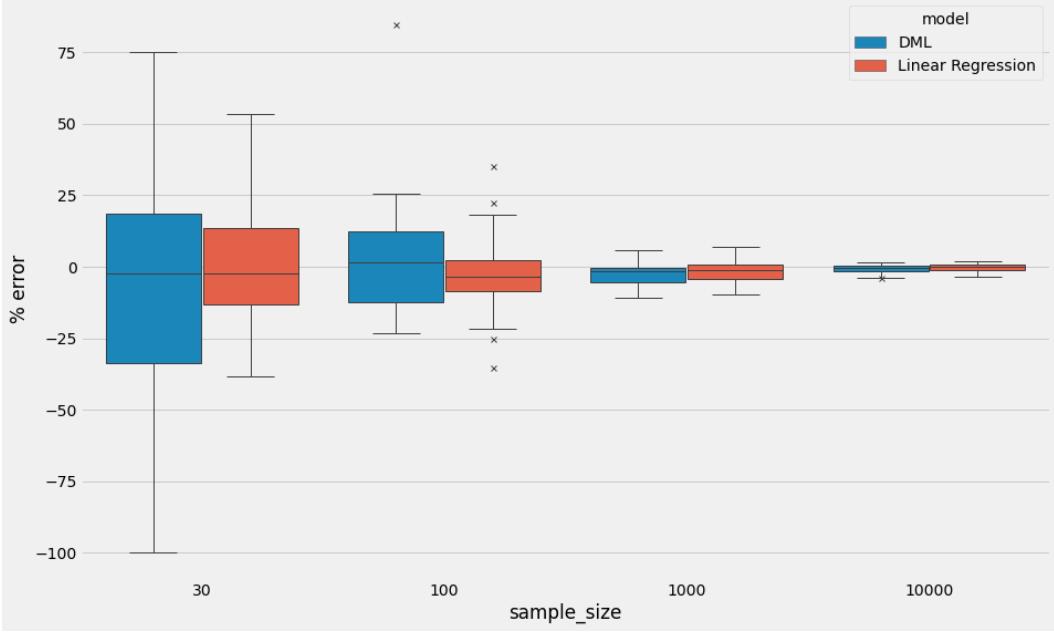

| …but it can bring a lot of value | 218 | about double machine learning? | 227 |

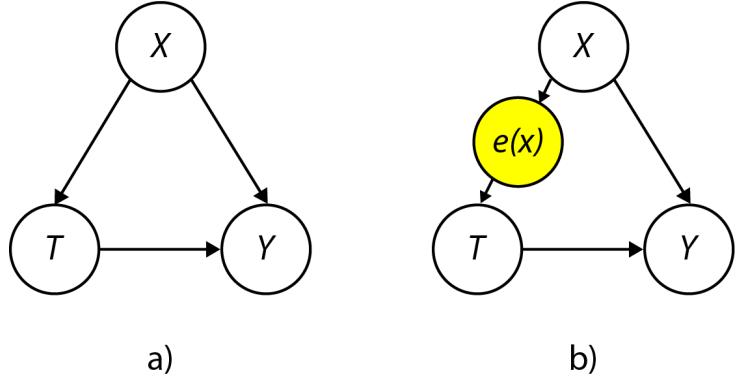

| The secret doubly robust sauce | 218 | Why DML and what’s so double about it? | 228 |

| Doubly robust estimator versus assumptions DR-Learner – crossing the chasm |

220 220 |

DML with DoWhy and EconML Hyperparameter tuning with DoWhy and EconML |

231 234 |

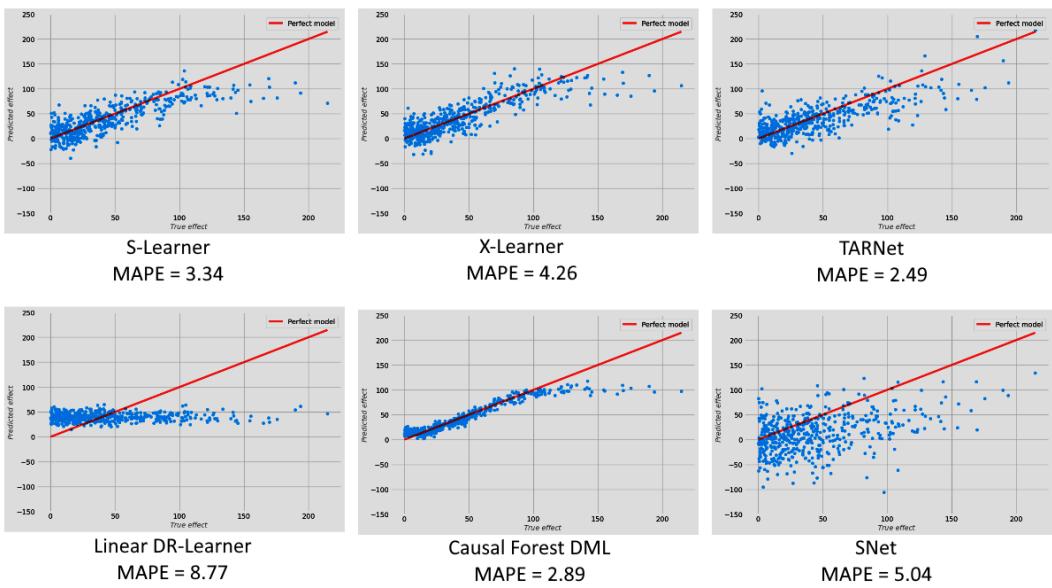

| Is DML a golden bullet? | 239 | Kevin’s challenge | 252 |

|---|---|---|---|

| Doubly robust versus DML | 240 | Opening the toolbox | 253 |

| What’s in it for me? | 241 | Uplift models and performance | 257 |

| Causal Forests and more | 242 | Other metrics for continuous outcomes with multiple treatments |

262 |

| Causal trees | 242 | Confidence intervals | 263 |

| Forests overflow | 242 | Kevin’s challenge’s winning submission | 264 |

| Advantages of Causal Forests | 242 | When should we use CATE estimators for | |

| Causal Forest with DoWhy and EconML | 243 | experimental data? | 264 |

| Heterogeneous treatment | Model selection – a simplified guide | 265 | |

| effects with experimental data – the | Extra – counterfactual explanations | 267 | |

| uplift odyssey | 245 | Bad faith or tech that does not know? | 267 |

| The data | 245 | ||

| Choosing the framework | 251 | Wrapping it up | 268 |

| We don’t know half of the story | 251 | References | 269 |

Causal Inference and Machine Learning – Deep Learning, NLP, and Beyond 273

| Going deeper – deep learning for | Quasi-experiments | 297 | |

|---|---|---|---|

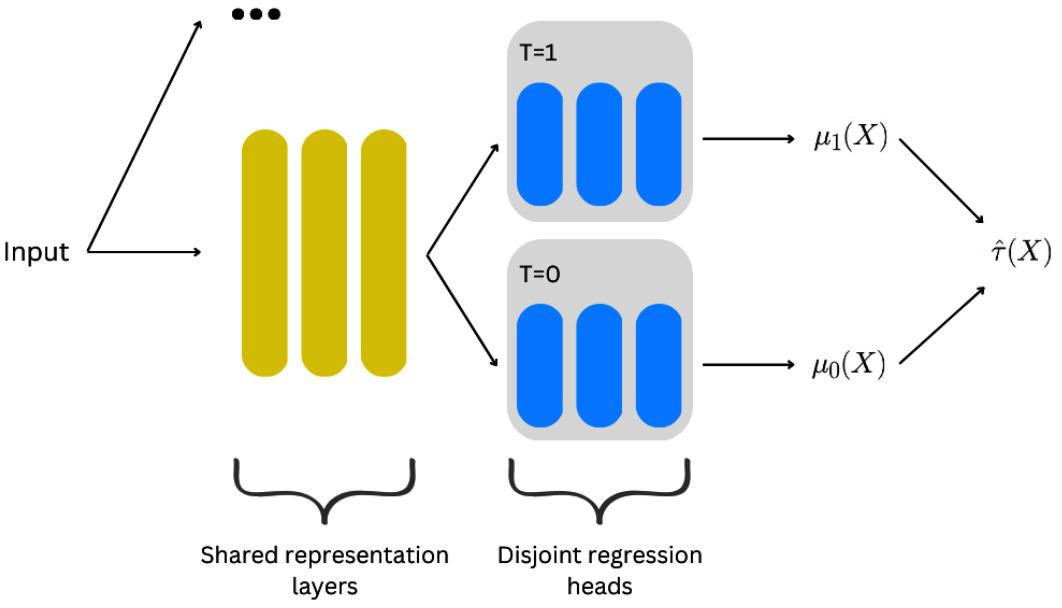

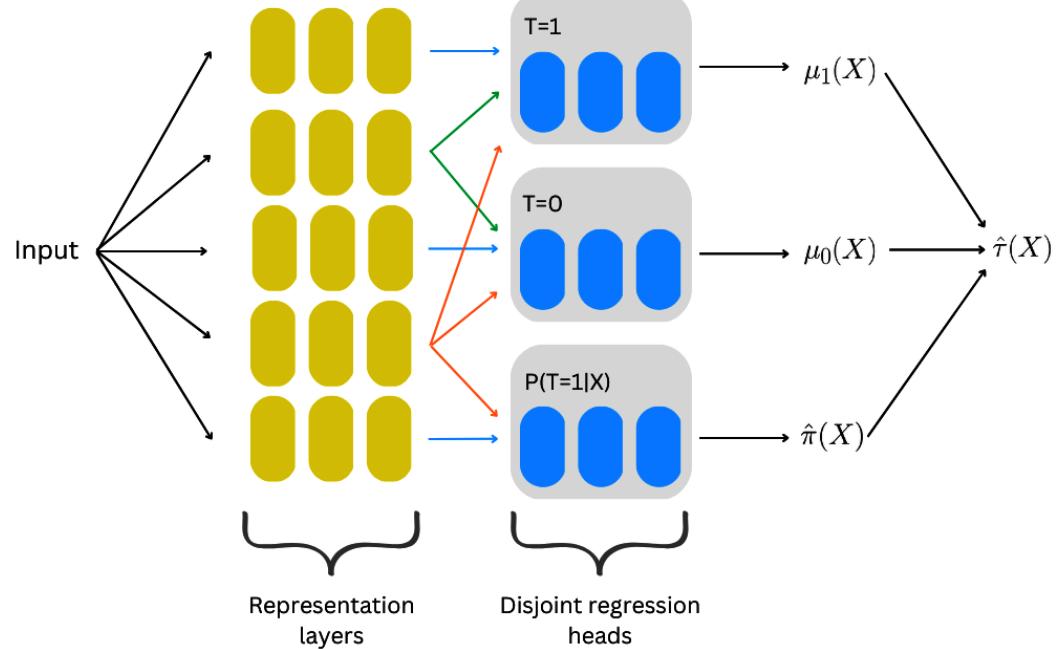

| heterogeneous treatment effects | 274 | Twitter acquisition and our | |

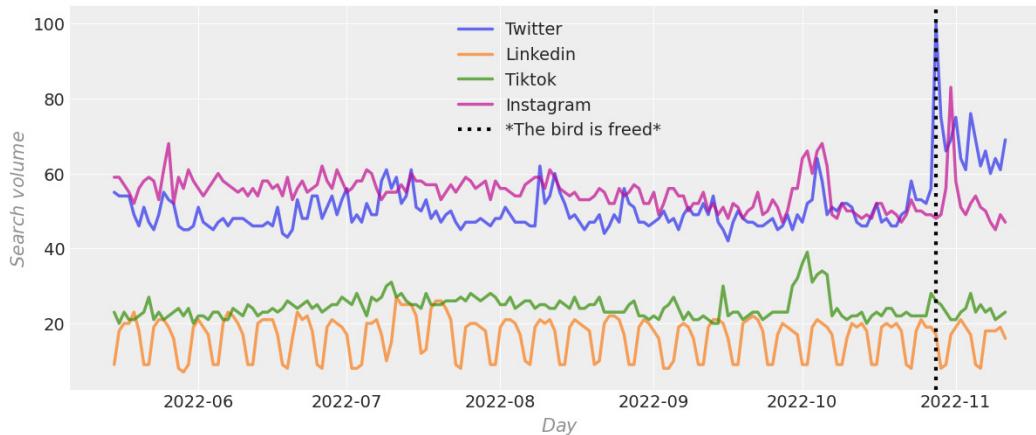

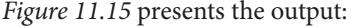

| CATE goes deeper | 274 | googling patterns | 298 |

| SNet | 276 | The logic of synthetic controls | 298 |

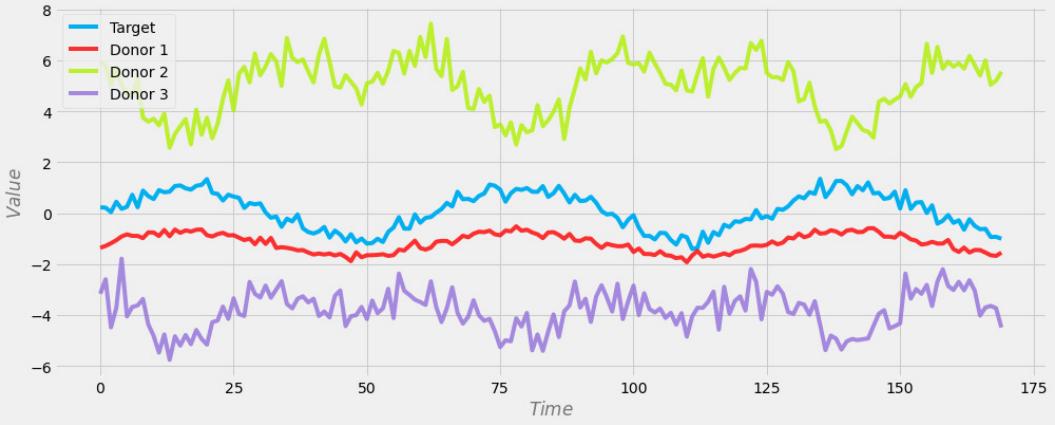

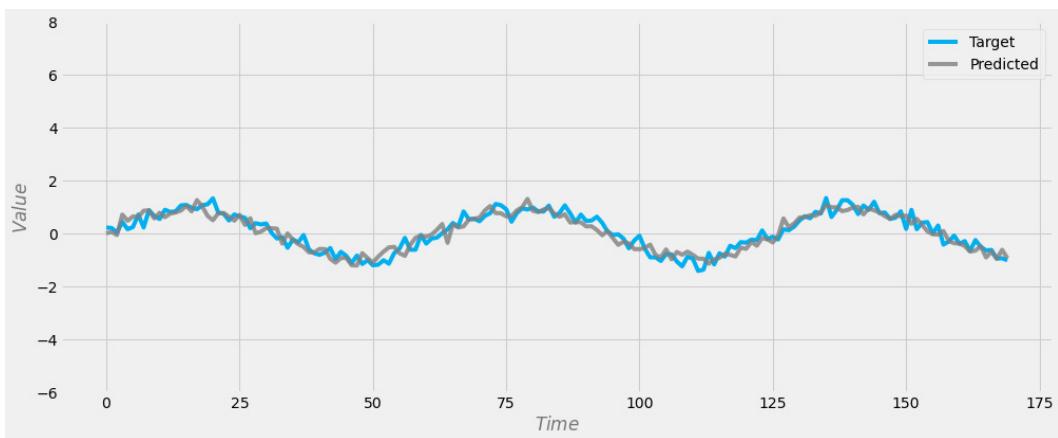

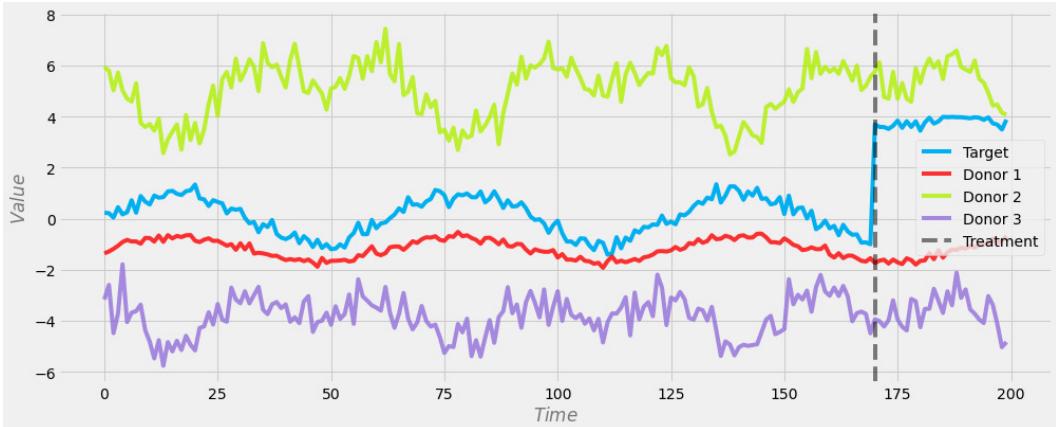

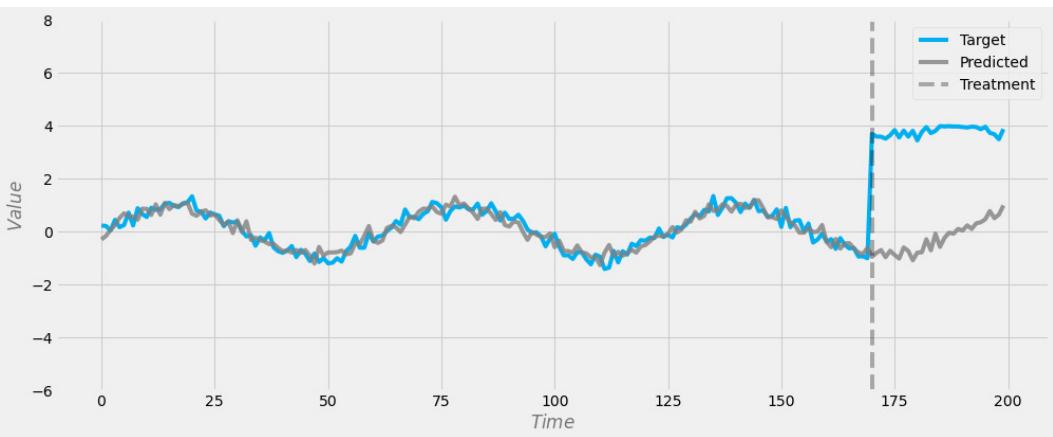

| Transformers and causal inference | 284 | A visual introduction to the logic of synthetic controls |

300 |

| The theory of meaning in five paragraphs | 285 | Starting with the data | 302 |

| Making computers understand language | 285 | Synthetic controls in code | 303 |

| From philosophy to Python code | 286 | Challenges | 308 |

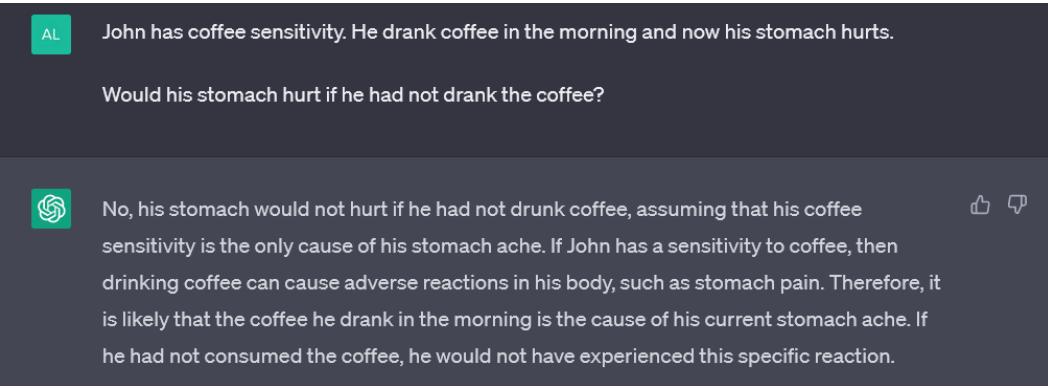

| LLMs and causality | 286 | ||

| The three scenarios | 288 | Wrapping it up | 309 |

| CausalBert | 292 | References | 309 |

| Causality and time series – when an | |||

| econometrician goes Bayesian | 297 |

Part 3: Causal Discovery

12

Can I Have a Causal Graph, Please? 315

| Sources of causal knowledge | 316 | From experiments to graphs | 321 |

|---|---|---|---|

| You and I, oversaturated | 316 | Simulations | 321 |

| The power of a surprise | 317 | Personal experience and domain | |

| Scientific insights | 317 | knowledge | 321 |

| The logic of science | 318 | Personal experiences | 322 |

| Hypotheses are a species | 318 | Domain knowledge | 323 |

| One logic, many ways | 319 | Causal structure learning | 323 |

| Controlled experiments | 319 | Wrapping it up | 324 |

| Randomized controlled trials (RCTs) | 320 | References | 324 |

13

Causal Discovery and Machine Learning – from Assumptions to Applications 327

| Causal discovery – assumptions | Visualizing the model | 336 | |

|---|---|---|---|

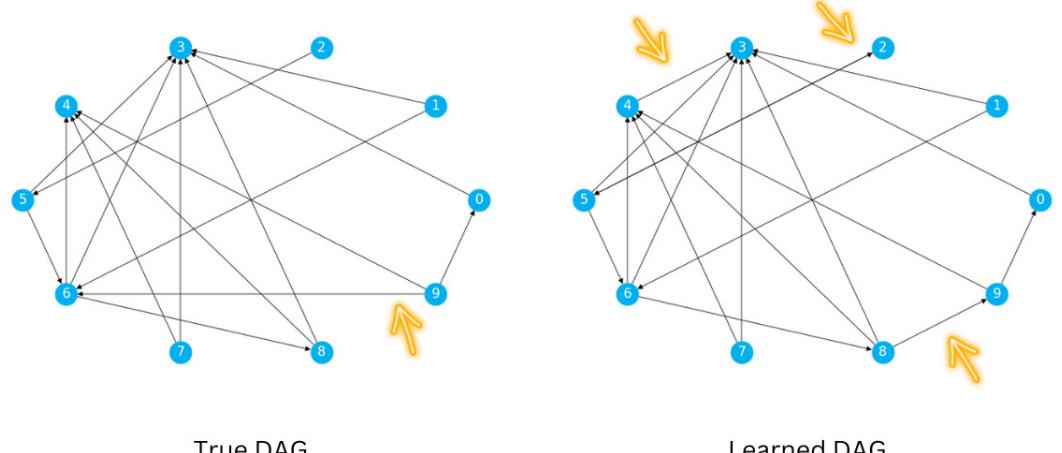

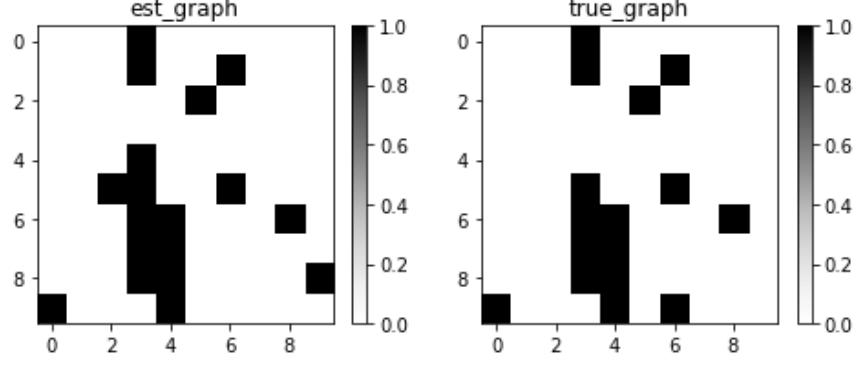

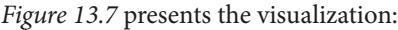

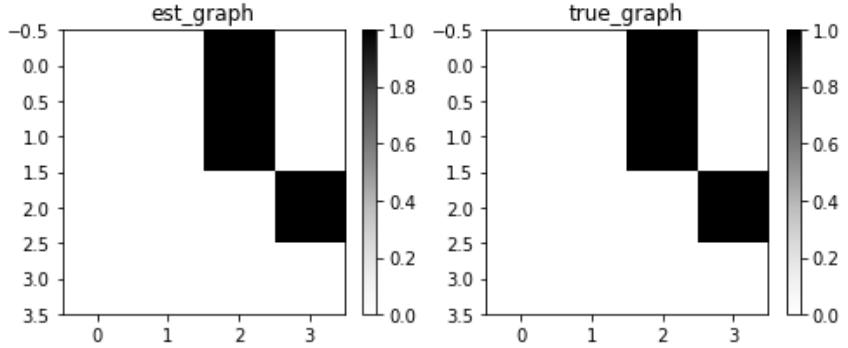

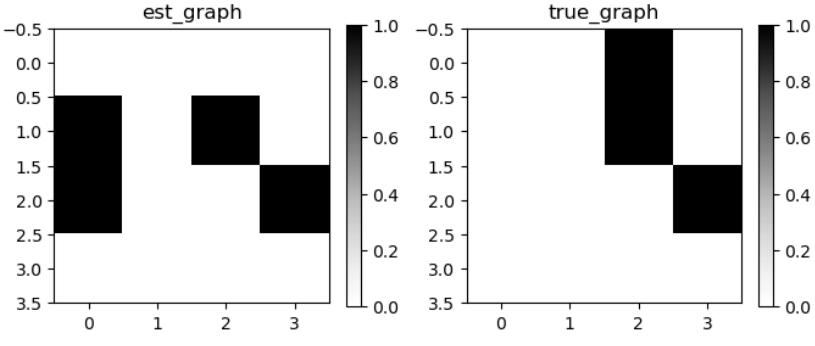

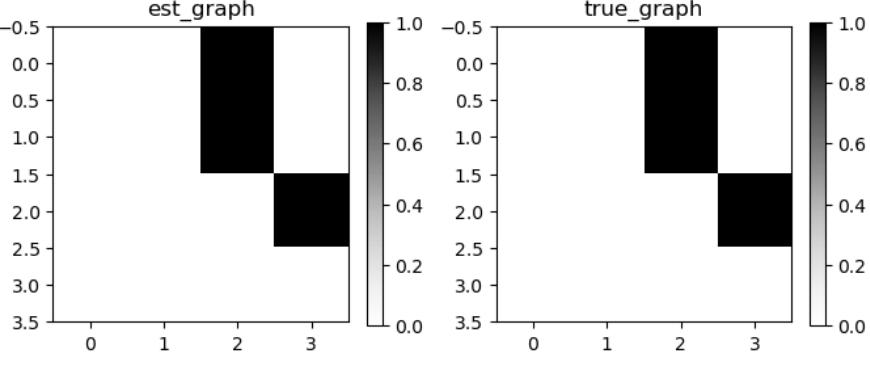

| refresher | 328 | Model evaluation metrics | 338 |

| Gearing up | 328 | Constraint-based causal discovery | 341 |

| Always trying to be faithful… | 328 | Constraints and independence | 341 |

| …but it’s difficult sometimes | 328 | ||

| Minimalism is a virtue | 329 | Leveraging the independence structure to recover the graph |

342 |

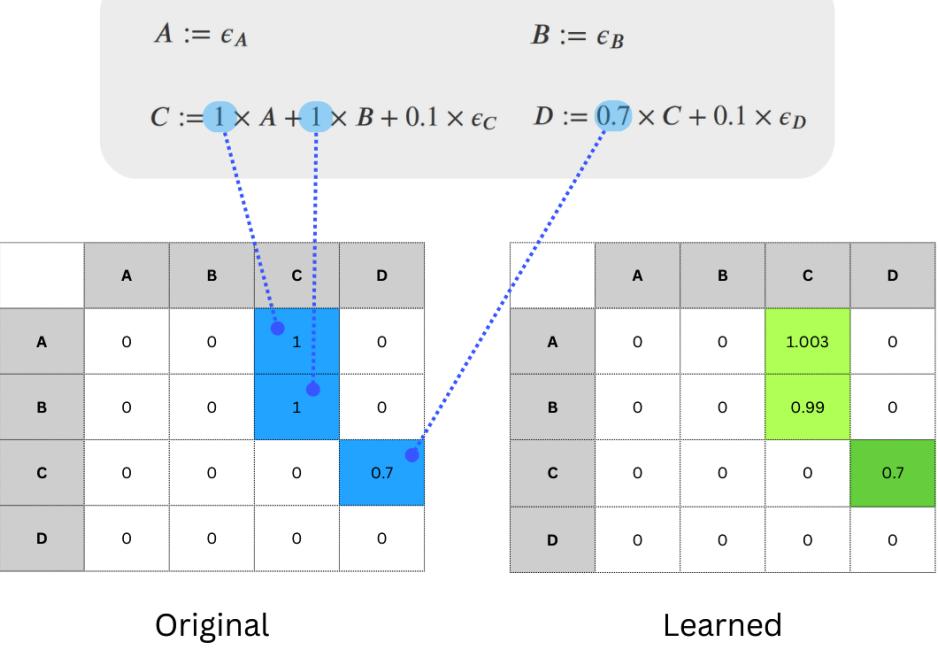

| The four (and a half) families | 329 | PC algorithm – hidden challenges | 345 |

| The four streams | 329 | PC algorithm for categorical data | 346 |

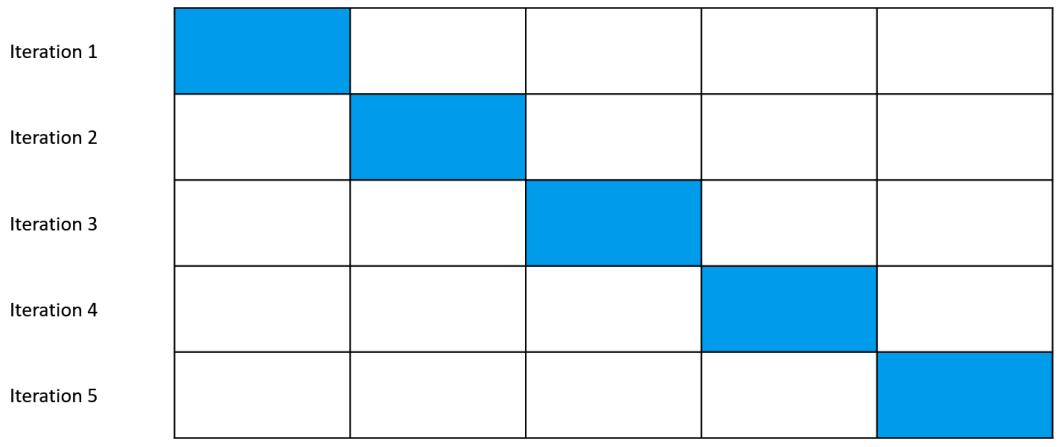

| Introduction to gCastle | 331 | Score-based causal discovery | 347 |

| Hello, gCastle! | 331 | Tabula rasa – starting fresh | 347 |

| Synthetic data in gCastle | 331 | GES – scoring | 347 |

| Fitting your first causal discovery model | 336 | GES in gCastle | 348 |

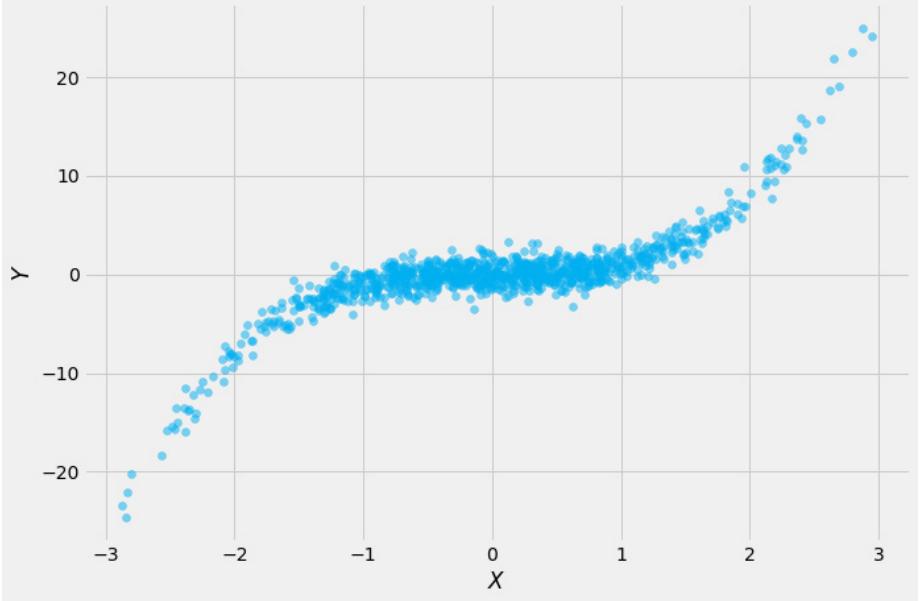

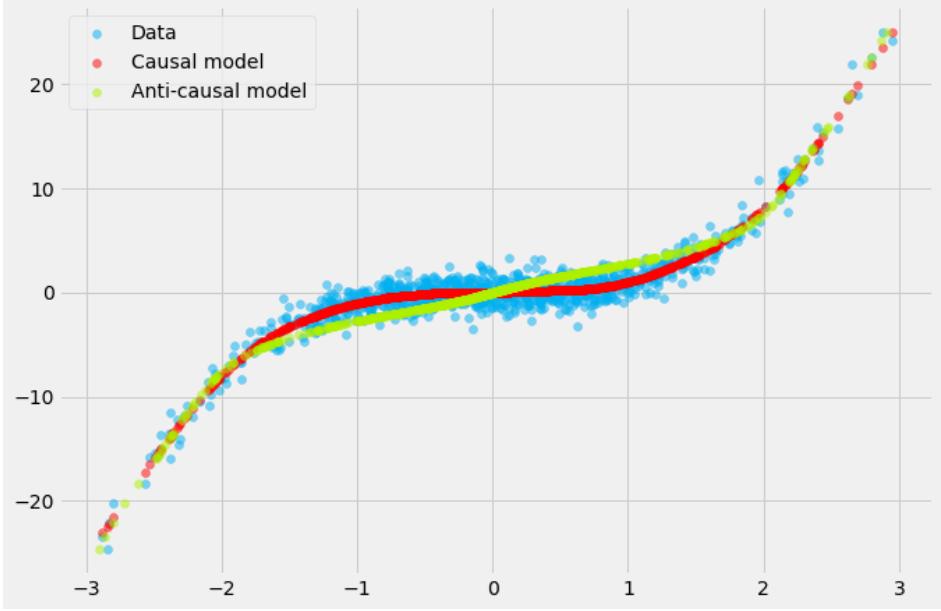

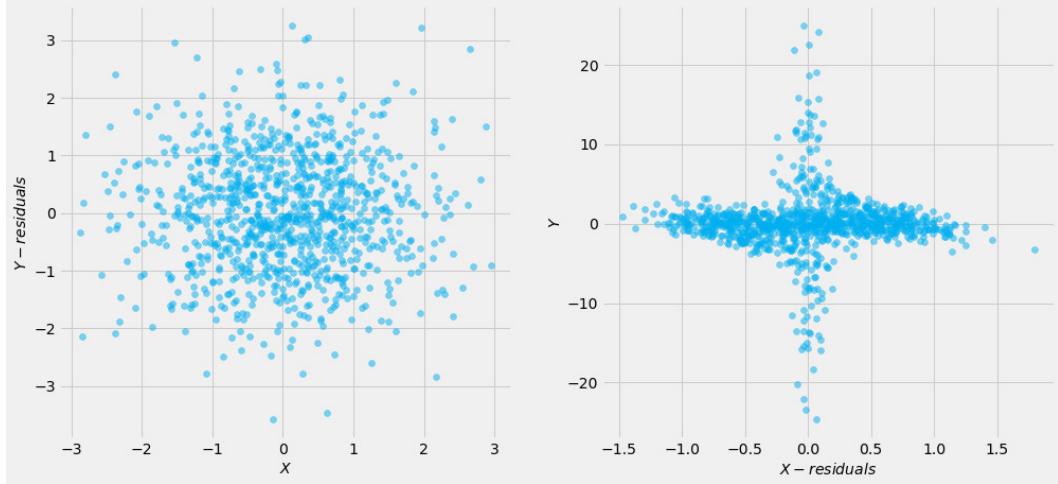

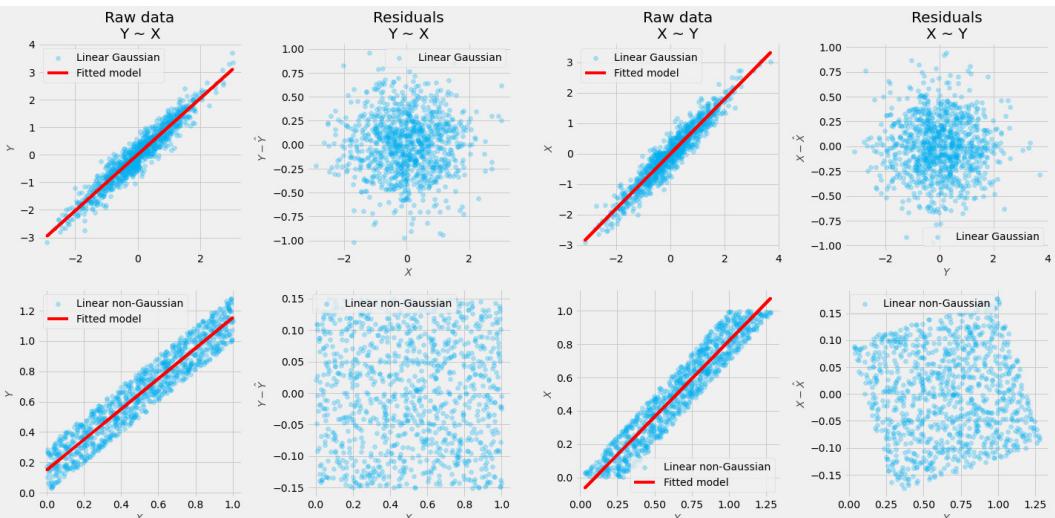

| Functional causal discovery | 349 | GOLEMs don’t cry | 363 |

|---|---|---|---|

| The blessings of asymmetry | 349 | The comparison | 363 |

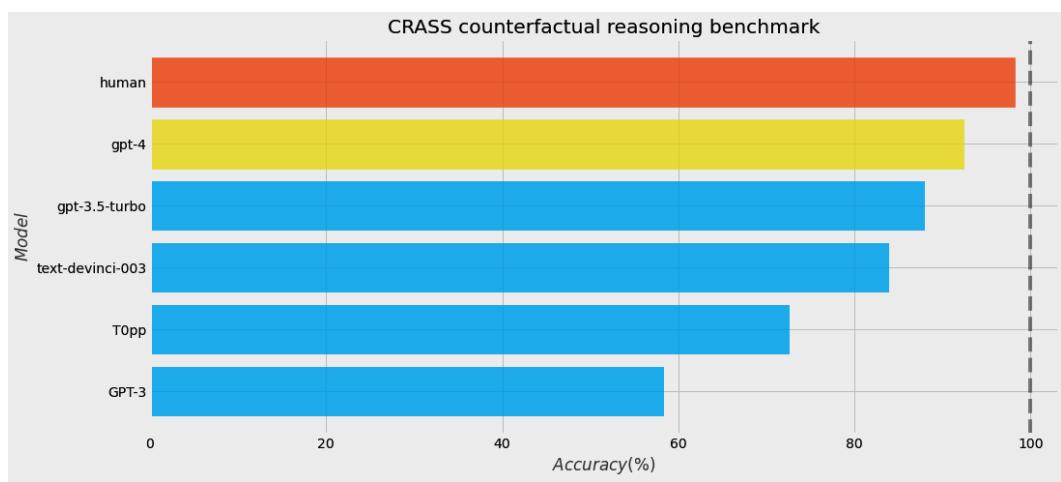

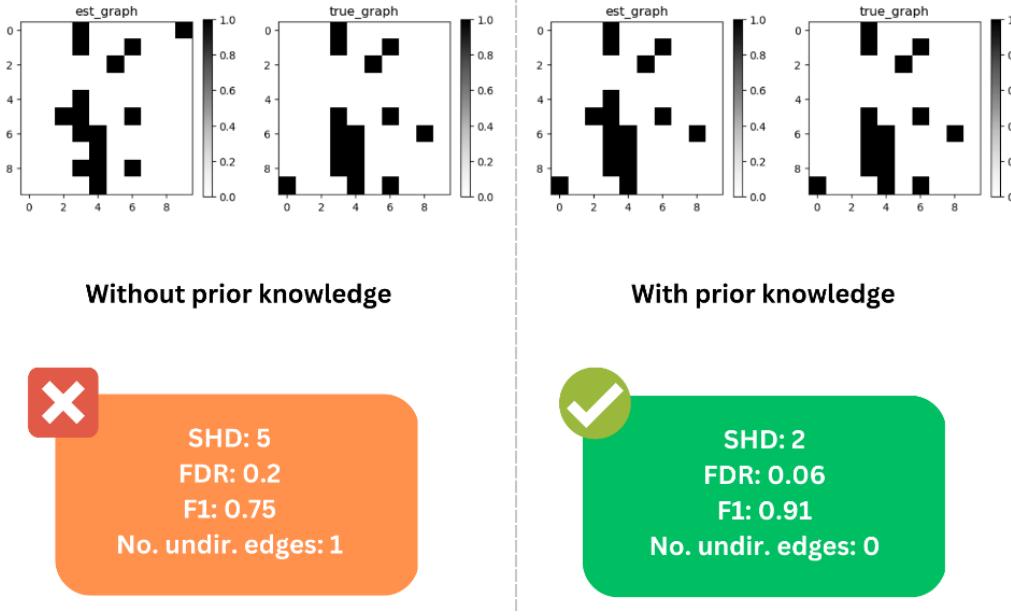

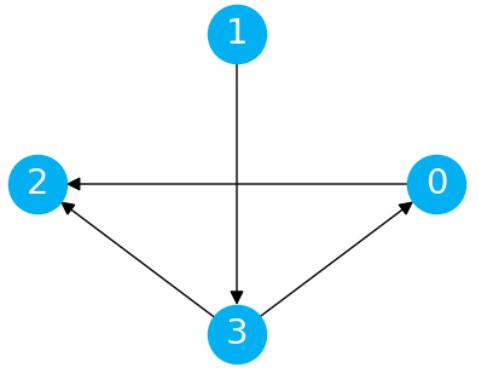

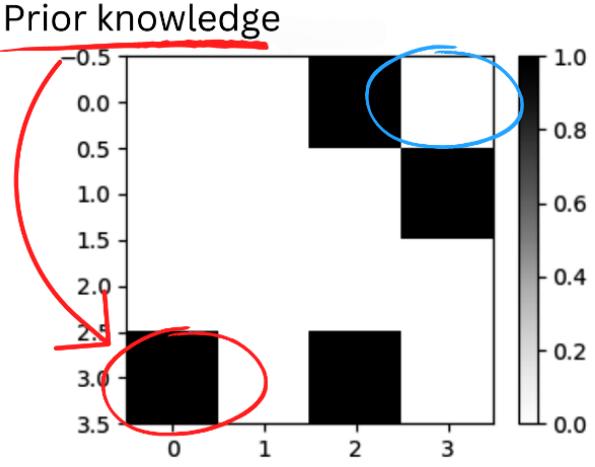

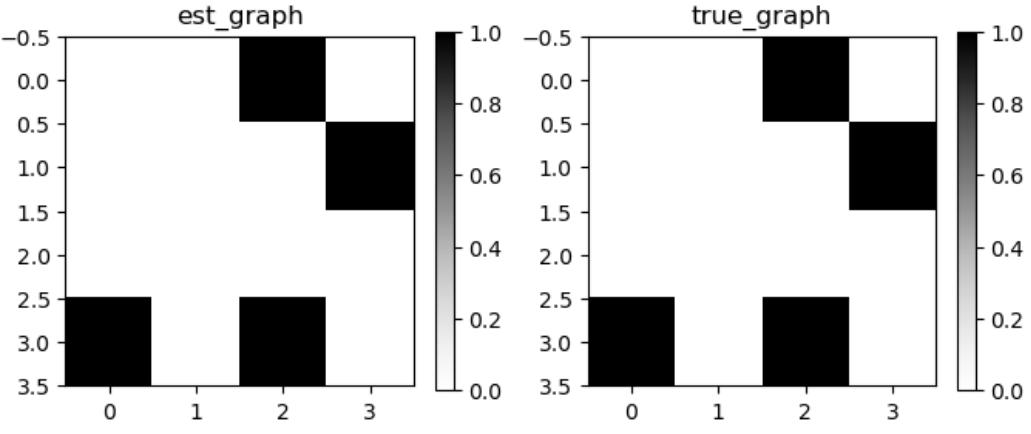

| ANM model | 350 | Encoding expert knowledge | 366 |

| Assessing independence | 353 | What is expert knowledge? | 366 |

| LiNGAM time | 355 | Expert knowledge in gCastle | 366 |

| Gradient-based causal discovery | 360 | Wrapping it up | 368 |

| What exactly is so gradient about you? | 360 | ||

| Shed no tears | 362 | References | 368 |

Causal Discovery and Machine Learning – Advanced Deep Learning and Beyond 371

Advanced causal discovery with deep learning 372 From generative models to causality 372 Looking back to learn who you are 373 DECI’s internal building blocks 373 DECI in code 375 DECI is end-to-end 387 Causal discovery under hidden confounding 387 The FCI algorithm 388 Other approaches to confounded data 392 Extra – going beyond observations 393 ENCO 393 ABCI 393 Causal discovery – real-world applications, challenges, and open problems 394 Wrapping it up! 395 References 396

15

| Epilogue | 399 | ||

|---|---|---|---|

| What we’ve learned in this book | 399 | Causality and business | 403 |

| Five steps to get the best out of your | How causal doers go from vision to | ||

| causal project | 400 | implementation | 403 |

| Starting with a question | 400 | Toward the future of causal ML | 405 |

| Obtaining expert knowledge | 401 | Where are we now and where | |

| Generating hypothetical graph(s) | 401 | are we heading? | 406 |

| Check identifiability | 402 | Causal benchmarks | 406 |

| Falsifying hypotheses | 402 | Causal data fusion | 407 |

| Other Books You May Enjoy | 426 | ||

|---|---|---|---|

| Index | 413 | ||

| Learning causality | 409 | ||

| Imitation learning | 408 | References | 411 |

| Causal structure learning | 408 | Wrapping it up | 411 |

| Intervening agents | 407 | Let’s stay in touch | 410 |

Preface

I wrote this book with a purpose in mind.

My journey to practical causality was an exciting but also challenging road.

Going from great theoretical books to implementing models in practice, and from translating assumptions to verifying them in real-world scenarios, demanded significant work.

I could not find unified, comprehensive resources that could be my guide through this journey.

This book is intended to be that guide.

This book provides a map that allows you to break into the world of causality.

We start with basic motivations behind causal thinking and a comprehensive introduction to Pearlian causal concepts: structural causal model, interventions, counterfactuals, and more.

Each concept comes with a theoretical explanation and a set of practical exercises accompanied by Python code.

Next, we dive into the world of causal effect estimation. Starting simple, we consistently progress toward modern machine learning methods. Step by step, we introduce the Python causal ecosystem and harness the power of cutting-edge algorithms.

In the last part of the book, we sneak into the secret world of causal discovery. We explore the mechanics of how causes leave traces and compare the main families of causal discovery algorithms to unravel the potential of end-to-end causal discovery and human-in-the-loop learning.

We close the book with a broad outlook into the future of causal AI. We examine challenges and opportunities and provide you with a comprehensive list of resources to learn more.

Who this book is for

The main audience I wrote this book for consists of machine learning engineers, data scientists, and machine learning researchers with three or more years of experience, who want to extend their data science toolkit and explore the new unchartered territory of causal machine learning.

People familiar with causality who have worked with another technology (e.g., R) and want to switch to Python can also benefit from this book, as well as people who have worked with traditional causality and want to expand their knowledge and tap into the potential of causal machine learning.

Finally, this book can benefit tech-savvy entrepreneurs who want to build a competitive edge for their products and go beyond the limitations of traditional machine learning.

What this book covers

Chapter 1, Causality: Hey, We Have Machine Learning, So Why Even Bother?, briefly discusses the history of causality and a number of motivating examples. This chapter introduces the notion of spuriousness and demonstrates that some classic definitions of causality do not capture important aspects of causal learning (which human babies know about). This chapter provides the basic distinction between statistical and causal learning, which is a cornerstone for the rest of the book.

Chapter 2, Judea Pearl and the Ladder of Causation, provides us with a definition of the Ladder of Causation – a crucial concept introduced by Judea Pearl that emphasizes the differences between observational, interventional, and counterfactual queries and distributions. We build on top of these ideas and translate them into concrete code examples. Finally, we briefly discuss how different families of machine learning (supervised, reinforcement, semi-, and unsupervised) relate to causal modeling.

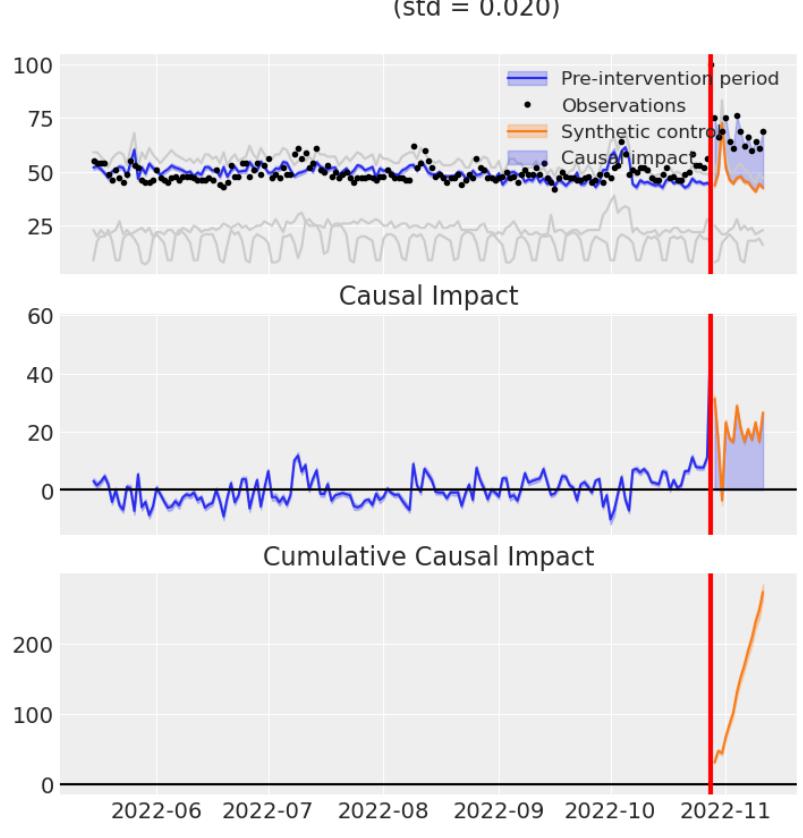

Chapter 3, Regression, Observations, and Interventions, prepares us to take a look at linear regression from a causal perspective. We analyze important properties of observational data and discuss the significance of these properties for causal reasoning. We re-evaluate the problem of statistical control through the causal lens and introduce structural causal models (SCMs). These topics help us build a strong foundation for the rest of the book.

Chapter 4, Graphical Models, starts with a refresher on graphs and basic graph theory. After refreshing the fundamental concepts, we use them to define directed acyclic graphs (DAGs) – one of the most crucial concepts in Pearlian causality. We briefly introduce the sources of causal graphs in the real world and touch upon causal models that are not easily describable using DAGs. This prepares us for Chapter 5.

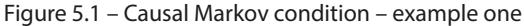

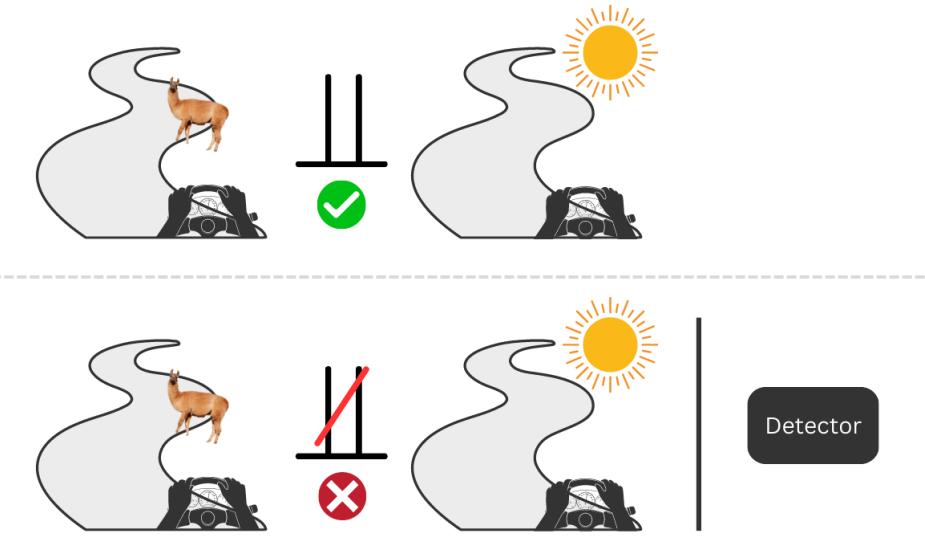

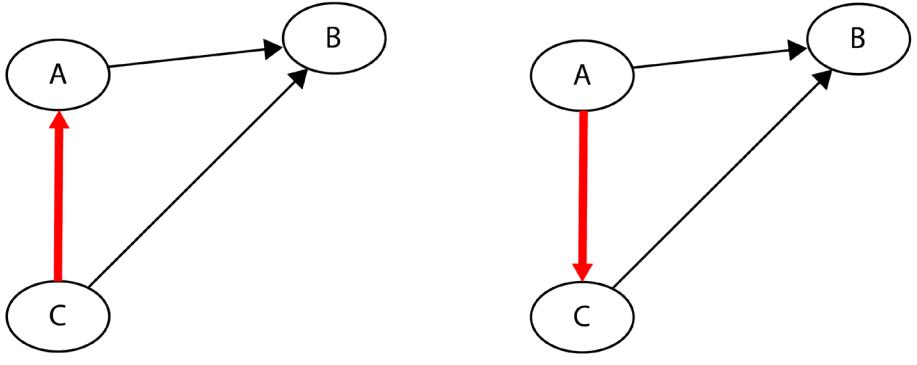

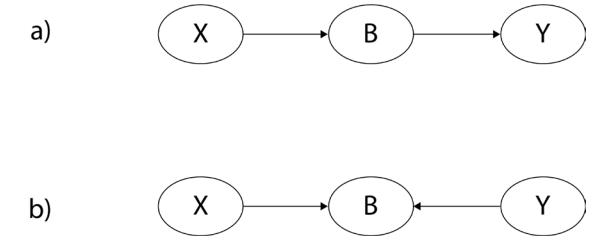

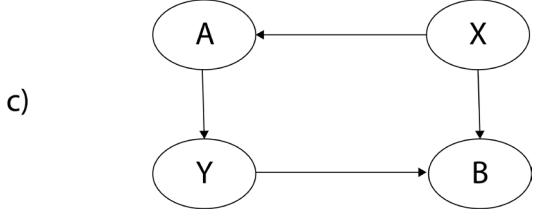

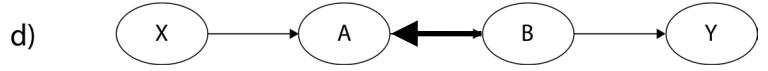

Chapter 5, Forks, Chains, and Immoralities, focuses on three basic graphical structures: forks, chains, and immoralities (also known as colliders). We learn about the crucial properties of these structures and demonstrate how these graphical concepts manifest themselves in the statistical properties of the data. The knowledge we gain in this chapter will be one of the fundamental building blocks of the concepts and techniques that we introduced in Part 2 and Part 3 of this book.

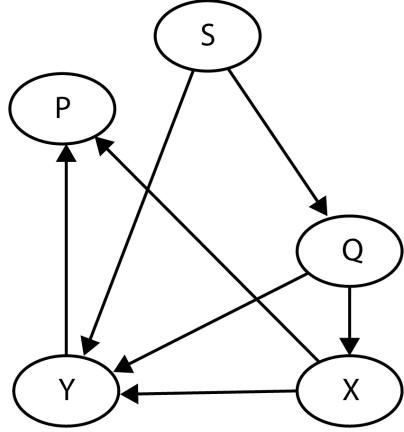

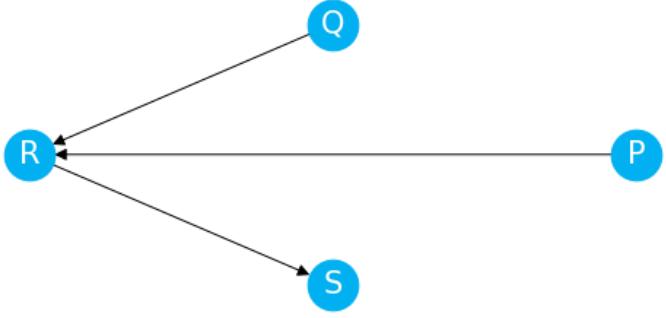

Chapter 6, Nodes, Edges, and Statistical (In)Dependence, builds on top of the concepts introduced in Chapter 5 and takes them a step further. We introduce the concept of d-separation, which will allow us to systematically evaluate conditional independence queries in DAGs, and define the notion of estimand. Finally, we discuss three popular estimands and the conditions under which they can be applied.

Chapter 7, The Four-Step Process of Causal Inference, takes us to the practical side of causality. We introduce DoWhy – an open source causal inference library created by researchers from Microsoft – and show how to carry out a full causal inference process using its intuitive APIs. We demonstrate how to define a causal model, find a relevant estimand, estimate causal effects, and perform refutation tests.

Chapter 8, Causal Models – Assumptions and Challenges, brings our attention back to the topic of assumptions. Assumptions are a crucial and indispensable part of any causal project or analysis. In this chapter, we take a broader view and discuss the most important assumptions from the point of view of two causal formalisms: the Pearlian (graph-based) framework and the potential outcomes framework.

Chapter 9, Causal Inference and Machine Learning – from Matching to Meta-learners, opens the door to causal estimation beyond simple linear models. We start by introducing the ideas behind matching and propensity scores and discussing why propensity scores should not be used for matching. We introduce meta-learners – a class of models that can be used for the estimation of conditional average treatment effects (CATEs) and implement them using DoWhy and EconML packages.

Chapter 10, Causal Inference and Machine Learning – Advanced Estimators, Experiments, Evaluations, and More, introduces more advanced estimators: DR-Learner, double machine learning (DML), and causal forest. We show how to use CATE estimators with experimental data and introduce a number of useful evaluation metrics that can be applied in real-world scenarios. We conclude the chapter with a brief discussion of counterfactual explanations.

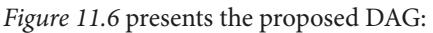

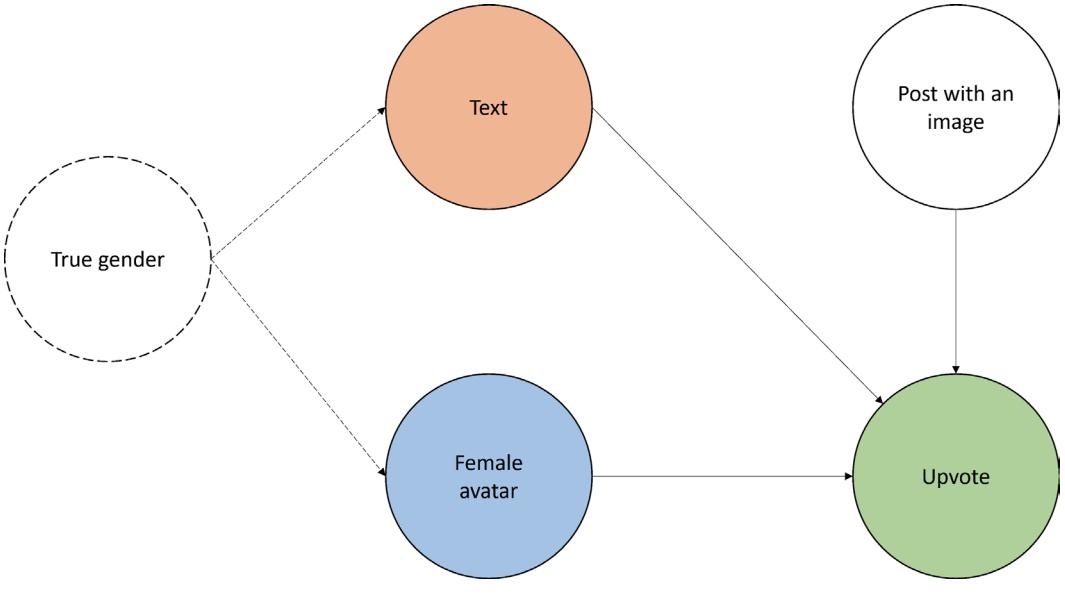

Chapter 11, Causal Inference and Machine Learning – Deep Learning, NLP, and Beyond, introduces deep learning models for CATE estimation and a PyTorch-based CATENets library. In the second part of the chapter, we take a look at the intersection of causal inference and NLP and introduce CausalBert – a Transformer-based model that can be used to remove spurious relationships present in textual data. We close the chapter with an introduction to the synthetic control estimator, which we use to estimate causal effects in real-world data.

Chapter 12, Can I Have a Causal Graph, Please?, provides us with a deeper look at the real-world sources of causal knowledge and introduces us to the concept of automated causal discovery. We discuss the idea of expert knowledge and its value in the process of causal analysis.

Chapter 13, Causal Discovery and Machine Learning – from Assumptions to Applications, starts with a review of assumptions required by some of the popular causal discovery algorithms. We introduce four main families of causal discovery methods and implement key algorithms using the gCastle library, addressing some of the important challenges on the way. Finally, we demonstrate how to encode expert knowledge when working with selected methods.

Chapter 14, Causal Discovery and Machine Learning – Advanced Deep Learning and Beyond, introduces an advanced causal discovery algorithm – DECI. We implement it using the modules coming from an open source Microsoft library, Causica, and train it using PyTorch. We present methods that allow us to work with datasets with hidden confounding and implement one of them – fast causal inference (FCI) – using the causal-learn library. Finally, we briefly discuss two frameworks that allow us to combine observational and interventional data in order to make causal discovery more efficient and less error-prone.

Chapter 15, Epilogue, closes Part 3 of the book with a summary of what we’ve learned, a discussion of causality in business, a sneak peek into the (potential) future of the field, and pointers to more resources on causal inference and discovery for those who are ready to continue their causal journey.

To get the most out of this book

The code for this book is provided in the form of Jupyter notebooks. To run the notebooks, you’ll need to install the required packages.

The easiest way to install them is using Conda. Conda is a great package manager for Python. If you don’t have Conda installed on your system, the installation instructions can be found here: https:// bit.ly/InstallConda.

Note that Conda’s license might have some restrictions for commercial use. After installing Conda, follow the environment installation instructions in the book’s repository README.md file (https:// bit.ly/InstallEnvironments).

If you want to recreate some of the plots from the book, you might need to additionally install Graphviz. For GPU acceleration, CUDA drivers might be needed. Instructions and requirements for Graphviz and CUDA are available in the same README.md file in the repository (https://bit. ly/InstallEnvironments).

| Software/hardware covered in the book | Operating system requirements |

|---|---|

| Python 3.9 | Windows, macOS, or Linux |

| DoWhy 0.8 | Windows, macOS, or Linux |

| EconML 0.12.0 | Windows, macOS, or Linux |

| CATENets 0.2.3 | Windows, macOS, or Linux |

| gCastle 1.0.3 | Windows, macOS, or Linux |

| Causica 0.2.0 | Windows, macOS, or Linux |

| Causal-learn 0.1.3.3 | Windows, macOS, or Linux |

| Transformers 4.24.0 | Windows, macOS, or Linux |

The code for this book has been only tested on Windows 11 (64-bit).

Download the example code files

You can download the example code files for this book from GitHub at https://github.com/ PacktPublishing/Causal-Inference-and-Discovery-in-Python. If there’s an update to the code, it will be updated in the GitHub repository.

We also have other code bundles from our rich catalog of books and videos available at https:// github.com/PacktPublishing/. Check them out!

Conventions used

There are a number of text conventions used throughout this book.

Code in text: Indicates code words in text, database table names, folder names, filenames, file extensions, pathnames, dummy URLs, user input, and Twitter handles. Here is an example: “We’ll model the adjacency matrix using the ENCOAdjacencyDistributionModule object.”

A block of code is set as follows:

preds = causal_bert.inference(

texts=df['text'],

confounds=df['has_photo'],

)[0]Any command-line input or output is written as follows:

$ mkdir css

$ cd cssBold: Indicates a new term, an important word, or words that you see onscreen. For instance, words in menus or dialog boxes appear in bold. Here is an example: “Select System info from the Administration panel.”

Tips or important notes

Appear like this.Get in touch

Feedback from our readers is always welcome.

General feedback: If you have questions about any aspect of this book, email us at customercare@ packtpub.com and mention the book title in the subject of your message.

Errata: Although we have taken every care to ensure the accuracy of our content, mistakes do happen. If you have found a mistake in this book, we would be grateful if you would report this to us. Please visit www.packtpub.com/support/errata and fill in the form.

Piracy: If you come across any illegal copies of our works in any form on the internet, we would be grateful if you would provide us with the location address or website name. Please contact us at copyright@packt.com with a link to the material.

If you are interested in becoming an author: If there is a topic that you have expertise in and you are interested in either writing or contributing to a book, please visit authors.packtpub.com.

Download a free PDF copy of this book

Thanks for purchasing this book!

Do you like to read on the go but are unable to carry your print books everywhere? Is your eBook purchase not compatible with the device of your choice?

Don’t worry, now with every Packt book you get a DRM-free PDF version of that book at no cost.

Read anywhere, any place, on any device. Search, copy, and paste code from your favorite technical books directly into your application.

The perks don’t stop there, you can get exclusive access to discounts, newsletters, and great free content in your inbox daily

Follow these simple steps to get the benefits:

- Scan the QR code or visit the link below

https://packt.link/free-ebook/9781804612989

- Submit your proof of purchase

- That’s it! We’ll send your free PDF and other benefits to your email directly

Part 1: Causality – an Introduction

Part 1 of this book will equip us with a set of tools necessary to understand and tackle the challenges of causal inference and causal discovery.

We’ll learn about the differences between observational, interventional, and counterfactual queries and distributions. We’ll demonstrate connections between linear regression, graphs, and causal models.

Finally, we’ll learn about the important properties of graphical structures that play an essential role in almost any causal endeavor.

This part comprises the following chapters:

- Chapter 1, Causality Hey, We Have Machine Learning, So Why Even Bother?

- Chapter 2, Judea Pearl and the Ladder of Causation

- Chapter 3, Regression, Observations, and Interventions

- Chapter 4, Graphical Models

- Chapter 5, Forks, Chains, and Immoralities

1 Causality – Hey, We Have Machine Learning, So Why Even Bother?

Our journey starts here.

In this chapter, we’ll ask a couple of questions about causality.

What is it? Is causal inference different from statistical inference? If so – how?

Do we need causality at all if machine learning seems good enough?

If you have been following the fast-changing machine learning landscape over the last 5 to 10 years, you have likely noticed many examples of – as we like to call it in the machine learning community – the unreasonable effectiveness of modern machine learning algorithms in computer vision, natural language processing, and other areas.

Algorithms such as DALL-E 2 or GPT-3/4 made it not only to the consciousness of the research community but also the general public.

You might ask yourself – if all this stuff works so well, why would we bother and look into something else?

We’ll start this chapter with a brief discussion of the history of causality. Next, we’ll consider a couple of motivations for using a causal rather than purely statistical approach to modeling and we’ll introduce the concept of confounding.

Finally, we’ll see examples of how a causal approach can help us solve challenges in marketing and medicine. By the end of this chapter, you will have a good idea of why and when causal inference can be useful. You’ll be able to explain what confounding is and why it’s important.

In this chapter, we will cover the following:

- A brief history of causality

- Motivations to use a causal approach to modeling

- How not to lose money… and human lives

A brief history of causality

Causality has a long history and has been addressed by most, if not all, advanced cultures that we know about. Aristotle – one of the most prolific philosophers of ancient Greece – claimed that understanding the causal structure of a process is a necessary ingredient of knowledge about this process. Moreover, he argued that being able to answer why-type questions is the essence of scientific explanation (Falcon, 2006; 2022). Aristotle distinguishes four types of causes (material, formal, efficient, and final), an idea that might capture some interesting aspects of reality as much as it might sound counterintuitive to a contemporary reader.

David Hume, a famous 18th-century Scottish philosopher, proposed a more unified framework for cause-effect relationships. Hume starts with an observation that we never observe cause-effect relationships in the world. The only thing we experience is that some events are conjoined:

“We only find, that the one does actually, in fact, follow the other. The impulse of one billiard-ball is attended with motion in the second. This is the whole that appears to the outward senses. The mind feels no sentiment or inward impression from this succession of objects: consequently, there is not, in any single, particular instance of cause and effect, any thing which can suggest the idea of power or necessary connexion” (original spelling; Hume & Millican, 2007; originally published in 1739).

One interpretation of Hume’s theory of causality (here simplified for clarity) is the following:

- We only observe how the movement or appearance of object A precedes the movement or appearance of object B

- If we experience such a succession a sufficient number of times, we’ll develop a feeling of expectation

- This feeling of expectation is the essence of our concept of causality (it’s not about the world; it’s about a feeling we develop)

Hume’s theory of causality

The interpretation of Hume’s theory of causality that we give here is not the only one. First, Hume presented another definition of causality in his later work An Enquiry Concerning the Human Understanding (1758). Second, not all scholars would necessarily agree with our interpretation (for example, Archie (2005)).

This theory is very interesting from at least two points of view.

First, elements of this theory have a high resemblance to a very powerful idea in psychology called conditioning. Conditioning is a form of learning. There are multiple types of conditioning, but they all rely on a common foundation – namely, association (hence the name for this type of learning – associative learning). In any type of conditioning, we take some event or object (usually called stimulus) and associate it with some behavior or reaction. Associative learning works across species. You can find it in humans, apes, dogs, and cats, but also in much simpler organisms such as snails (Alexander, Audesirk & Audesirk, 1985).

Conditioning

If you want to learn more about different types of conditioning, check this https://bit. ly/MoreOnConditioning or search for phrases such as classical conditioning versus operant conditioning and names such as Ivan Pavlov and Burrhus Skinner, respectively.

Second, most classic machine learning algorithms also work on the basis of association. When we’re training a neural network in a supervised fashion, we’re trying to find a function that maps input to the output. To do it efficiently, we need to figure out which elements of the input are useful for predicting the output. And, in most cases, association is just good enough for this purpose.

Why causality? Ask babies!

Is there anything missing from Hume’s theory of causation? Although many other philosophers tried to answer this question, we’ll focus on one particularly interesting answer that comes from… human babies.

Interacting with the world

Alison Gopnik is an American child psychologist who studies how babies develop their world models. She also works with computer scientists, helping them understand how human babies build commonsense concepts about the external world. Children – to an even greater extent than adults – make use of associative learning, but they are also insatiable experimenters.

Have you ever seen a parent trying to convince their child to stop throwing around a toy? Some parents tend to interpret this type of behavior as rude, destructive, or aggressive, but babies often have a different set of motivations. They are running systematic experiments that allow them to learn the laws of physics and the rules of social interactions (Gopnik, 2009). Infants as young as 11 months prefer to perform experiments with objects that display unpredictable properties (for example, can pass through a wall) than with objects that behave predictably (Stahl & Feigenson, 2015). This preference allows them to efficiently build models of the world.

What we can learn from babies is that we’re not limited to observing the world, as Hume suggested. We can also interact with it. In the context of causal inference, these interactions are called interventions, and we’ll learn more about them in Chapter 2. Interventions are at the core of what many consider the Holy Grail of the scientific method: randomized controlled trial, or RCT for short.

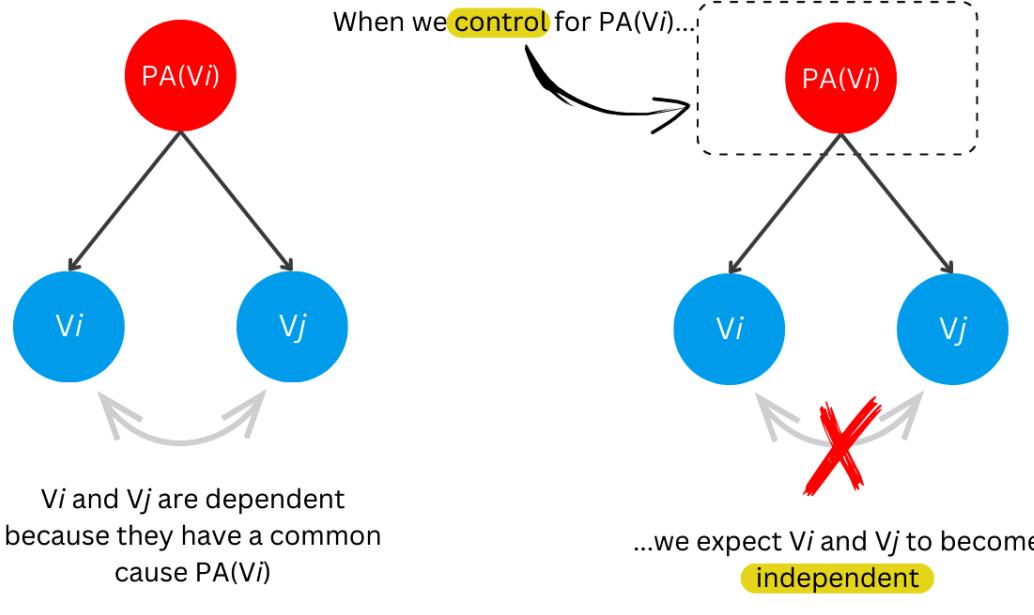

Confounding – relationships that are not real

The fact that we can run experiments enhances our palette of possibilities beyond what Hume thought about. This is very powerful! Although experiments cannot solve all of the philosophical problems related to gaining new knowledge, they can solve some of them. A very important aspect of a properly designed randomized experiment is that it allows us to avoid confounding. Why is it important?

A confounding variable influences two or more other variables and produces a spurious association between them. From a purely statistical point of view, such associations are indistinguishable from the ones produced by a causal mechanism. Why is that problematic? Let’s see an example.

Imagine you work at a research institute and you’re trying to understand the causes of people drowning. Your organization provides you with a huge database of socioeconomic variables. You decide to run a regression model over a large set of these variables to predict the number of drownings per day in your area of interest. When you check the results, it turns out that the biggest coefficient you obtained is for daily regional ice cream sales. Interesting! Ice cream usually contains large amounts of sugar, so maybe sugar affects people’s attention or physical performance while they are in the water.

This hypothesis might make sense, but before we move forward, let’s ask some questions. How about other variables that we did not include in the model? Did we add enough predictors to the model to describe all relevant aspects of the problem? What if we added too many of them? Could adding just one variable to the model completely change the outcome?

Adding too many predictors

Adding too many predictors to the model might be harmful from both statistical and causal points of view. We will learn more on this topic in Chapter 3.

It turns out that this is possible.

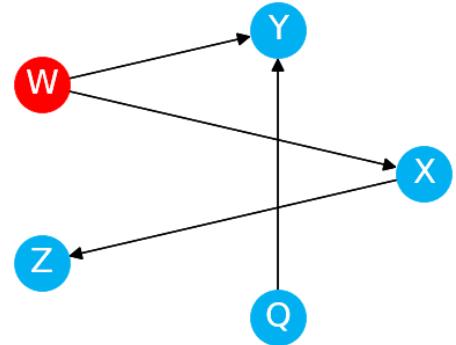

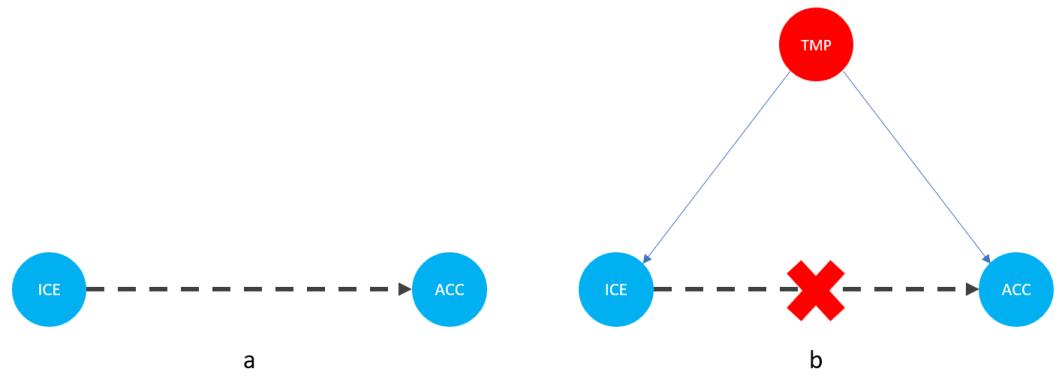

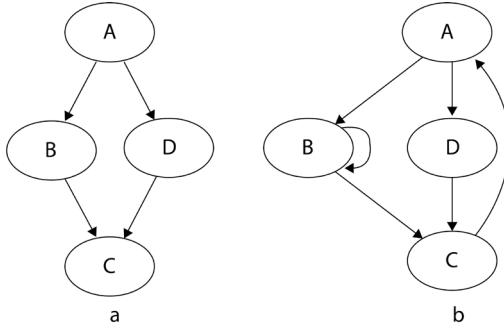

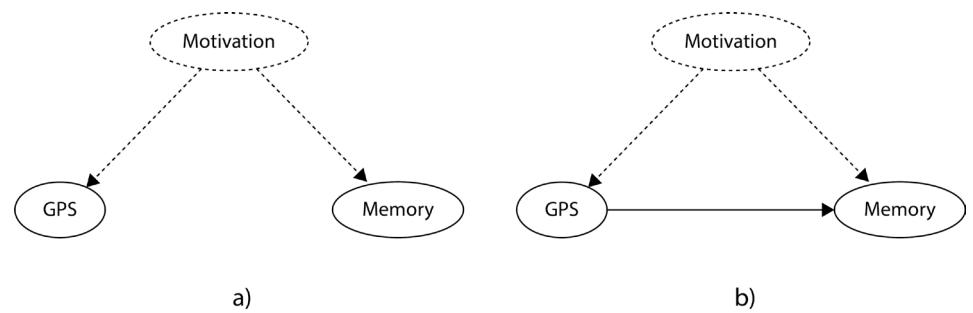

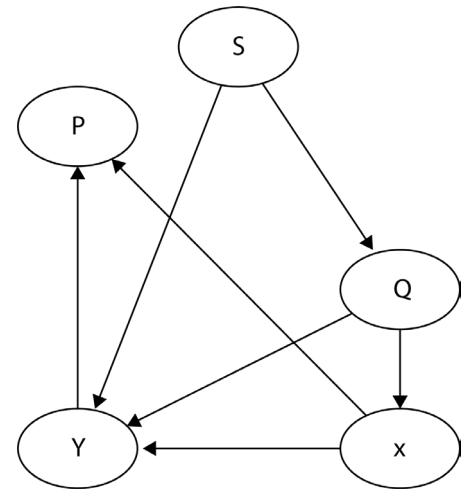

Let me introduce you to daily average temperature – our confounder. Higher daily temperature makes people more likely to buy ice cream and more likely to go swimming. When there are more people swimming, there are also more accidents. Let’s try to visualize this relationship:

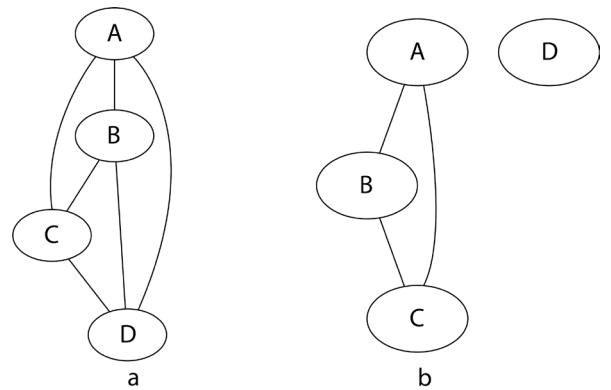

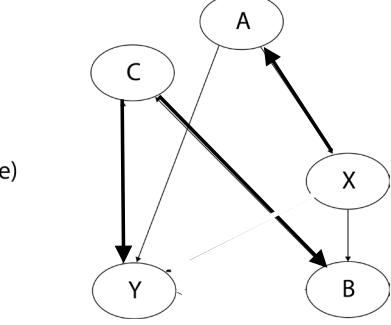

Figure 1.1 – Graphical representation of models with two (a) and three variables (b). Dashed lines represent the association, solid lines represent causation. ICE = ice cream sales, ACC = the number of accidents, and TMP = temperature.

In Figure 1.1, we can see that adding the average daily temperature to the model removes the relationship between regional ice cream sales and daily drownings. Depending on your background, this might or might not be surprising to you. We’ll learn more about the mechanism behind this effect in Chapter 3.

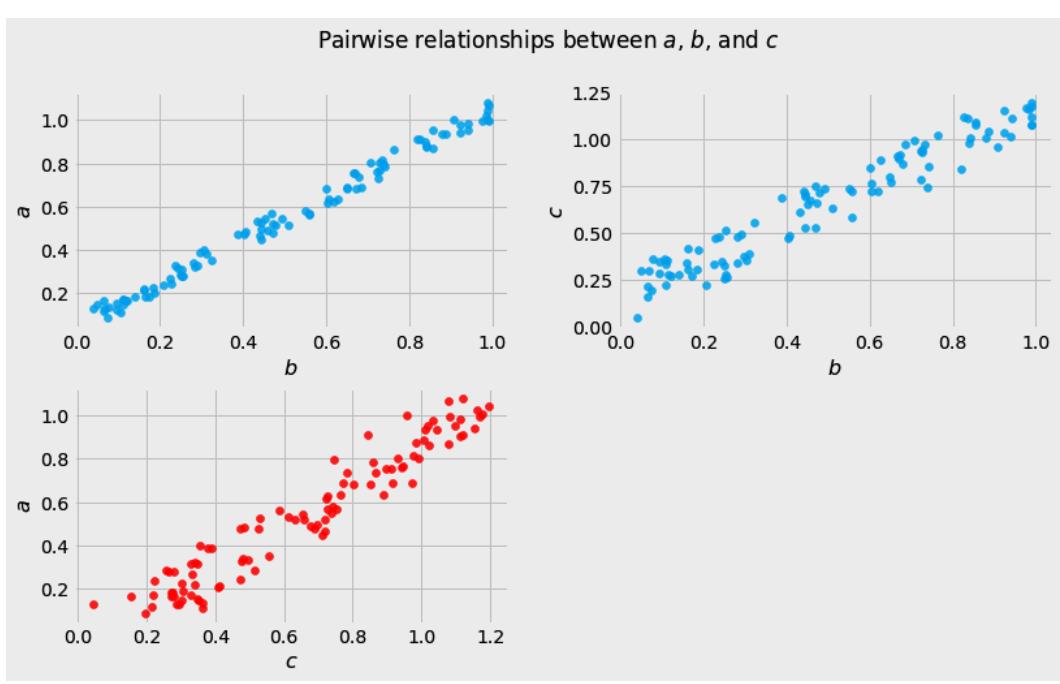

Before we move further, we need to state one important thing explicitly: confounding is a strictly causal concept. What does it mean? It means that we’re not able to say much about confounding using purely statistical language (note that this means that Hume’s definition as we presented it here cannot capture it). To see this clearly, let’s look at Figure 1.2:

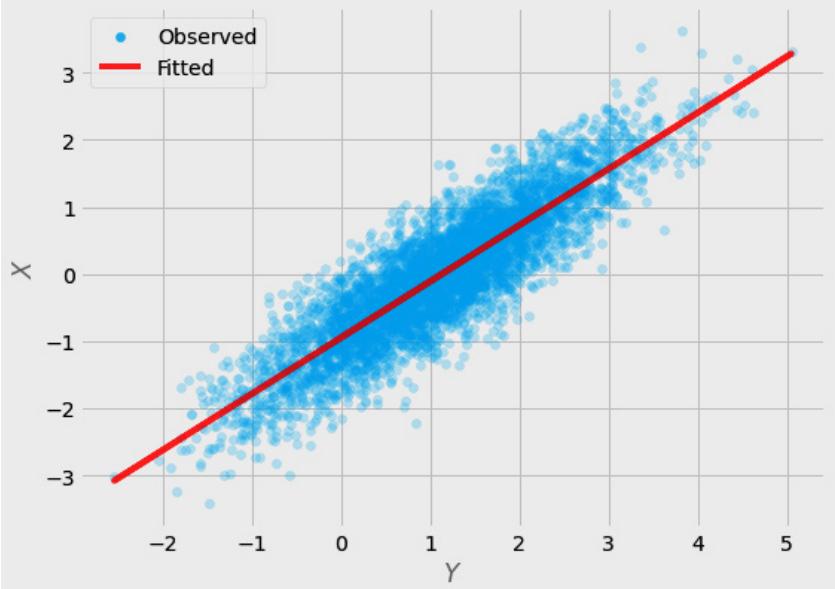

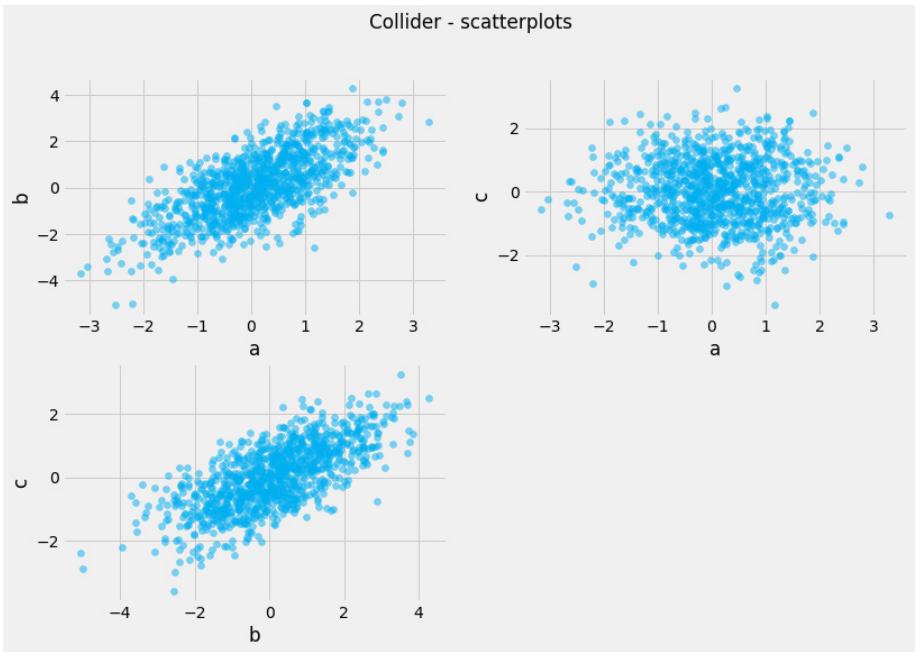

Figure 1.2 – Pairwise scatterplots of relations between a, b, and c. The code to recreate the preceding plot can be found in the Chapter_01.ipynb notebook (https://github.com/PacktPublishing/Causal-Inferenceand-Discovery-in-Python/blob/main/Chapter\_01.ipynb).

In Figure 1.2, blue points signify a causal relationship while red points signify a spurious relationship, and variables a, b, and c are related in the following way:

- b causes a and c

- a and c are causally independent

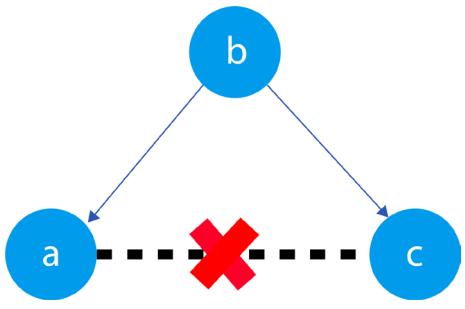

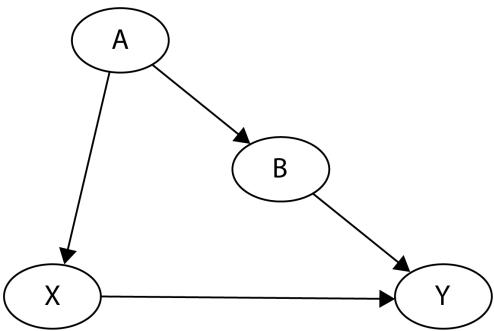

Figure 1.3 presents a graphical representation of these relationships:

Figure 1.3 – Relationships between a, b, and c

The black dashed line with the red cross denotes that there is no causal relationship between a and c in any direction.

Hey, but in Figure 1.2 we see some relationship! Let’s unpack it!

In Figure 1.2, non-spurious (blue) and spurious (red) relationships look pretty similar to each other and their correlation coefficients will be similarly large. In practice, most of the time, they just cannot be distinguished based on solely statistical criteria and we need causal knowledge to distinguish between them.

Asymmetries and causal discovery

If fact, in some cases, we can use noise distribution or functional asymmetries to find out which direction is causal. This information can be leveraged to recover causal structure from observational data, but it also requires some assumptions about the data-generating process. We’ll learn more about this in Part 3, Causal Discovery (Chapter 13).

Okay, we said that there are some spurious relationships in our data; we added another variable to the model and it changed the model’s outcome. That said, I was still able to make useful predictions without this variable. If that’s true, why would I care whether the relationship is spurious or non-spurious? Why would I care whether the relationship is causal or not?

How not to lose money… and human lives

We learned that randomized experiments can help us avoid confounding. Unfortunately, they are not always available. Sometimes, experiments can be too costly to perform, unethical, or virtually impossible (for example, running an experiment where the treatment is a migration of a large group of some population). In this section, we’ll look at a couple of scenarios where we’re limited to observational data but we still want to draw causal conclusions. These examples will provide us with a solid foundation for the next chapters.

A marketer’s dilemma

Imagine you are a tech-savvy marketer and you want to effectively allocate your direct marketing budget. How would you approach this task? When allocating the budget for a direct marketing campaign, we’d like to understand what return we can expect if we spend a certain amount of money on a given person. In other words, we’re interested in estimating the effect of our actions on some customer outcomes (Gutierrez, Gérardy, 2017). Perhaps we could use supervised learning to solve this problem? To answer this question, let’s take a closer look at what exactly we want to predict.

We’re interested in understanding how a given person would react to our content. Let’s encode it in a formula:

τi = Yi (1) − Yi(0)

In the preceding formula, the following applies:

- τi is the treatment effect for person i

- Yi (1) is the outcome for person i when they received the treatment T (in our example, they received marketing content from us)

- Yi(0) is the outcome for the same person i given they did not receive the treatment T

What the formula says is that we want to take the person i’s outcome Yi when this person does not receive treatment T and subtract it from the same person’s outcome when they receive treatment T.

An interesting thing here is that to solve this equation, we need to know what person i’s response is under treatment and under no treatment. In reality, we can never observe the same person under two mutually exclusive conditions at the same time. To solve the equation in the preceding formula, we need counterfactuals.

Counterfactuals are estimates of how the world would look if we changed the value of one or more variables, holding everything else constant. Because counterfactuals cannot be observed, the true causal effect τ is unknown. This is one of the reasons why classic machine learning cannot solve this problem for us. A family of causal techniques usually applied to problems like this is called uplift modeling, and we’ll learn more about it in Chapter 9 and 10.

Let’s play doctor!

Let’s take another example. Imagine you’re a doctor. One of your patients, Jennifer, has a rare disease, D. Additionally, she was diagnosed with a high risk of developing a blood clot. You study the information on the two most popular drugs for D. Both drugs have virtually identical effectiveness on D, but you’re not sure which drug will be safer for Jennifer, given her diagnosis. You look into the research data presented in Table 1.1:

| Drug | A | B | ||

|---|---|---|---|---|

| Blood clot | Yes | No | Yes | No |

| Total | 27 | 95 | 23 | 99 |

| Percentage | 22% | 78% | 19% | 81% |

| Table 1.1 – Data for drug A and drug B | |||

|---|---|---|---|

| – | – | —————————————- | – |

The numbers in Table 1.1 represent the number of patients diagnosed with disease D who were administered drug A or drug B. Row 2 (Blood clot) gives us information on whether a blood clot was found in patients or not. Note that the percentage scores are rounded. Based on this data, which drug would you choose? The answer seems pretty obvious. 81% of patients who received drug B did not develop blood clots. The same was true for only 78% of patients who received drug A. The risk of developing a blood clot is around 3% lower for patients receiving drug B compared to patients receiving drug A.

| Drug | A | B | ||

|---|---|---|---|---|

| Blood clot | Yes | No | Yes | No |

| Female | 24 | 56 | 17 | 25 |

| Male | 3 | 39 | 6 | 74 |

| Total | 27 | 95 | 23 | 99 |

| Percentage | 22% | 78% | 18% | 82% |

| Percentage (F) | 30% | 70% | 40% | 60% |

| Percentage (M) | 7% | 93% | 7.5% | 92.5% |

This looks like a fair answer, but you feel skeptical. You know that blood clots can be very risky and you want to dig deeper. You find more fine-grained data that takes the patient’s gender into account. Let’s look at Table 1.2:

Table 1.2 – Data for drug A and drug B with gender-specific results added. F = female, M = male. Color-coding added for ease of interpretation, with better results marked in green and worse results marked in orange.

Something strange has happened here. We have the same numbers as before and drug B is still preferable for all patients, but it seems that drug A works better for females and for males! Have we just found a medical Schrödinger’s cat (https://en.wikipedia.org/wiki/Schr%C3%B6dinger%27s\_ cat) that flips the effect of a drug when a patient’s gender is observed?

If you think that we might have messed up the numbers – don’t believe me, just check the data for yourself. The data can be found in data/ch_01_drug_data.csv (https://github.com/ PacktPublishing/Causal-Inference-and-Discovery-in-Python/blob/main/ data/ch\_01\_drug\_data.csv).

What we’ve just experienced is called Simpson’s paradox (also known as the Yule-Simpson effect). Simpson’s paradox appears when data partitioning (which we can achieve by controlling for the additional variable(s) in the regression setting) significantly changes the outcome of the analysis. In the real world, there are usually many ways to partition your data. You might ask: okay, so how do I know which partitioning is the correct one?

We could try to answer this question from a pure machine learning point of view: perform crossvalidated feature selection and pick the variables that contribute significantly to the outcome. This solution is good enough in some settings. For instance, it will work well when we only care about making predictions (rather than decisions) and we know that our production data will be independent and identically distributed; in other words, our production data needs to have a distribution that is virtually identical (or at least similar enough) to our training and validation data. If we want more than this, we’ll need some sort of a (causal) world model.

Associations in the wild

Some people tend to think that purely associational relationships happen rarely in the real world or tend to be weak, so they cannot bias our results too much. To see how surprisingly strong and consistent spurious relationships can be in the real world, visit Tyler Vigen’s page: https://www. tylervigen.com/spurious-correlations. Notice that relationships between many variables are sometimes very strong and they last for long periods of time! I personally like the one with space launches and sociology doctorates and I often use it in my lectures and presentations. Which one is your favorite? Share and tag me on LinkedIn, Twitter (See the Let’s stay in touch section in Chapter 15 to connect!) so we can have a discussion!

Wrapping it up

“Let the data speak” is a catchy and powerful slogan, but as we’ve seen earlier, data itself is not always enough. It’s worth remembering that in many cases “data cannot speak for themselves” (Hernán, Robins, 2020) and we might need more information than just observations to address some of our questions.

In this chapter, we learned that when thinking about causality, we’re not limited to observations, as David Hume thought. We can also experiment – just like babies.

Unfortunately, experiments are not always available. When this is the case, we can try to use observational data to draw a causal conclusion, but the data itself is usually not enough for this purpose. We also need a causal model. In the next chapter, we’ll introduce the Ladder of Causation – a neat metaphor for understanding three levels of causation proposed by Judea Pearl.

References

Alexander, J. E., Audesirk, T. E., & Audesirk, G. J. (1985). Classical Conditioning in the Pond Snail Lymnaea stagnalis. The American Biology Teacher, 47(5), 295–298. https://doi.org/10.2307/4448054

Archie, L. (2005). Hume’s Considered View on Causality. [Preprint] Retrieved from: http:// philsci-archive.pitt.edu/id/eprint/2247 (accessed 2022-04-23)

Falcon, A. “Aristotle on Causality”, The Stanford Encyclopedia of Philosophy (Spring 2022 Edition), Edward N. Zalta (ed.). https://plato.stanford.edu/archives/spr2022/entries/ aristotle-causality/. Retrieved 2022-04-23

Gopnik, A. (2009). The philosophical baby: What children’s minds tell us about truth, love, and the meaning of life. New York: Farrar, Straus and Giroux

Gutierrez, P., & Gérardy, J. (2017). Causal Inference and Uplift Modelling: A Review of the Literature. Proceedings of The 3rd International Conference on Predictive Applications and APIs in Proceedings of Machine Learning Research, 67, 1-13

Hernán M. A., & Robins J. M. (2020). Causal Inference: What If. Boca Raton: Chapman & Hall/CRC

Hume, D., & Millican, P. F. (2007). An enquiry concerning human understanding. Oxford: Oxford University Press

Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux

Lorkowski, C. M. https://iep.utm.edu/hume-causation/. Retrieved 2022-04-23

Stahl, A. E., & Feigenson, L. (2015). Cognitive development. Observing the unexpected enhances infants’ learning and exploration. Science, 348(6230), 91–94. https://doi.org/10.1126/ science.aaa3799

2 Judea Pearl and the Ladder of Causation

In the last chapter, we discussed why association is not sufficient to draw causal conclusions. We talked about interventions and counterfactuals as tools that allow us to perform causal inference based on observational data. Now, it’s time to give it a bit more structure.

In this chapter, we’re going to introduce the concept of the Ladder of Causation. We’ll discuss associations, interventions, and counterfactuals from theoretical and mathematical standpoints. Finally, we’ll implement a couple of structural causal models in Python to solidify our understanding of the three aforementioned concepts. By the end of this chapter, you should have a firm grasp of the differences between associations, interventions, and counterfactuals. This knowledge will be a foundation of many of the ideas that we’ll discuss further in the book and allow us to understand the mechanics of more sophisticated methods that we’ll introduce in Part 2, Causal Inference, and Part 3, Causal Discovery.

In this chapter, we will cover the following topics:

- The concept of the Ladder of Causation

- Conceptual, mathematical, and practical differences between associations, interventions, and counterfactuals

From associations to logic and imagination – the Ladder of Causation

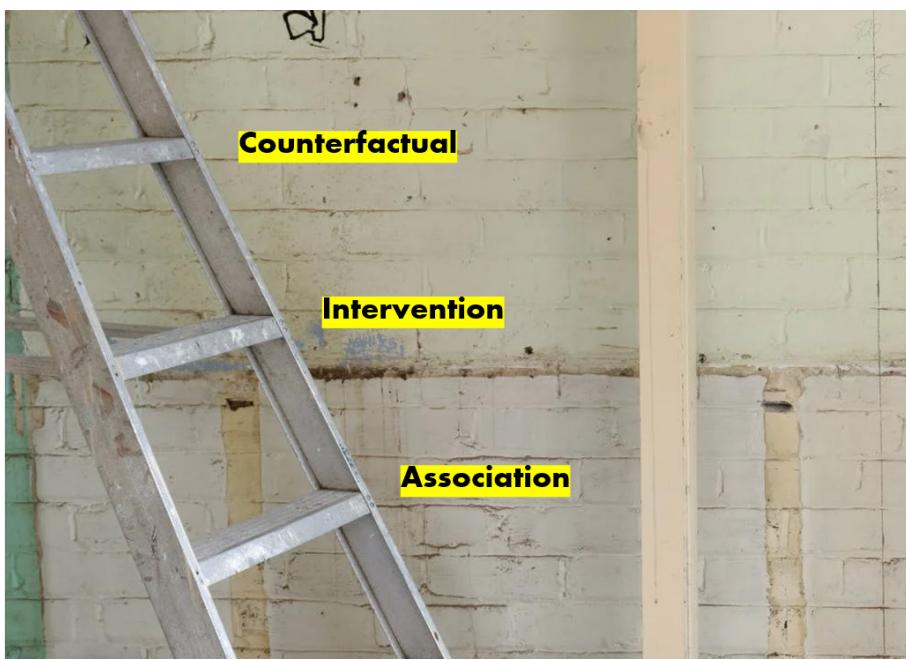

In this section, we’ll introduce the concept of the Ladder of Causation and summarize its building blocks. Figure 2.1 presents a symbolic representation of the Ladder of Causation. The higher the rung, the more sophisticated our capabilities become, but let’s start from the beginning:

The Ladder of Causation, introduced by Judea Pearl (Pearl, Mackenzie, 2019), is a helpful metaphor for understanding distinct levels of relationships between variables – from simple associations to counterfactual reasoning. Pearl’s ladder has three rungs. Each rung is related to different activity and offers answers to different types of causal questions. Each rung comes with a distinct set of mathematical tools.

Judea Pearl

Judea Pearl is an Israeli-American researcher and computer scientist, who devoted a large part of his career to researching causality. His original and insightful work has been recognized by Association for Computing Machinery (ACM), who awarded him with the Turing Award – considered by many the equivalent of the Nobel Prize in computer science. The Ladder of Causation was introduced in Pearl’s popular book on causality, The Book of Why (Pearl, Mackenzie, 2019).

Rung one of the ladder represents association. The activity that is related to this level is observing. Using association, we can answer questions about how seeing one thing changes our beliefs about another thing – for instance, how observing a successful space launch by SpaceX changes our belief that SpaceX stock price will go up.

Rung two represents intervention. Remember the babies from the previous chapter? The action related to rung two is doing or intervening. Just like babies throwing their toys around to learn about the laws of physics, we can intervene on one variable to check how it influences some other variable. Interventions can help us answer questions about what will happen to one thing if we change another thing – for instance, if I go to bed earlier, will I have more energy the following morning?

Rung three represents counterfactual reasoning. Activities associated with rung three are imagining and understanding. Counterfactuals are useful to answer questions about what would have happened if we had done something differently. For instance, would I have made it to the office on time if I took the train rather than the car?

| Rung | Action | Question |

|---|---|---|

| Association (1) | Observing | How does observing X change my belief in Y? |

| Intervention (2) | Doing | What will happen to Y if I do X? |

| Counterfactual (3) | Imagining | If I had done X, what would Y be? |

Table 2.1 summarizes the three rungs of the Ladder of Causation:

Table 2.1 – A summary of the three rungs of the Ladder of Causation

To cement our intuitions, let’s see an example of each of the rungs.

Imagine that you’re a doctor and you consider prescribing drug D to one of your patients. First, you might recall hearing other doctors saying that D helped their patients. It seems that in the sample of doctors you heard talking about D, there is an association between their patients taking the drug and getting better. That’s rung one. We are skeptical about the rung one evidence because it might just be the case that these doctors only treated patients with certain characteristics (maybe just mild cases or only patients of a certain age). To overcome the limitation of rung one, you decide to read articles based on randomized clinical trials.

Randomized controlled trials

Randomized controlled trials (RCTs), sometimes referred to as randomized experiments, are often considered the gold standard for causal inference. The key idea behind RCTs is randomization. We randomly assign subjects in an experiment to treatment and control groups, which helps us achieve deconfounding. There are many possible RCT designs. For an introduction and discussion, check out Matthews (2006). We also briefly discuss RCTs in Chapter 12.

These trials were based on interventions (rung two) and – assuming that they were properly designed – they can be used to determine the relative efficacy of the treatment. Unfortunately, they cannot tell us whether a patient would be better off if they had taken the treatment earlier, or which of two available treatments with similar relative efficacy would have worked better for this particular patient. To answer this type of question, we need rung three.

Now, let’s take a closer look at each of the rungs and their respective mathematical apparatus.

Associations

In this section, we’ll demonstrate how to quantify associational relationships using conditional probability. Then, we’ll briefly introduce structural causal models. Finally, we’ll implement conditional probability queries using Python.

We already learned a lot about associations. We know that associations are related to observing and that they allow us to generate predictions. Let’s take a look at mathematical tools that will allow us to talk about associations in a more formal way.

We can view the mathematics of rung one from a couple of angles. In this section, we’ll focus on the perspective of conditional probability.

Conditional probability

Conditional probability is the probability of one event, given that another event has occurred. A mathematical symbol that we use to express conditional probability is | (known as a pipe or vertical bar). We read P(X|Y) as a probability of X given Y. This notation is a bit simplified (or abused if you will). What we usually mean by P(X|Y) is P(X = x|Y = y), the probability that the variable X takes the value x, given that the variable Y takes the value y. This notation can also be extended to continuous cases, where we want to work with probability densities – for example, P(0 < X < 0.25|Y > 0.5).

Imagine that you run an internet bookstore. What is the probability that a person will buy book A, given that they bought book B? This question can be answered using the following conditional probability query:

P(book A|book B)

Note that the preceding formula does not give us any information on the causal relationship between both events. We don’t know whether buying book A caused the customer to buy book B, buying book B caused them to buy book A, or there is another (unobserved) event that caused both. We only get information about non-causal association between these events. To see this clearly, we will implement our bookstore example in Python, but before we start, we’ll briefly introduce one more important concept.

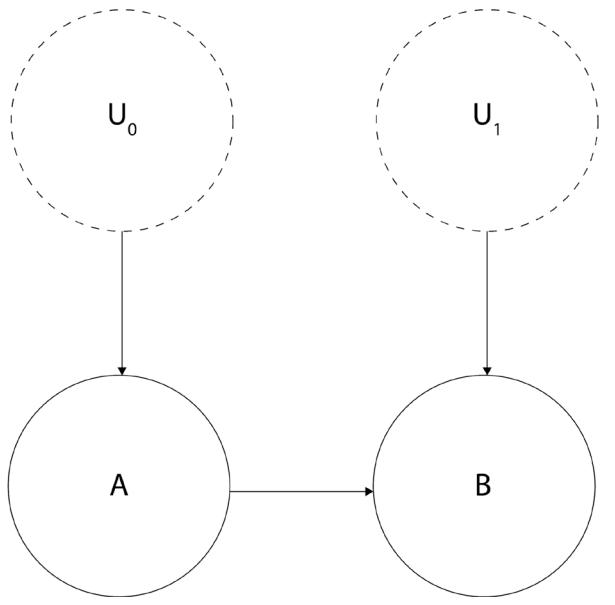

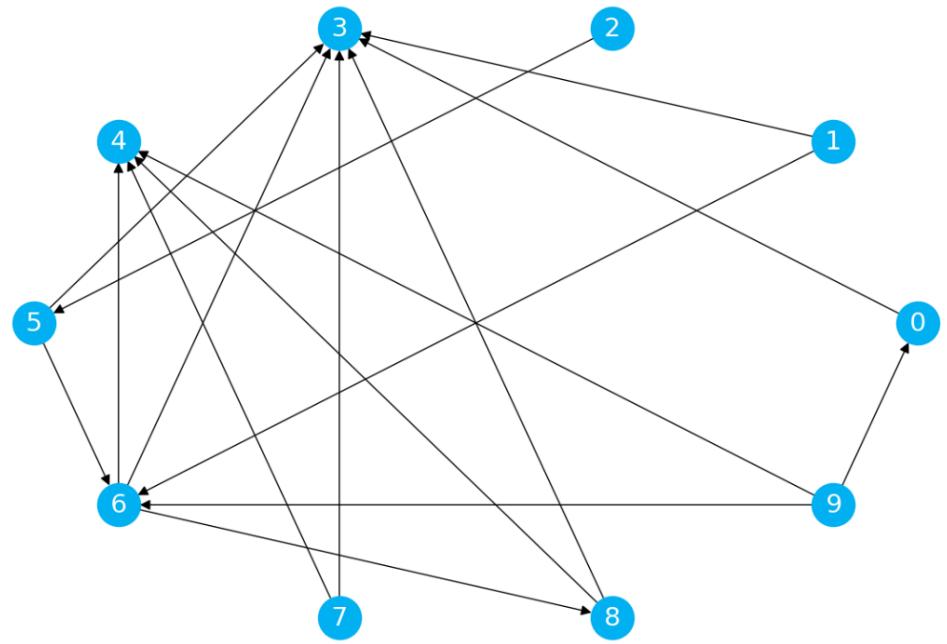

Structural causal models (SCMs) are a simple yet powerful tool to encode causal relationships between variables. You might be surprised that we are discussing a causal model in the section on association. Didn’t we just say that association is usually not enough to address causal questions? That’s true. The reason why we’re introducing an SCM now is that we will use it as our data-generating process. After generating the data, we will pretend to forget what the SCM was. This way, we’ll mimic a frequent real-world scenario where the true data-generating process is unknown, and the only thing we have is observational data.

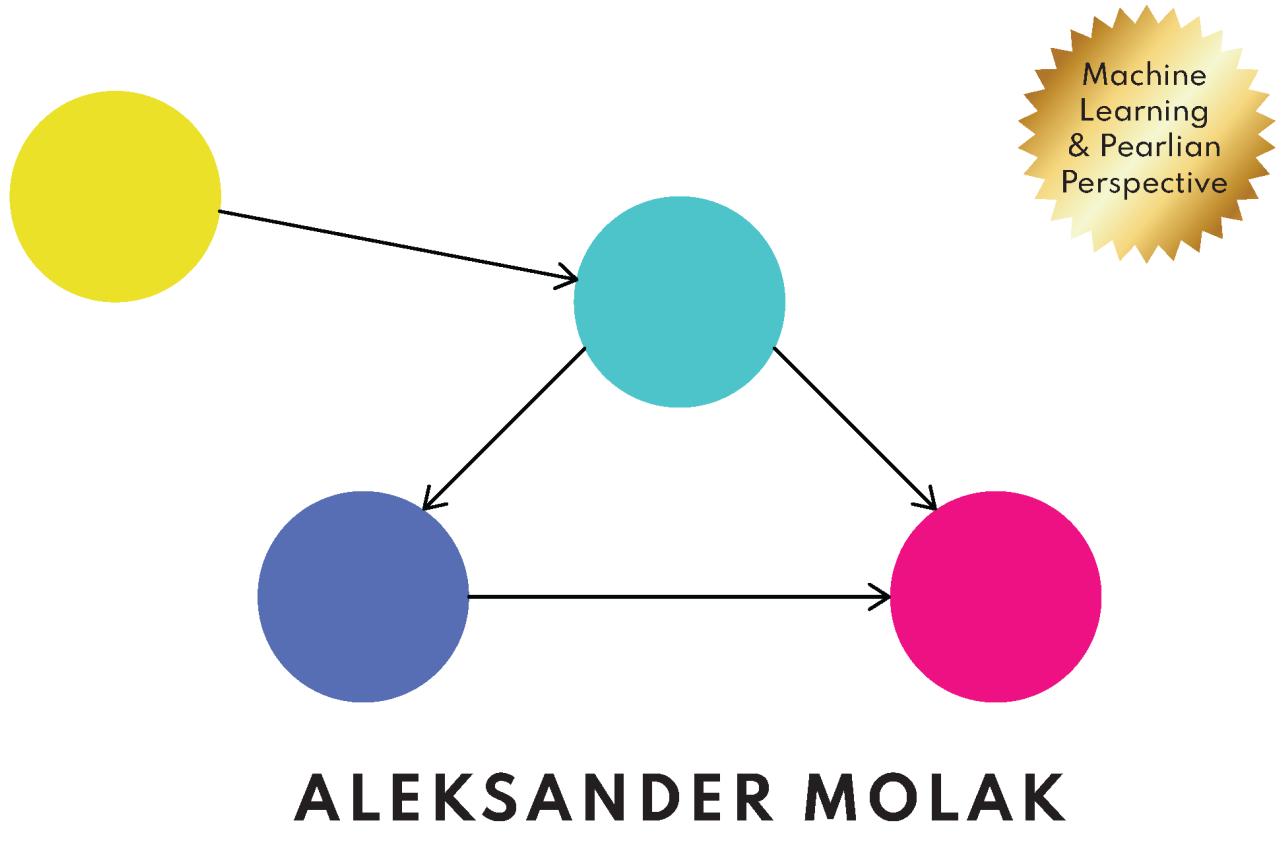

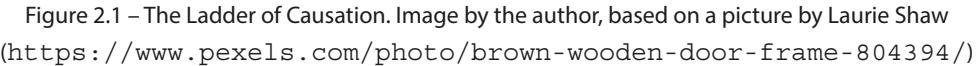

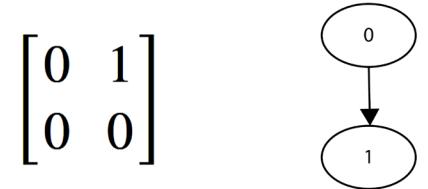

Let’s take a small detour from our bookstore example and take a look at Figure 2.2:

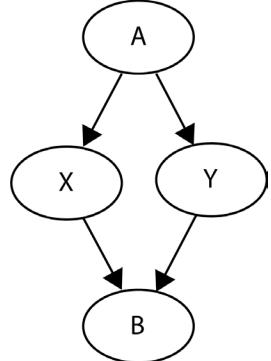

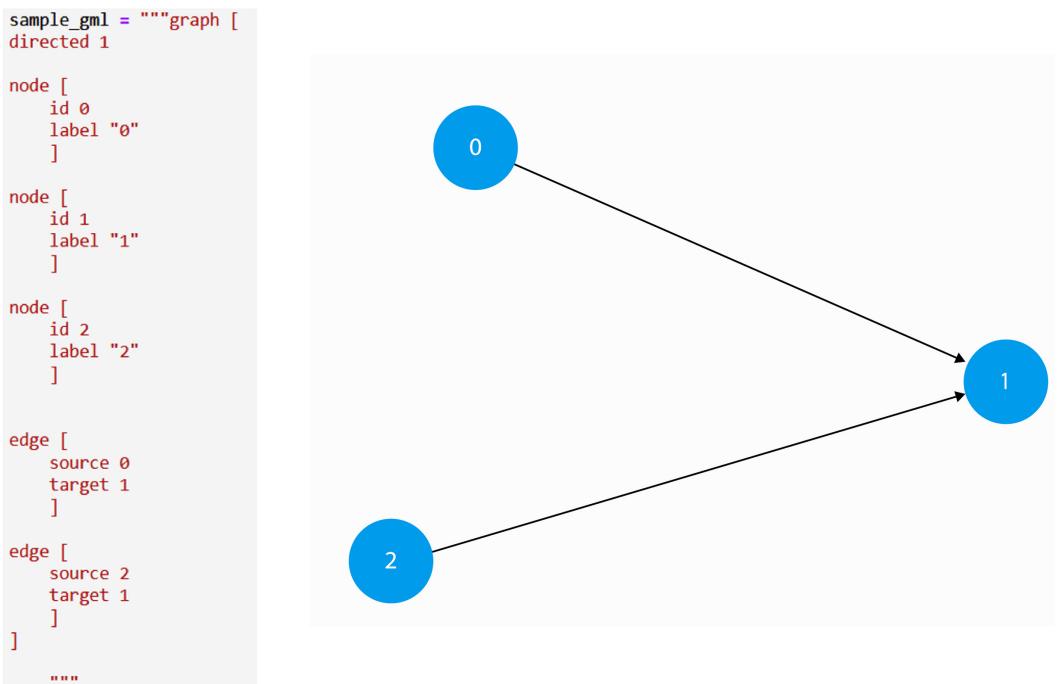

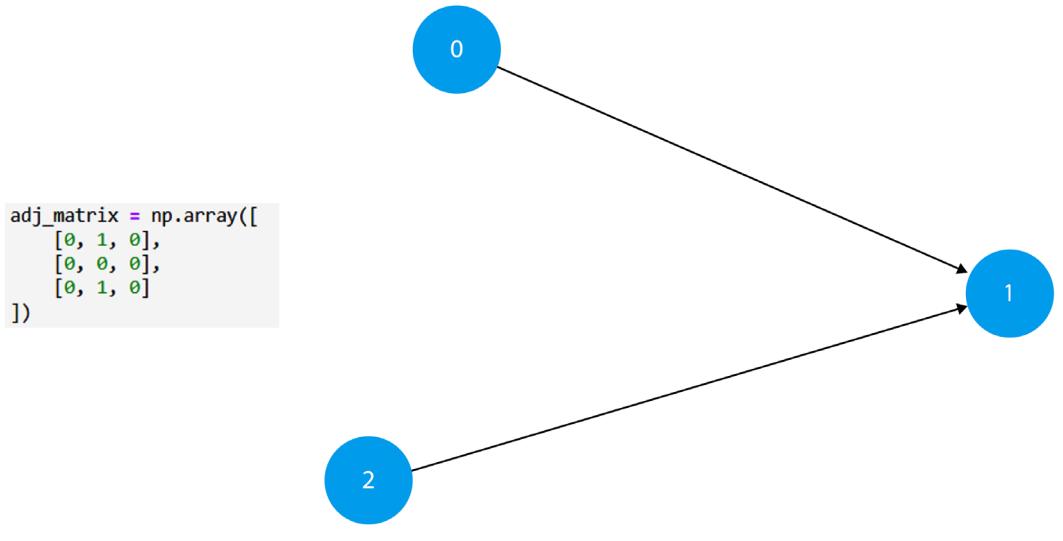

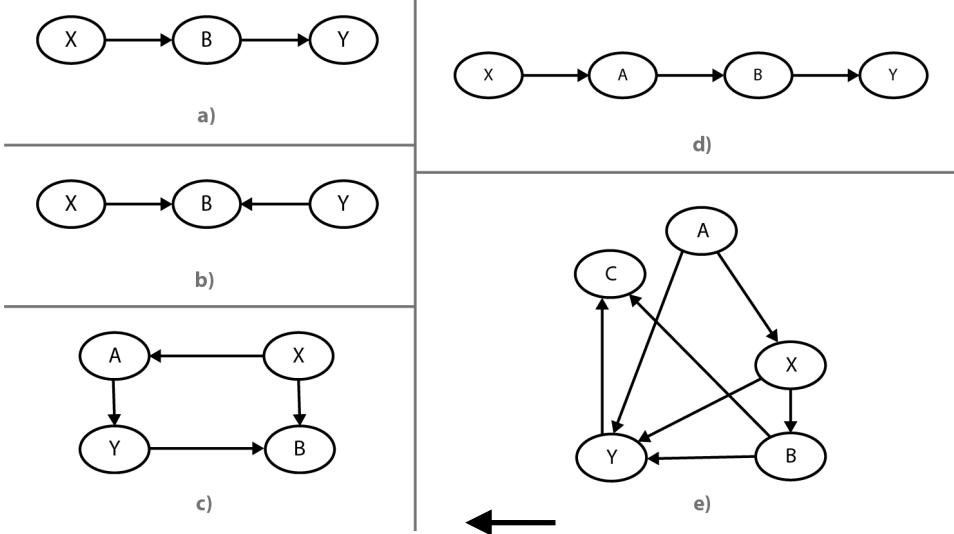

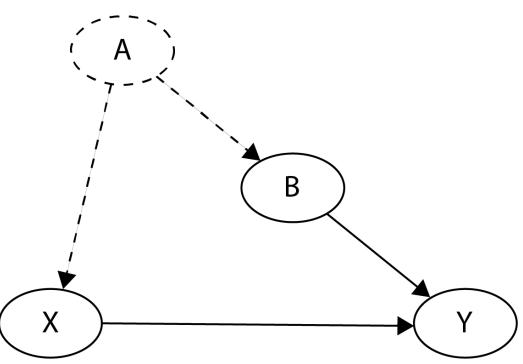

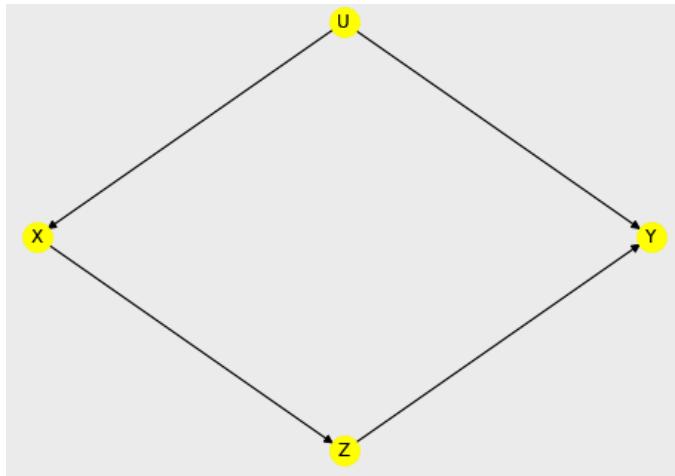

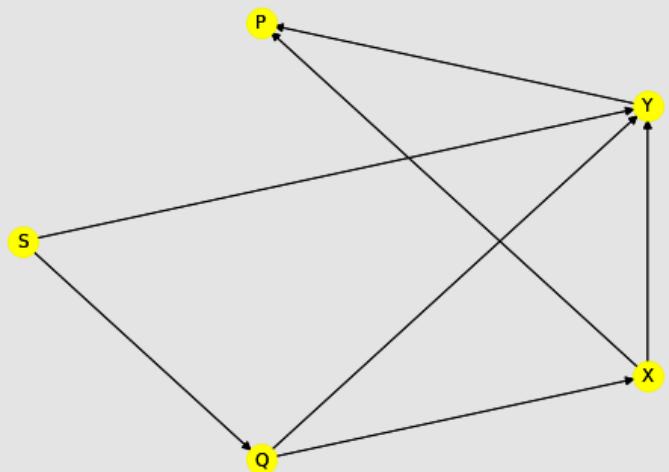

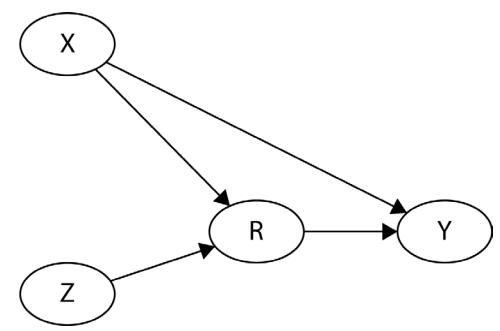

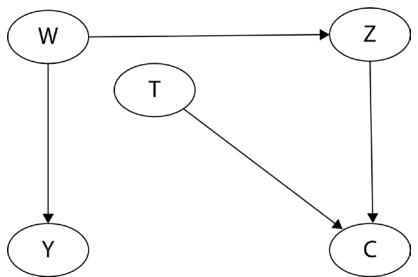

Figure 2.2 – A graphical representation of a structural causal model

Circles or nodes in the preceding figure represent variables. Lines with arrows or edges represent relationships between variables.

As you can see, there are two types of variables (marked with dashed versus regular lines). Arrows at the end of the lines represent the direction of the relationship.

Nodes A, B, and C are marked with solid lines. They represent the observed variables in our model. We call this type of variable endogenous. Endogenous variables are always children of at least one other variable in a model.

The other type of nodes (UX nodes) are marked with dashed lines. We call these variables exogenous, and they are represented by root nodes in the graph (they are not descendants of any other variable; Pearl, Glymour, and Jewell, 2016). Exogenous variables are also called noise variables.

Noise variables

Note that most causal inference and causal discovery methods require that noise variables are uncorrelated with each other (otherwise, they become unobserved confounders). This is one of the major difficulties in real-world causal inference, as sometimes, it’s very hard to be sure that we have met this assumption.

An SCM can be represented graphically (as in Figure 2.2) or as a series of equations. These two representations have different properties and might require different assumptions (we’ll leave this complexity out for now), but they refer to the same object – a data-generating process.

Let’s return to the SCM from Figure 2.2. We’ll define the functional relationships in this model in the following way:

\[\begin{aligned} A &:= f\_{\mathcal{A}}(\;{U\_{\mathcal{o}}}) \\ B &:= f\_{\mathcal{B}}(A, \;{U\_{\mathcal{1}}}) \\ C &:= f\_{\mathcal{C}}(A, B, \;{U\_{\mathcal{2}}}) \end{aligned}\]

A, B, C, and UX represent the nodes in Figure 2.1, and ≔ is an assignment operator, also known as a walrus operator. We use it here to emphasize that the relationship that we’re describing is directional (or asymmetric), as opposed to the regular equal sign that suggests a symmetric relation. Finally, f A, f B, f C represent arbitrary functions (they can be as simple as a summation or as complex as you want). This is all we need to know about SCMs at this stage. We will learn more about them in Chapter 3.

Equipped with a basic understanding of SCMs, we are now ready to jump to our coding exercise in the next section.

Let’s practice!

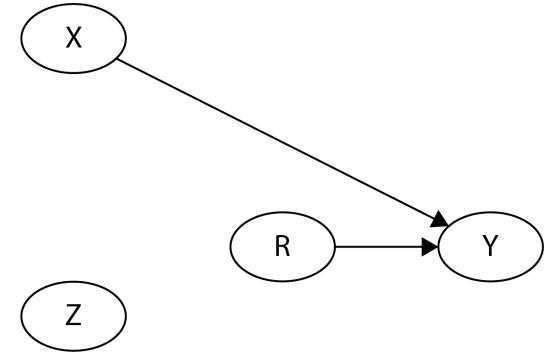

For this exercise, let’s recall our bookstore example from the beginning of the section.

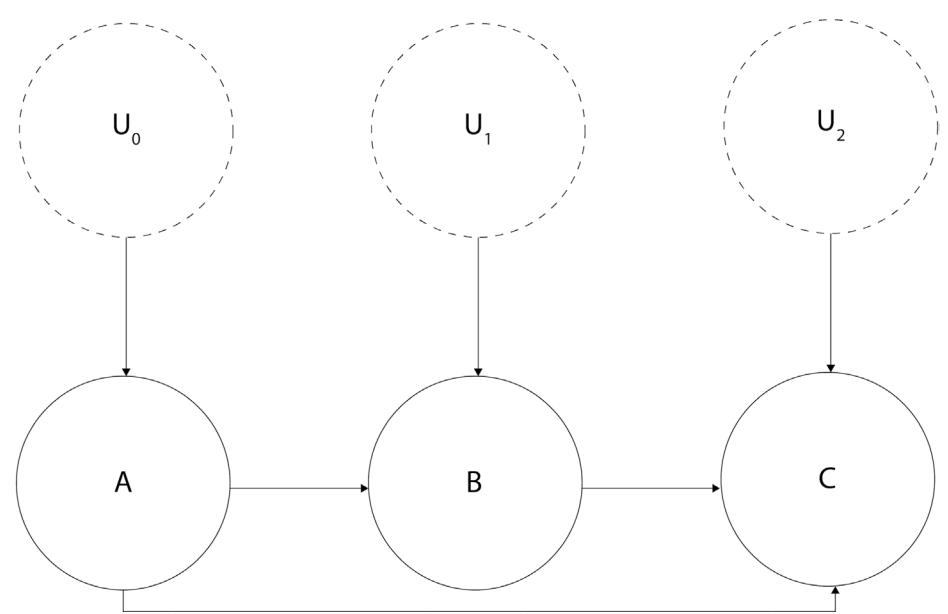

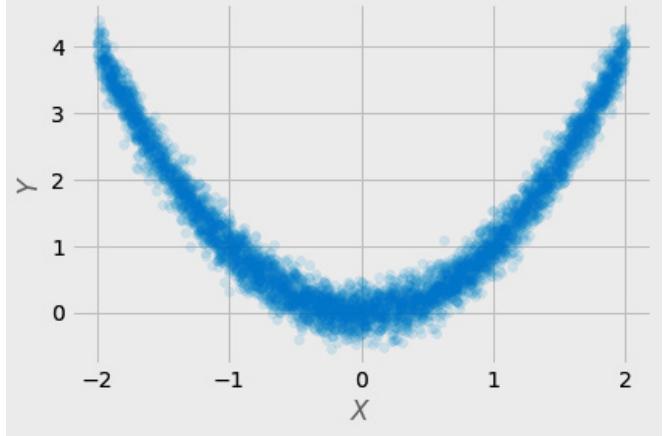

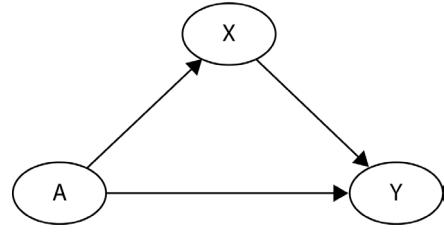

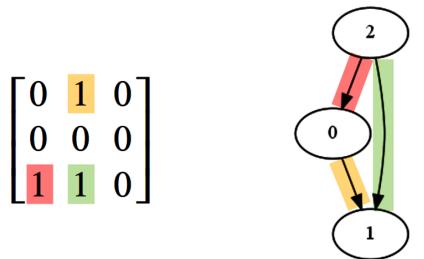

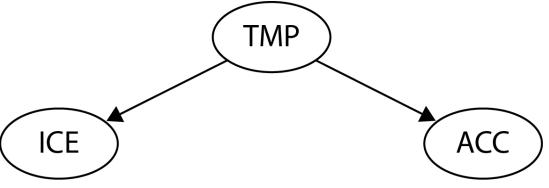

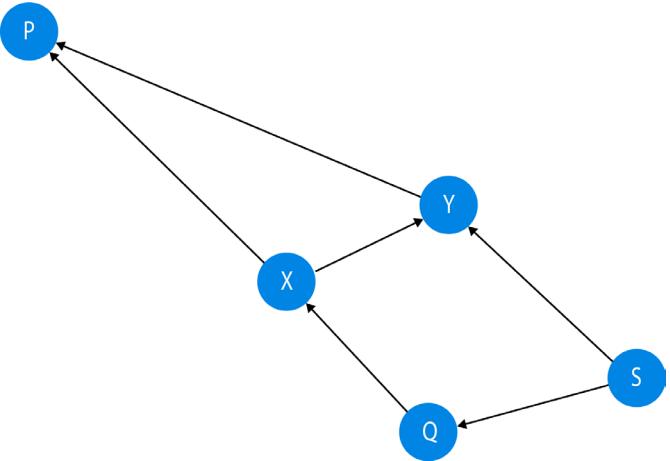

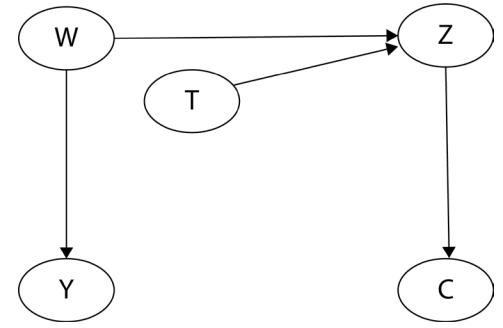

First, let’s define an SCM that can generate data with a non-zero probability of buying book A, given we bought book B. There are many possible SCMs that could generate such data. Figure 2.3 presents the model we have chosen for this section:

Figure 2.3 – A graphical model representing the bookstore example

To precisely define causal relations that drive our SCM, let’s write a set of equations:

\[U\_0 \sim U(0, 1)\]

\[U\_1 \sim N(0, 1)\]

\[A := 1\_{\{U\_i > \delta 1\}}\]

\[A = 1\_{\{\Pi\_i = 1\}}\]

B ≔ 1{(A+ .5*U1)> .2}

In the preceding formulas, U0 is a continuous random variable uniformly distributed between 0 and 1. U1 is a normally distributed random variable, with a mean value of 0 and a standard deviation of 1. A and B are binary variables, and 1{f} is an indicator function.

The indicator function

The notation for the indicator function might look complicated, but the idea behind it is very simple. The indicator function returns 1 when the condition in the curly braces is met and returns 0 otherwise. For instance, let’s take the following function:

X = 1{Z>0} If Z > 0 then X = 1, otherwise X = 0.

Now, let’s recreate this SCM in code. You can find the code for this exercise in the notebook for this chapter https://bit.ly/causal-ntbk-02:

- First, let’s import the necessary libraries:

import numpy as np

from scipy import stats- Next, let’s define the SCM. We will use the object-oriented approach for this purpose, although you might want to choose other ways for yourself, which is perfectly fine:

class BookSCM:

def __init__(self, random_seed=None):

self.random_seed = random_seed

self.u_0 = stats.uniform()

self.u_1 = stats.norm()

def sample(self, sample_size=100):

"""Samples from the SCM"""

if self.random_seed:

np.random.seed(self.random_seed)

u_0 = self.u_0.rvs(sample_size) u_1 = self.u_1.rvs(sample_size)

a = u_0 > .61

b = (a + .5 * u_1) > .2

return a, bLet’s unpack this code. In the __init__() method of our BookSCM, we define the distributions for U0 and U1 and set a random seed for reproducibility; the .sample() method samples from U0 and U1 , computes values for A and B (according to the formulas specified previously), and returns them.

Great! We’re now ready to generate some data and quantify an association between the variables using conditional probability:

- First, let’s instantiate our SCM and set the random seed to 45:

scm = BookSCM(random_seed=45)- Next, let’s sample 100 samples from it:

buy_book_a, buy_book_b = scm.sample(100)

Let’s check whether the shapes are as expected:

buy_book_a.shape, buy_book_b.shapeThe output is as follows:

((100,), (100,))

The shapes are correct. We generated the data, and we’re now ready to answer the question that we posed at the beginning of this section – what is the probability that a person will buy book A, given that they bought book B?

- Let’s compute the P(book A|book B) conditional probability to answer our question:

proba_book_a_given_book_b = buy_book_a[buy_book_b].sum() / buy_

book_a[buy_book_b].shape[0]

print(f'Probability of buying book A given B: {proba_book_a_

given_book_b:0.3f}')This returns the following result:

Probability of buying book A given B: 0.638

As we can see, the probability of buying book A, given we bought book B, is 63.8%. This indicates a positive relationship between both variables (if there was no association between them, we would expect the result to be 50%). These results inform us that we can make meaningful predictions using observational data alone. This ability is the essence of most contemporary (supervised) machine learning models.

Let’s summarize. Associations are useful. They allow us to generate meaningful predictions of potentially high practical significance in the absence of knowledge of the data-generating process. We used an SCM to generate hypothetical data for our bookstore example and estimated the strength of association between book A and book B sales, using a conditional probability query. Conditional probability allowed us to draw conclusions in the absence of knowledge of the true data-generating process, based on the observational data alone (note that although we knew the true SCM, we did not use any knowledge about it when computing the conditional probability query; we virtually forgot anything about the SCM before generating the predictions). That said, associations only allow us to answer rung one questions.

Let’s climb to the second rung of the Ladder of Causation to see how to go beyond some of these limitations.

What are interventions?

In this section, we’ll summarize what we’ve learned about interventions so far and introduce mathematical tools to describe them. Finally, we’ll use our newly acquired knowledge to implement an intervention example in Python.

The idea of intervention is very simple. We change one thing in the world and observe whether and how this change affects another thing in the world. This is the essence of scientific experiments. To describe interventions mathematically, we use a special do-operator. We usually express it in mathematical notation in the following way:

P(Y = 1|do(X = 0))

The preceding formula states that the probability of Y = 1, given that we set X to 0. The fact that we need to change X’s value is critical here, and it highlights the inherent difference between intervening and conditioning (conditioning is the operation that we used to obtain conditional probabilities in the previous section). Conditioning only modifies our view of the data, while intervening affects the distribution by actively setting one (or more) variable(s) to a fixed value (or a distribution). This is very important – intervention changes the system, but conditioning does not. You might ask, what does it mean that intervention changes the system? Great question!

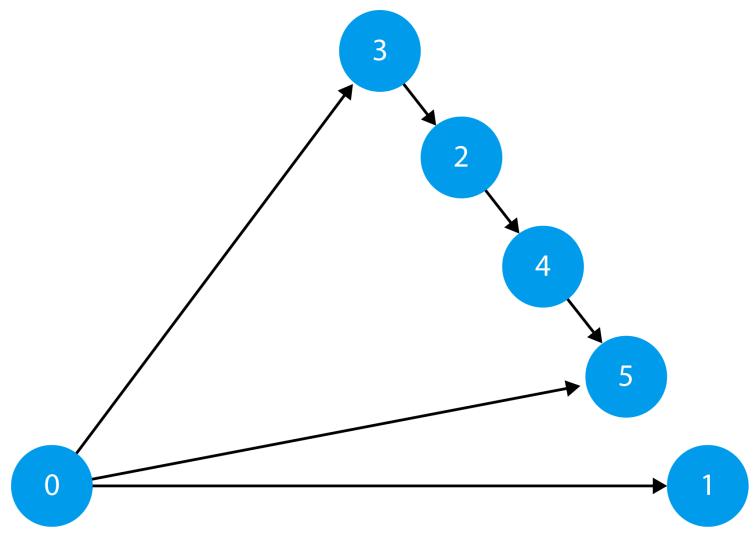

The graph saga – parents, children, and more

When we talk about graphs, we often use terms such as parents, children, descendants, and ancestors. To make sure that you can understand the next subsection clearly, we’ll give you a brief overview of these terms here.

We say that the node X is a parent of the node Y and that Y is a child of X when there’s a direct arrow from X to Y. If there’s also an arrow from Y to Z, we say that Z is a grandchild of X and that X is a grandparent of Z. Every child of X, all its children and their children, their children’s children, and so on are descendants of X, which is their ancestor. For a more formal explanation, check out Chapter 4.

Changing the world

When we intervene in a system and fix a value or alter the distribution of some variable – let’s call it X – one of three things can happen:

- The change in X will influence the values of its descendants (assuming X has descendants and excluding special cases where X’s influence is canceled – for example, f(x) = x − x)

- X will become independent of its ancestors (assuming that X has ancestors)

- Both situations will take place (assuming that X has descendants and ascendants, excluding special cases)

Note that none of these would happen if we conditioned on X, because conditioning does not change the value of any of the variables – it does not change the system.

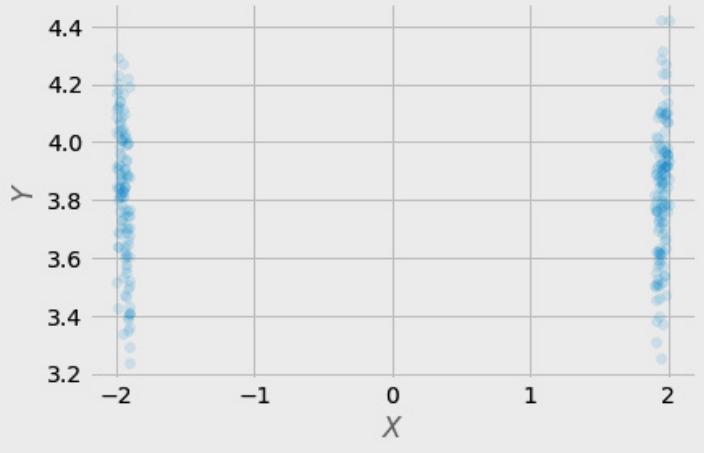

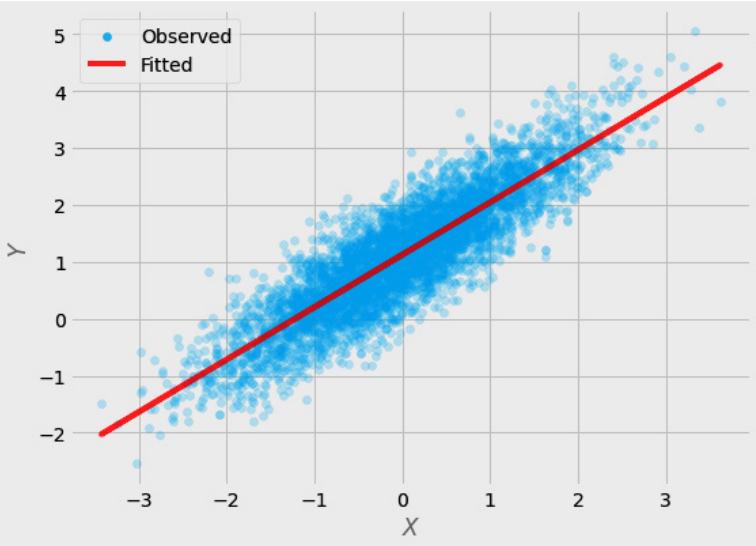

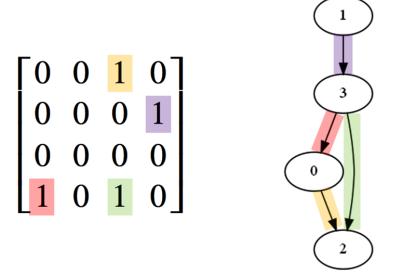

Let’s translate interventions into code. We will use the following SCM for this purpose:

U0 ~ N(0, 1)

U1 ~ N(0, 1)

A ≔ U0

B ≔ 5A + U1

The graphical representation of this model is identical to the one in Figure 2.3. Its functional assignments are different though, and – importantly – we set A and B to be continuous variables (as opposed to the model in the previous section, where A and B were binary; note that this is a new example, and the only thing it shares with the bookstore example is the structure of the graph):

- First, we’ll define the sample size for our experiment and set a random seed for reproducibility:

SAMPLE_SIZE = 100

np.random.seed(45)- Next, let’s build our SCM. We will also compute the correlation coefficient between A and B and print out a couple of statistics:

u_0 = np.random.randn(SAMPLE_SIZE)

u_1 = np.random.randn(SAMPLE_SIZE)

a = u_0

b = 5 * a + u_1

r, p = stats.pearsonr(a, b)

print(f'Mean of B before any intervention: {b.mean():.3f}')

print(f'Variance of B before any intervention: {b.var():.3f}')

print(f'Correlation between A and B:\nr = {r:.3f}; p =

{p:.3f}\n')We obtain the following result:

Mean of B before any intervention: -0.620

Variance of B before any intervention: 22.667

Correlation between A and B: