Bayesian Analysis with Python

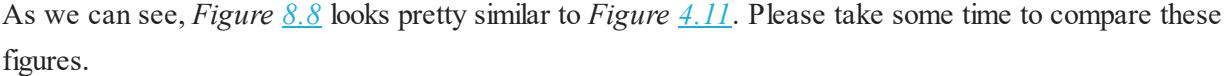

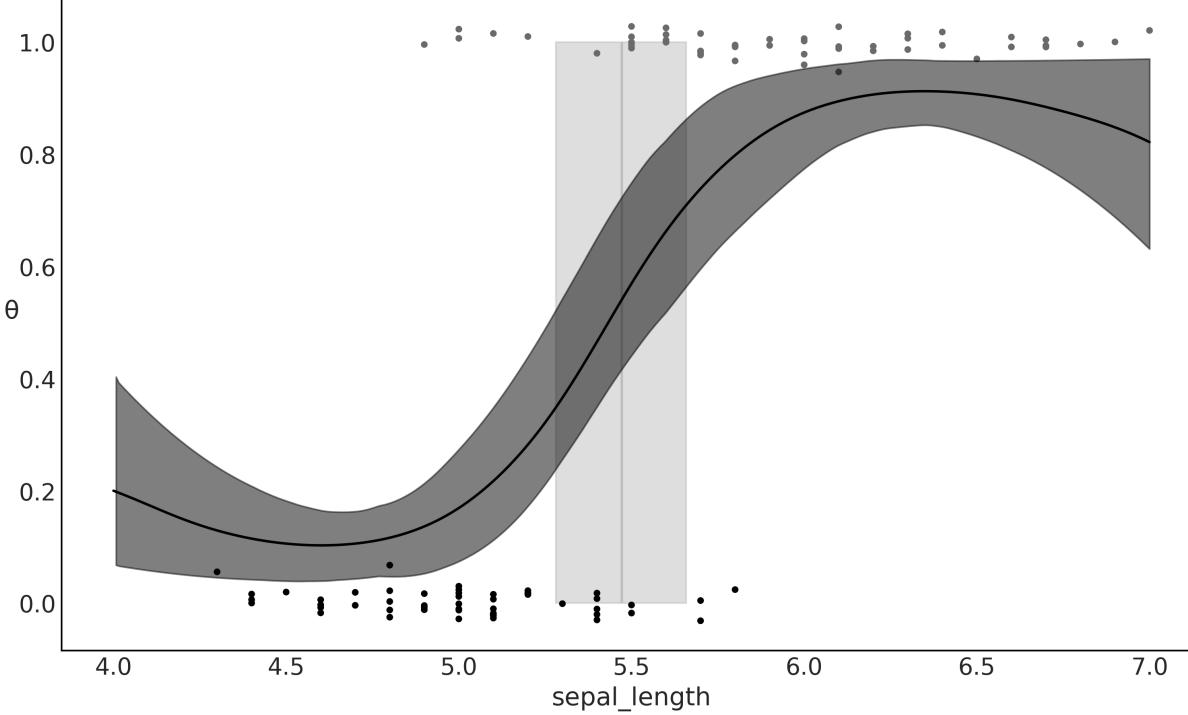

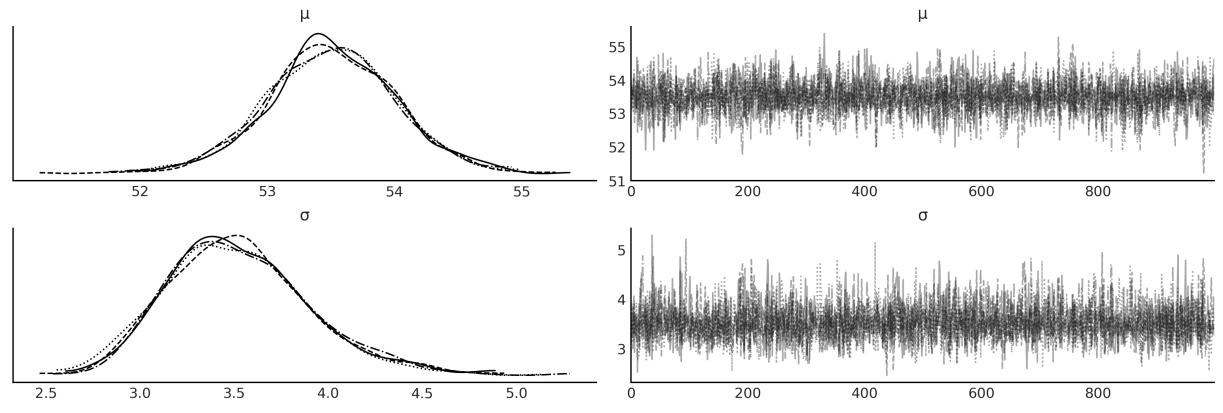

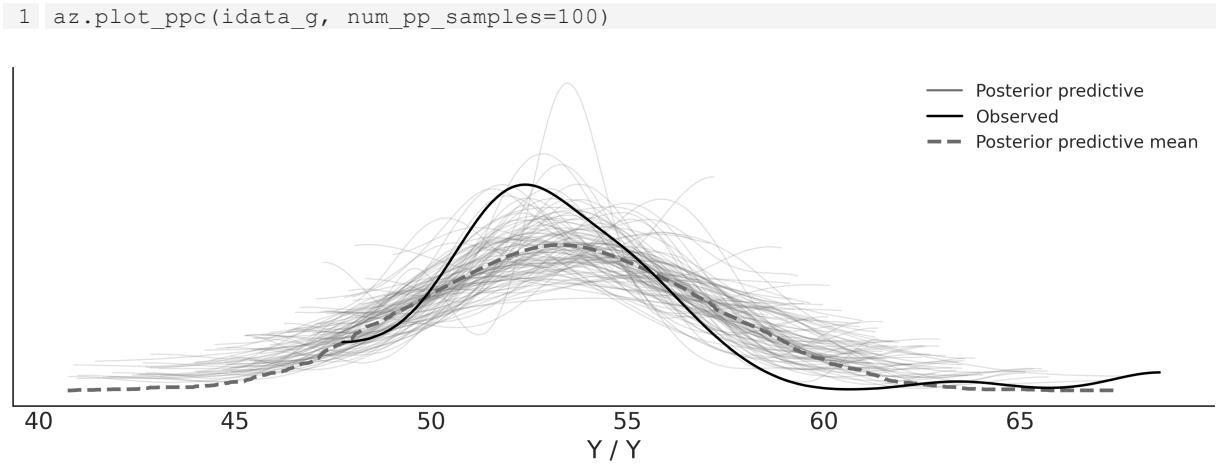

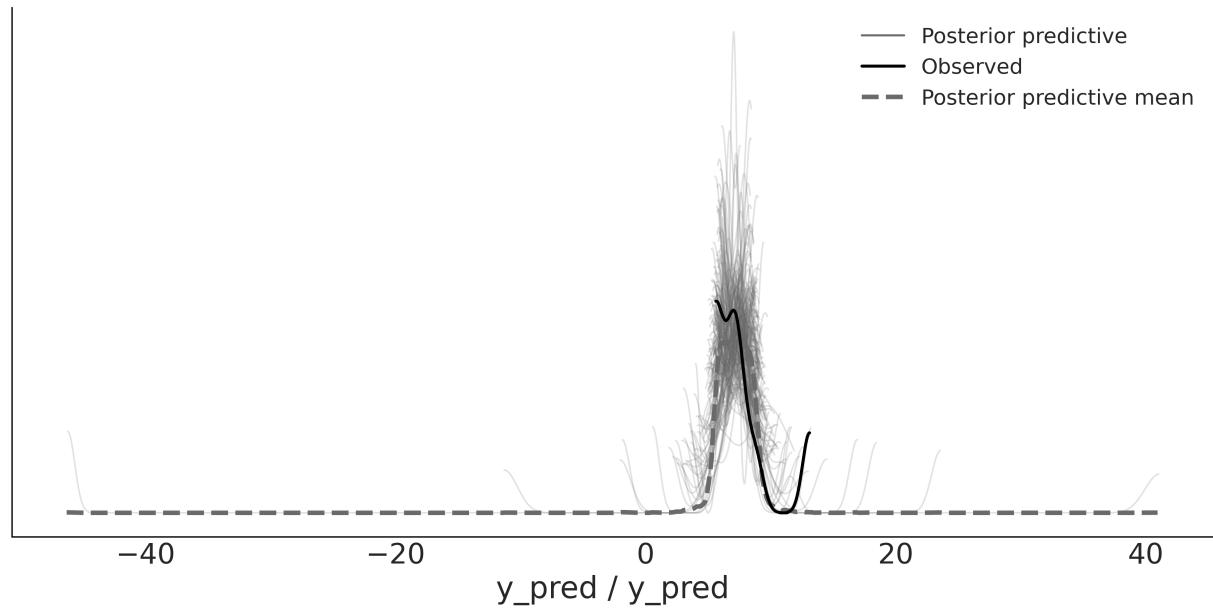

Third Edition

A practical guide to probabilistic modeling

Osvaldo Martin

Copyright © 2024 Packt Publishing

All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews.

Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book.

Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

Lead Senior Publishing Product Manager: Tushar Gupta

Acquisition Editor – Peer Reviews: Bethany O’Connell

Project Editor: Namrata Katare

Development Editor: Tanya D’cruz

Copy Editor: Safis Editing

Technical Editor: Aniket Shetty

Indexer: Rekha Nair

Proofreader: Safis Editing

Presentation Designer: Pranit Padwal

Developer Relations Marketing Executive: Monika Sangwan

First published: November 2016

Second edition: December 2018

Third edition: January 2024

Production reference: 1290124

Published by Packt Publishing Ltd.

Grosvenor House

11 St Paul’s Square

Birmingham

B3 1RB, UK.

ISBN 978-1-80512-716-1

www.packt.com

In gratitude to my family: Romina, Abril, and Bruno.

Foreword

As we present this new edition of Bayesian Analysis with Python, it’s essential to recognize the profound impact this book has had on advancing the growth and education of the probabilistic programming user community. The journey from its first publication to this current edition mirrors the evolution of Bayesian modeling itself – a path marked by significant advancements, growing community involvement, and an increasing presence in both academia and industry.

The field of probabilistic programming is in a different place today than it was when the first edition was devised in the middle of the last decade. As long-term practitioners, we have seen firsthand how Bayesian methods grew from a more fringe methodology to the primary way of solving some of the most advanced problems in science and various industries. This trend is supported by the continued development of advanced, performant, high-level tools such as PyMC. With this is a growing number of new applied users, many of whom have limited experience with either Bayesian methods, PyMC, or the underlying libraries that probabilistic programming packages increasingly rely on to accelerate computation. In this context, this new edition comes at the perfect time to introduce the next generation of data scientists to this increasingly powerful methodology.

Osvaldo Martin, a teacher, applied statistician, and long-time core PyMC developer, is the perfect guide to help readers navigate this complex landscape. He provides a clear, concise, and comprehensive introduction to Bayesian methods and the PyMC library, and he walks readers through a variety of real-world examples. As the population of data scientists using probabilistic programming grows, it is important to instill them with good habits and a sound workflow; Dr. Martin here provides sound, engaging guidance for doing so.

What makes this book a go-to reference is its coverage of most of the key questions posed by applied users: How do I express my problem as a probabilistic program? How do I know if my model is working? How do I know which model is best? Herein you will find a primer on Bayesian best practices, updated to current standards based on methodological improvements since the release of the last edition. This includes innovations related to the PyMC library itself, which has come a long way since PyMC3, much to the benefit of you, the end-user.

Complementing these improvements is the expansion of the PyMC ecosystem, a reflection of the broadening scope and capabilities of Bayesian modeling. This edition includes discussions on four notable new libraries: Bambi, Kulprit, PreliZ, and PyMC-BART. These additions, along with the continuous refinement of text and code, ensure that readers are equipped with the latest tools and methodologies in Bayesian analysis. This edition is not just an update but a significant step forward in the journey of probabilistic programming, mirroring the dynamic evolution of PyMC and its community.

The previous two editions of this book have been cornerstones for many in understanding and applying Bayesian methods. Each edition, including this latest one, has evolved to incorporate new developments, making it an indispensable resource for both newcomers and experienced practitioners. As PyMC continues to evolve - perhaps even to newer versions by the time this book is read - the content here remains relevant, providing foundational knowledge and insights into the latest advancements. In this edition, readers will find not only a comprehensive introduction to Bayesian analysis but also a window into the cutting-edge techniques that are currently shaping the field. We hope this book serves as both a guide and an inspiration, showcasing the power and flexibility of Bayesian modeling in addressing complex data-driven challenges.

As co-authors of this foreword, we are excited about the journey that lies ahead for readers of this book. You are joining a vibrant, ever-expanding community of enthusiasts and professionals who are pushing the boundaries of what’s possible in data analysis. We trust that this book will be a valuable companion in your exploration of Bayesian modeling and a catalyst for your own contributions to this dynamic field.

Christopher Fonnesbeck, PyMC’s original author and Principal Quantitative Analyst for the Philadelphia Phillies

Thomas Wiecki, CEO & Founder of PyMC Labs

Contributors

About the reviewer

Joon (Joonsuk) Park is a former quantitative psychologist and currently a machine learning engineer. He graduated from the Ohio State University with a PhD in Quantitative Psychology in 2019. His research during graduate study focused on the applications of Bayesian statistics to cognitive modeling and behavioral research methodology. He transitioned into industry data science and has worked as a data scientist since 2020. He has also published several books on psychology, statistics, and data science in Korean.

Table of Contents

- Bayesian Analysis with Python

Third Edition

- Chapter 1 Thinking Probabilistically

- 1.1 Statistics, models, and this book’s approach

- 1.2 Working with data

- 1.3 Bayesian modeling

- 1.4 A probability primer for Bayesian practitioners

- 1.5 Interpreting probabilities

- 1.6 Probabilities, uncertainty, and logic

- 1.7 Single-parameter inference

- 1.8 How to choose priors

- 1.9 Communicating a Bayesian analysis

- 1.10 Summary

- 1.11 Exercises

- Join our community Discord space

- Chapter 2 Programming Probabilistically

- 2.1 Probabilistic programming

- 2.2 Summarizing the posterior

- 2.3 Posterior-based decisions

- 2.4 Gaussians all the way down

- 2.5 Posterior predictive checks

- 2.6 Robust inferences

- 2.7 InferenceData

- 2.8 Groups comparison

- 2.9 Summary

- 2.10 Exercises

- Join our community Discord space

- Chapter 3 Hierarchical Models

- 3.1 Sharing information, sharing priors

- 3.2 Hierarchical shifts

- 3.3 Water quality

- 3.4 Shrinkage

- 3.5 Hierarchies all the way up

- 3.6 Summary

- 3.7 Exercises

- Join our community Discord space

- Chapter 4 Modeling with Lines

- 4.1 Simple linear regression

- 4.2 Linear bikes

- 4.3 Generalizing the linear model

- 4.4 Counting bikes

- 4.5 Robust regression

- 4.6 Logistic regression

- 4.7 Variable variance

- 4.8 Hierarchical linear regression

- 4.9 Multiple linear regression

- 4.10 Summary

- 4.11 Exercises

- Join our community Discord space

- Chapter 5 Comparing Models

- 5.1 Posterior predictive checks

- 5.2 The balance between simplicity and accuracy

- 5.3 Measures of predictive accuracy

- 5.4 Calculating predictive accuracy with ArviZ

- 5.5 Model averaging

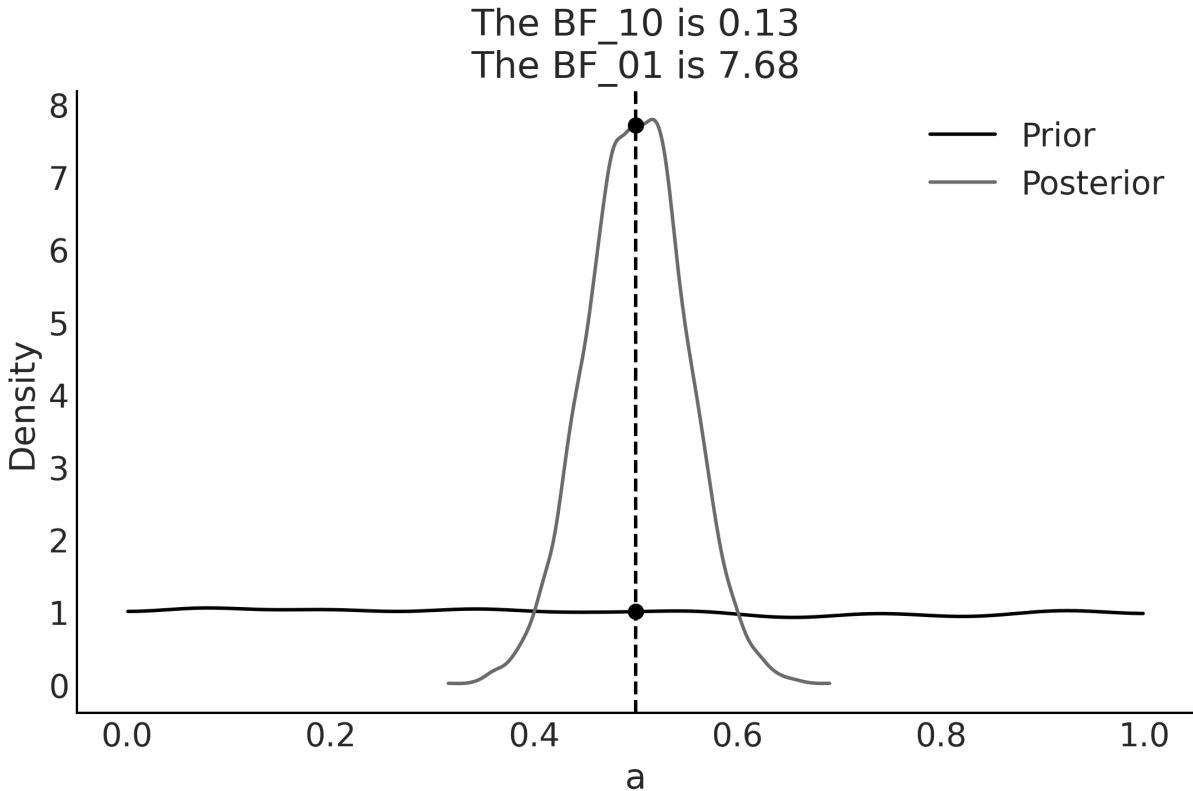

- 5.6 Bayes factors

- 5.7 Bayes factors and inference

- 5.8 Regularizing priors

- 5.9 Summary

- 5.10 Exercises

- Join our community Discord space

- Chapter 6 Modeling with Bambi

- 6.1 One syntax to rule them all

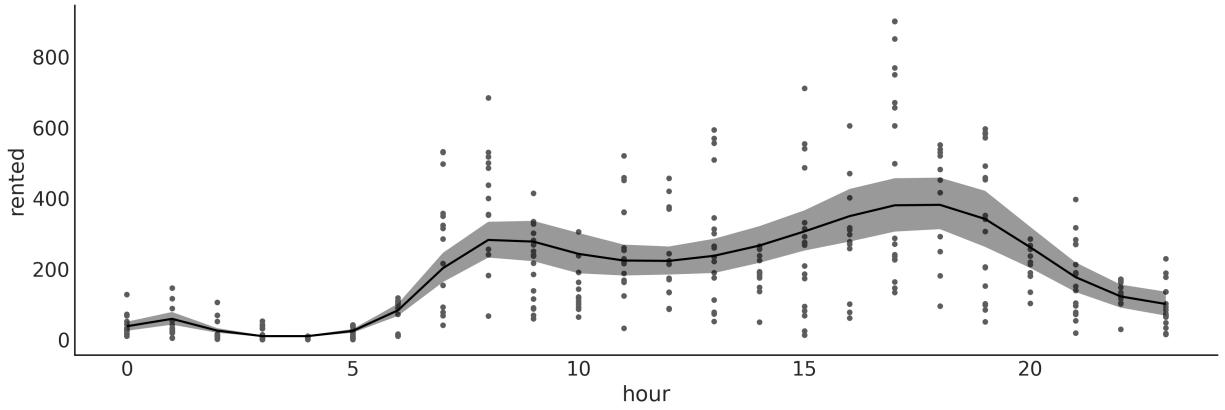

- 6.2 The bikes model, Bambi’s version

- 6.3 Polynomial regression

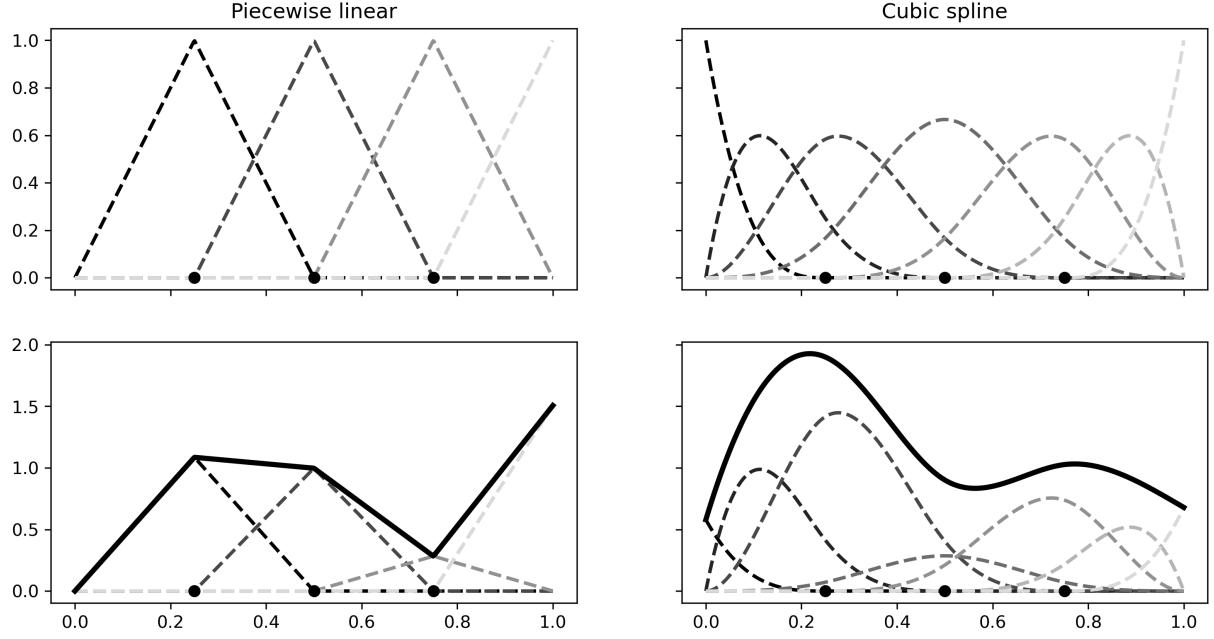

- 6.4 Splines

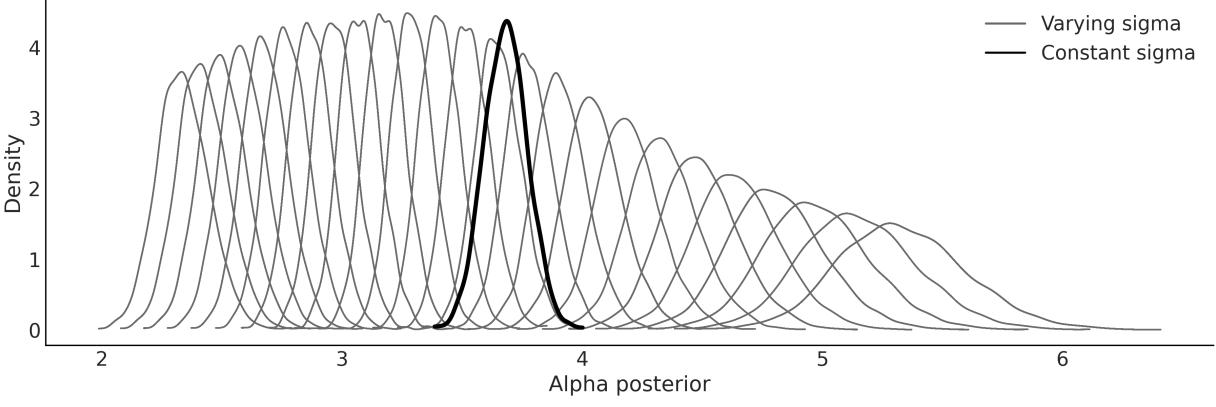

- 6.5 Distributional models

- 6.6 Categorical predictors

- 6.7 Interactions

- 6.8 Interpreting models with Bambi

- 6.9 Variable selection

- 6.10 Summary

- 6.11 Exercises

- Join our community Discord space

- Chapter 7 Mixture Models

- 7.1 Understanding mixture models

- 7.2 Finite mixture models

- 7.3 The non-identifiability of mixture models

- 7.4 How to choose K

- 7.5 Zero-Inflated and hurdle models

- 7.6 Mixture models and clustering

- 7.7 Non-finite mixture model

- 7.8 Continuous mixtures

- 7.9 Summary

- 7.10 Exercises

- Join our community Discord space

- Chapter 8 Gaussian Processes

- 8.1 Linear models and non-linear data

- 8.2 Modeling functions

- 8.3 Multivariate Gaussians and functions

- 8.4 Gaussian processes

- 8.5 Gaussian process regression

- 8.6 Gaussian process regression with PyMC

- 8.7 Gaussian process classification

- 8.8 Cox processes

- 8.9 Regression with spatial autocorrelation

- 8.10 Hilbert space GPs

- 8.11 Summary

- 8.12 Exercises

- Join our community Discord space

- Chapter 9 Bayesian Additive Regression Trees

- 9.1 Decision trees

- 9.2 BART models

- 9.3 Distributional BART models

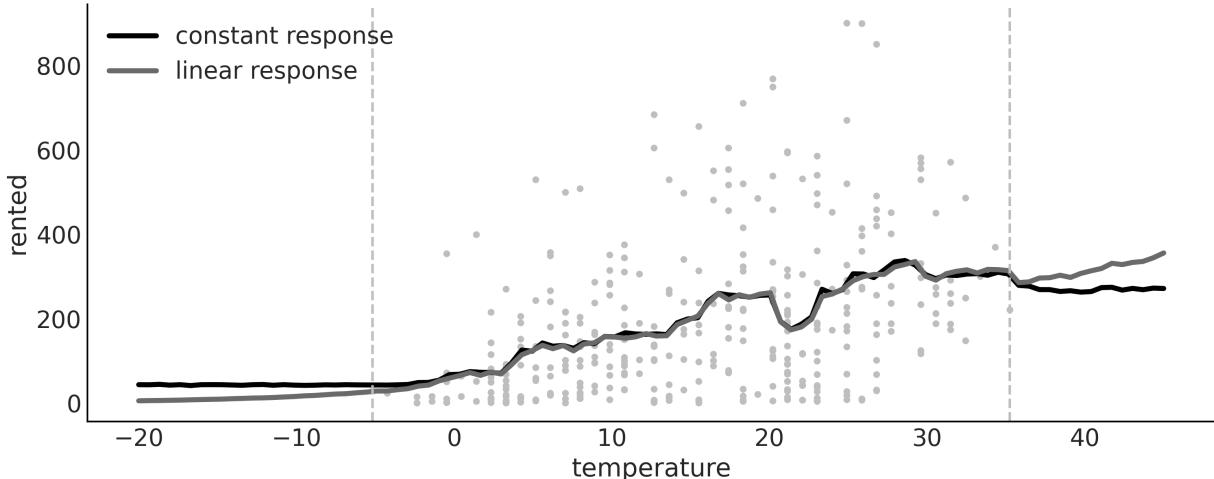

- 9.4 Constant and linear response

- 9.5 Choosing the number of trees

- 9.6 Summary

- 9.7 Exercises

- Join our community Discord space

- Chapter 10 Inference Engines

- 10.1 Inference engines

- 10.2 The grid method

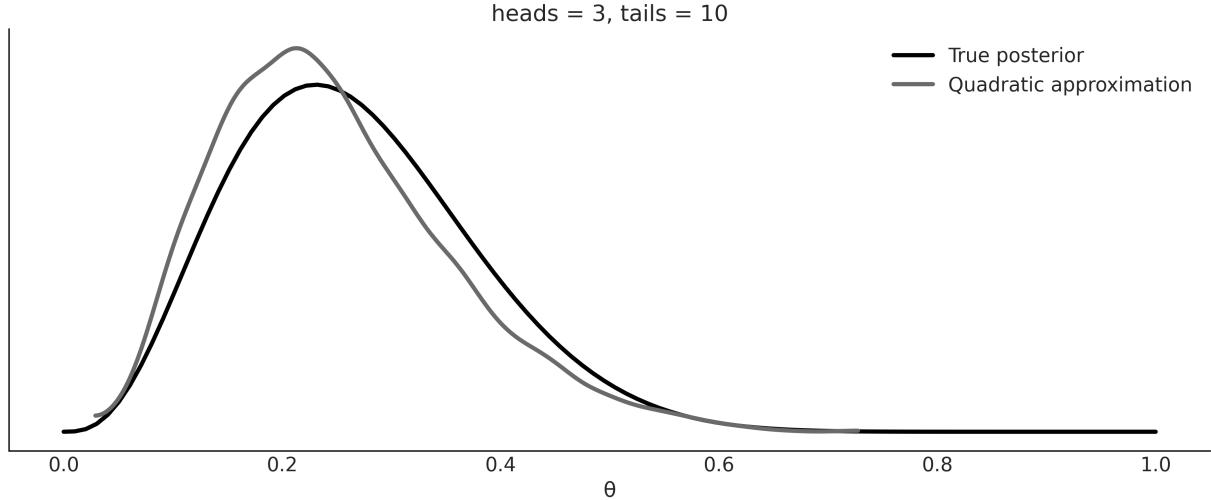

- 10.3 Quadratic method

- 10.4 Markovian methods

- 10.5 Sequential Monte Carlo

- 10.6 Diagnosing the samples

- 10.7 Convergence

- 10.8 Effective Sample Size (ESS)

- 10.9 Monte Carlo standard error

- 10.10 Divergences

- 10.11 Keep calm and keep trying

- 10.12 Summary

- 10.13 Exercises

- Join our community Discord space

- Chapter 11 Where to Go Next

Preface

Bayesian statistics has been developing for more than 250 years. During this time, it has enjoyed as much recognition and appreciation as it has faced disdain and contempt. Throughout the last few decades, it has gained more and more attention from people in statistics and almost all the other sciences, engineering, and even outside the boundaries of the academic world. This revival has been possible due to theoretical and computational advancements developed mostly throughout the second half of the 20th century. Indeed, modern Bayesian statistics is mostly computational statistics. The necessity for flexible and transparent models and a more intuitive interpretation of statistical models and analysis has only contributed to the trend.

In this book, our focus will be on a practical approach to Bayesian statistics and we will not delve into discussions about the frequentist approach or its connection to Bayesian statistics. This decision is made to maintain a clear and concise focus on the subject matter. If you are interested in that perspective, Doing Bayesian Data Analysis may be the book for you [Kruschke, 2014]. We also avoid philosophical discussions, not because they are not interesting or relevant, but because this book aims to be a practical guide to Bayesian data analysis. One good reading for such discussion is Clayton [2021].

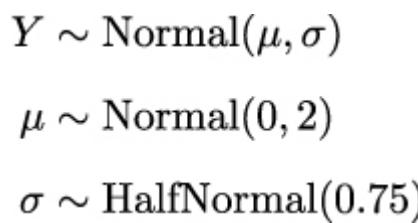

We follow a modeling approach to statistics. We will learn how to think in terms of probabilistic models and apply Bayes’ theorem to derive the logical consequences of our models and data. The approach will also be computational; models will be coded using PyMC [Abril-Pla et al., 2023] and Bambi [Capretto et al., 2022]. These are libraries for Bayesian statistics that hide most of the mathematical details and computations from the user. We will then use ArviZ [Kumar et al., 2019], a Python package for exploratory analysis of Bayesian models, to better understand our results. We will also be assisted by other libraries in the Python ecosystem, including PreliZ [Icazatti et al., 2023] for prior elicitation, Kulprit for variable selection, and PyMC-BART [Quiroga et al., 2022] for flexible regression. And of course, we will also use common tools from the standard Python Data stack, like NumPy [Harris et al., 2020], matplotlib [Hunter, 2007], Pandas [Wes McKinney, 2010], etc.

Bayesian methods are theoretically grounded in probability theory, and so it’s no wonder that many books about Bayesian statistics are full of mathematical formulas requiring a certain level of mathematical sophistication. Learning the mathematical foundations of statistics will certainly help you build better models and gain intuition about problems, models, and results. Nevertheless, libraries such as PyMC allow us to learn and do Bayesian statistics with only a modest amount of mathematical knowledge, as you will be able to verify yourself throughout this book.

Who this book is for

If you are a student, data scientist, researcher in the natural or social sciences, or developer looking to get started with Bayesian data analysis and probabilistic programming, this book is for you. The book is introductory, so no previous statistical knowledge is required. However, the book assumes you have experience with Python and familiarity with libraries like NumPy and matplotlib.

What this book covers

Chapter 1, Thinking Probabilistically, covers the basic concepts of Bayesian statistics and its implications for data analysis. This chapter contains most of the foundational ideas used in the rest of the book.

Chapter 2, Programming Probabilistically, revisits the concepts from the previous chapter from a more computational perspective. The PyMC probabilistic programming library and ArviZ, a Python library for exploratory analysis of Bayesian models, are introduced.

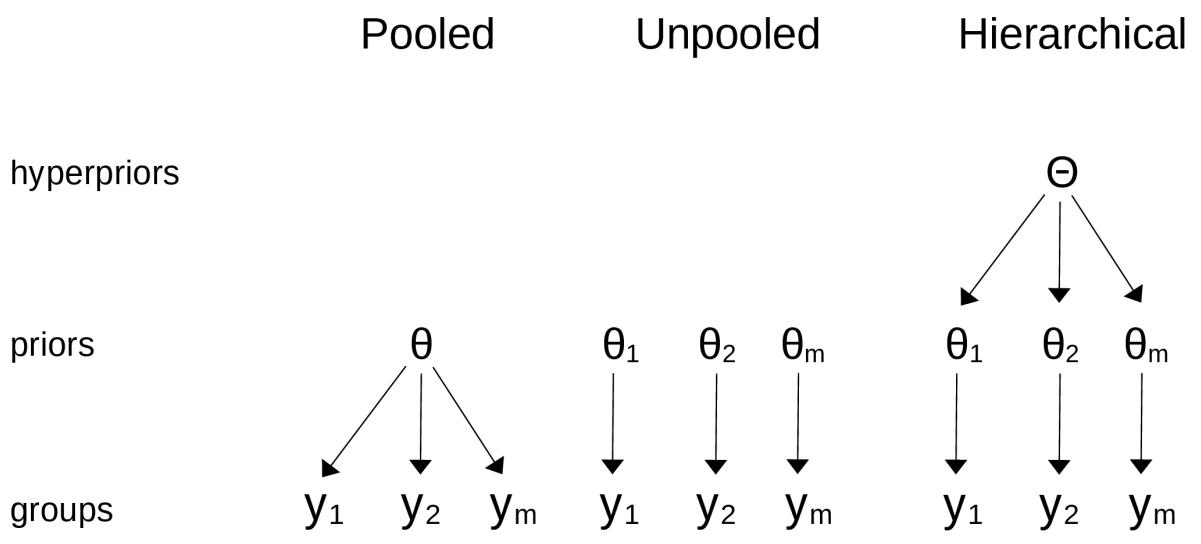

Chapter 3, Hierarchical Models, illustrates the core ideas of hierarchical models through examples.

Chapter 4, Modeling with Lines, covers the basic elements of linear regression, a very widely used model and the building block of more complex models, and then moves into generalizing linear models to solve many data analysis problems.

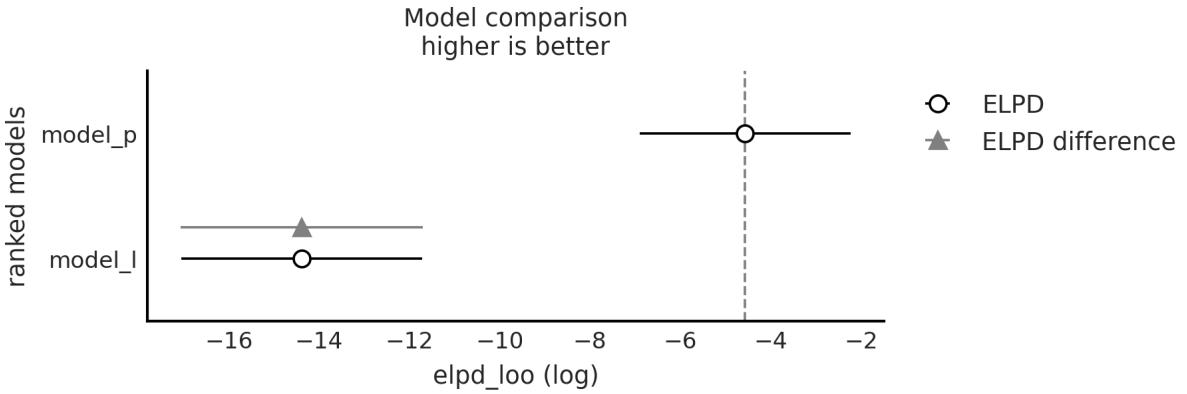

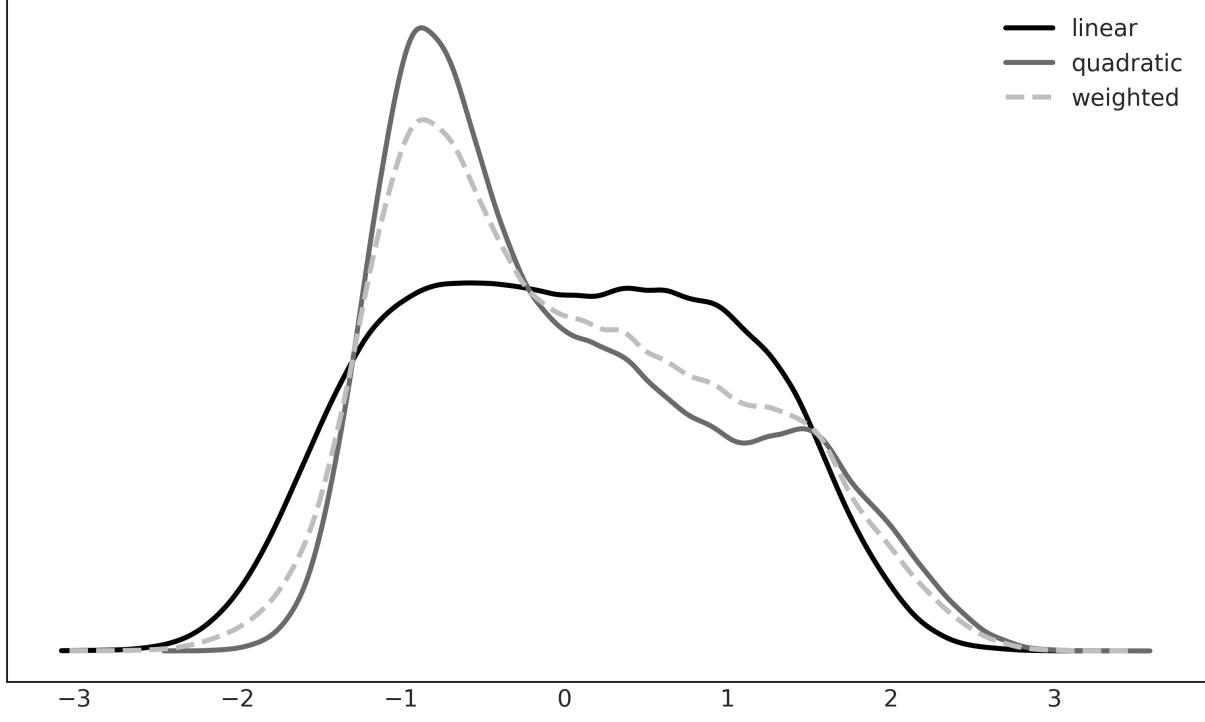

Chapter 5, Comparing Models, discusses how to compare and select models using posterior predictive checks, LOO, and Bayes factors. The general caveats of these methods are discussed and model averaging is also illustrated.

Chapter 6, Modeling with Bambi, introduces Bambi, a Bayesian library built on top of PyMC that simplifies working with generalized linear models. In this chapter, we will also discuss variable selection and new models like splines.

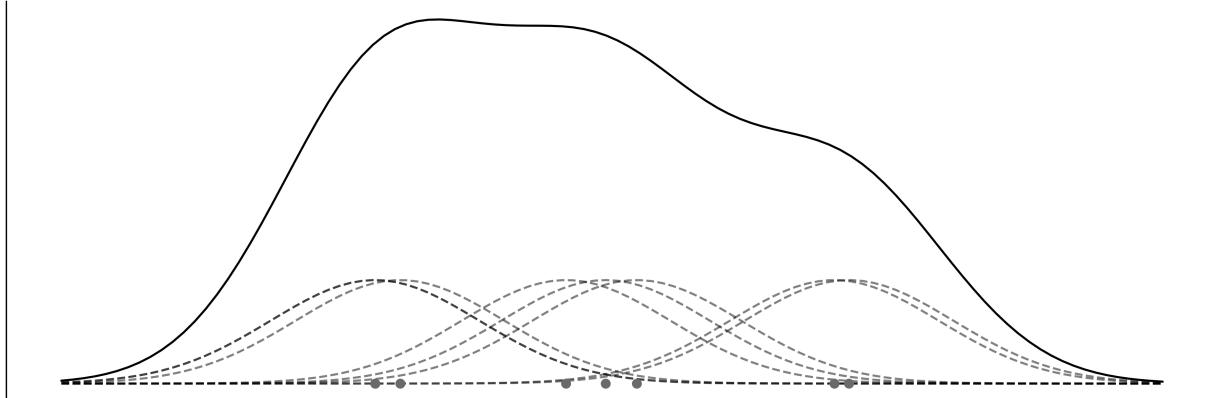

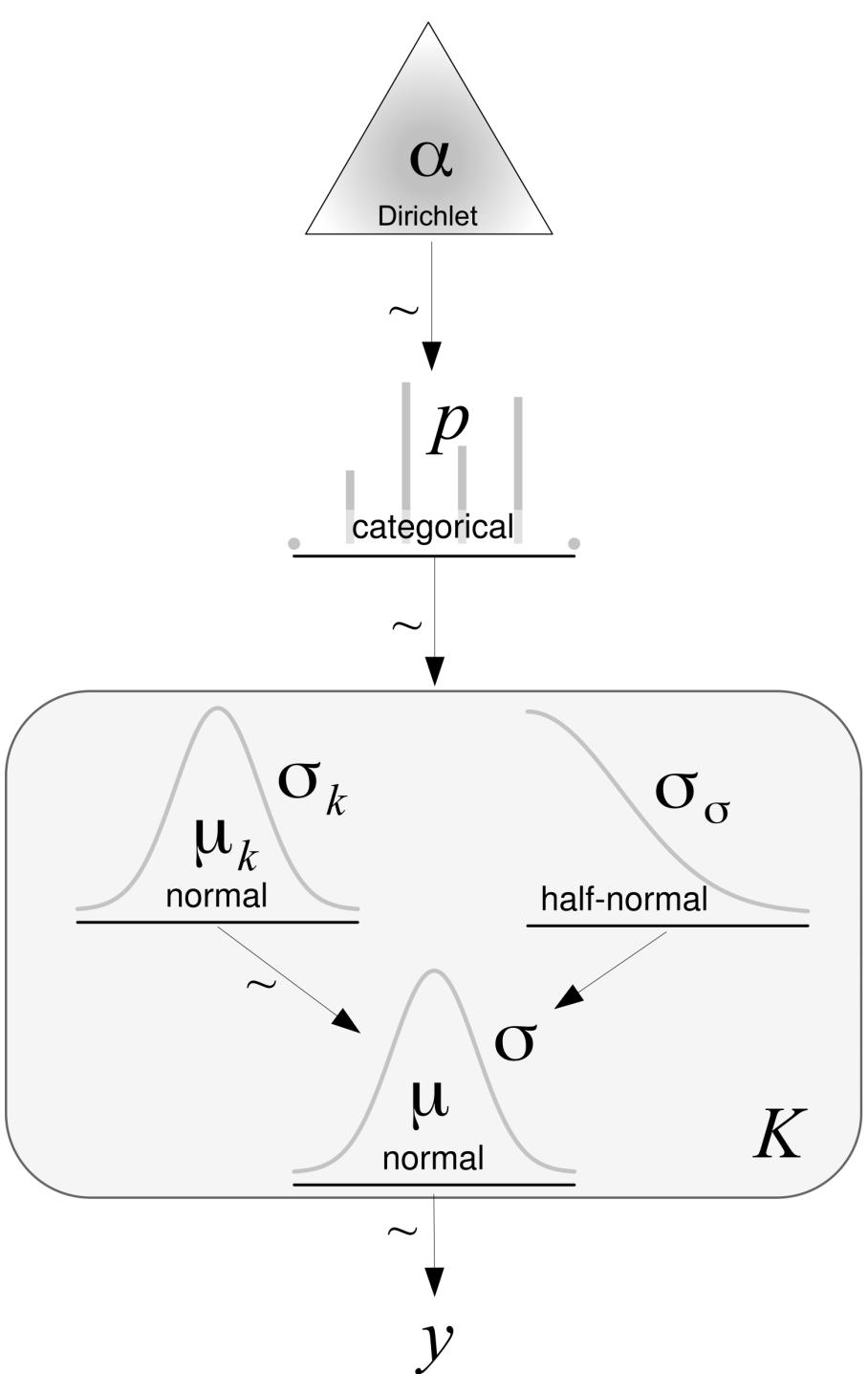

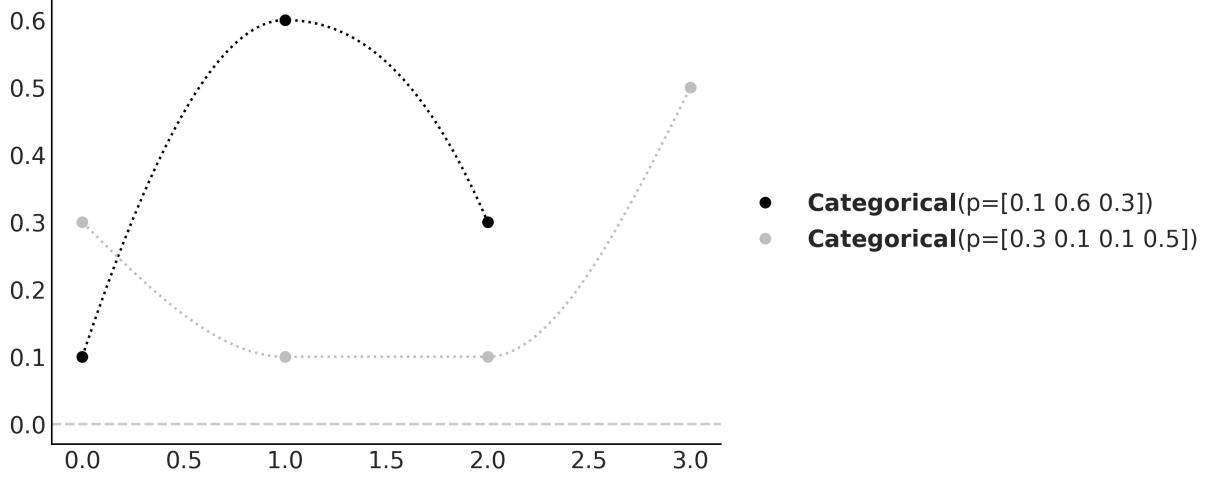

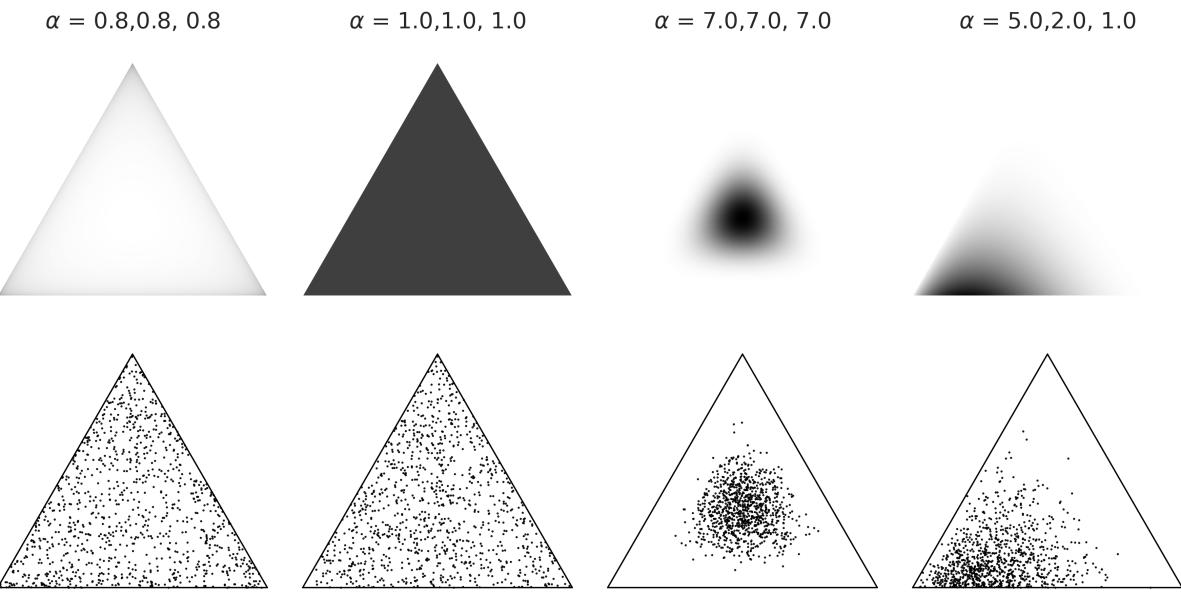

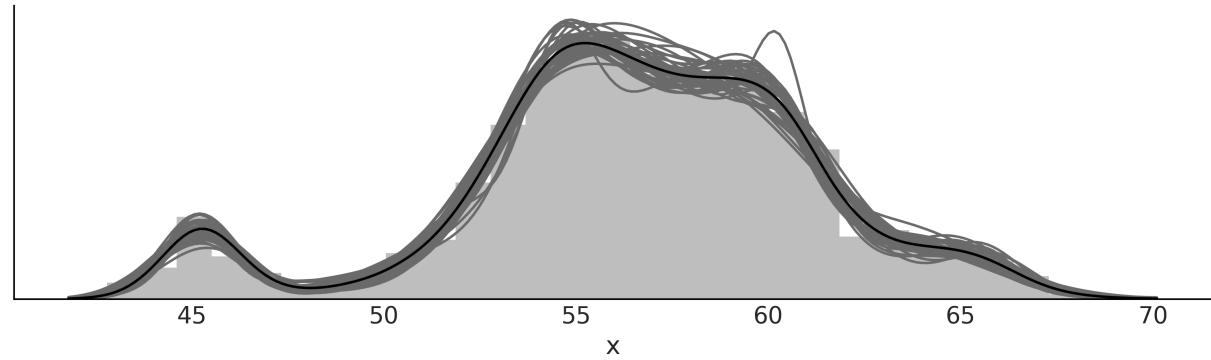

Chapter 7, Mixture Models, discusses how to add flexibility to models by mixing simpler distributions to build more complex ones. The first non-parametric model in the book is also introduced: the Dirichlet process.

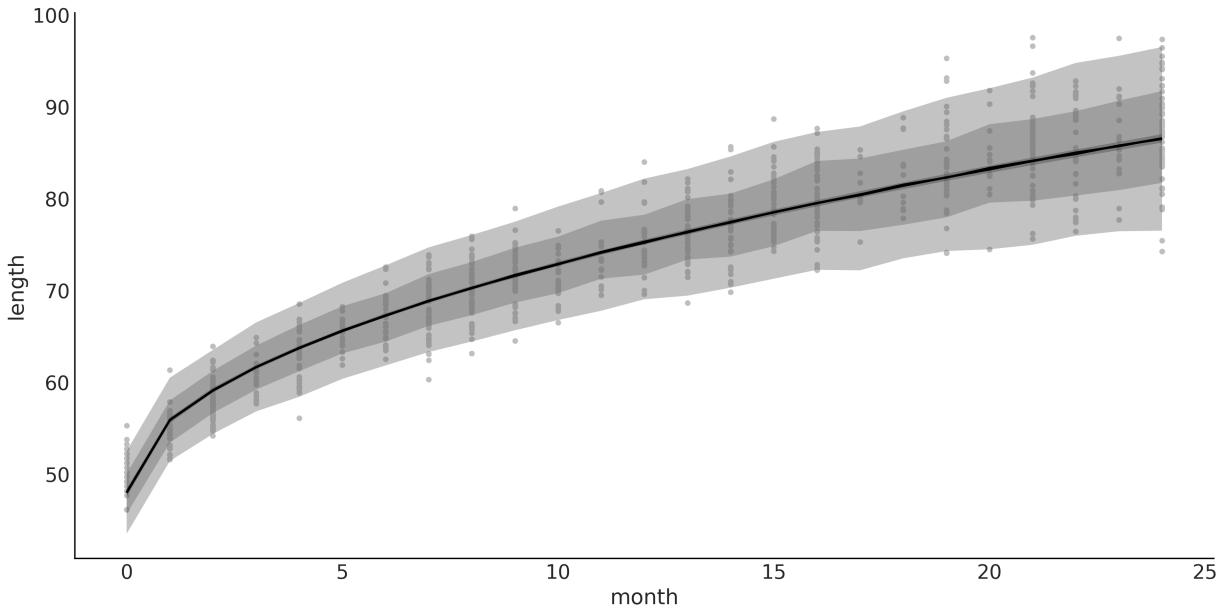

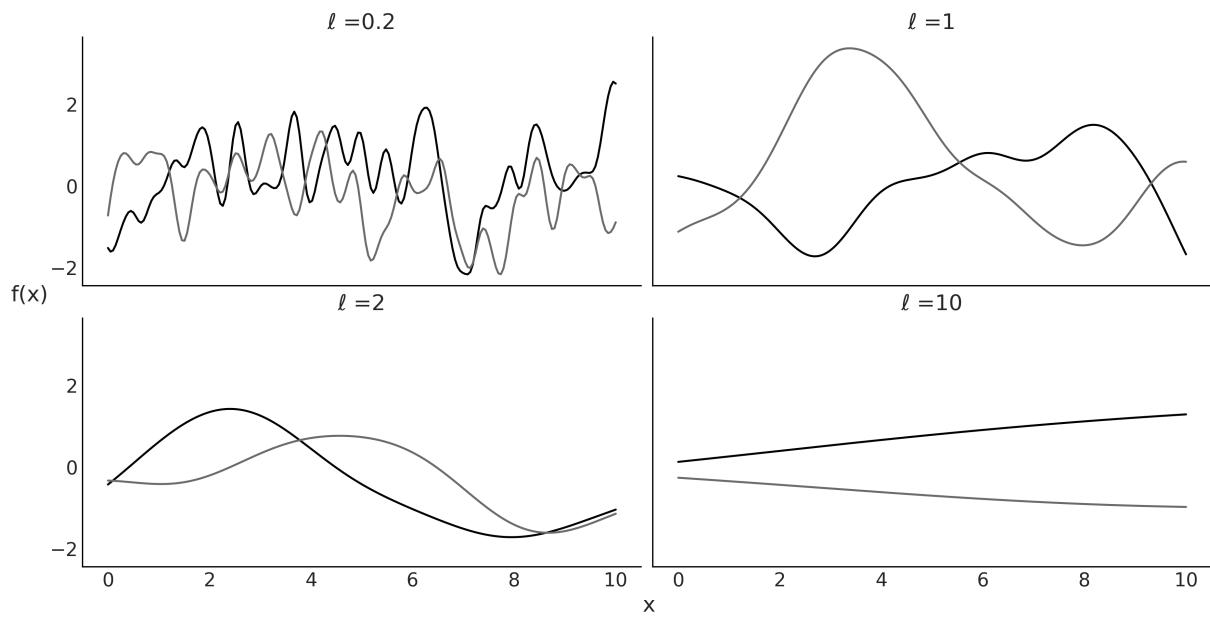

Chapter 8, Gaussian Processes, covers the basic idea behind Gaussian processes and how to use them to build non-parametric models over functions for a wide array of problems.

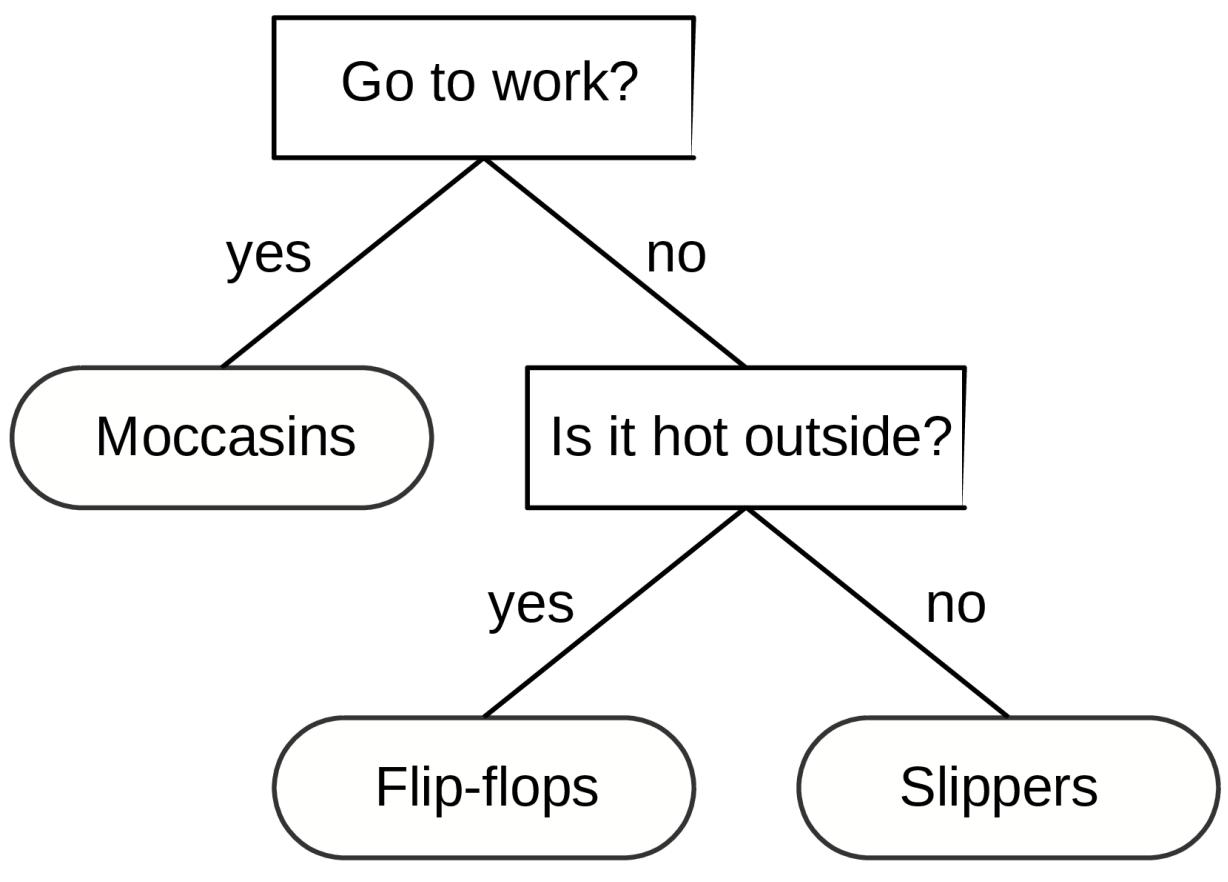

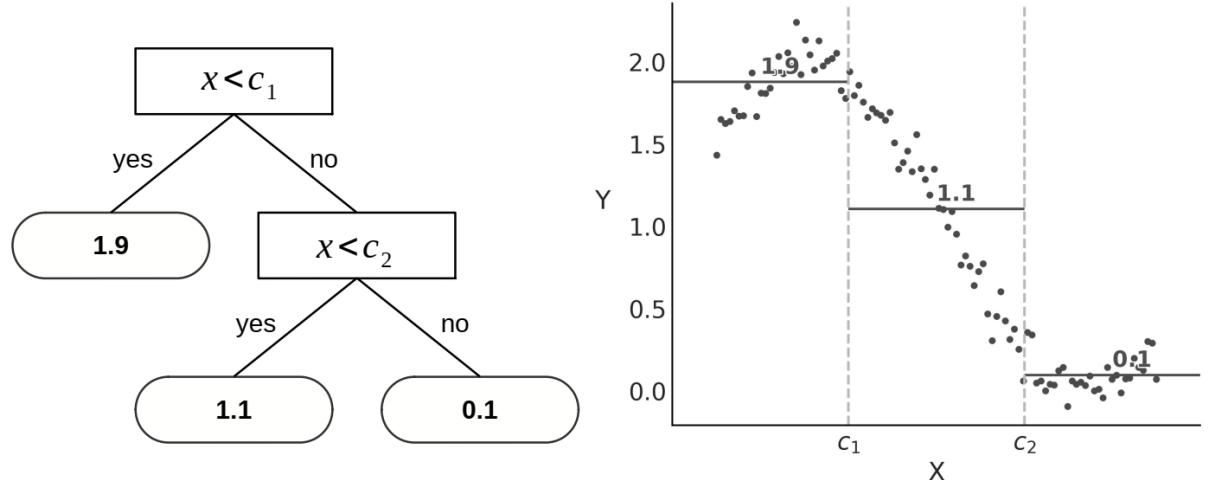

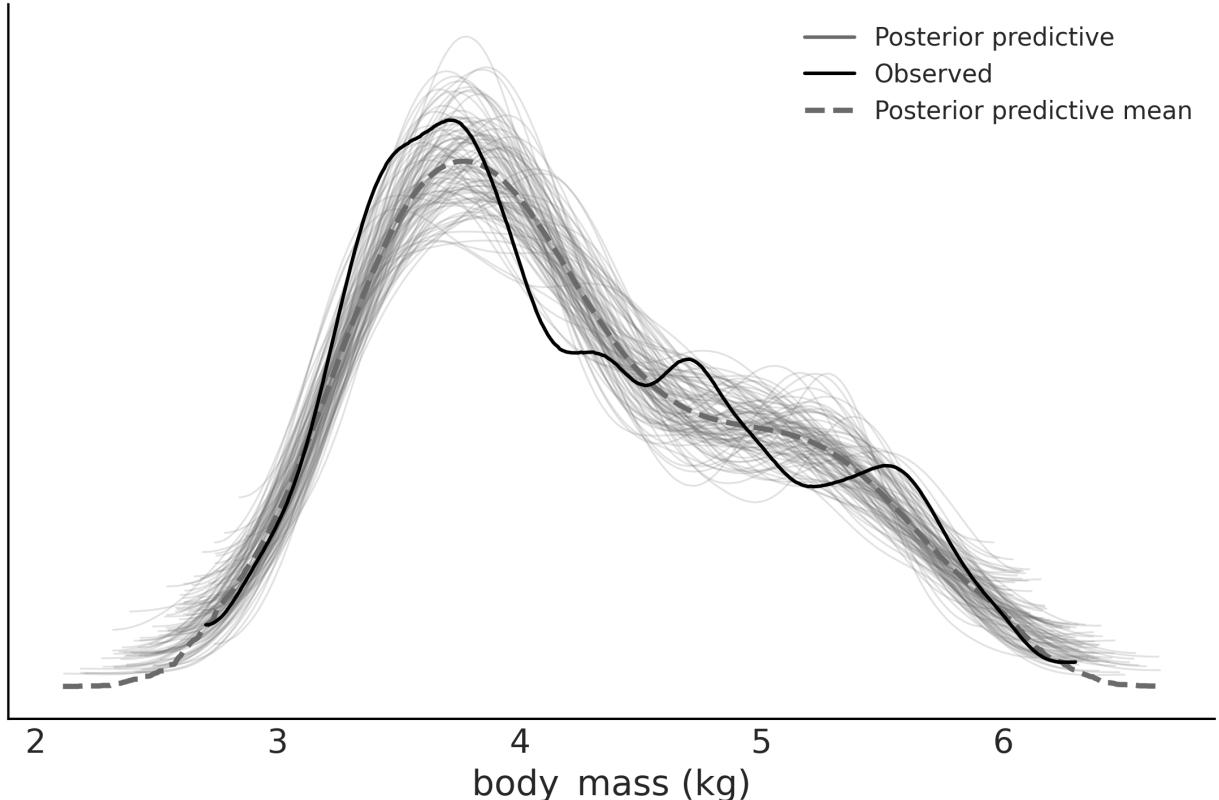

Chapter 9, Bayesian Additive Regression Trees, introduces readers to a flexible regression model that combines decision trees and Bayesian modeling techniques. The chapter will cover the key features of BART, including its flexibility in capturing non-linear relationships between predictors and outcomes and how it can be used for variable selection.

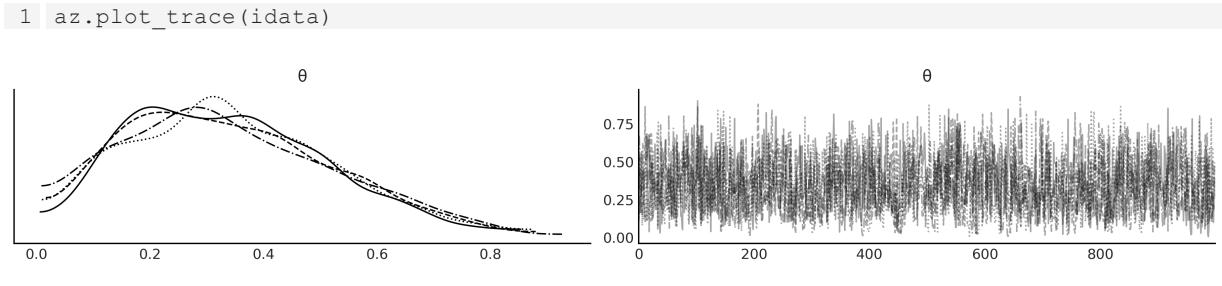

Chapter 10, Inference Engines, provides an introduction to methods for numerically approximating the posterior distribution, as well as a very important topic from the practitioner’s perspective: how to diagnose the reliability of the approximated posterior.

Chapter 11, Where to Go Next?, provides a list of resources to keep learning from beyond this book, and a concise farewell speech.

What’s new in this edition?

We have incorporated feedback from readers of the second edition to refine the text and the code in this third edition, to improve clarity and readability. We have also added new examples and new sections and removed some sections that in retrospect were not that useful.

In the second edition, we made extensive use of PyMC and ArviZ. In this new edition, we use the latest versions of PyMC and ArviZ available at the time of writing and we showcase some of their new features. This new edition also reflects how the PyMC ecosystem has bloomed in the last few years. We discuss four new libraries:

- Bambi, a library for Bayesian regression models with a very simple interface. We dedicate a chapter to it.

- Kulprit, a very new library for variable selection built on top of Bambi. We show one example of how to use it and provide the intuition for the theory behind this package.

- PreliZ, a library for prior elicitation. We use it from Chapter 1 onward and in many of the chapters that follow.

- PyMC-BART, a library that extends PyMC to support Bayesian Additive Regression Trees. We dedicate a chapter to it.

The following list delineates the changes introduced in the third edition as compared to the second edition.

Chapter 1, Thinking Probabilistically: We have added a new introduction to probability theory. This is something many readers asked for. The introduction is not meant to be a replacement for a proper course in probability theory, but it should be enough to get you started.

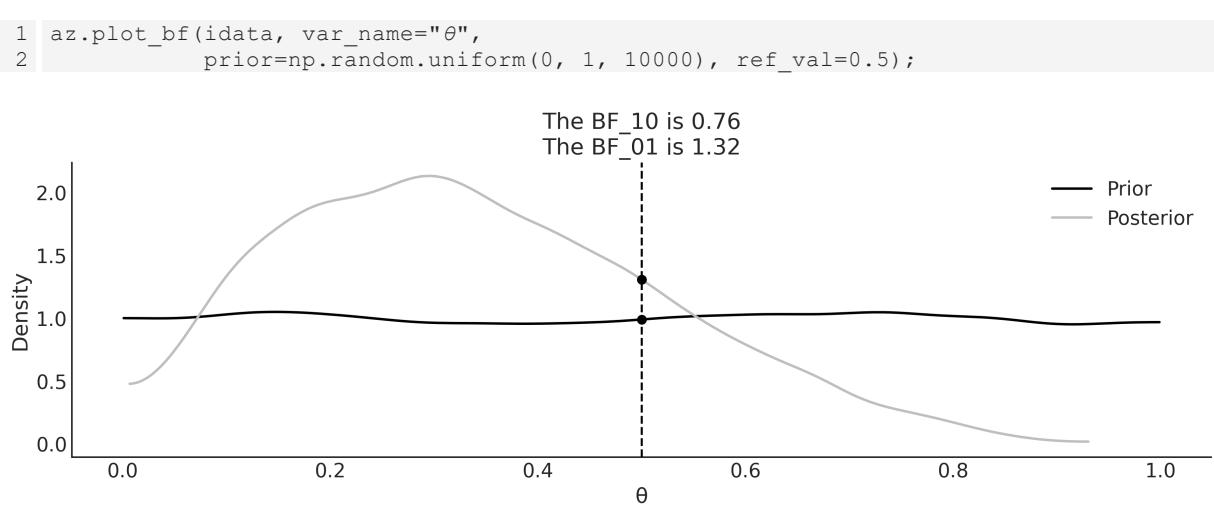

Chapter 2, Programming Probabilistically: We discuss the Savage-Dickey density ratio (also discussed in Chapter 5). We explain the InferenceData object from ArviZ and how to use coords and dims with PyMC and ArviZ. We moved the section on hierarchical models to its own chapter, Chapter 3.

Chapter 3, Hierarchical Models: We have promoted the discussion of hierarchical models to its own dedicated chapter. We refine the discussion of hierarchical models and add a new example, for which we use a dataset from European football leagues.

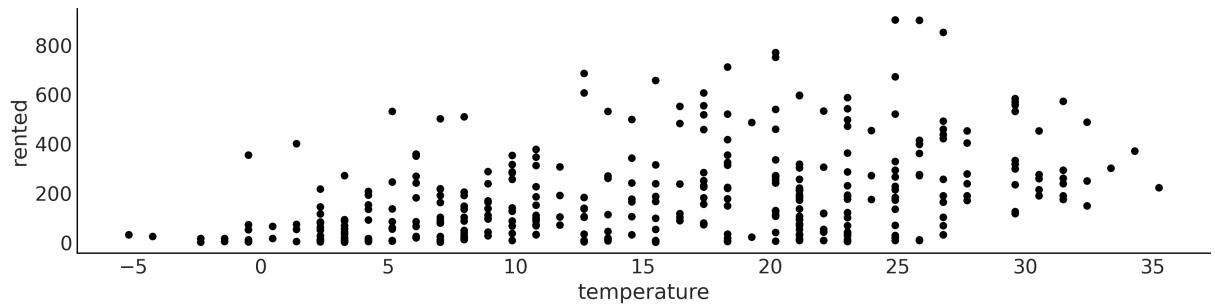

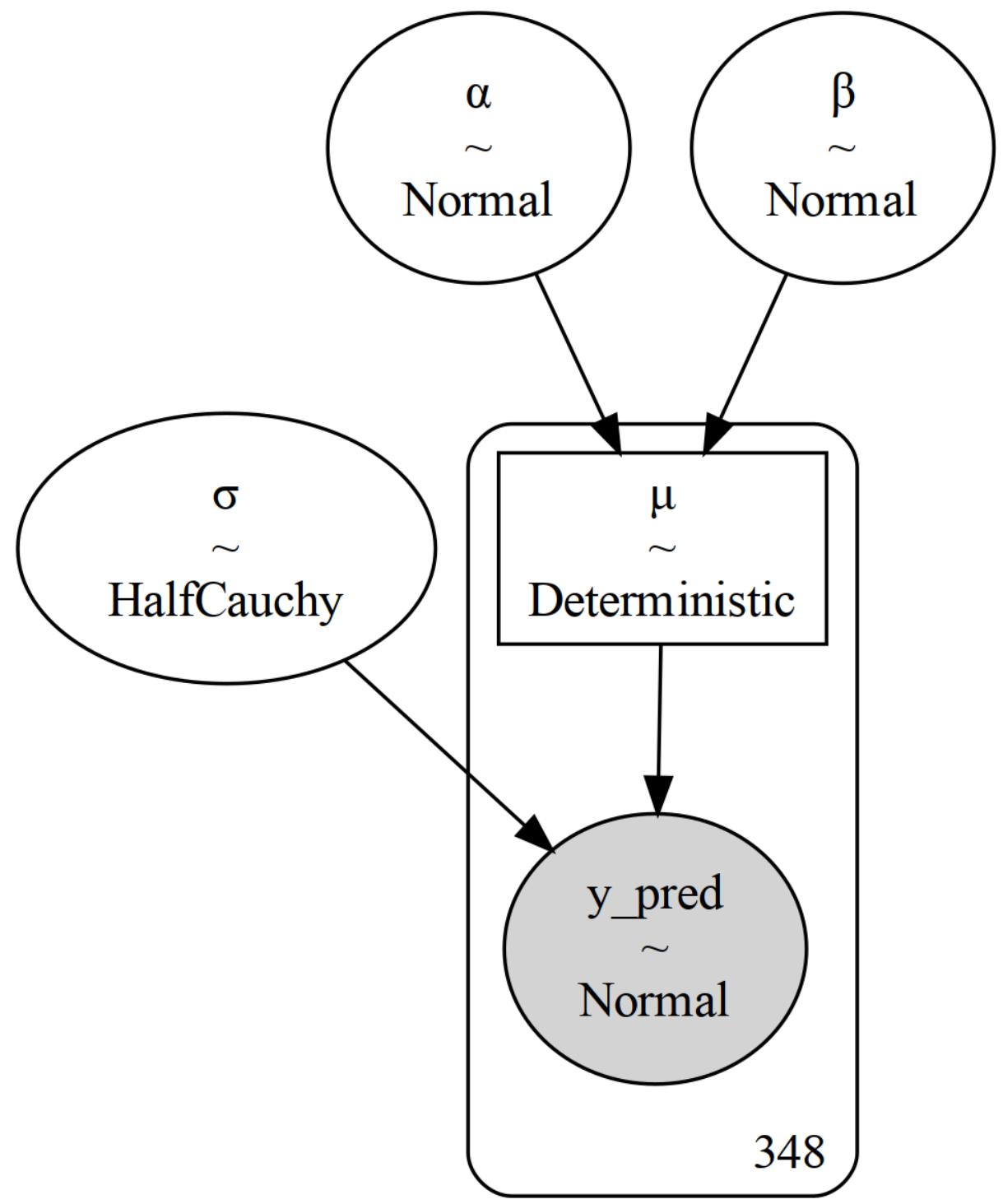

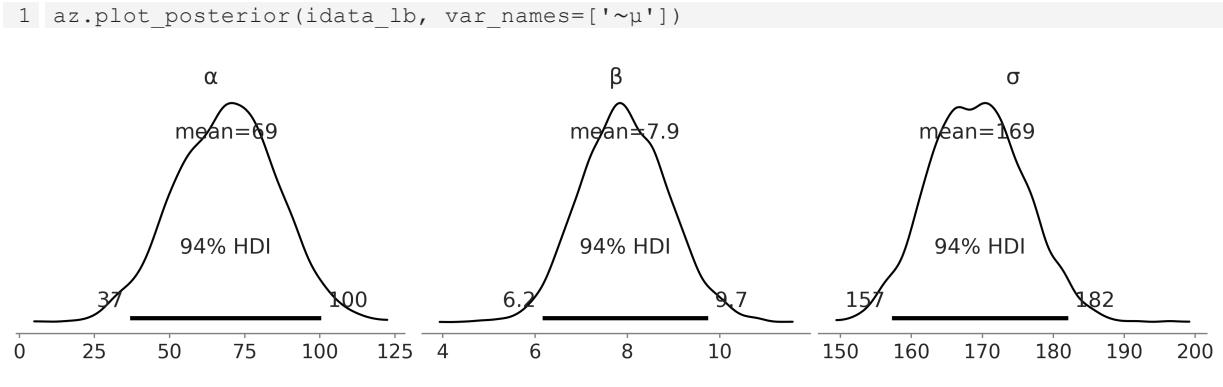

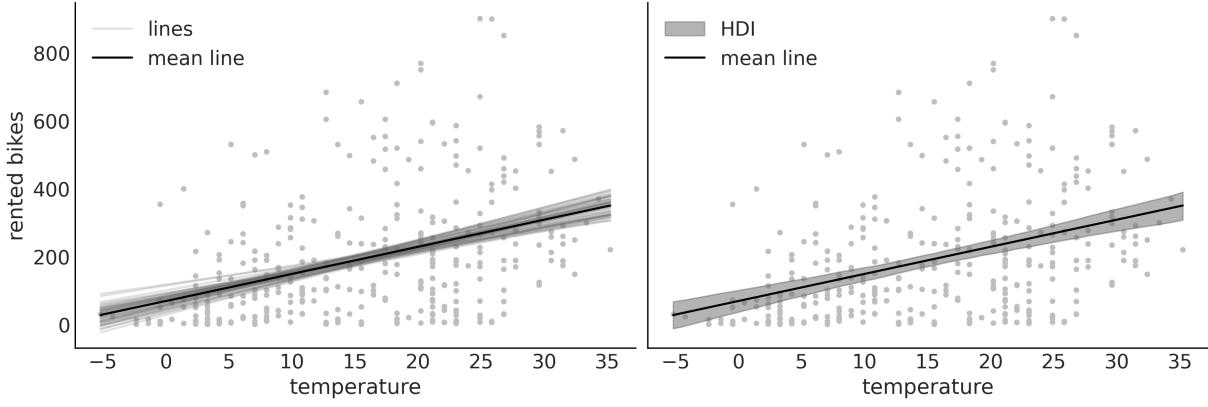

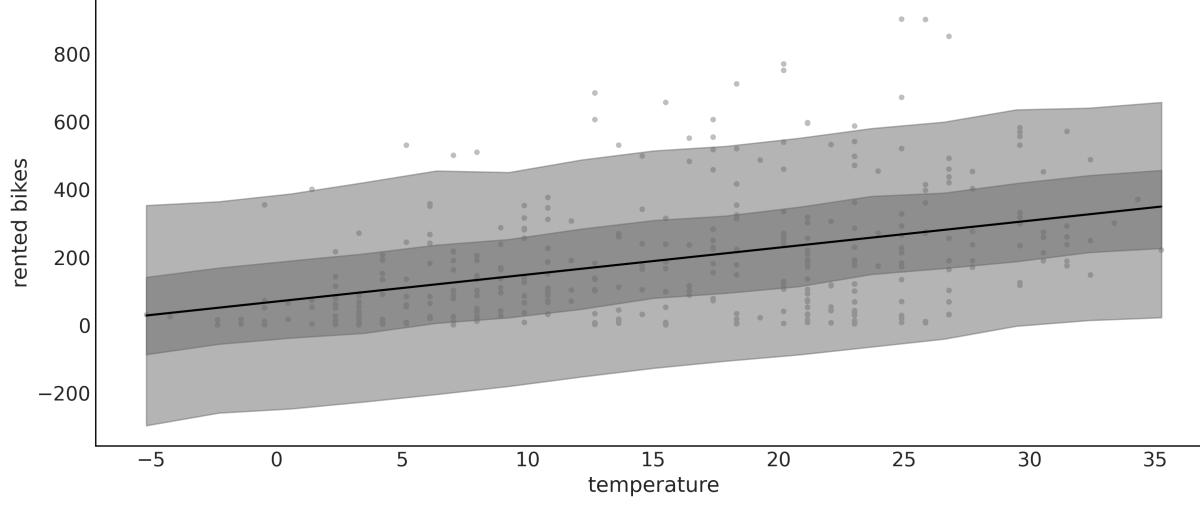

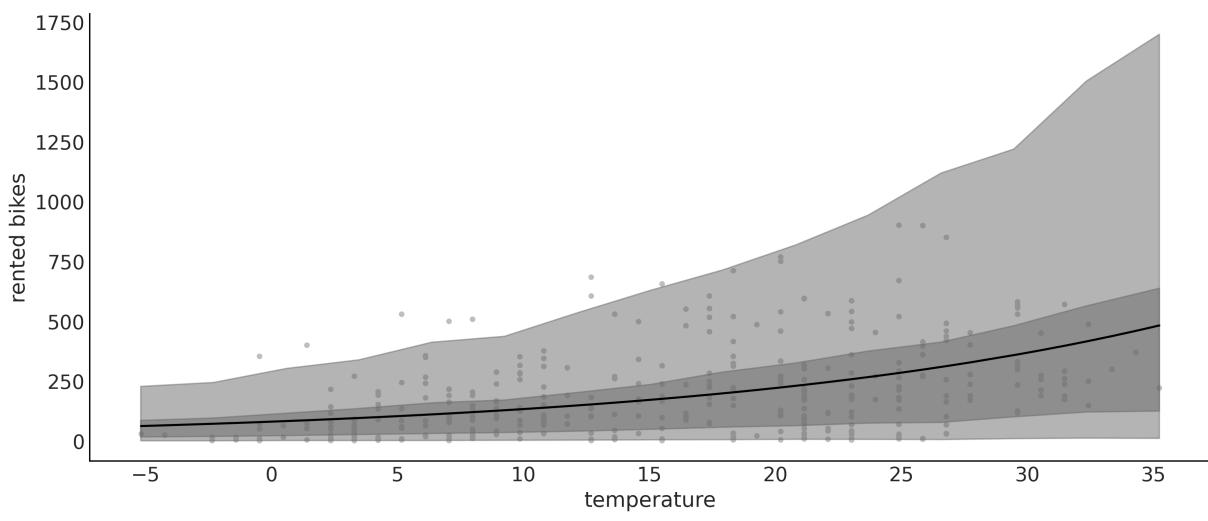

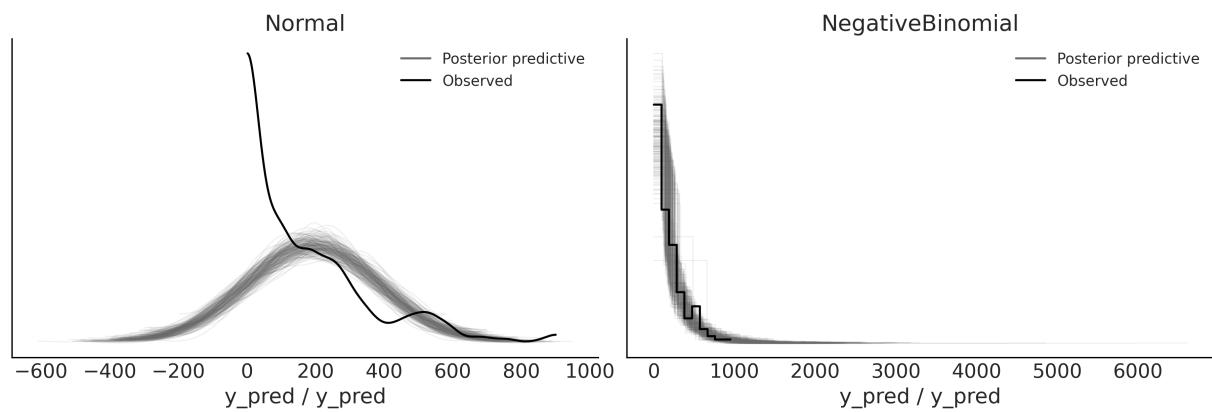

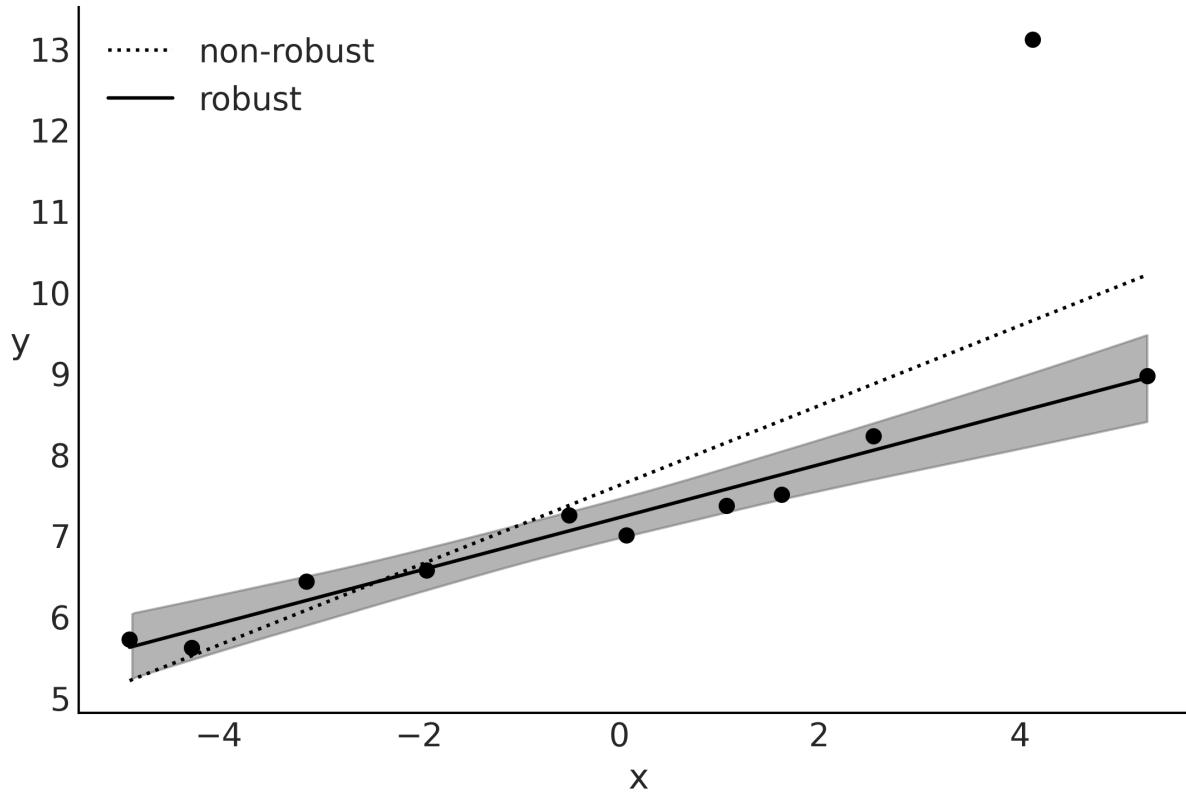

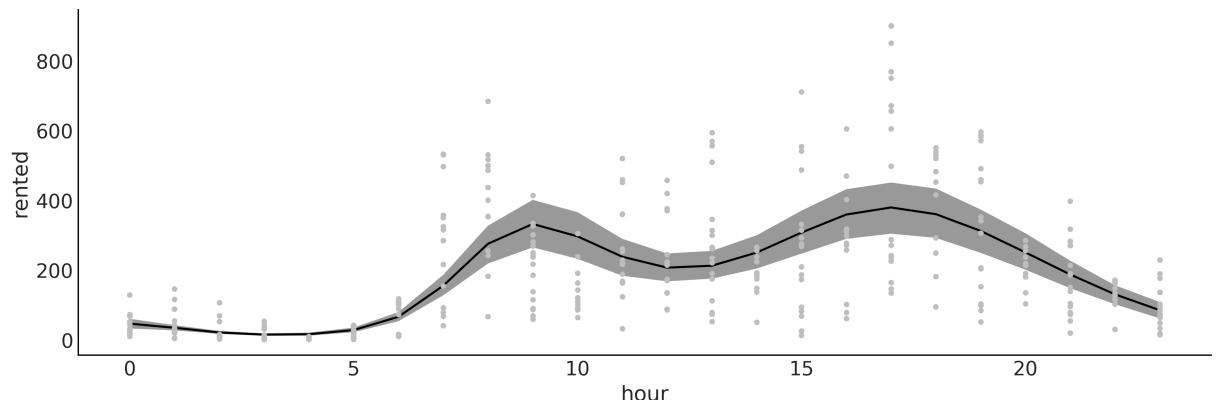

Chapter 4, Modeling with Lines: This chapter has been extensively rewritten. We use the Bikes dataset to introduce both simple linear regression and negative binomial regression. Generalized linear models (GLMs) are introduced early in this chapter (in the previous edition they were introduced in another chapter). This helps you to see the connection between linear regression and GLMs and allows us to introduce more advanced concepts in Chapter 6. We discuss the centered vs non-centered parametrization of linear models.

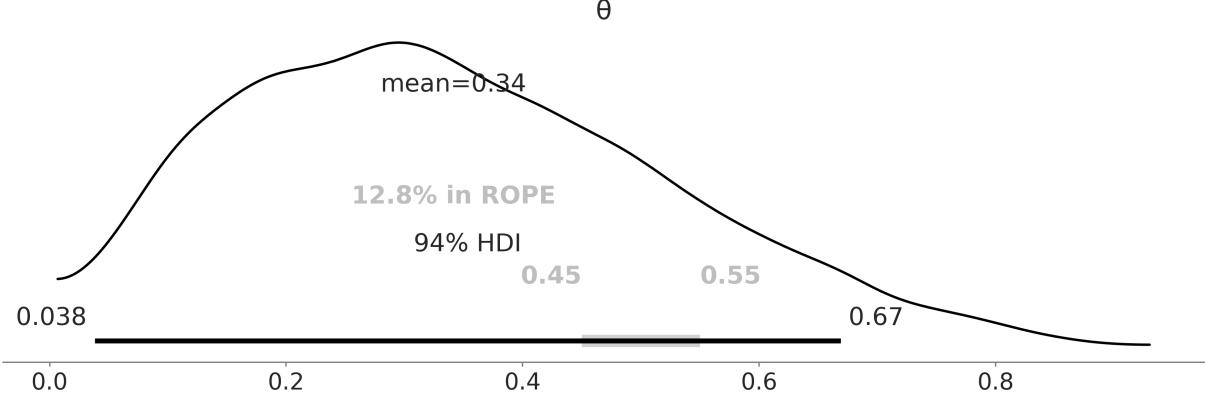

Chapter 5, Comparing Models: We have cleaned up the text to make it clearer and removed some bits that were not that useful after all. We now recommend the use of LOO over WAIC. We have added a discussion about the Savage-Dickey density ratio to compute Bayes factors.

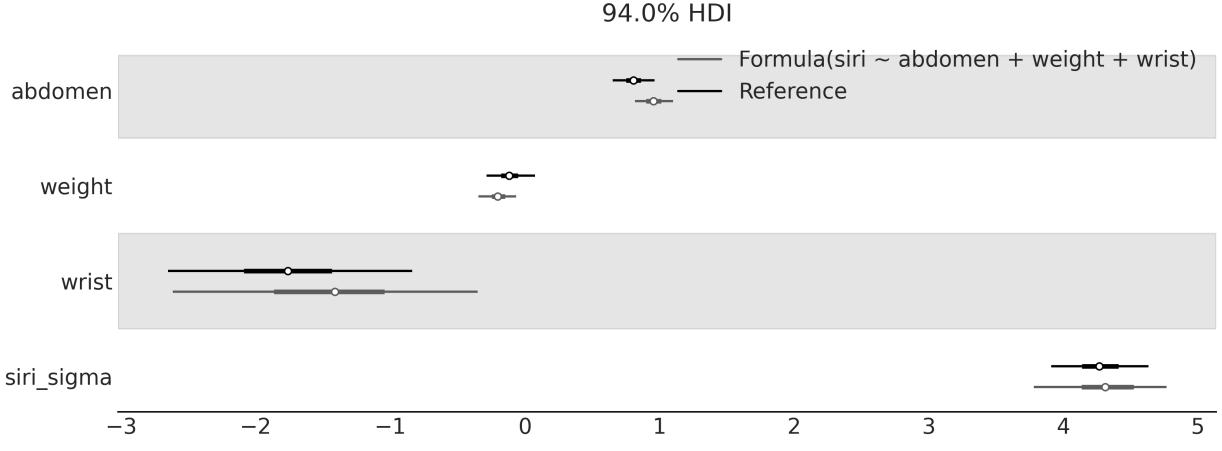

Chapter 6, Modeling with Bambi: We show you how to use Bambi, a high-level Bayesian model-building interface written in Python. We take advantage of the simple syntax offered by Bambi to expand what we learned in Chapter 4, including splines, distributional models, categorical models, and interactions. We also show how Bambi can help us to interpret complex linear models that otherwise can become confusing, error-prone, or just time-consuming. We close the chapter by discussing variable selection with Kulprit, a Python package that tightly integrates with Bambi.

Chapter 7, Mixture Models: We have clarified some of the discussions based on feedback from readers. We also discuss Zero-Inflated and hurdle models and show how to use rootograms to evaluate the fit of discrete models.

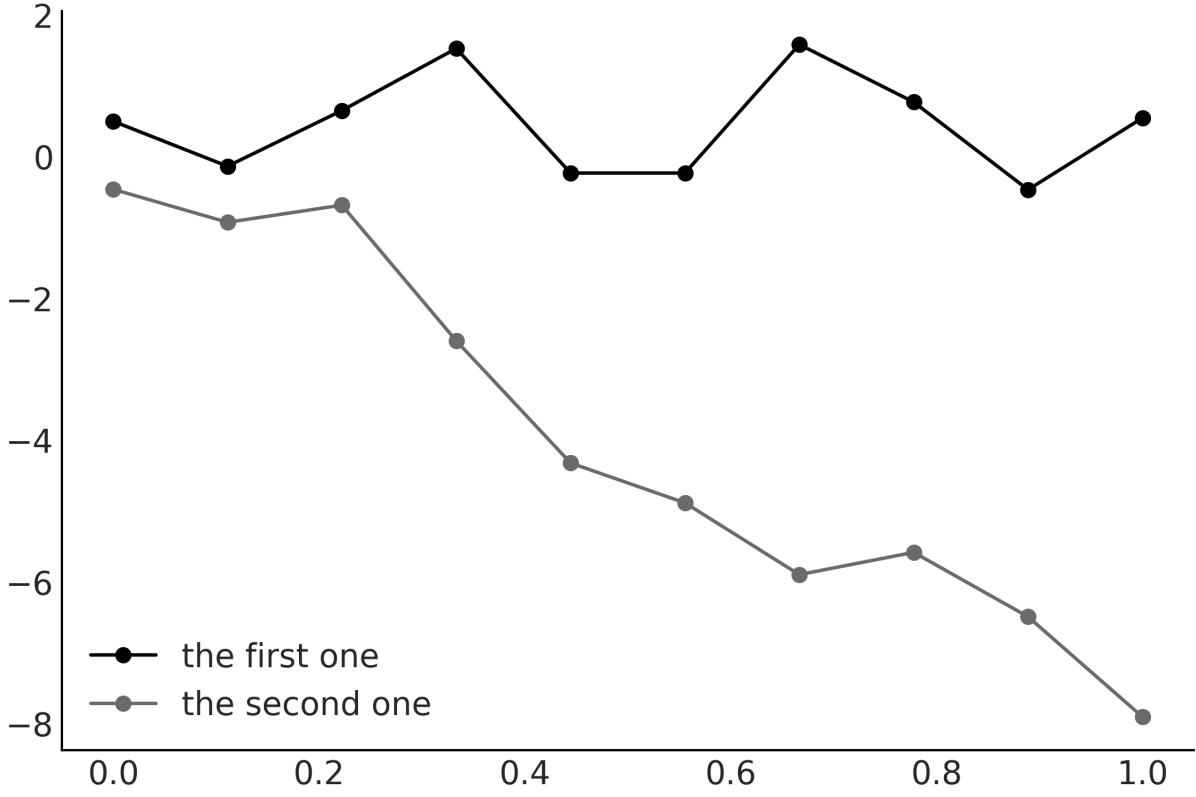

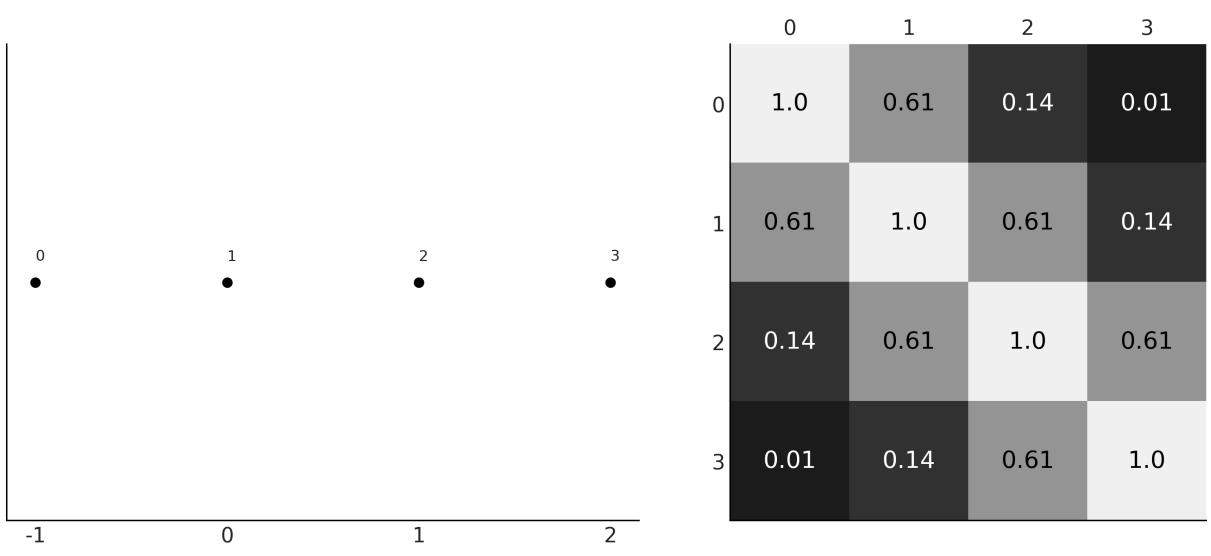

Chapter 8, Gaussian Processes: We have cleaned up the text to make explanations clearer and removed some of the boilerplate code and text for a more fluid reading. We also discuss how to define a kernel with a custom distance instead of the default Euclidean distance. We discuss the practical application of Hilbert Space Gaussian processes, a fast approximation to Gaussian processes.

Chapter 9, Bayesian Additive Regression Trees: This is an entirely new chapter discussing BART models, a flexible and easy-to-use non-parametric Bayesian method.

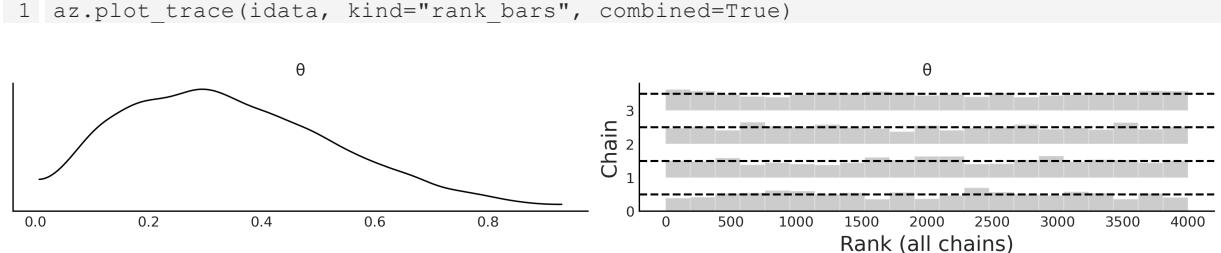

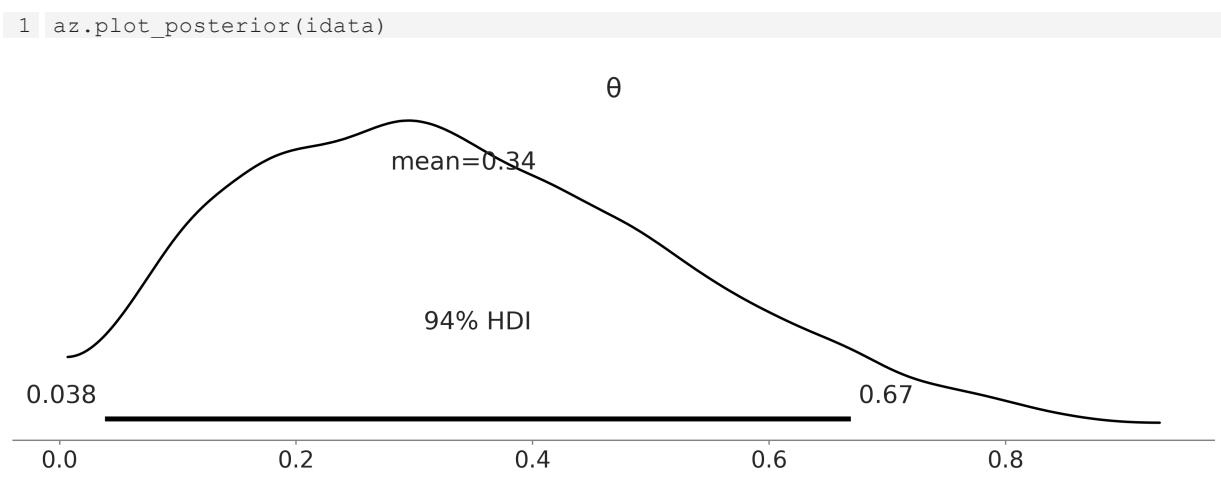

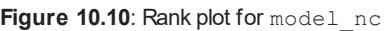

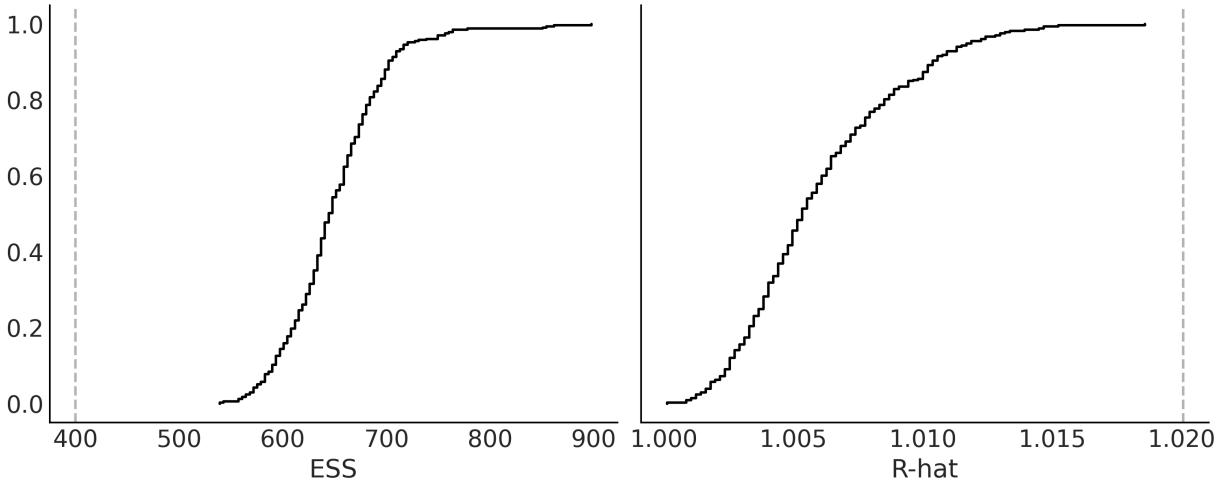

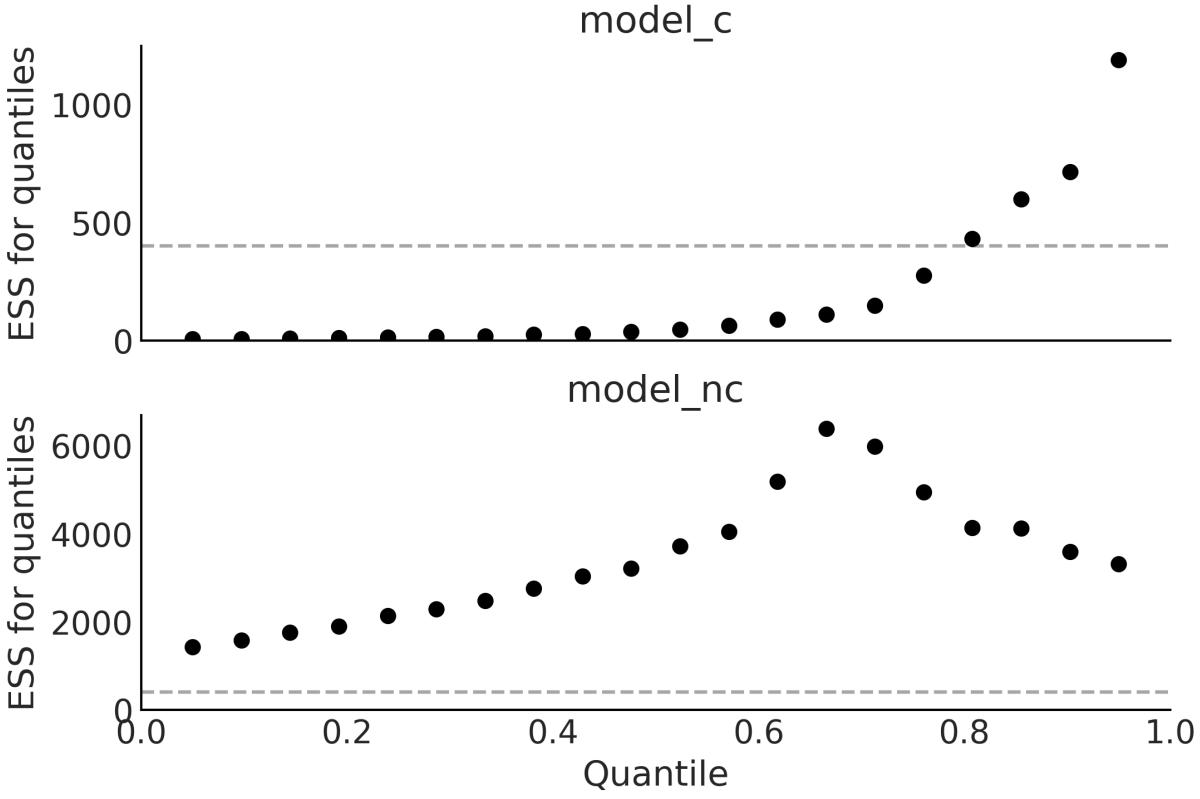

Chapter 10, Inference Engines: We have removed the discussion on variational inference as it is not used in the book. We have updated and expanded the discussion of trace plots, $\hat{R}$, ESS, and MCSE. We also included a discussion on rank plots and a better example of divergences and centered vs non-centered parameterizations.

Installation instructions

The code in the book was written using Python version 3.11.6. To install Python and Python libraries, I recommend using Anaconda, a scientific computing distribution. You can read more about Anaconda and download it at https://www.anaconda.com/products/distribution. This will install many useful Python packages on your system.

Additionally, you will need to install some packages. To do that, please use:

    conda install -c conda-forge pymc==5.8.0 arviz==0.16.1 bambi==0.13.0 pymc-bart==0.5.2 kulprit==0.0.1 preliz==0.3.6 nutpie==0.9.1

You can also use pip if you prefer:

    pip install pymc==5.8.0 arviz==0.16.1 bambi==0.13.0 pymc-bart==0.5.2 kulprit==0.0.1 preliz==0.3.6 nutpie==0.9.1

An alternative way to install the necessary packages once Anaconda is installed in your system is to go to https://github.com/aloctavodia/BAP3 and download the environment file named bap3.yml. With it, you can install all the necessary packages using the following command:

    conda env create -f bap3.yml

The Python packages used to write this book are listed here:

- ArviZ 0.16.1

- Bambi 0.13.0

- Kulprit 0.0.1

- PreliZ 0.3.6

- PyMC 5.8.0

- PyMC-BART 0.5.2

- Python 3.11.6

- Notebook 7.0.6

- Matplotlib 3.8.0

- NumPy 1.24.4

- Numba 0.58.1

- Nutpie 0.9.1

- SciPy 1.11.3

- Pandas 2.1.2

- Xarray 2023.10.1
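To compare your own environment with the versions listed above, a small check along the following lines may help. This is only a sketch: the helper names `installed_versions` and `report` are made up for illustration, the package names are assumed to be the same distribution names used in the install commands, and only the standard library's `importlib.metadata` is used.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions listed in the book, keyed by the distribution names
# used in the install commands (an assumption of this sketch).
EXPECTED = {
    "arviz": "0.16.1",
    "bambi": "0.13.0",
    "kulprit": "0.0.1",
    "preliz": "0.3.6",
    "pymc": "5.8.0",
    "pymc-bart": "0.5.2",
    "nutpie": "0.9.1",
}


def installed_versions(packages):
    """Return {name: installed version, or None if the package is missing}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


def report(expected):
    """Build one human-readable line per package."""
    lines = []
    for name, have in installed_versions(expected).items():
        want = expected[name]
        if have is None:
            status = "not installed"
        elif have == want:
            status = "matches the book"
        else:
            status = f"differs from the book ({want})"
        lines.append(f"{name}: {have} -> {status}")
    return lines


for line in report(EXPECTED):
    print(line)
```

A different version does not necessarily mean the code will fail; it only flags where behavior might deviate from what is shown in the book.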

How to run the code while reading

The code presented in each chapter assumes that you have imported at least some of these packages. Instead of copying and pasting the code from the book, I recommend downloading the code from https://github.com/aloctavodia/BAP3 and running it using Jupyter Notebook (or Jupyter Lab). Additionally, most figures in this book are generated using code that is present in the notebooks but not always shown in the book.

If you find a technical problem while running the code in this book, a typo in the text, or any other errors, please file an issue at https://github.com/aloctavodia/BAP3 and I will try to resolve it as soon as possible.

Conventions used

There are several text conventions used throughout this book.

code_in_text: Indicates code words in the text, filenames, or names of functions. Here is an example:

“Most of the preceding code is for plotting; the probabilistic part is performed by the y = stats.norm(mu, sd).pdf(x) line.”

A block of code is set as follows:

Code 1

    μ = 0.
    σ = 1.
    X = pz.Normal(μ, σ)
    x = X.rvs(3)

Bold: Indicates a new term, or an important word.

Italics: Suggests a less rigorous or colloquial utilization of a term.

Get in touch

Feedback from our readers is always welcome.

General feedback: If you have questions about any aspect of this book, mention the book title in the subject of your message and email us at customercare@packtpub.com.

Errata: Although we have taken every care to ensure the accuracy of our content, mistakes do happen. If you have found a mistake in this book, we would be grateful if you would open an issue ticket at https://github.com/aloctavodia/BAP3.

Becoming an author: If there is a topic that you have expertise in and you are interested in either writing or contributing to a book, please visit authors.packtpub.com.

For more information about Packt, please visit https://www.packtpub.com/.

Chapter 1 Thinking Probabilistically

Probability theory is nothing but common sense reduced to calculation. – Pierre Simon Laplace

In this chapter, we will learn about the core concepts of Bayesian statistics and some of the instruments in the Bayesian toolbox. We will use some Python code, but this chapter will be mostly theoretical; most of the concepts we will see here will be revisited many times throughout this book. This chapter, being heavy on the theoretical side, is perhaps a little anxiogenic for the coder in you, but I think it will ease the path to effectively applying Bayesian statistics to your problems.

In this chapter, we will cover the following topics:

- Statistical modeling

- Probabilities and uncertainty

- Bayes’ theorem and statistical inference

- Single-parameter inference and the classic coin-flip problem

- Choosing priors and why people often don’t like them but should

- Communicating a Bayesian analysis

1.1 Statistics, models, and this book’s approach

Statistics is about collecting, organizing, analyzing, and interpreting data, and hence statistical knowledge is essential for data analysis. Two main statistical methods are used in data analysis:

- Exploratory Data Analysis (EDA): This is about numerical summaries, such as the mean, mode, standard deviation, and interquartile ranges. EDA is also about visually inspecting the data, using tools you may be already familiar with, such as histograms and scatter plots.

- Inferential statistics: This is about making statements beyond the current data. We may want to understand some particular phenomenon, maybe we want to make predictions for future (yet unobserved) data points, or we need to choose among several competing explanations for the same set of observations. In summary, inferential statistics allow us to draw meaningful insights from a limited set of data and make informed decisions based on the results of our analysis.

A Match Made in Heaven

The focus of this book is on how to perform Bayesian inferential statistics, but we will also use ideas from EDA to summarize, interpret, check, and communicate the results of Bayesian inference.
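As a small illustration of the numerical summaries that EDA relies on, the following sketch computes the mean, mode, standard deviation, and interquartile range with Python's standard `statistics` module. The data values are made up for the example and carry no meaning.

```python
import statistics

# Made-up measurements, used only to illustrate the summaries.
data = [2.1, 3.4, 3.4, 4.0, 4.8, 5.5, 6.3, 7.9]

mean = statistics.mean(data)
mode = statistics.mode(data)
sd = statistics.stdev(data)  # sample standard deviation (n - 1 denominator)

# quantiles(n=4) returns the three cut points that split the data into quartiles.
q1, median, q3 = statistics.quantiles(data, n=4)
iqr = q3 - q1  # interquartile range

print(f"mean={mean:.2f} mode={mode} sd={sd:.2f} median={median:.2f} IQR={iqr:.2f}")
```

In practice, these numbers would usually be accompanied by a histogram or scatter plot of the same data.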

Most introductory statistical courses, at least for non-statisticians, are taught as a collection of recipes that go like this: go to the statistical pantry, pick one tin can and open it, add data to taste, and stir until you obtain a consistent p-value, preferably under 0.05. The main goal of these courses is to teach you how to pick the proper can. I never liked this approach, mainly because the most common result is a bunch of confused people unable to grasp, even at the conceptual level, the unity of the different learned methods. We will take a different approach: we will learn some recipes, but they will be homemade rather than canned food; we will learn how to mix fresh ingredients that will suit different statistical occasions and, more importantly, that will let you apply concepts far beyond the examples in this book.

Taking this approach is possible for two reasons:

- Ontological: Statistics is a form of modeling unified under the mathematical framework of probability theory. Using a probabilistic approach provides a unified view of what may seem like very disparate methods; statistical methods and machine learning methods look much more similar under the probabilistic lens.

- Technical: Modern software, such as PyMC, allows practitioners, just like you and me, to define and solve models in a relatively easy way. Many of these models were unsolvable just a few years ago or required a high level of mathematical and technical sophistication.

1.2 Working with data

Data is an essential ingredient in statistics and data science. Data comes from several sources, such as experiments, computer simulations, surveys, and field observations. If we are the ones in charge of generating or gathering the data, it is always a good idea to first think carefully about the questions we want to answer and which methods we will use, and only then proceed to get the data. There is a whole branch of statistics dealing with data collection, known as experimental design. In the era of the data deluge, we can sometimes forget that gathering data is not always cheap. For example, while it is true that the Large Hadron Collider (LHC) produces hundreds of terabytes a day, its construction took years of manual and intellectual labor.

As a general rule, we can think of the process of generating the data as stochastic, because there is ontological, technical, and/or epistemic uncertainty, that is, the system is intrinsically stochastic, there are technical issues adding noise or restricting us from measuring with arbitrary precision, and/or there are conceptual limitations veiling details from us. For all these reasons, we always need to interpret data in the context of models, including mental and formal ones. Data does not speak but through models.
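To make the idea of a stochastic data-generating process a bit more concrete, here is a minimal sketch that simulates repeated measurements of a fixed quantity. Everything in it is hypothetical: the "true" value, the Gaussian noise standing in for technical uncertainty, and the rounding standing in for limited instrument precision.

```python
import random

random.seed(123)  # fixed seed so the sketch is reproducible

TRUE_VALUE = 20.0  # hypothetical "true" quantity, e.g. a temperature
NOISE_SD = 0.5     # hypothetical measurement noise (technical uncertainty)


def measure(true_value):
    """Return one noisy reading, rounded to one decimal place."""
    noisy = random.gauss(true_value, NOISE_SD)
    return round(noisy, 1)  # the instrument cannot resolve finer detail


readings = [measure(TRUE_VALUE) for _ in range(5)]
print(readings)
```

Even though the underlying quantity never changes, the readings scatter around it, which is why observed data is interpreted through a model rather than taken at face value.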

In this book, we will assume that we already have collected the data. Our data will also be clean and tidy, something that’s rarely true in the real world. We will make these assumptions to focus on the subject of this book. I just want to emphasize, especially for newcomers to data analysis, that even when not covered in this book, there are important skills that you should learn and practice to successfully work with data.

A very useful skill when analyzing data is knowing how to write code in a programming language, such as Python. Manipulating data is usually necessary given that we live in a messy world with even messier data, and coding helps to get things done. Even if you are lucky and your data is very clean and tidy, coding will still be very useful since modern Bayesian statistics is done mostly through programming languages such as Python or R. If you want to learn how to use Python for cleaning and manipulating data, you can find a good introduction in Python for Data Analysis by McKinney [2022].

1.3 Bayesian modeling

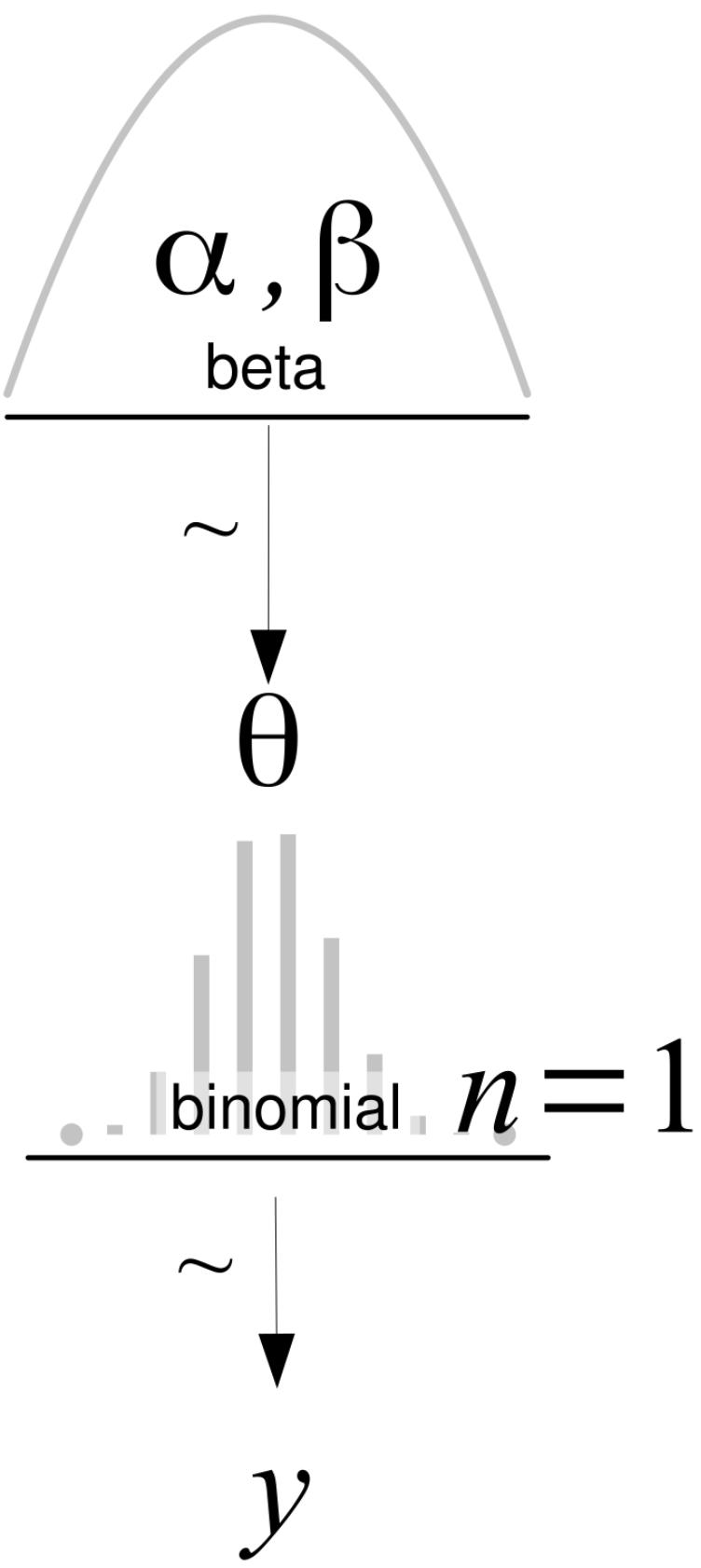

Models are simplified descriptions of a given system or process that, for some reason, we are interested in. Those descriptions are deliberately designed to capture only the most relevant aspects of the system and not to explain every minor detail. This is one reason a more complex model is not always a better one. There are many different kinds of models; in this book, we will restrict ourselves to Bayesian models. We can summarize the Bayesian modeling process using three steps:

- Given some data and some assumptions on how this data could have been generated, we design a model by combining building blocks known as probability distributions. Most of the time these models are crude approximations, but most of the time that’s all we need.

- We use Bayes’ theorem to add data to our models and derive the logical consequences of combining the data and our assumptions. We say we are conditioning the model on our data.

- We evaluate the model, and its predictions, under different criteria, including the data, our expertise on the subject, and sometimes by comparing it to other models.

In general, we will find ourselves performing these three steps in an iterative non-linear fashion. We will retrace our steps at any given point: maybe we made a silly coding mistake, or we found a way to change the model and improve it, or we realized that we need to add more data or collect a different kind of data.

Bayesian models are also known as probabilistic models because they are built using probabilities. Why probabilities? Because probabilities are a very useful tool to model uncertainty; we even have good arguments to state they are the correct mathematical concept. So let’s take a walk through the garden of forking paths [Borges, 1944].

1.4 A probability primer for Bayesian practitioners

In this section, we are going to discuss a few general and important concepts that are key for better understanding Bayesian methods. Additional probability-related concepts will be introduced or elaborated on in future chapters, as we need them. For a detailed study of probability theory, however, I highly recommend the book Introduction to Probability by Blitzstein [2019]. Those already familiar with the basic elements of probability theory can skip this section or skim it.

1.4.1 Sample space and events

Let’s say we are surveying to see how people feel about the weather in their area. We asked three individuals whether they enjoy sunny weather, with possible responses being “yes” or “no.” The sample space of all possible outcomes can be denoted by S and consists of eight possible combinations:

S = {(yes, yes, yes), (yes, yes, no), (yes, no, yes), (no, yes, yes), (yes, no, no), (no, yes, no), (no, no, yes), (no, no, no)}

Here, each element of the sample space represents the responses of the three individuals in the order they were asked. For example, (yes, no, yes) means the first and third people answered “yes” while the second person answered “no.”

We can define events as subsets of the sample space. For example, event A is when all three individuals answered “yes”:

\[A = \{ (\text{yes}, \text{yes}, \text{yes}) \}\]

Similarly, we can define event B as when at least one person answered “no,” and then we will have:

B = {(yes, yes, no), (yes, no, yes), (no, yes, yes), (yes, no, no), (no, yes, no), (no, no, yes), (no, no, no)}

We can use probabilities as a measure of how likely these events are. Assuming all outcomes in S are equally likely, the probability of event A, which is the event that all three individuals answered “yes,” is:

\[P(A) = \frac{\text{number of outcomes in } A}{\text{total number of outcomes in } S}\]

In this case, there is only one outcome in A, and there are eight outcomes in S. Therefore, the probability of A is:

\[P(A) = \frac{1}{8} = 0.125\]

Similarly, we can calculate the probability of event B, which is the event that at least one person answered “no.” Since there are seven outcomes in B and eight outcomes in S, the probability of B is:

\[P(B) = \frac{7}{8} = 0.875\]
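The counting behind these two results can be checked with a few lines of Python. This is only a minimal sketch under the naive definition (all outcomes equally likely); the helper name `naive_probability` is our own choice and not part of any library.

```python
from fractions import Fraction
from itertools import product

# Sample space: every ordered combination of three yes/no answers
S = set(product(["yes", "no"], repeat=3))

# Event A: all three individuals answered "yes"
A = {outcome for outcome in S if all(answer == "yes" for answer in outcome)}

# Event B: at least one individual answered "no"
B = {outcome for outcome in S if "no" in outcome}


def naive_probability(event, sample_space):
    """Naive definition: number of outcomes in the event over total outcomes."""
    return Fraction(len(event), len(sample_space))


p_A = naive_probability(A, S)
p_B = naive_probability(B, S)
print(len(S), p_A, p_B)  # 8 1/8 7/8
```

Using `Fraction` keeps the results exact, so we can see directly that the two probabilities add up to 1.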

Considering all outcomes equally likely is just a particular case that makes calculating probabilities easier. This is called the naive definition of probability, since it is restrictive and relies on strong assumptions. However, it is still useful if we are cautious when using it. For instance, it is not true that all yes-no questions have a 50-50 chance. As another example, what is the probability of seeing a purple horse? The right answer can vary a lot depending on whether we’re talking about the natural color of a real horse, a horse from a cartoon, a horse dressed in a parade, etc. Anyway, no matter if the events are equally likely or not, the probability of the entire sample space is always equal to 1. We can see that this is true by computing:

\[P(S) = \frac{\text{number of outcomes in } S}{\text{total number of outcomes in } S} = 1\]

1 is the highest value a probability can take. Saying that P(S) = 1 is saying that S is not only very likely, it is certain. If everything that can happen is defined by S, then S will happen.

If an event is impossible, then its probability is 0. Let’s define the event C as the event of three persons saying “banana”:

C = {(banana, banana, banana)}

As C is not part of S, by definition, it cannot happen. Think of this as the questionnaire from our survey only having two boxes, yes and no. By design, our survey is restricting all other possible options.

We can take advantage of the fact that Python includes sets and define a Python function to compute probabilities following their naive definition:

Code 1.1

    def P(S, A):
        # Naive definition: only meaningful when A is a subset of the sample space S
        if set(A).issubset(set(S)):
            return len(A) / len(S)
        else:
            return 0

I leave to the reader the joy of playing with this function.

One useful way to conceptualize probabilities is as conserved quantities distributed throughout the sample space. This means that if the probability of one event increases, the probability of some other event or events must decrease so that the total probability remains equal to 1. This can be illustrated with a simple example.

Suppose we ask one person whether it will rain tomorrow, with possible responses of “yes” and “no.” The sample space for possible responses is given by S = {yes, no}. The event that it will rain tomorrow is represented by A = {yes}. If P(A) is 0.5, then the probability of the complement of event A, denoted by \(P(A^c)\), must also be 0.5. If for some reason P(A) increases to 0.8, then \(P(A^c)\) must decrease to 0.2. This property holds for disjoint events, which are events that cannot occur simultaneously. For instance, it cannot rain and not rain at the same time tomorrow. You may object that it can rain during the morning and not rain during the afternoon. That is true, but that’s a different sample space!

So far, we have avoided directly defining probabilities, and instead, we have just shown some of their properties and ways to compute them. A general definition of probability that works for non-equally likely events is as follows. Given a sample space S, and the event A, which is a subset of S, a probability is a function P, which takes A as input and returns a real number between 0 and 1, as output. The function P has some restrictions, defined by the following 3 axioms. Keep in mind that an axiom is a statement that is taken to be true and that we use as the starting point in our reasoning:

1. The probability of an event is a non-negative real number, P(A) ≥ 0

2. P(S) = 1

3. If A1, A2, … are disjoint events, meaning they cannot occur simultaneously, then P(A1 ∪ A2 ∪ …) = P(A1) + P(A2) + …
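To make the axioms concrete, here is a small sketch that checks them for a probability function that is not based on equally likely outcomes. The probabilities 0.8 and 0.2 are illustrative values taken from the rain example above, and the function `P` is a hypothetical helper, not a library call.

```python
import math

# Illustrative, non-uniform probabilities for the "will it rain?" sample space
prob = {"yes": 0.8, "no": 0.2}


def P(event):
    """Probability of an event, given as a set of outcomes of the sample space."""
    return sum(prob[outcome] for outcome in event)


S = set(prob)
A = {"yes"}
A_complement = S - A

# Axiom 1: probabilities are non-negative
assert all(P({outcome}) >= 0 for outcome in S)
# Axiom 2: the whole sample space has probability 1
assert math.isclose(P(S), 1.0)
# Axiom 3: for disjoint events, the probability of the union is the sum
assert math.isclose(P(A | A_complement), P(A) + P(A_complement))
```

Notice that the complement rule used in the rain example follows from axioms 2 and 3: since A and its complement are disjoint and together make up S, their probabilities must add up to 1.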

If this were a book on probability theory, we would likely dedicate a few pages to demonstrating the consequences of these axioms and provide exercises for manipulating probabilities. That would help us to become proficient in manipulating probabilities. However, our main focus is not on those topics. My motivation to present these axioms is just to show that probabilities are well-defined mathematical concepts with rules that govern their operations. They are a particular type of function, and there is no mystery surrounding them.

1.4.2 Random variables

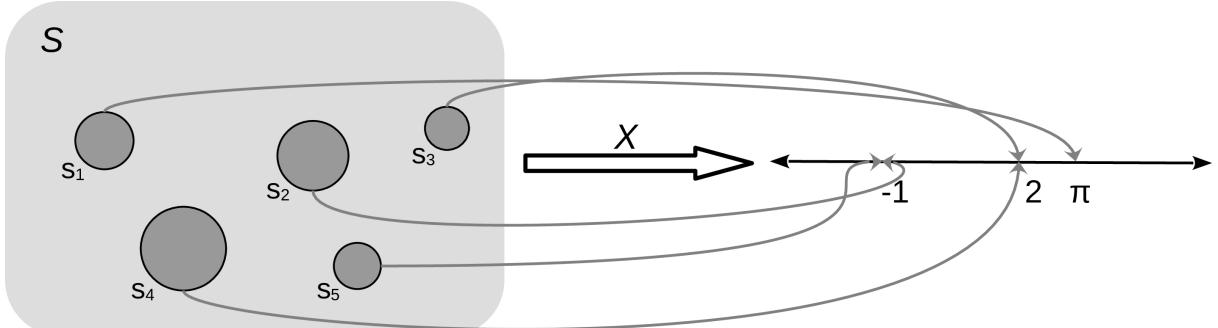

A random variable is a function that maps the sample space into the real numbers ℝ (see Figure 1.1). Let’s assume the events of interest are the faces of a die. The mapping is very simple: we associate ⚀ with the number 1, ⚁ with 2, etc. Another simple example is the answer to the question, will it rain tomorrow? We can map “yes” to 1 and “no” to 0. It is common, but not always the case, to use a capital letter for random variables like X and a lowercase letter for their outcomes x. For example, if X represents a single roll of a die, then x represents some specific integer in {1,2,3,4,5,6}. Thus, we can write P(X = 3) to indicate the probability of getting the value 3 when rolling a die. We can also leave x unspecified; for instance, we can write P(X = x) to indicate the probability of getting some value x, or P(X ≤ x) to indicate the probability of getting a value less than or equal to x.

Being able to map symbols like ⚀ or strings like “yes” to numbers makes analysis simpler as we already know how to do math with numbers. Random variables are also useful because we can operate with them without directly thinking in terms of the sample space. This feature becomes more and more relevant as the sample space becomes more complex. For example, when simulating molecular systems, we need to specify the position and velocity of each atom; for complex molecules like proteins this means that we will need to track thousands, millions, or even larger numbers of values. Instead, we can use random variables to summarize certain properties of the system, such as the total energy or the relative angles between certain atoms of the system.

If you are still confused, that’s fine. The concept of a random variable may sound too abstract at the beginning, but we will see plenty of examples throughout the book that will help you cement these ideas. Before moving on, let me try one analogy that I hope you find useful. Random variables are useful in a similar way to how Python functions are useful. We often encapsulate code within functions, so we can store, reuse, and hide complex manipulations of data into a single call. Even more, once we have a few functions, we can sometimes combine them in many ways, like adding the output of two functions or using the output of one function as the input of the other. We can do all this without functions, but abstracting away the inner workings not only makes the code cleaner, it also helps with understanding and fostering new ideas. Random variables play a similar role in statistics.

The mapping between the sample space and ℝ is deterministic. There is no randomness involved. So why do we call it a random variable? Because we can ask the variable for values, and every time we ask, we will get a different number. The randomness comes from the probability associated with the events. In Figure 1.1, we have represented P as the size of the circles.
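The following sketch separates the two ingredients: a deterministic mapping (the random variable) and the probabilities of the underlying outcomes. The probabilities 0.3 and 0.7 are assumed values chosen only for illustration.

```python
import random

# Deterministic mapping from outcomes to numbers: this is the random variable X
X = {"yes": 1, "no": 0}

# Assumed probabilities of the underlying outcomes (illustrative values)
outcomes = ["yes", "no"]
probabilities = [0.3, 0.7]

rng = random.Random(123)  # seeded so the run is reproducible
# The randomness enters when we pick an outcome, not when we map it to a number
draws = [X[rng.choices(outcomes, weights=probabilities)[0]] for _ in range(10_000)]

# The fraction of 1s should be close to P(X = 1) = 0.3
frequency_of_ones = sum(draws) / len(draws)
print(frequency_of_ones)
```

Each time we ask for a value we may get a 0 or a 1, but the dictionary `X` itself never changes.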

The two most common types of random variables are discrete and continuous ones. Without going into a proper definition, we are going to say that discrete variables take only discrete values and we usually use integers to represent them, like 1, 5, 42. Continuous variables take real values, so we use floats to work with them, like 3.1415, 1.01, 23.4214, and so on. Which one we use is problem-dependent. If we ask people about their favorite color, we will get answers like “red,” “blue,” and “green.” This is an example of a discrete random variable. The answers are categories – there are no intermediate values between “red” and “green.” But if we are studying the properties of light absorption, then discrete values like “red” and “green” may not be adequate and instead working with wavelength could be more appropriate. In that case, we will expect to get values like 650 nm and 510 nm and any number in between, including 579.1.

1.4.3 Discrete random variables and their distributions

Instead of calculating the probability that all three individuals answered “yes,” or the probability of getting a 3 when rolling a die, we may be more interested in finding out the list of probabilities for all possible answers or all possible numbers from a die. Once this list is computed, we can inspect it visually or use it to compute other quantities like the probability of getting at least one “no,” the probability of getting an odd number, or the probability of getting a number equal to or larger than 5. The formal name of this list is probability distribution.

We can get the empirical probability distribution of a die, by rolling it a few times and tabulating how many times we got each number. To turn each value into a probability and the entire list into a valid probability distribution, we need to normalize the counts. We can do this by dividing the value we got for each number by the number of times we roll the die.
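As a rough illustration of this recipe, we can replace the physical die with a simulated one. This is only a sketch; the number of rolls and the seed are arbitrary choices.

```python
import random
from collections import Counter

rng = random.Random(42)  # seeded so the run is reproducible
n_rolls = 6_000
rolls = [rng.randint(1, 6) for _ in range(n_rolls)]

# Tabulate how many times we got each number
counts = Counter(rolls)

# Normalize the counts so that they add up to 1
empirical = {face: counts[face] / n_rolls for face in range(1, 7)}

for face, probability in empirical.items():
    print(face, round(probability, 3))
```

With a fair simulated die, each value should be close to 1/6 ≈ 0.167, and the agreement generally improves as we increase `n_rolls`.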

Empirical distributions are very useful, and we are going to extensively use them. But instead of rolling dice by hand, we are going to use advanced computational methods to do the hard work for us; this will not only save us time and boredom but it will allow us to get samples from really complicated distributions effortlessly. But we are getting ahead of ourselves. Our priority is to concentrate on theoretical distributions, which are central in statistics because, among other reasons, they allow the construction of probabilistic models.

As we saw, there is nothing random or mysterious about random variables; they are just a type of mathematical function. The same goes for theoretical probability distributions. I like to compare probability distributions with circles. Because we are all familiar with circles even before we get into school, we are not afraid of them and they don’t look mysterious to us. We can define a circle as the set of points on a plane that are equidistant from another point called the center. We can go further and provide a mathematical expression for this definition. If we assume the location of the center is irrelevant, then the circle of radius r can simply be described as the set of all points (x,y) such that:

\[x^2 + y^2 = r^2\]

From this expression, we can see that given the parameter r, the circle is completely defined. This is all we need to plot it and all we need to compute properties such as the perimeter, which is 2πr.

Now notice that all circles look very similar to each other and that any two circles with the same value of r are essentially the same objects. Thus we can think of the family of circles, where each member is set apart from the rest precisely by the value of r.

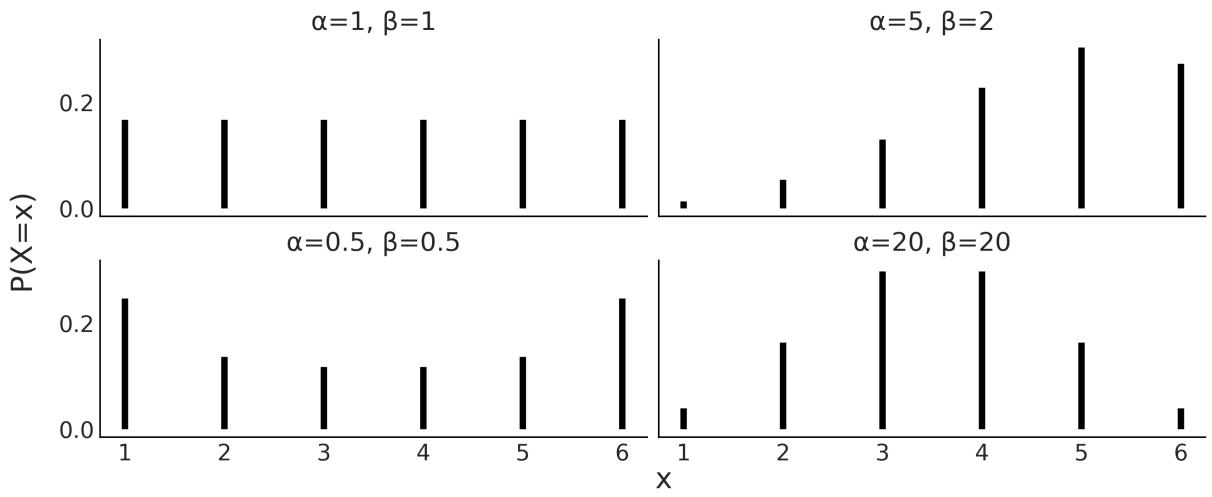

So far, so good, but why are we talking about circles? Because all this can be directly applied to probability distributions. Both circles and probability distributions have mathematical expressions that define them, and these expressions have parameters that we can change to define all members of a family of probability distributions. Figure 1.2 shows four members of one probability distribution known as BetaBinomial. In Figure 1.2, the height of the bars represents the probability of each x value. The values of x below 1 or above 6 have a probability of 0 as they are out of the support of the distribution.

Figure 1.2: Four members of the BetaBinomial distribution with parameters α and β

This is the mathematical expression for the BetaBinomial distribution:

\[\text{pmf}(x) = \binom{n}{x} \frac{B(x+\alpha, n-x+\beta)}{B(\alpha, \beta)}\]

pmf stands for probability mass function. For discrete random variables, the pmf is the function that returns probabilities. In mathematical notation, if we have a random variable X, then pmf(x) = P(X = x).

Understanding or remembering the pmf of the BetaBinomial has zero importance for us. I’m just showing it here so you can see that this is just another function; you put in one number and you get out another number. Nothing weird, at least not in principle. I must concede that to fully understand the details of the BetaBinomial distribution, we need to know what \(\binom{n}{x}\) is, known as the binomial coefficient, and what B is, the Beta function. But that’s not fundamentally different from showing \(x^2 + y^2 = r^2\).
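To stress that the pmf is just a function, here is a minimal sketch that evaluates it using only Python’s standard library. The function names are our own, and the parameter values alpha=10, beta=10, n=5 are chosen just for illustration; the Beta function is computed through the identity B(a, b) = Γ(a)Γ(b)/Γ(a + b).

```python
import math


def beta_function(a, b):
    """Beta function B(a, b) = Gamma(a) * Gamma(b) / Gamma(a + b), via log-gammas."""
    return math.exp(math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b))


def betabinomial_pmf(x, alpha, beta, n):
    """pmf of the BetaBinomial; returns 0 outside the support {0, 1, ..., n}."""
    if not (isinstance(x, int) and 0 <= x <= n):
        return 0.0
    binomial_coefficient = math.comb(n, x)
    return binomial_coefficient * beta_function(x + alpha, n - x + beta) / beta_function(alpha, beta)


pmf = [betabinomial_pmf(x, alpha=10, beta=10, n=5) for x in range(6)]
print([round(p, 3) for p in pmf])  # [0.047, 0.168, 0.285, 0.285, 0.168, 0.047]
print(round(sum(pmf), 6))          # 1.0
```

We put in a value of x and get out a probability, and the probabilities over the whole support add up to 1.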

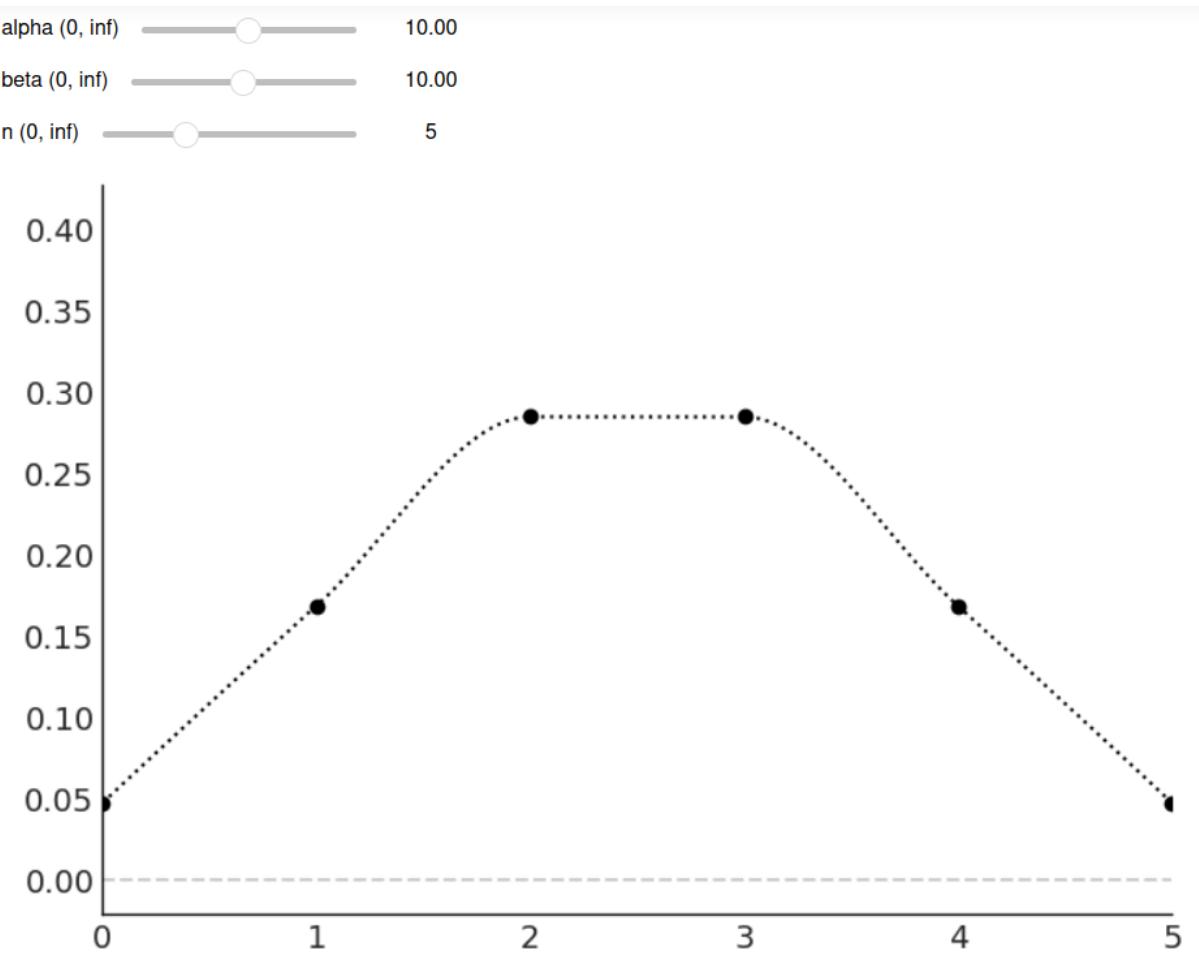

Mathematical expressions can be super useful, as they are concise and we can use them to derive properties from them. But sometimes that can be too much work, even if we are good at math. Visualization can be a good alternative (or complement) to help us understand probability distributions. I cannot fully show this on paper, but if you run the following, you will get an interactive plot that will update every time you move the sliders for the parameters alpha, beta, and n:

Code 1.2

    import preliz as pz
    pz.BetaBinomial(alpha=10, beta=10, n=5).plot_interactive()

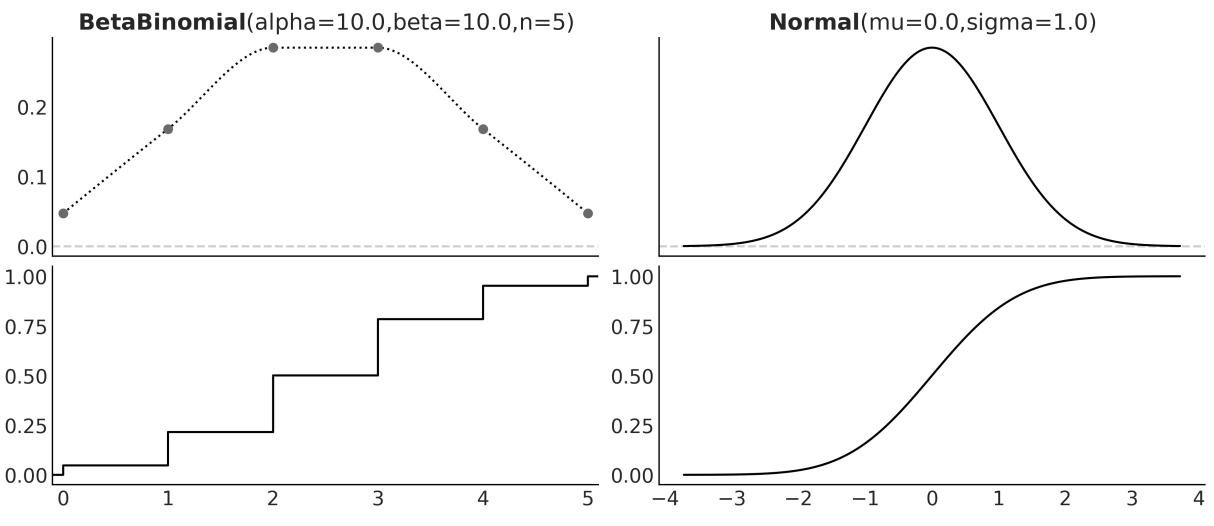

Figure 1.3 shows a static version of this interactive plot. The black dots represent the probabilities for each value of the random variable, while the dotted black line is just a visual aid.

Figure 1.3: The output of pz.BetaBinomial(alpha=10, beta=10, n=5).plot_interactive()

On the x-axis, we have the support of the BetaBinomial distribution, i.e., the values it can take, x ∈ {0,1,2,3,4,5}. On the y-axis, we have the probabilities associated with each of those values. The full list is shown in Table 1.1.

| x value | probability |
|---|---|
| 0 | 0.047 |
| 1 | 0.168 |
| 2 | 0.285 |
| 3 | 0.285 |
| 4 | 0.168 |
| 5 | 0.047 |

Table 1.1: Probabilities for pz.BetaBinomial(alpha=10, beta=10, n=5)

Notice that for a BetaBinomial(alpha=10, beta=10, n=5) distribution, the probability of values not in {0,1,2,3,4,5}, including values such as −1, 0.5, π, 42, is 0.

We previously mentioned that we can ask a random variable for values and every time we ask, we will get a different number. We can simulate this with PreliZ [Icazatti et al., 2023], a Python library for prior elicitation. Take the following code snippet for instance:

Code 1.3

    pz.BetaBinomial(alpha=10, beta=10, n=5).rvs()

This will give us an integer between 0 and 5. Which one? We don’t know! But let’s run the following code:

Code 1.4

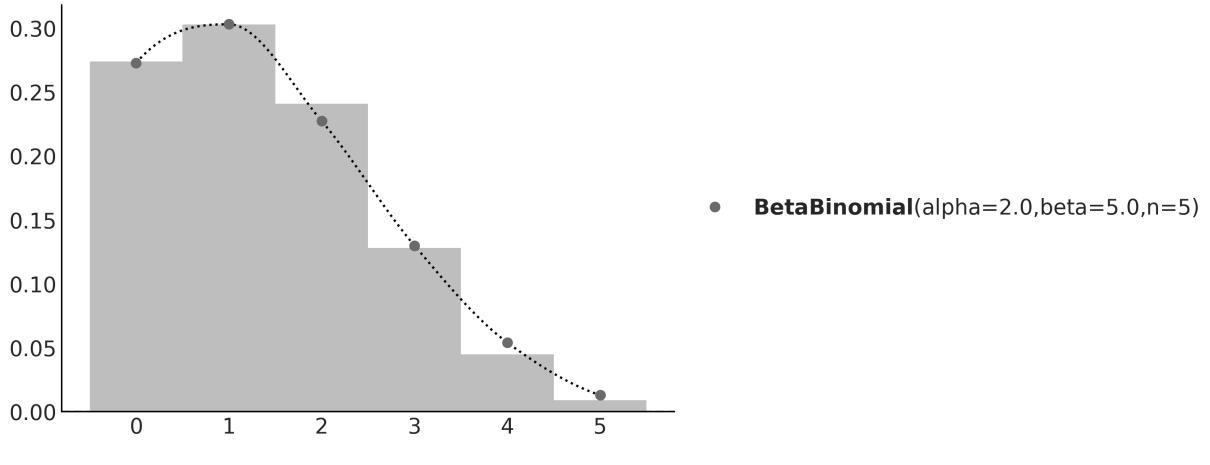

plt.hist(pz.BetaBinomial(alpha=2, beta=5, n=5).rvs(1000))

pz.BetaBinomial(alpha=2, beta=5, n=5).plot_pdf();

1

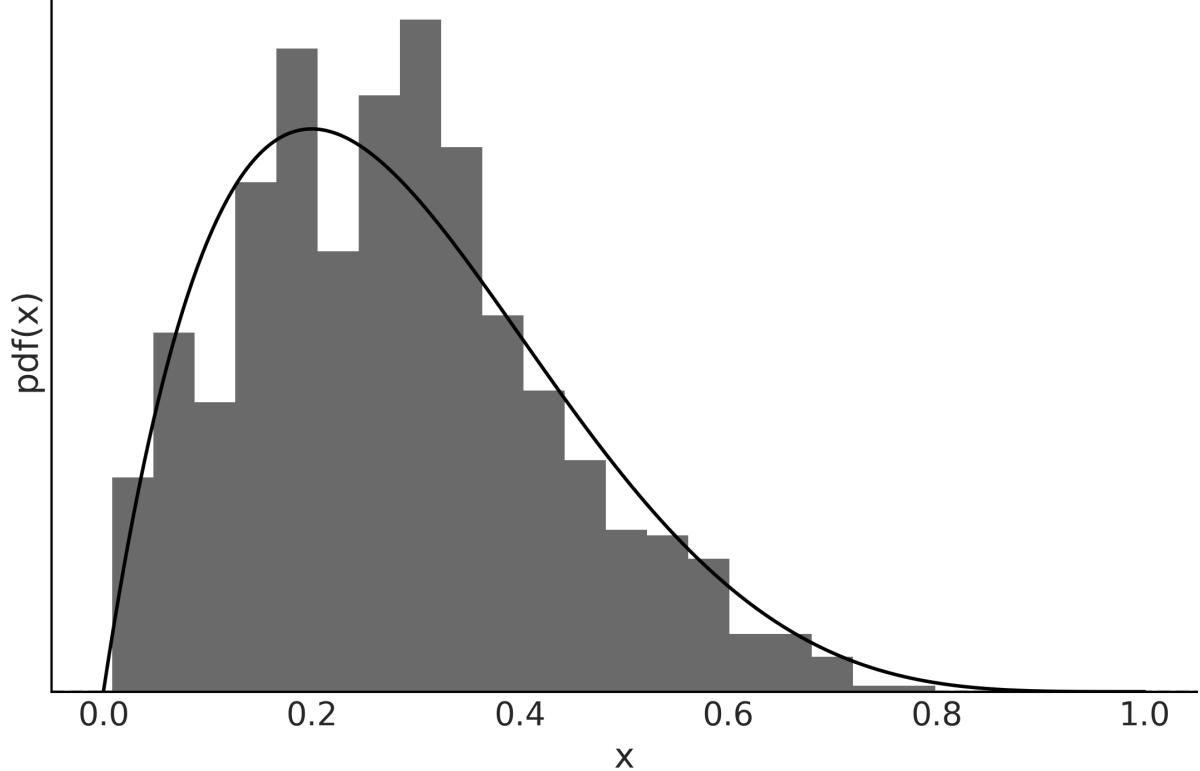

2We will get something similar to Figure 1.4. Even when we cannot predict the next value from a random variable, we can predict the probability of getting any particular value and by the same token, if we get many values, we can predict their overall distribution.

Figure 1.4: The gray dots represent the pmf of the BetaBinomial distribution. In light gray, a histogram of 1,000 draws from that distribution

In this book, we will sometimes know the parameters of a given distribution and we will want to get random samples from it. Other times, we are going to be in the opposite scenario: we will have a set of samples and we will want to estimate the parameters of a distribution. Playing back and forth between these two scenarios will become second nature as we move forward through the pages.
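The first scenario, going from known parameters to samples, can be sketched without any special library. The sampler below is a hand-rolled illustration and not how PreliZ implements rvs; it relies on the fact that a BetaBinomial draw can be generated by first drawing a success probability from a Beta distribution and then counting successes in n trials. The function name and the seed are our own choices.

```python
import random


def betabinomial_draw(alpha, beta, n, rng):
    """One BetaBinomial draw: sample p from a Beta, then count successes in n trials."""
    p = rng.betavariate(alpha, beta)
    return sum(rng.random() < p for _ in range(n))


rng = random.Random(2024)  # seeded so the run is reproducible
alpha, beta, n = 2, 5, 5
samples = [betabinomial_draw(alpha, beta, n, rng) for _ in range(10_000)]

# A property of the samples should approach the same property of the distribution
sample_mean = sum(samples) / len(samples)
theoretical_mean = n * alpha / (alpha + beta)  # mean of a BetaBinomial
print(round(sample_mean, 2), round(theoretical_mean, 2))
```

The second scenario, going from samples back to parameters, is essentially what we will do for the rest of the book using Bayes’ theorem.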

1.4.4 Continuous random variables and their distributions

Probably the most widely known continuous probability distribution is the Normal distribution, also known as the Gaussian distribution. Its probability density function is:

\[\text{pdf}(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left\{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2\right\}\]

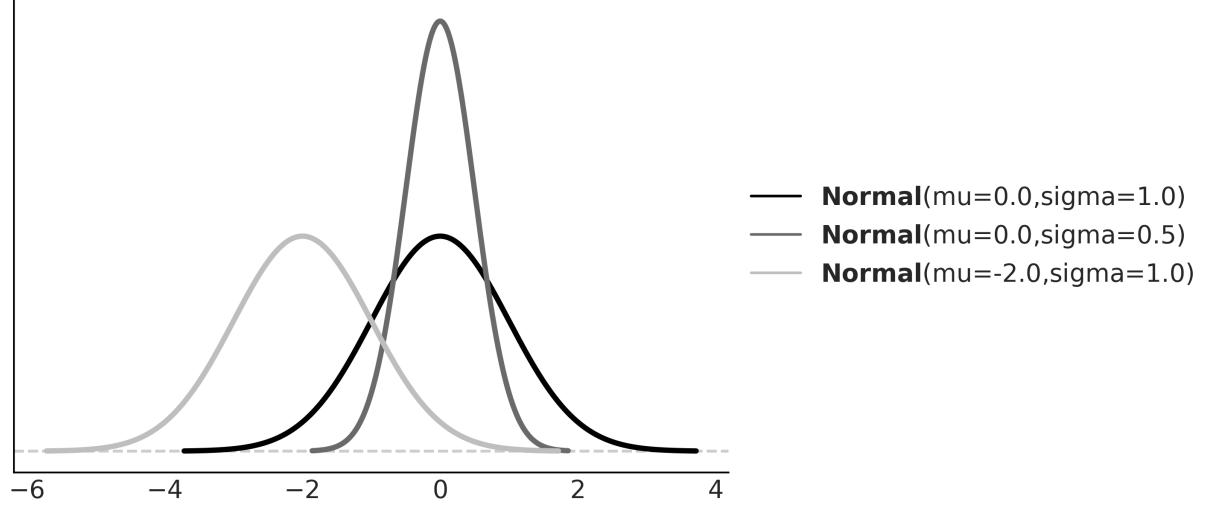

Again, we only show this expression to remove the mystery veil. No need to pay too much attention to its details, other than to the fact that this distribution has two parameters μ, which controls the location of the peak of the curve, and σ, which controls the spread of the curve. Figure 1.5 shows 3 examples from the Gaussian family. If you want to learn more about this distribution, I recommend you watch this video: https://www.youtube.com/watch?v=cy8r7WSuT1I.

Figure 1.5: Three members of the Gaussian family

If you have been paying attention, you may have noticed that we said probability density function (pdf) instead of probability mass function (pmf). This was no typo – they are actually two different objects. Let’s take one step back and think about this; the output of a discrete probability distribution is a probability. The height of the bars in Figure 1.2 or the height of the dots in Figure 1.3 are probabilities. Each bar or dot will never be higher than 1 and if you sum all the bars or dots, you will always get 1. Let’s do the same but with the curve in Figure 1.5. The first thing to notice is that we don’t have bars or dots; we have a continuous, smooth curve. So maybe we can think that the curve is made up of super thin bars, so thin that we assign one bar for every real value in the support of the distributions, we measure the height of each bar, and we perform an infinite sum. This is a sensible thing to do, right?

Well yes, but it is not immediately obvious what we are going to get from this. Will this sum give us exactly 1? Or are we going to get a large number instead? Is the sum finite? Or does the result depend on the parameters of the distribution?

A proper answer to these questions requires measure theory, and this is a very informal introduction to probability, so we are not going into that rabbit hole. But the answer essentially is that for a continuous random variable, we can only assign a probability of 0 to every individual value it may take; instead, we can assign densities to them and then we can calculate probabilities for a range of values. Thus, for a Gaussian, the probability of getting exactly the number -2, i.e. the number -2 followed by an infinite number of zeros after the decimal point, is 0. But the probability of getting a number between -2 and 0 is some number larger than 0 and smaller than 1. To find out the exact answer, we need to compute the following:

\[P(a < X < b) = \int_{a}^{b} \text{pdf}(x) dx\]

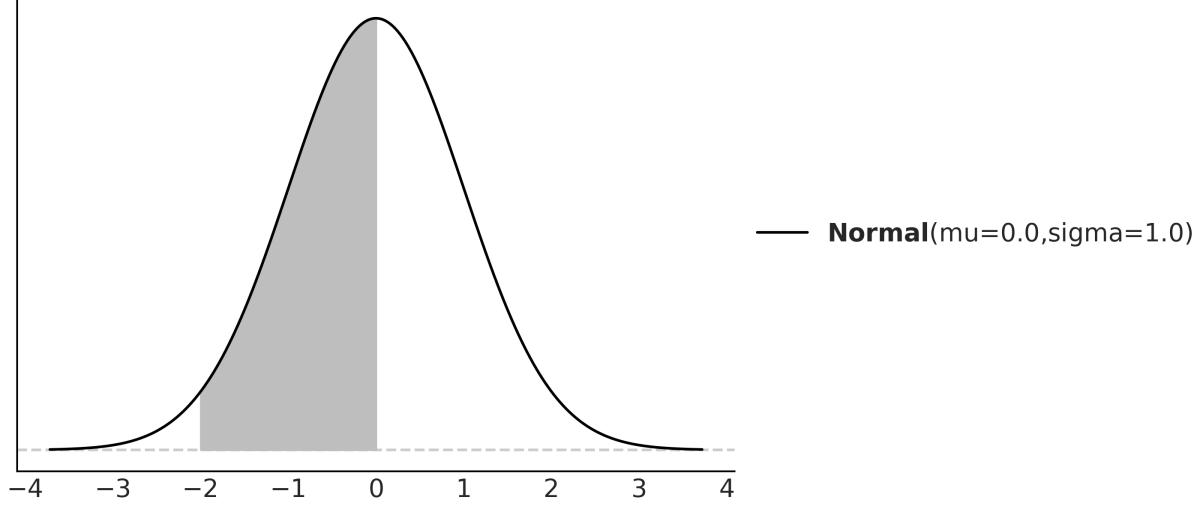

And to compute that, we need to replace the symbols with concrete quantities. If we replace the pdf by Normal(0,1), and set a = −2 and b = 0, we will get that P(−2 < X < 0) ≈ 0.477, which is the shaded area in Figure 1.6.

Figure 1.6: The black line represents the pdf of a Gaussian with parameters mu=0 and sigma=1, the gray area is the probability of a value being larger than -2 and smaller than 0

You may remember that we can approximate an integral by summing areas of rectangles and the approximation becomes more and more accurate as we reduce the length of the base of the rectangles (see the Wikipedia entry for Riemann integral). Based on this idea and using PreliZ, we can estimate P(−2 < X < 0) as:

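Code 1.5

    import numpy as np

    dist = pz.Normal(0, 1)
    a = -2
    b = 0
    num = 10
    x_s = np.linspace(a, b, num)  # num evenly spaced points between a and b
    base = (b - a) / num          # width of each rectangle
    np.sum(dist.pdf(x_s) * base)  # sum of the rectangle areas

If we increase the value of num, we will get a better approximation.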
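To see how the approximation improves, the sketch below repeats the computation for several values of num using only the standard library. Note that it uses a midpoint rule, a slightly different variant from the one in Code 1.5, and compares the result against the value obtained from the error function; the helper names are our own.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Probability density function of a Gaussian."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


def normal_cdf(x, mu=0.0, sigma=1.0):
    """P(X <= x) for a Gaussian, written in terms of the error function."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))


def midpoint_riemann(a, b, num):
    """Sum of num rectangles whose heights are the pdf at each rectangle's midpoint."""
    base = (b - a) / num
    midpoints = (a + (i + 0.5) * base for i in range(num))
    return sum(normal_pdf(x) * base for x in midpoints)


a, b = -2, 0
exact = normal_cdf(b) - normal_cdf(a)  # approximately 0.477
errors = {num: abs(midpoint_riemann(a, b, num) - exact) for num in (10, 100, 1000)}

for num, error in errors.items():
    print(num, error)
```

The error shrinks as the rectangles get thinner, which is the behavior we expect from a Riemann sum.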

1.4.5 Cumulative distribution function

We have seen the pmf and the pdf, but these are not the only ways to characterize distributions. An alternative is the cumulative distribution function (cdf). The cdf of a random variable X is the function \(F_X\) given by \(F_X(x) = P(X \le x)\). In words, the cdf is the answer to the question: what is the probability of getting a number lower than or equal to x? In the first column of Figure 1.7, we can see the pmf and cdf of a BetaBinomial, and in the second column, the pdf and cdf of a Gaussian. Notice how the cdf jumps for the discrete variable but it is smooth for the continuous variable. The height of each jump represents a probability – just compare them with the height of the dots. We can use the plot of the cdf of a continuous variable as visual proof that probabilities are zero for any individual value of the continuous variable. Just notice how there are no jumps for continuous variables, which is equivalent to saying that the height of the jumps is exactly zero.

Figure 1.7: The pmf of the BetaBinomial distribution with its corresponding cdf and the pdf of the Normal distribution with its corresponding cdf

Just by looking at a cdf, it is easier to find the probability of getting a number smaller than, let’s say, 1. We just need to go to the value 1 on the x-axis, move up until we cross the black line, and then check the value on the y-axis. For instance, in Figure 1.7 and for the Normal distribution, we can see that the value lies between 0.75 and 1. Let’s say it is ≈ 0.85. This is way harder to do with the pdf because we would need to compare the entire area below 1 to the total area to get the answer. Humans are worse at judging areas than judging heights or lengths.
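Both readings of the cdf can be reproduced numerically. The sketch below assumes a standard Gaussian (mu=0, sigma=1) for the continuous case and, to keep things short, a fair die instead of a BetaBinomial for the discrete case.

```python
import math
from itertools import accumulate


def normal_cdf(x, mu=0.0, sigma=1.0):
    """P(X <= x) for a Gaussian, written in terms of the error function."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))


# Continuous case: reading the cdf at x = 1 gives the probability directly
p_below_1 = normal_cdf(1.0)
print(round(p_below_1, 3))  # 0.841

# Discrete case: the cdf is the running sum of the pmf
pmf = [1 / 6] * 6
cdf = list(accumulate(pmf))

# The height of each jump in the cdf recovers the pmf
jumps = [cdf[0]] + [cdf[i] - cdf[i - 1] for i in range(1, len(cdf))]
```

The value 0.841 agrees with the rough reading of ≈ 0.85 we made from the plot.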

1.4.6 Conditional probability

Given two events A and B with P(B) > 0, the probability of A given B, which we write as P(A|B) is defined as:

\[P(A \mid B) = \frac{P(A, B)}{P(B)}\]

P(A,B) is the probability that both the event A and event B occur. P(A|B) is known as conditional probability, and it is the probability that event A occurs, conditioned by the fact that we know (or assume, imagine, hypothesize, etc.) that B has occurred. For example, the probability that the pavement is wet is different from the probability that the pavement is wet if we know it’s raining.

A conditional probability can be larger than, smaller than, or equal to the unconditional probability. If knowing B does not provide us with information about A, then P(A|B) = P(A). This will be true only if A and B are independent of each other. On the contrary, if knowing B gives us useful information about A, then the conditional probability could be larger or smaller than the unconditional probability, depending on whether knowing B makes A more or less likely. Let’s see a simple example using a fair six-sided die. What is the probability of getting the number 3 if we roll the die? P(die = 3) = \(\frac{1}{6}\), since each of the six numbers has the same chance for a fair six-sided die. And what is the probability of getting the number 3 given that we have obtained an odd number? P(die = 3 | die = {1,3,5}) = \(\frac{1}{3}\), because if we know we have an odd number, the only possible numbers are {1,3,5} and each of them has the same chance. Finally, what is the probability of getting 3 if we have obtained an even number? This is P(die = 3 | die = {2,4,6}) = 0, because if we know the number is even, then the only possible ones are {2,4,6} and thus getting a 3 is not possible.

As we can see from these simple examples, by conditioning on observed data, we are changing the sample space. When asking about P(die = 3), we need to evaluate the sample space S = {1,2,3,4,5,6}, but when we condition on having got an even number, then the new sample space becomes T = {2,4,6}.
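The die example can be written as a short sketch in which conditioning amounts to swapping the sample space. It assumes equally likely outcomes, and the function `P` with its `given` argument is a hypothetical helper of our own.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}  # sample space of a fair six-sided die


def P(event, given=None):
    """Naive probability of an event; conditioning restricts the sample space."""
    space = S if given is None else given
    return Fraction(len(event & space), len(space))


three = {3}
odd = {1, 3, 5}
even = {2, 4, 6}

print(P(three))              # 1/6
print(P(three, given=odd))   # 1/3
print(P(three, given=even))  # 0

# The same result follows from the definition P(A | B) = P(A, B) / P(B)
assert P(three, given=odd) == P(three & odd) / P(odd)
```

The last line checks that restricting the sample space and applying the definition of conditional probability give the same answer.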

Conditional probabilities are at the heart of statistics, irrespective of whether your problem is rolling dice or building self-driving cars.

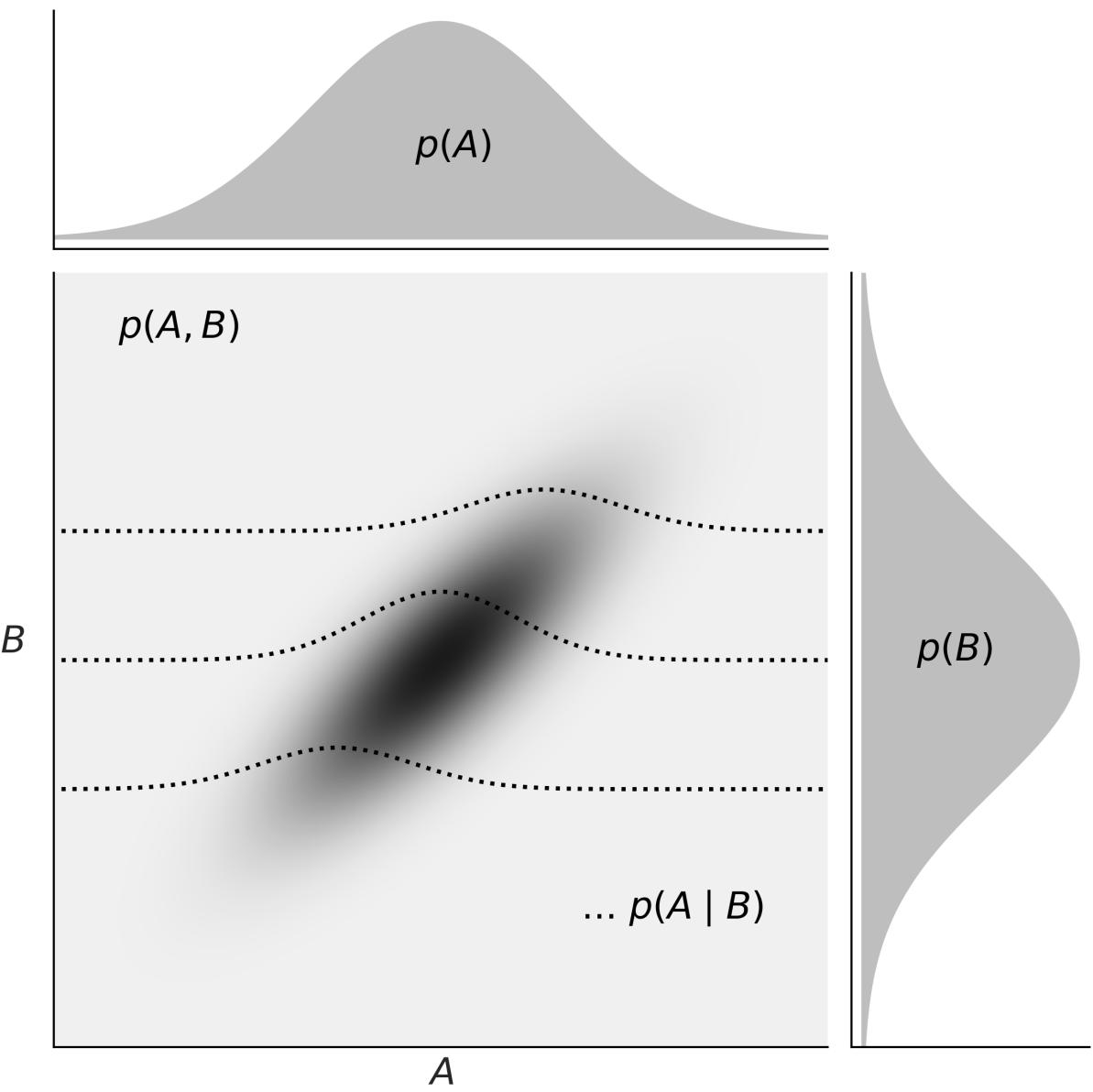

The central panel of Figure 1.8 represents p(A,B) using a grayscale with darker colors for higher probability densities. We see the joint distribution is elongated, indicating that the higher the value of A, the higher the one of B, and vice versa. Knowing the value of A tells us something about the values of B and the other way around. On the top and right margins of Figure 1.8 we have the marginal distributions p(A) and p(B) respectively. To compute the marginal of A, we take p(A,B) and we average over all values of B. Intuitively, this is like taking a 2D object, the joint distribution, and projecting it into one dimension. The marginal distribution of B is computed similarly. The dashed lines represent the conditional probability p(A|B) for 3 different values of B. We get them by slicing the joint p(A,B) at a given value of B. We can think of this as the distribution of A given that we have observed a particular value of B.
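The same operations are easy to follow on a small discrete example, where averaging over a variable becomes a plain sum. The joint table below is made up for illustration; rows correspond to three values of A and columns to three values of B.

```python
import math

# Hypothetical joint probabilities p(A, B): rows index A, columns index B
joint = [
    [0.20, 0.05, 0.00],
    [0.05, 0.30, 0.05],
    [0.00, 0.05, 0.30],
]

# Marginals: sum the joint over the variable we want to remove
marginal_A = [sum(row) for row in joint]
marginal_B = [sum(column) for column in zip(*joint)]


def conditional_A_given_B(j):
    """Slice the joint at the j-th value of B and renormalize the slice."""
    return [joint[i][j] / marginal_B[j] for i in range(len(joint))]


p_A_given_first_B = conditional_A_given_B(0)
print([round(p, 2) for p in p_A_given_first_B])  # [0.8, 0.2, 0.0]
```

Dividing each slice by the marginal of B is what turns the slice into a proper distribution that sums to 1.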

Figure 1.8: Representation of the relationship between the joint p(A,B), the marginals p(A) and p(B), and the conditional p(A|B) probabilities

1.4.7 Expected values

If X is a discrete random variable, we can compute its expected value as:

\[\mathbb{E}(X) = \sum_{x} xP(X=x)\]

This is just the mean or average value.

You are probably used to computing means or averages of samples or collections of numbers, either by hand, on a calculator, or using Python. But notice that here we are not talking about the mean of a bunch of numbers; we are talking about the mean of a distribution. Once we have defined the parameters of a distribution, we can, in principle, compute its expected values. Those are properties of the distribution in the same way that the perimeter is a property of a circle that gets defined once we set the value of the radius.

Another expected value is the variance, which we can use to describe the spread of a distribution. The variance appears naturally in many computations in statistics, but in practice, it is often more useful to use the standard deviation, which is the square root of the variance. The reason is that the standard deviation is in the same units as the random variable.
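As a small worked example, we can compute the mean, variance, and standard deviation of the distribution of a fair six-sided die directly from its pmf, without drawing a single sample. The variance is computed as the expected value of the squared deviation from the mean.

```python
import math
from fractions import Fraction

# pmf of a fair six-sided die
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

# Expected value: each value weighted by its probability
mean = sum(x * p for x, p in pmf.items())

# Variance: expected squared deviation from the mean
variance = sum((x - mean) ** 2 * p for x, p in pmf.items())

# Standard deviation: square root of the variance, in the units of the variable
std = math.sqrt(variance)

print(mean, variance, round(std, 3))  # 7/2 35/12 1.708
```

Notice that the mean, 3.5, is not a value the die can take; it is a property of the distribution and not of any individual roll.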

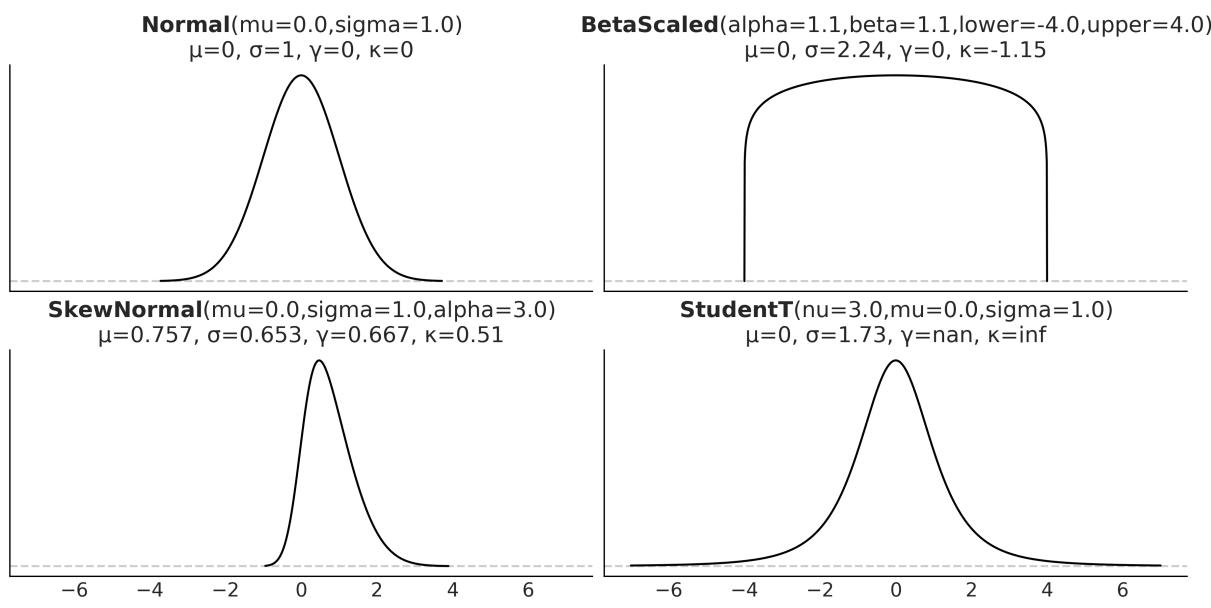

The mean and variance are often called the moments of a distribution. Other moments are skewness, which tells us about the asymmetry of a distribution, and the kurtosis, which tells us about the behavior of the tails or the extreme values [Westfall, 2014]. Figure 1.9 shows examples of different distributions and their mean μ, standard deviation σ, skew γ, and kurtosis κ. Notice that for some distributions, some moments may not be defined or they may be inf.

Figure 1.9: Four distributions with their first four moments

Now that we have learned about some of the basic concepts and jargon from probability theory, we can move on to the moment everyone was waiting for.

1.4.8 Bayes’ theorem

Without further ado, let’s contemplate, in all its majesty, Bayes’ theorem:

\[p(\theta \mid Y) = \frac{p(Y \mid \theta)p(\theta)}{p(Y)}\]

Well, it’s not that impressive, is it? It looks like an elementary school formula, and yet, paraphrasing Richard Feynman, this is all you need to know about Bayesian statistics. Learning where Bayes’ theorem comes from will help us understand its meaning. According to the product rule, we have:

\[p(\theta, Y) = p(\theta \mid Y) \ p(Y)\]

This can also be written as:

\[p(\theta, Y) = p(Y \mid \theta) \ p(\theta)\]

Given that the terms on the left are equal for both equations, we can combine them and write:

\[p(\theta \mid Y) \ p(Y) = p(Y \mid \theta) \ p(\theta)\]

On reordering, we get Bayes’ theorem:

\[p(\theta \mid Y) = \frac{p(Y \mid \theta)p(\theta)}{p(Y)}\]

Why is Bayes’ theorem that important? Let’s see.

First, it says that p(θ|Y) is not necessarily the same as p(Y|θ). This is a very important fact – one that is easy to miss in daily situations, even for people trained in statistics and probability. Let’s use a simple example to clarify why these quantities are not necessarily the same. The probability of a person being the Pope given that this person is Argentinian is not the same as the probability of being Argentinian given that this person is the Pope. As there are around 47,000,000 Argentinians alive and a single one of them is the current Pope, we have p(Pope | Argentinian) ≈ \(\frac{1}{47000000}\) and we also have p(Argentinian | Pope) = 1.

If we replace θ with “hypothesis” and Y with “data,” Bayes’ theorem tells us how to compute the probability of a hypothesis, θ, given the data, Y, and that’s the way you will find Bayes’ theorem explained in a lot of places. But how do we turn a hypothesis into something that we can put inside Bayes’ theorem? Well, we do it by using probability distributions. So, in general, our hypothesis is a hypothesis in a very, very, very narrow sense; we will be more precise if we talk about finding a suitable value for parameters in our models, that is, parameters of probability distributions. By the way, don’t try to set θ to statements such as “unicorns are real,” unless you are willing to build a realistic probabilistic model of unicorn existence!

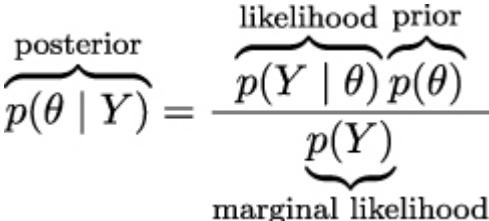

Bayes’ theorem is central to Bayesian statistics. As we will see in Chapter 2, using tools such as PyMC frees us of the need to explicitly write Bayes’ theorem every time we build a Bayesian model. Nevertheless, it is important to know the name of its parts because we will constantly refer to them, and it is important to understand what each part means because this will help us to conceptualize models. So, let me rewrite Bayes’ theorem now with labels:

\[\underbrace{p(\theta \mid Y)}_{\text{posterior}} = \frac{\overbrace{p(Y \mid \theta)}^{\text{likelihood}} \ \overbrace{p(\theta)}^{\text{prior}}}{\underbrace{p(Y)}_{\text{marginal likelihood}}}\]

The prior distribution should reflect what we know about the value of the parameter θ before seeing the data, Y. If we know nothing, like Jon Snow, we could use flat priors that do not convey too much information. In general, we can do better than flat priors, as we will learn in this book. The use of priors is why some people still talk about Bayesian statistics as subjective, even when priors are just another assumption that we make when modeling and hence are just as subjective (or objective) as any other assumption, such as likelihoods.

The likelihood is how we will introduce data in our analysis. It is an expression of the plausibility of the data given the parameters. In some texts, you will find people call this term sampling model, statistical model, or just model. We will stick to the name likelihood, and we will use the term model for the combination of priors and likelihood.

The posterior distribution is the result of the Bayesian analysis and reflects all that we know about a problem (given our data and model). The posterior is a probability distribution for the parameters in our model and not a single value. This distribution is a balance between the prior and the likelihood. There is a well-known joke: a Bayesian is one who, vaguely expecting a horse, and catching a glimpse of a donkey, strongly believes they have seen a mule. One excellent way to kill the mood after hearing this joke is to explain that if the likelihood and priors are both vague, you will get a posterior reflecting vague beliefs about seeing a mule rather than strong ones. Anyway, I like the joke, and I like how it captures the idea of a posterior being somehow a compromise between prior and likelihood. Conceptually, we can think of the posterior as the updated prior in light of (new) data. In theory, the posterior from one analysis can be used as the prior for a new analysis (in practice, life can be harder). This makes Bayesian analysis particularly suitable for analyzing data that becomes available in sequential order. One example could be an early warning system for natural disasters that processes online data coming from meteorological stations and satellites. For more details, read about online machine-learning methods.
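
To make the idea of using a posterior as the next prior concrete, here is a minimal sketch on a grid of candidate values for θ. Everything in it is an assumption made for illustration: the toy model (each observation is a head with probability θ), the made-up batches of data, and the helper names `normalize` and `update`.

```python
# Grid of candidate values for theta (hypothetical coin-like example)
grid = [i / 100 for i in range(101)]

def normalize(weights):
    """Rescale non-negative weights so that they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]

def update(prior, heads, tails):
    """Bayes' theorem on the grid: posterior is proportional to likelihood * prior."""
    unnormalized = [
        (theta ** heads) * ((1 - theta) ** tails) * p
        for theta, p in zip(grid, prior)
    ]
    return normalize(unnormalized)

flat_prior = normalize([1.0] * len(grid))

# Two batches of made-up data: (heads, tails)
batch_1 = (3, 1)
batch_2 = (2, 4)

# Sequential: the posterior after batch 1 becomes the prior for batch 2
posterior_1 = update(flat_prior, *batch_1)
posterior_seq = update(posterior_1, *batch_2)

# All at once: a single update with the pooled data
posterior_all = update(
    flat_prior, batch_1[0] + batch_2[0], batch_1[1] + batch_2[1]
)

# Largest difference between the two posteriors (tiny, floating-point noise)
max_diff = max(abs(a - b) for a, b in zip(posterior_seq, posterior_all))
print(max_diff)
```

In this toy setting, both routes lead to the same posterior up to floating-point error. With real models, carrying a posterior over as a prior usually requires approximations, which is one reason why life can be harder in practice.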

The last term is the marginal likelihood, sometimes referred to as the evidence. Formally, the marginal likelihood is the probability of observing the data averaged over all the possible values the parameters can take (as prescribed by the prior). We can write this as ∫_Θ p(Y|θ)p(θ)dθ. We will not really care about the marginal likelihood until Chapter 5. But for the moment, we can think of it as a normalization factor that ensures the posterior is a proper pmf or pdf. If we ignore the marginal likelihood, we can write Bayes’ theorem as a proportionality, which is also a common way to write Bayes’ theorem:

\[p(\theta \mid Y) \propto p(Y \mid \theta)p(\theta)\]
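
The role of the marginal likelihood as a normalization factor is easy to see when θ can take only a handful of values. The sketch below uses three hypothetical candidate values, a made-up prior, and made-up data; none of these numbers come from the text.

```python
# Three hypothetical candidate values for theta and a made-up prior over them
thetas = [0.2, 0.5, 0.8]
prior = [0.25, 0.50, 0.25]

# Made-up data: 4 heads and 1 tail, where each toss is a head with probability theta
heads, tails = 4, 1
likelihood = [t ** heads * (1 - t) ** tails for t in thetas]

# Numerator of Bayes' theorem: likelihood * prior (not yet a distribution)
unnormalized = [l * p for l, p in zip(likelihood, prior)]

# Marginal likelihood: a sum here, an integral when theta is continuous
marginal_likelihood = sum(unnormalized)

# Dividing by the marginal likelihood makes the values sum to 1
posterior = [u / marginal_likelihood for u in unnormalized]

for t, u, p in zip(thetas, unnormalized, posterior):
    print(f"theta={t}: unnormalized={u:.5f}, posterior={p:.3f}")
```

The ranking of the candidate values, and the ratios between them, are the same before and after dividing by the marginal likelihood. That is why the proportional form is often all we need.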

Understanding the exact role of each term in Bayes’ theorem will take some time and practice, and it will require a few examples, but that’s what the rest of this book is for.

1.5 Interpreting probabilities

Probabilities can be interpreted in various useful ways. For instance, we can think that P(A) = 0.125 means that if we repeat the survey many times, we would expect all three individuals to answer “yes” about 12.5% of the time. We are interpreting probabilities as the outcome of long-run experiments. This is a very common and useful interpretation. It not only can help us think about probabilities but can also provide an empirical method to estimate probabilities. Do we want to know the probability of a car tire exploding if filled with air beyond the manufacturer’s recommendation? Just inflate 120 tires or so, and you may get a good approximation. This is usually called the frequentist interpretation.
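
The long-run interpretation can be mimicked with a short simulation. This is a sketch under a stated assumption: each of the three individuals answers “yes” independently with probability 0.5, which is what gives 0.5³ = 0.125. The number of repetitions and the seed are arbitrary choices.

```python
import random

random.seed(0)  # fixed seed so the run is reproducible

p_yes = 0.5          # ASSUMPTION: each person answers "yes" with probability 0.5
n_people = 3
n_surveys = 100_000  # number of hypothetical repetitions of the survey

all_yes = 0
for _ in range(n_surveys):
    answers = [random.random() < p_yes for _ in range(n_people)]
    if all(answers):
        all_yes += 1

estimate = all_yes / n_surveys
print(f"exact: {p_yes ** n_people}, long-run estimate: {estimate:.4f}")
```

The estimate gets closer to 0.125 as the number of repetitions grows, which is the empirical method the frequentist interpretation suggests.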

Another interpretation of probability, usually called subjective or Bayesian interpretation, states that probabilities can be interpreted as measures of an individual’s uncertainty about events. In this interpretation, probabilities are about our state of knowledge of the world and are not necessarily based on repeated trials. Under this definition of probability, it is valid and natural to ask about the probability of life on Mars, the probability of the mass of an electron being 9.1 × 10⁻³¹ kg, or the probability that the 9th of July of 1816 was a sunny day in Buenos Aires. All these are one-time events. We cannot recreate 1 million universes, each with one Mars, and check how many of them develop life. Of course, we can do this as a mental experiment, so long-term frequencies can still be a valid mental scaffold.

Sometimes the Bayesian interpretation of probabilities is described in terms of personal beliefs; I don’t like that. I think it can lead to unnecessary confusion as beliefs are generally associated with the notion of faith or unsupported claims. This association can easily lead people to think that Bayesian probabilities, and by extension Bayesian statistics, are less objective or less scientific than alternatives. I think it also helps to generate confusion about the role of prior knowledge in statistics and makes people think that being objective or rational means not using prior information.

Bayesian methods are as subjective (or objective) as any other well-established scientific method we have. Let me explain myself with an example: life on Mars exists or does not exist; the outcome is binary, a yes-no question. But given that we are not sure about that fact, a sensible course of action is trying to find out how likely life on Mars is. To answer this question any honest and scientific-minded person will use all the relevant geophysical data about Mars, all the relevant biochemical knowledge about necessary conditions for life, and so on. The response will be necessarily about our epistemic state of knowledge, and others could disagree and even get different probabilities. But at least, in principle, they all will be able to provide arguments in favor of their data, their methods, their modeling decisions, and so on. A scientific and rational debate about life on Mars does not admit arguments such as “an angel told me about tiny green creatures.” Bayesian statistics, however, is just a procedure to make scientific statements using probabilities as building blocks.

1.6 Probabilities, uncertainty, and logic

Probabilities can help us to quantify uncertainty. If we do not have information about a problem, it is reasonable to state that every possible event is equally likely. This is equivalent to assigning the same probability to every possible event. In the absence of information, our uncertainty is maximum, and I am not saying this colloquially; this is something we can compute using probabilities. If we know instead that some events are more likely, then this can be formally represented by assigning a higher probability to those events and less to the others. Notice that when we talk about events in stats-speak, we are not restricting ourselves to things that can happen, such as an asteroid crashing into Earth or my auntie’s 60th birthday party. An event is just any of the possible values (or a subset of values) a variable can take, such as the event that you are older than 30, the price of a Sachertorte, or the number of bikes that will be sold next year around the world.
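
The text does not say which quantity is used to compute uncertainty. One common choice is Shannon entropy, and the sketch below assumes that this is the measure we want. The three probability assignments are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability events contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

# Four possible events under three hypothetical states of knowledge
no_information = [0.25, 0.25, 0.25, 0.25]    # every event equally likely
some_information = [0.70, 0.10, 0.10, 0.10]  # one event is more likely
certainty = [1.0, 0.0, 0.0, 0.0]             # we know which event occurs

for name, probs in [
    ("uniform", no_information),
    ("informed", some_information),
    ("certain", certainty),
]:
    print(f"{name}: {entropy(probs):.3f} bits")
```

Under this measure, the uniform assignment has the largest entropy of the three, and the entropy drops to zero when one event is certain.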

The concept of probability is also related to the subject of logic. Under classical logic, we can only have statements that take the values of true or false. Under the Bayesian definition of probability, certainty is just a special case: a true statement has a probability of 1, and a false statement has a probability of 0. We would assign a probability of 1 to the statement that there is Martian life only after having conclusive data indicating something is growing, reproducing, and doing other activities we associate with living organisms.

Notice, however, that assigning a probability of 0 is harder because we could always think that there is some Martian spot that is unexplored, or that we have made mistakes with some experiments, or there are several other reasons that could lead us to falsely believe life is absent on Mars even if it is not. This is related to Cromwell’s rule, which states that we should reserve the probabilities of 0 or 1 for logically true or false statements. Interestingly enough, it can be shown that if we want to extend the logic to include uncertainty, we must use probabilities and probability theory.

As we will soon see, Bayes’ theorem is just a logical consequence of the rules of probability. Thus, we can think of Bayesian statistics as an extension of logic that is useful whenever we are dealing with uncertainty. One way to justify using the Bayesian method, then, is to recognize that uncertainty is commonplace. We generally have to deal with incomplete and/or noisy data, we are intrinsically limited by our evolution-sculpted primate brain, and so on.

The Bayesian Ethos

Probabilities are used to measure the uncertainty we have about parameters, and Bayes’ theorem is a mechanism to correctly update those probabilities in light of new data, hopefully reducing our uncertainty.

1.7 Single-parameter inference

Now that we know what Bayesian statistics is, let’s learn how to do Bayesian statistics with a simple example. We are going to begin by inferring a single, unknown parameter.

1.7.1 The coin-flipping problem

The coin-flipping problem, or the BetaBinomial model if you want to sound fancy at parties, is a classical problem in statistics and goes like this: we toss a coin several times and record how many heads and tails we get. Based on this data, we try to answer questions such as, is the coin fair? Or, more generally, how biased is the coin? While this problem may sound dull, we should not underestimate it.

The coin-flipping problem is a great example to learn the basics of Bayesian statistics because it is a simple model that we can solve and compute with ease. Besides, many real problems consist of binary, mutually exclusive outcomes such as 0 or 1, positive or negative, odds or evens, spam or ham, hotdog or not a hotdog, cat or dog, safe or unsafe, and healthy or unhealthy. Thus, even when we are talking about coins, this model applies to any of those problems. To estimate the bias of a coin, and in general, to answer any questions in a Bayesian setting, we will need data and a probabilistic model. For this example, we will assume that we have already tossed a coin several times and we have a record of the number of observed heads, so the data-gathering part is already done. Getting the model will take a little bit more effort. Since this is our first model, we will explicitly write Bayes’ theorem and do all the necessary math (don’t be afraid, I promise it will be painless) and we will proceed very slowly. From Chapter 2 onward, we will use PyMC and our computer to do the math for us.

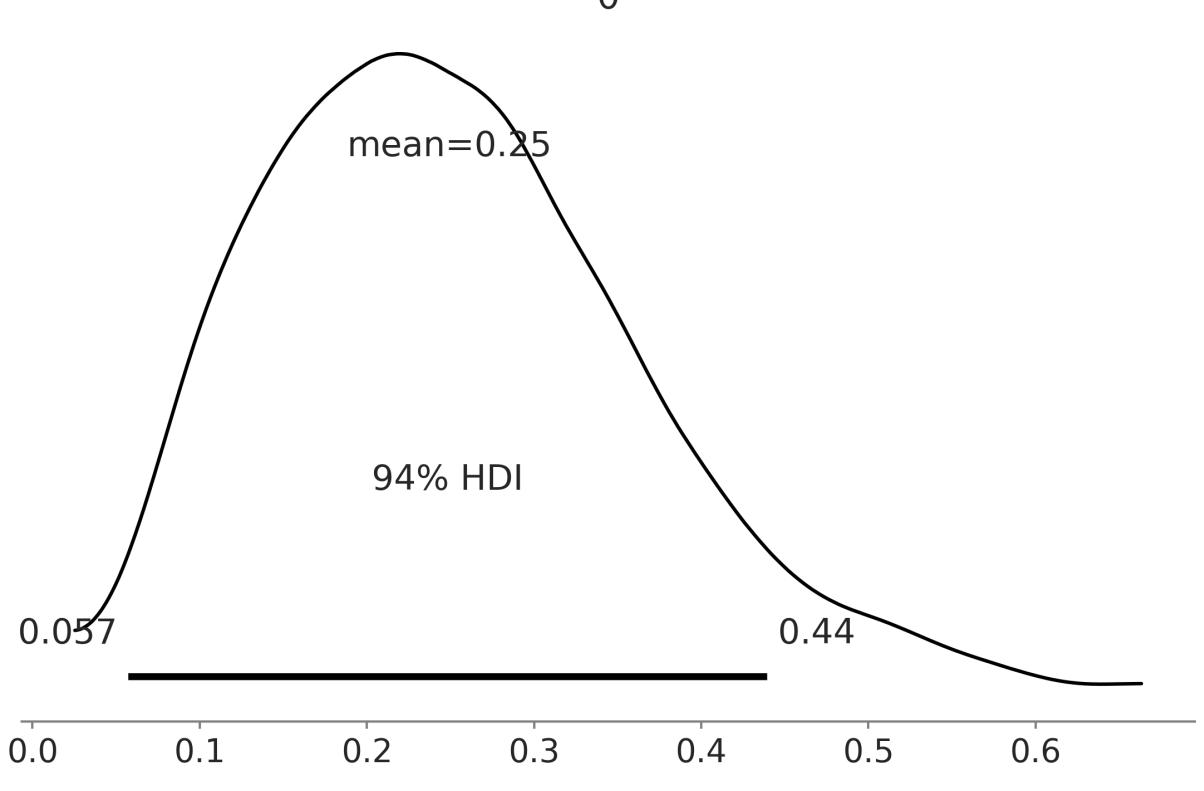

The first thing we will do is generalize the concept of bias. We will say that a coin with a bias of 1 will always land heads, one with a bias of 0 will always land tails, and one with a bias of 0.5 will land heads half of the time and tails half of the time. To represent the bias, we will use the parameter θ, and to represent the total number of heads for several tosses, we will use the variable Y. According to Bayes’ theorem, we have to specify the prior, p(θ), and likelihood, p(Y|θ), we will use. Let’s start with the likelihood.

1.7.2 Choosing the likelihood

Let’s assume that only two outcomes are possible, heads or tails, and let’s also assume that a coin toss does not affect other tosses, that is, we are assuming coin tosses are independent of each other. We will further assume all coin tosses come from the same distribution. Thus the random variable coin toss is an example of an independent and identically distributed (iid) variable. I hope you agree that these are very reasonable assumptions to make for our problem. Given these assumptions, a good candidate for the likelihood is the Binomial distribution:

\[p(Y \mid \theta) = \underbrace{\frac{N!}{y!(N-y)!}}_{\text{normalizing constant}} \theta^y (1-\theta)^{N-y}\]

This is a discrete distribution returning the probability of getting y heads (or, in general, successes) out of N coin tosses (or, in general, trials or experiments) given a fixed value of θ.
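
The formula translates almost literally into code. The sketch below uses only the standard library; the function name `binomial_pmf` and the values of N and θ are illustrative choices and are not taken from the book’s figures.

```python
from math import comb

def binomial_pmf(y, N, theta):
    """Probability of y successes in N trials with success probability theta."""
    # comb(N, y) is N! / (y! (N - y)!)
    return comb(N, y) * theta**y * (1 - theta) ** (N - y)

N, theta = 4, 0.35   # illustrative values
pmf = [binomial_pmf(y, N, theta) for y in range(N + 1)]

for y, p in enumerate(pmf):
    print(f"p(y={y} | theta={theta}) = {p:.4f}")

# The probabilities of all possible outcomes add up to 1
print(f"sum over y: {sum(pmf):.4f}")
```

In practice, we would rely on a library implementation rather than writing the pmf by hand. The point here is only to show that each term in the formula has a direct counterpart in code.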

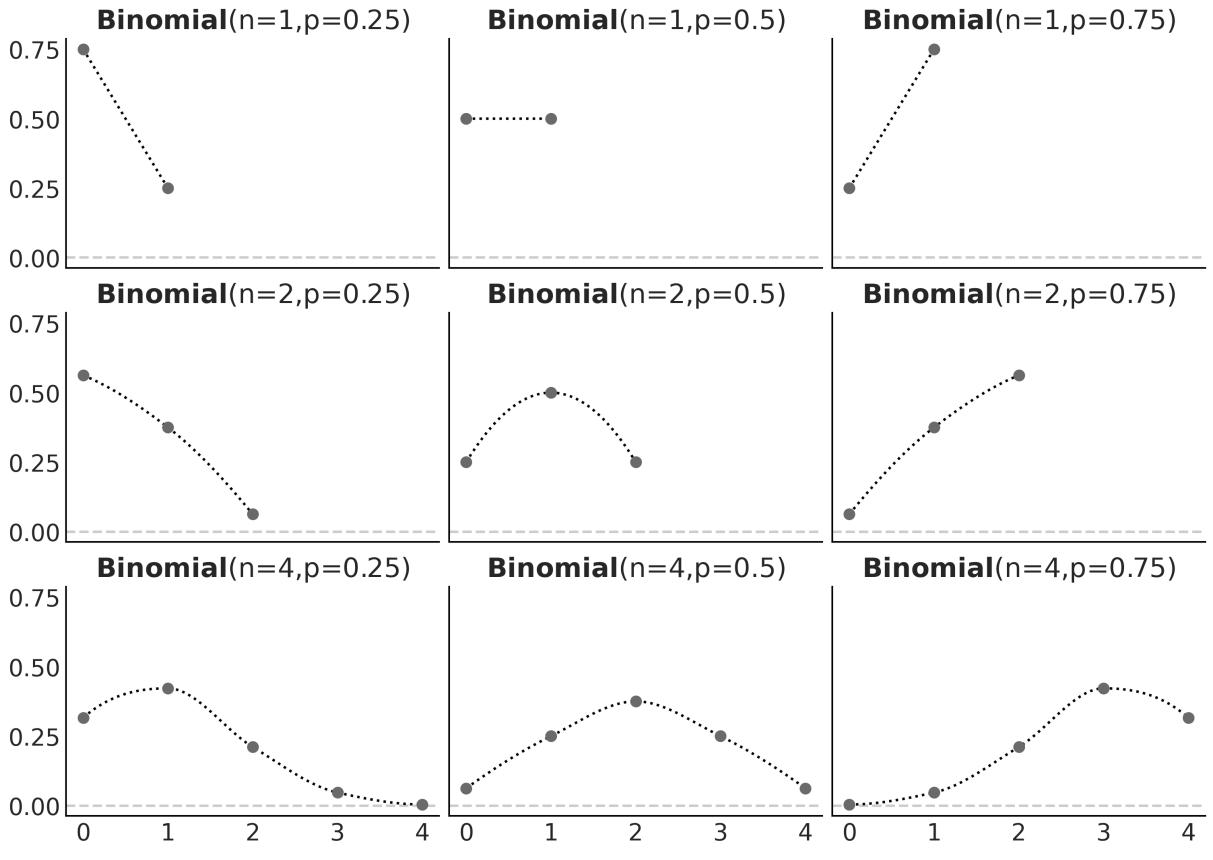

Figure 1.10 shows nine distributions from the Binomial family; each subplot has its legend indicating the values of the parameters. Notice that for this plot, I did not omit the values on the y-axis. I did this so you can check for yourself that if you sum the height of all bars, you will get 1, that is, for discrete distributions, the height of the bars represents actual probabilities.

Figure 1.10: Nine members of the Binomial family

The Binomial distribution is a reasonable choice for the likelihood. We can see that θ indicates how likely it is to obtain a head when tossing a coin. This is easier to see when N = 1, where the height of the bar for y = 1 (heads) is exactly θ, but the interpretation is valid for any value of N.
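
We can also check the meaning of θ by simulating independent and identically distributed tosses. This is a rough sketch: the helper `toss_coins`, the value of θ, the number of experiments, and the seed are all arbitrary choices made for illustration.

```python
import random

random.seed(1)  # fixed seed for reproducibility

def toss_coins(N, theta):
    """Number of heads in N independent tosses, each a head with probability theta."""
    return sum(random.random() < theta for _ in range(N))

theta = 0.35          # illustrative bias
n_experiments = 50_000

# N = 1: the fraction of experiments with y = 1 approximates theta
freq_heads_n1 = (
    sum(toss_coins(1, theta) for _ in range(n_experiments)) / n_experiments
)

# N = 8: the average proportion of heads per experiment also approximates theta
N = 8
mean_prop_n8 = (
    sum(toss_coins(N, theta) for _ in range(n_experiments)) / (n_experiments * N)
)

print(f"N=1: {freq_heads_n1:.3f}   N=8: {mean_prop_n8:.3f}   theta: {theta}")
```

Both quantities should land close to θ, whatever the number of tosses per experiment.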

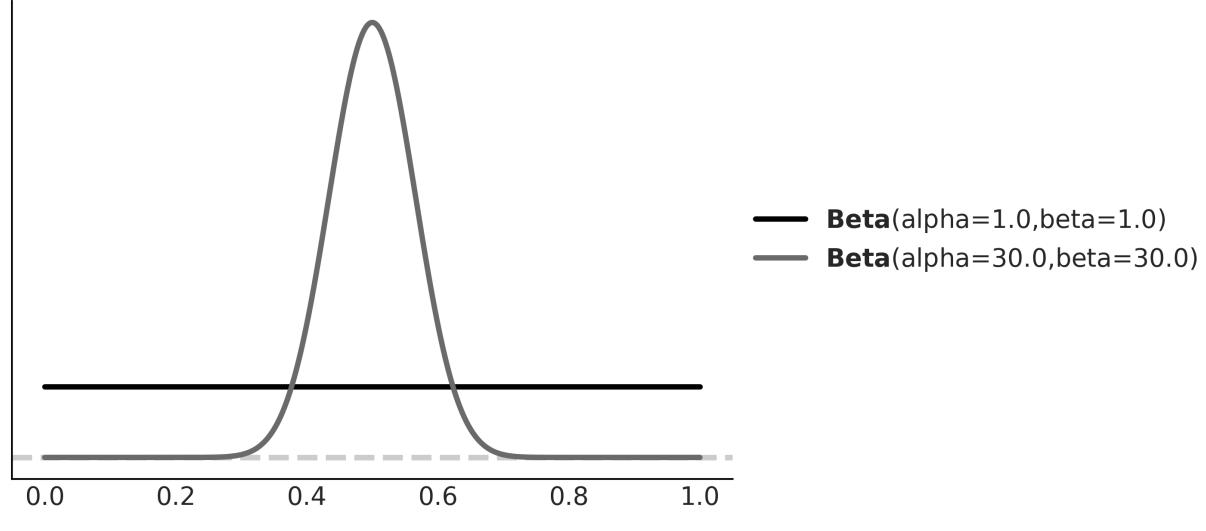

1.7.3 Choosing the prior

As a prior, we will use a Beta distribution, which is a very common distribution in Bayesian statistics and looks as follows:

\[p(\theta) = \underbrace{\frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\Gamma(\beta)}}_{\text{normalizing constant}} \quad \theta^{\alpha - 1}(1 - \theta)^{\beta - 1}\]

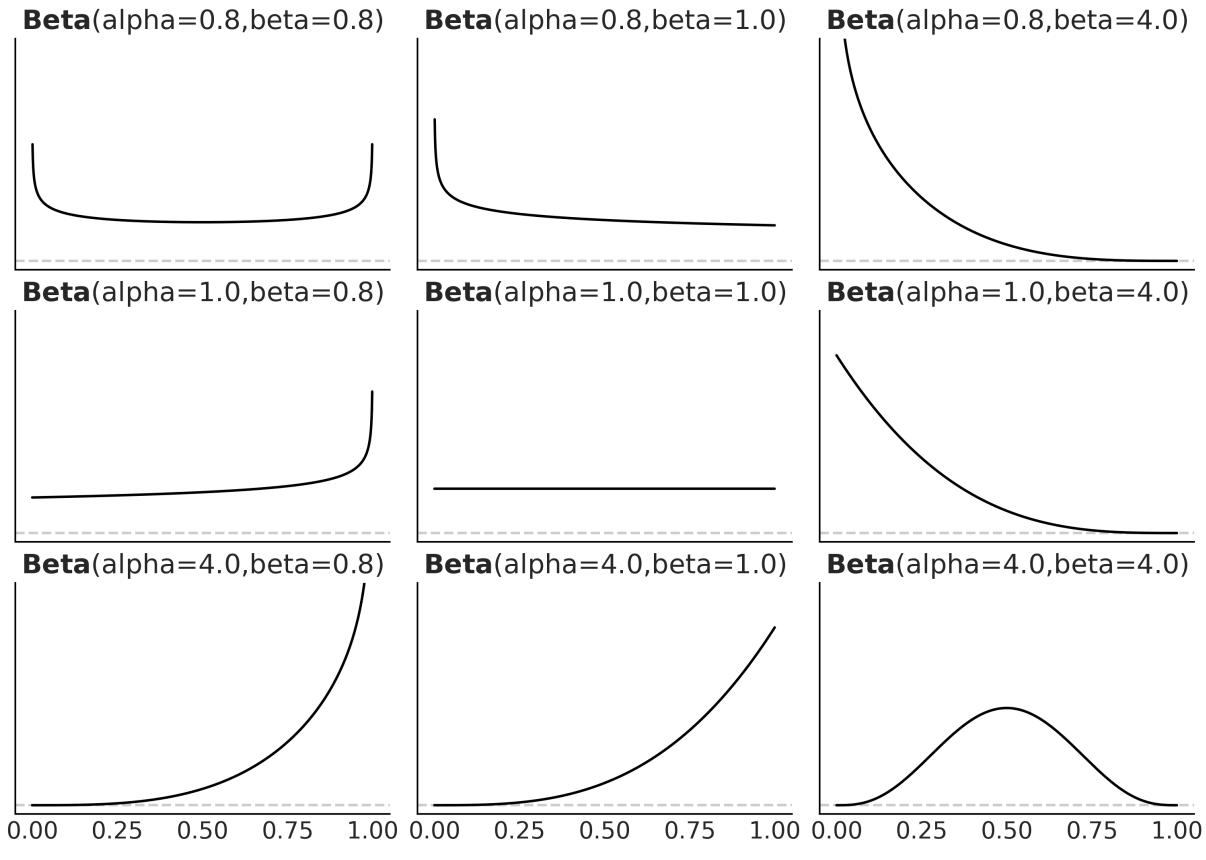

If we look carefully, we will see that the Beta distribution looks similar to the Binomial except for the first term. Γ is the Greek uppercase gamma letter, which represents the gamma function, but that’s not really important. What is relevant for us is that the first term is a normalizing constant that ensures the distribution integrates to 1. We can see from the preceding formula that the Beta distribution has two parameters, α and β. Figure 1.11 shows nine members of the Beta family.
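
To see the normalizing constant at work, we can code the Beta density with the standard library’s gamma function and integrate it numerically. This is a sketch, not a production implementation: the midpoint-rule integrator and the parameter values are our own choices.

```python
from math import gamma

def beta_pdf(theta, alpha, beta):
    """Beta density: normalizing constant * theta^(alpha-1) * (1-theta)^(beta-1)."""
    norm_const = gamma(alpha + beta) / (gamma(alpha) * gamma(beta))
    return norm_const * theta ** (alpha - 1) * (1 - theta) ** (beta - 1)

def integrate(f, n=10_000):
    """Midpoint rule on (0, 1); midpoints never fall exactly on 0 or 1."""
    h = 1 / n
    return sum(f((i + 0.5) * h) for i in range(n)) * h

for alpha, beta in [(1, 1), (2, 5), (20, 20)]:   # illustrative parameters
    area = integrate(lambda t: beta_pdf(t, alpha, beta))
    print(f"Beta({alpha}, {beta}): area under the curve is about {area:.4f}")
```

For each pair of parameters, the area comes out very close to 1. Without the first term, the area would change with α and β.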

Figure 1.11: Nine members of the Beta family

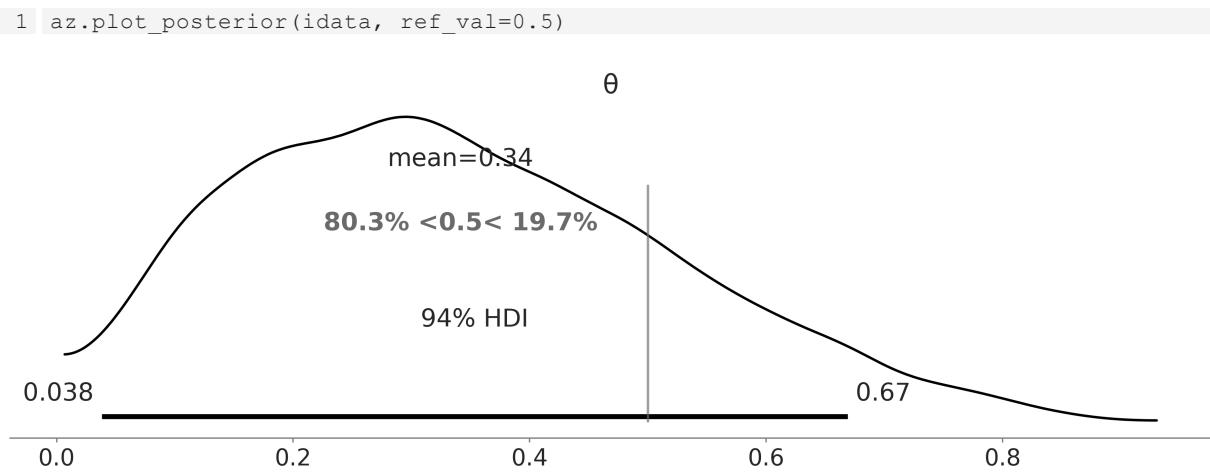

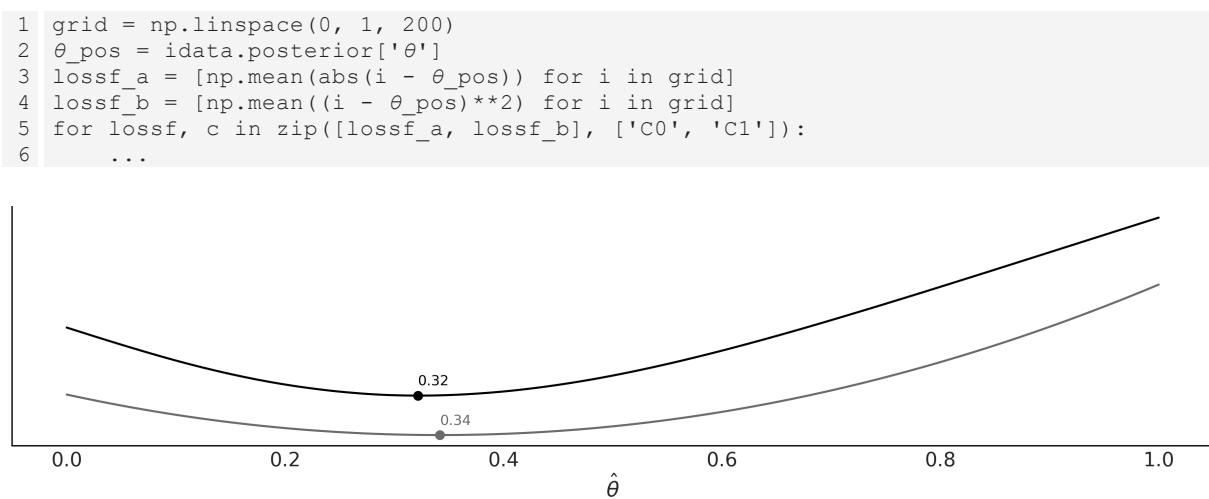

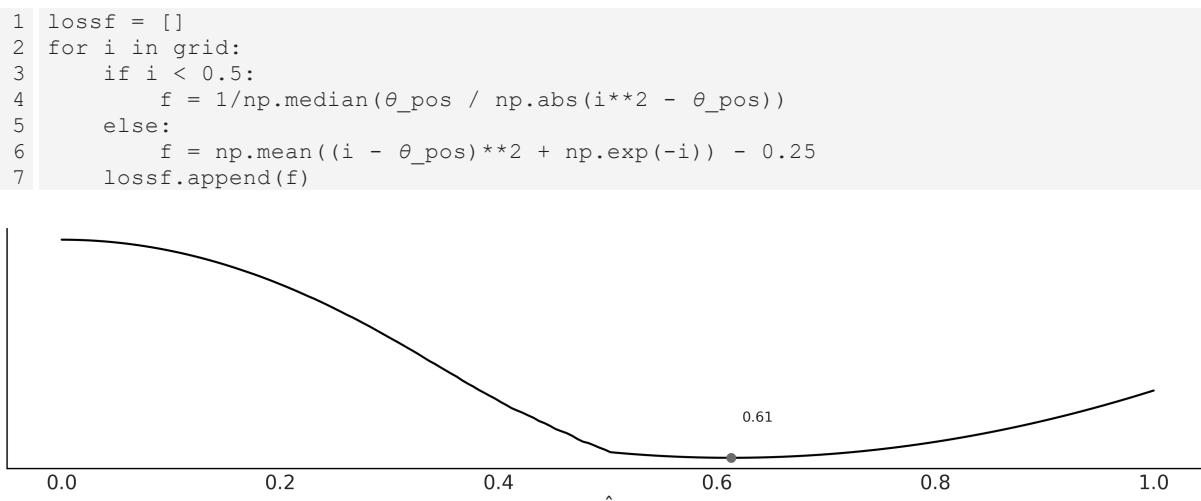

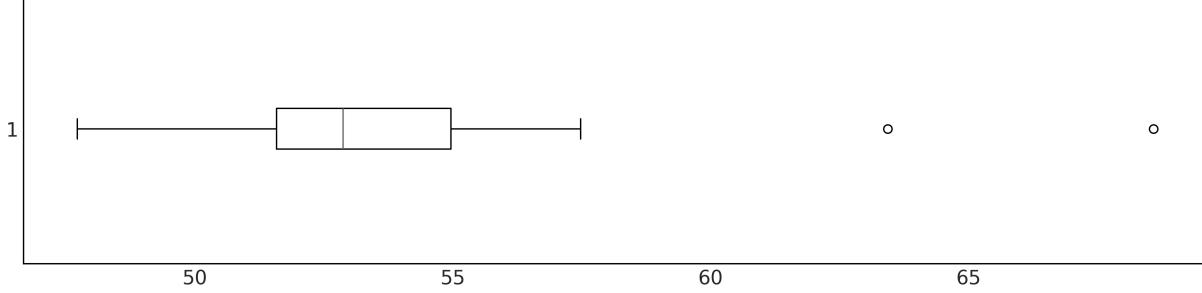

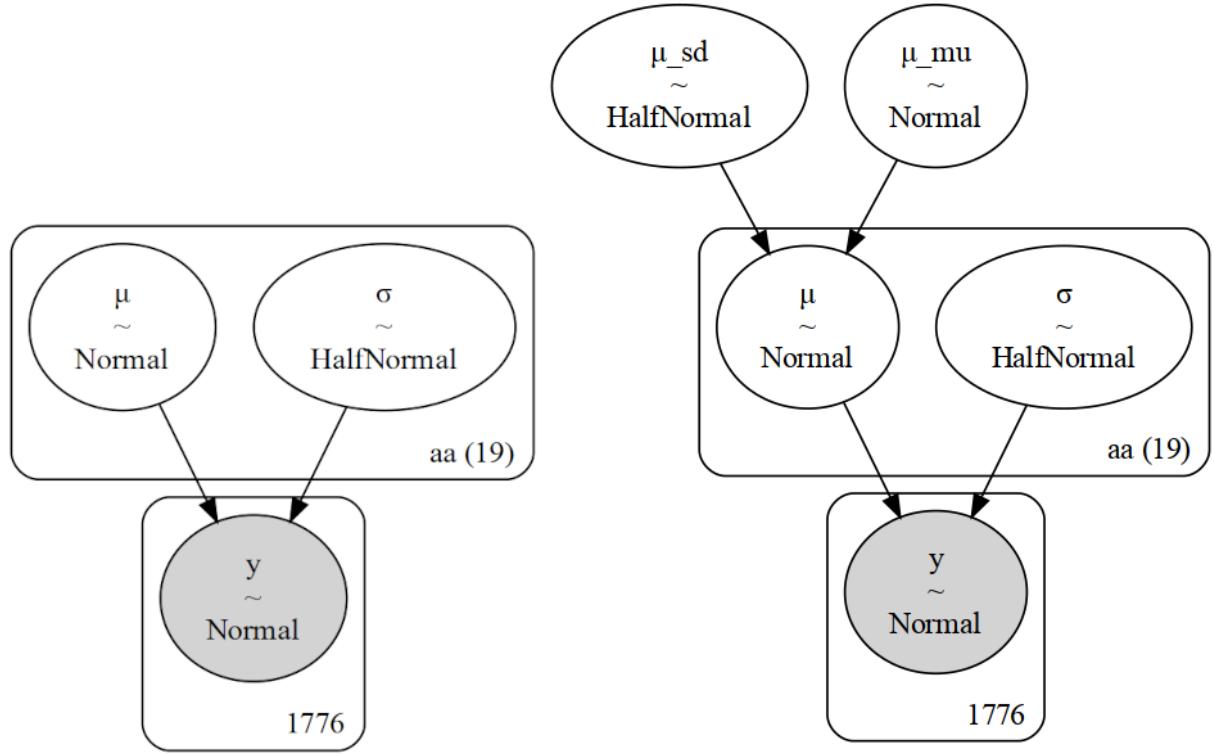

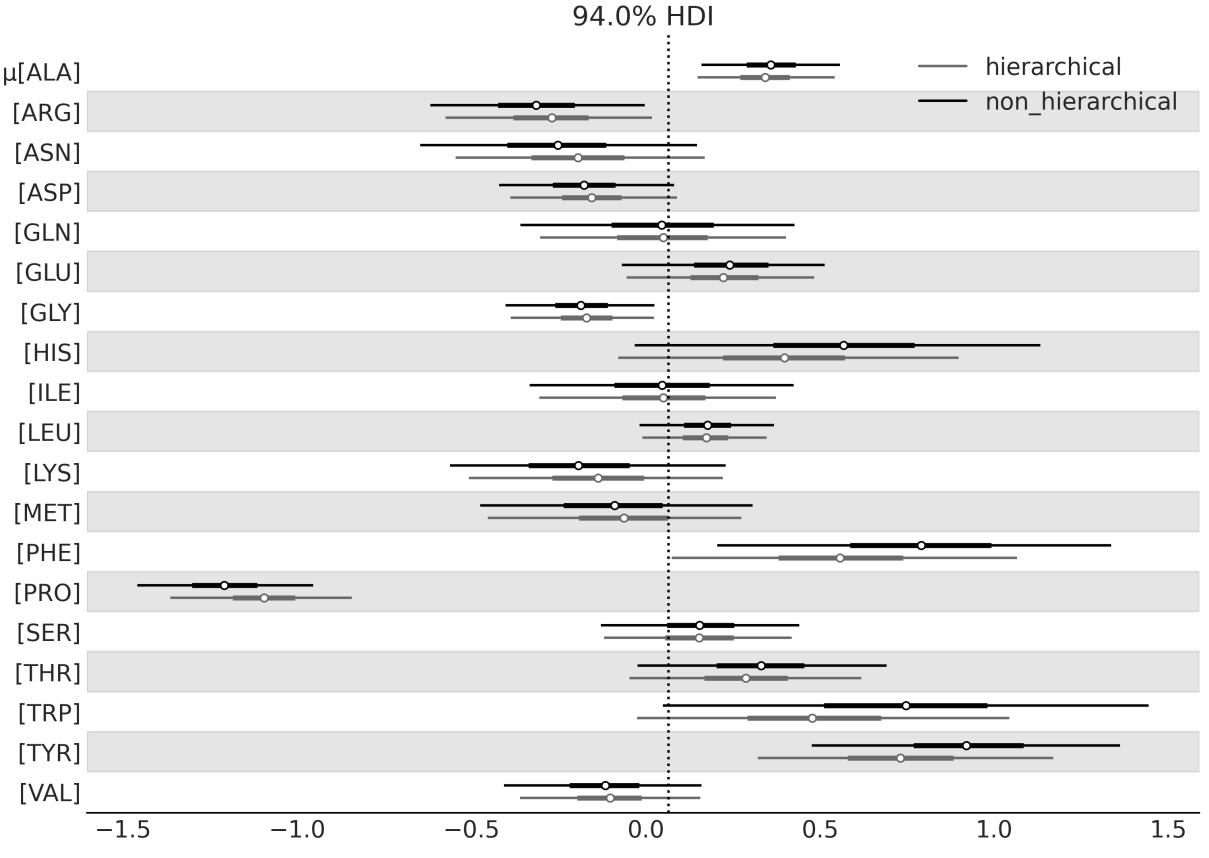

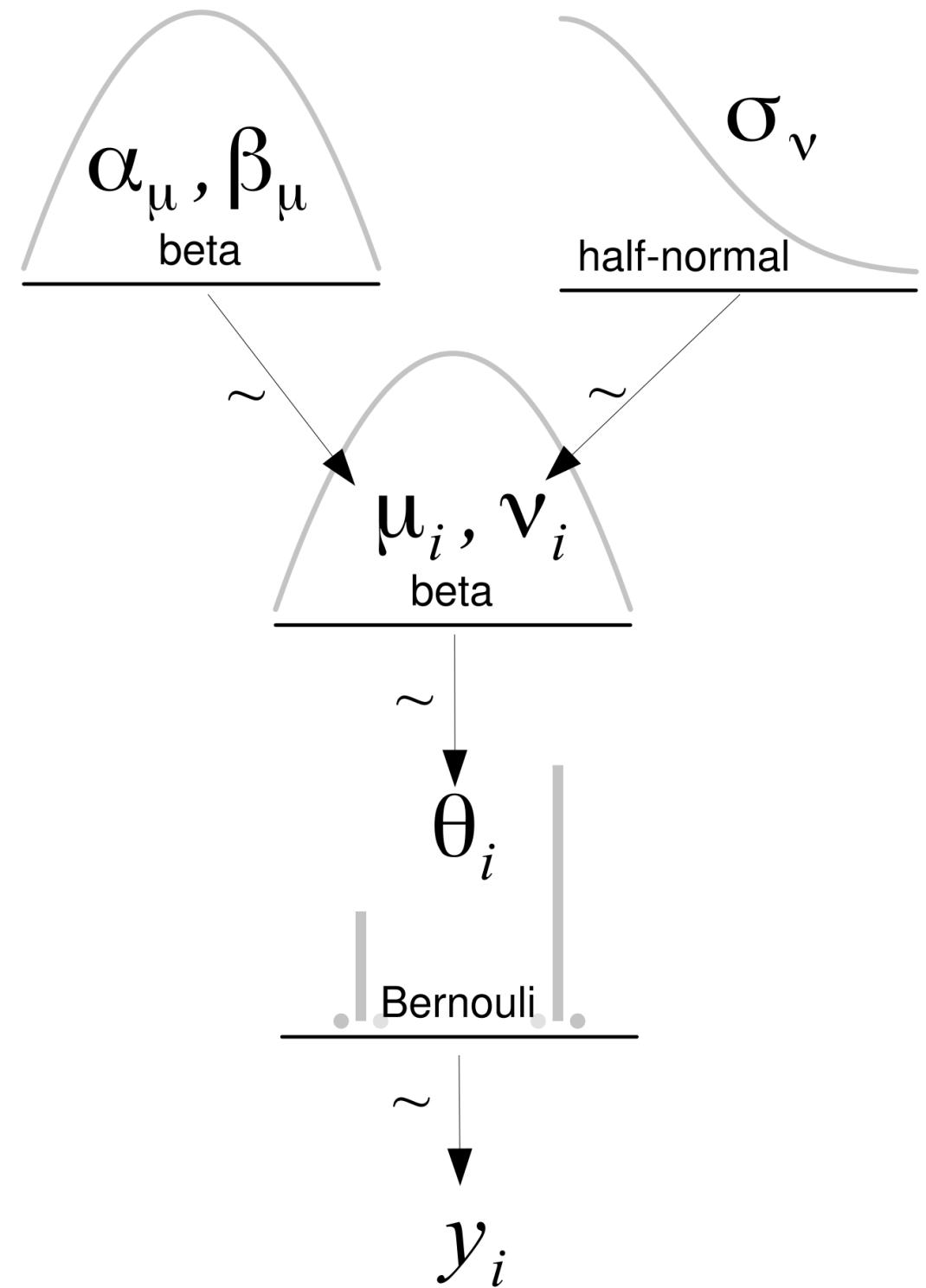

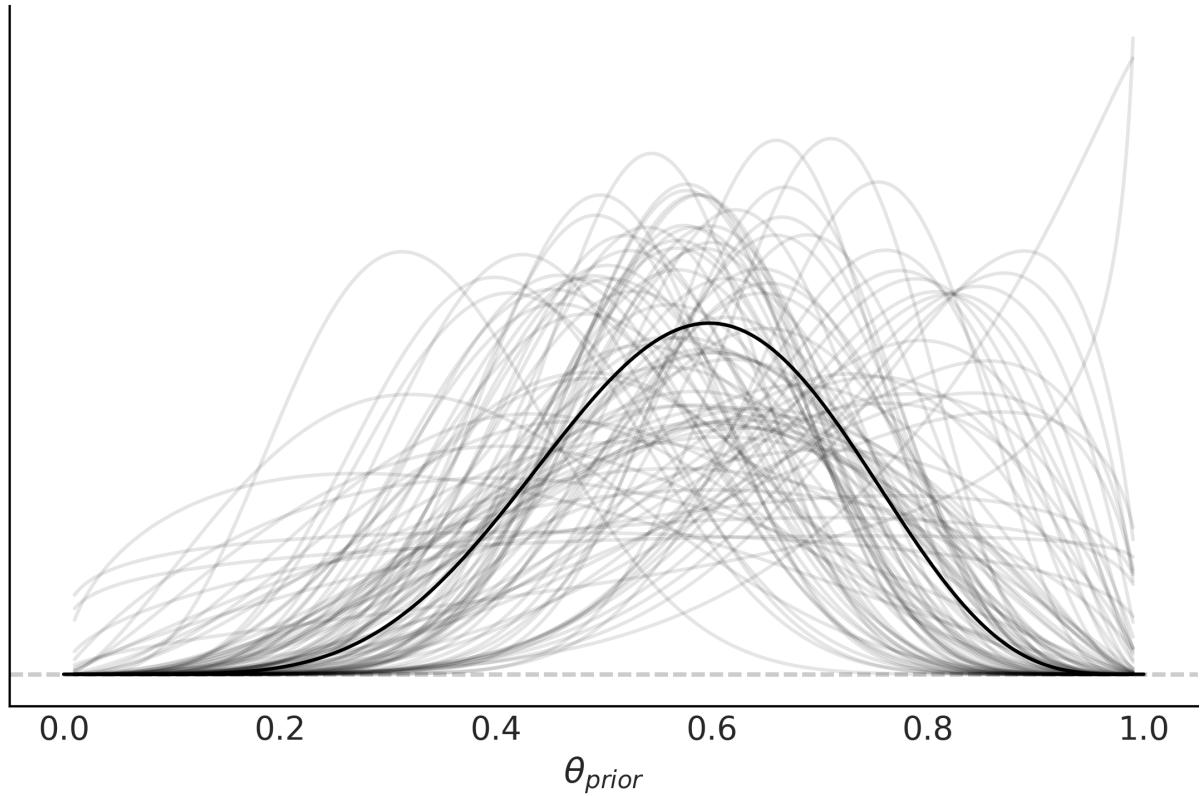

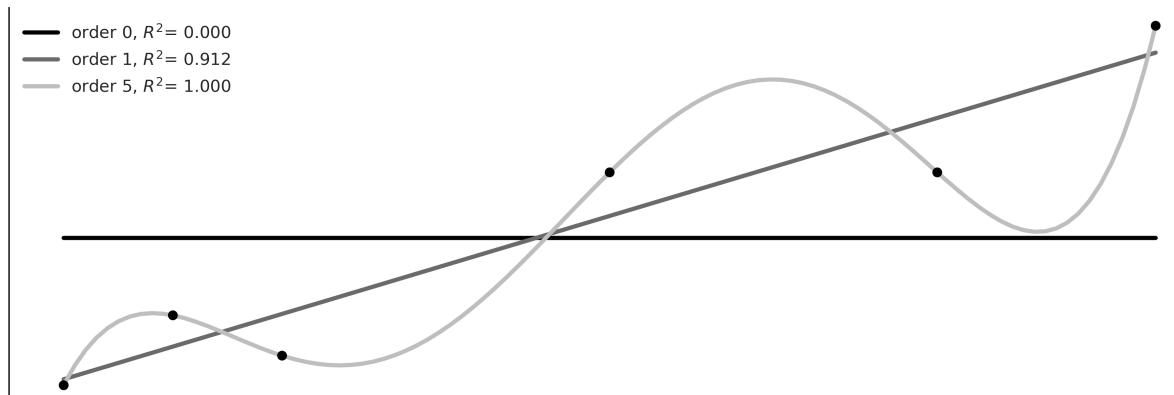

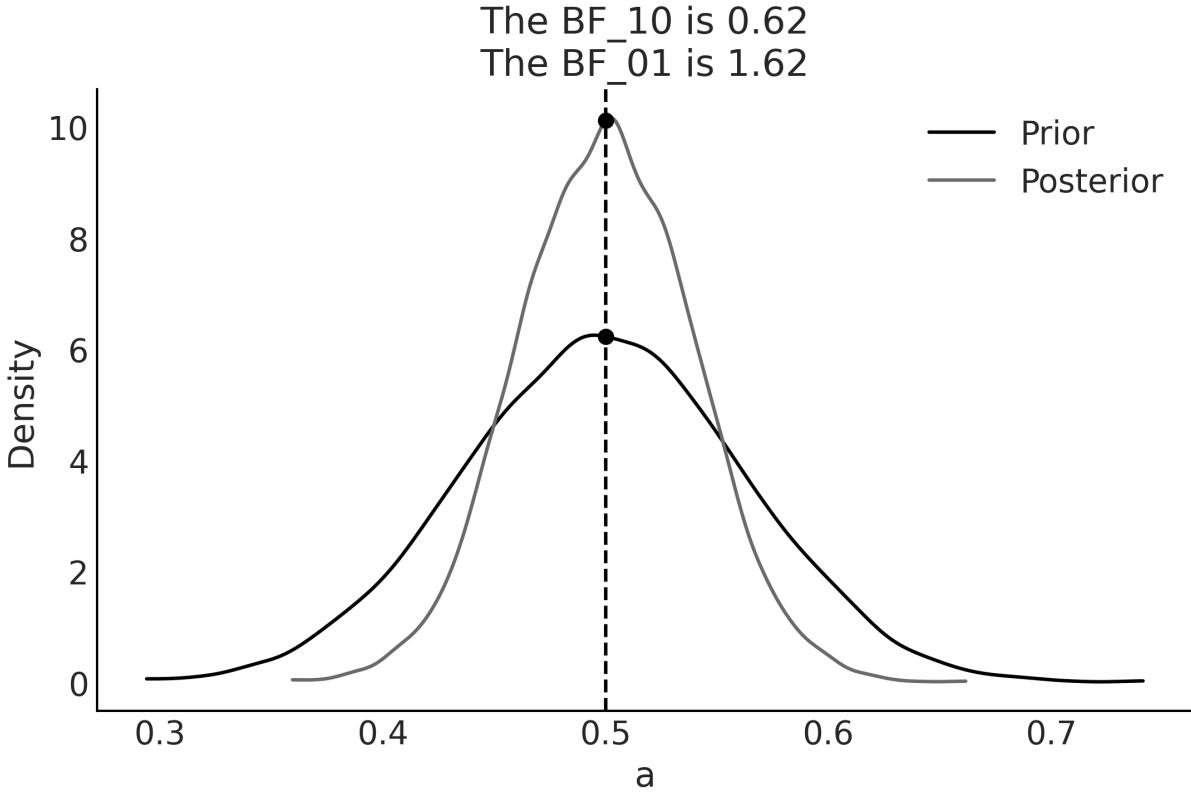

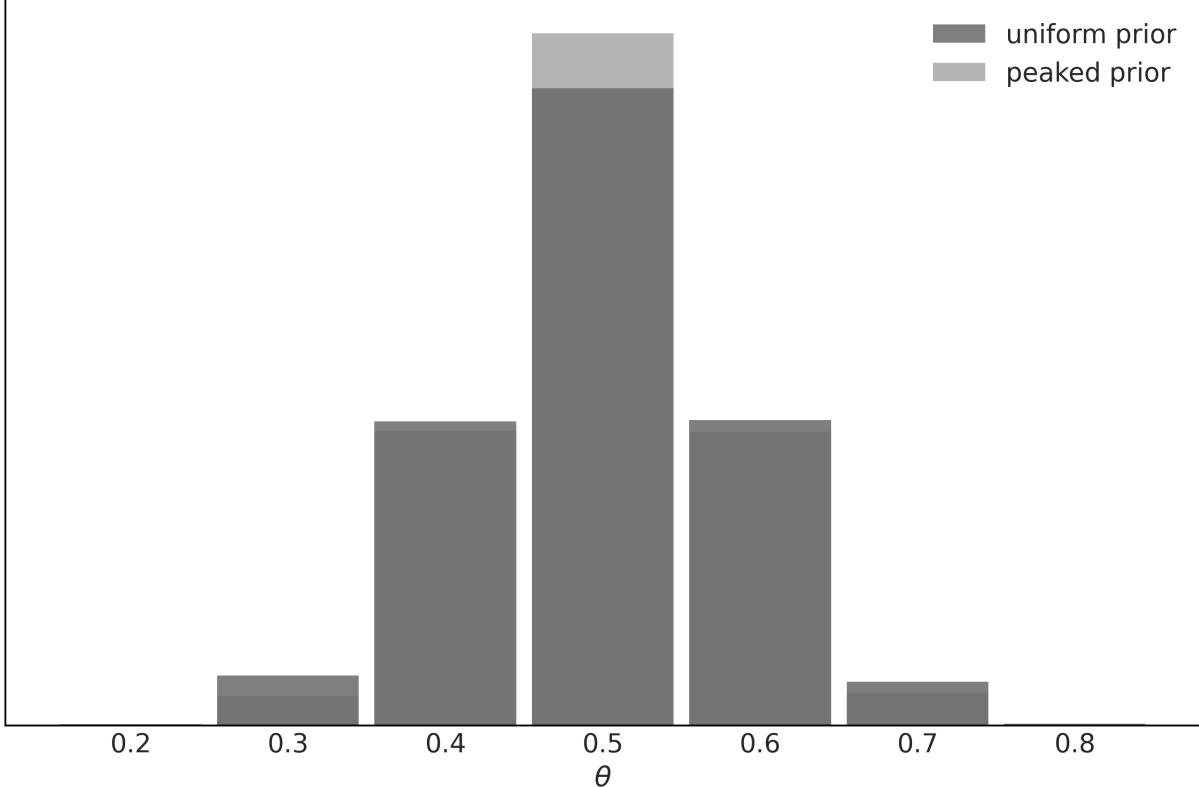

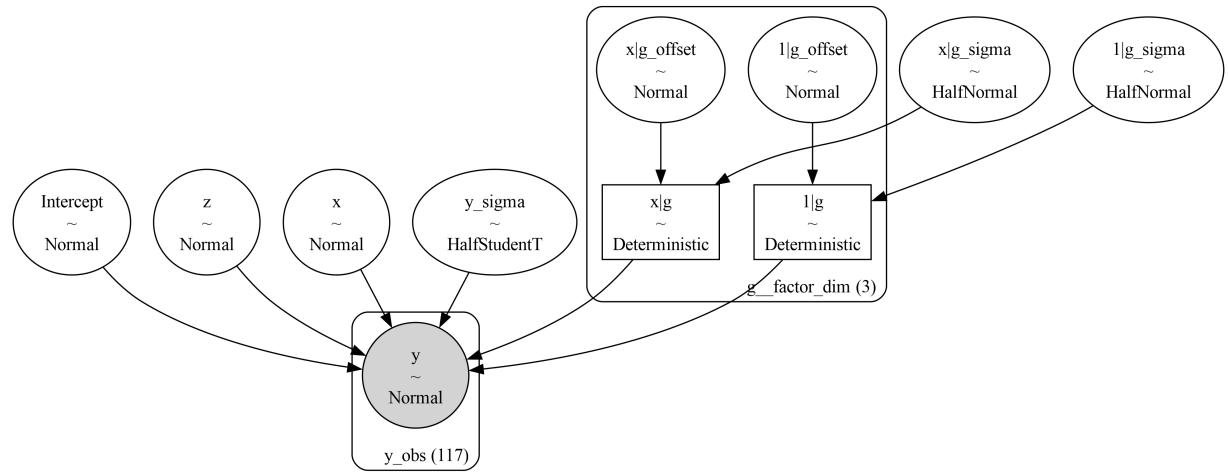

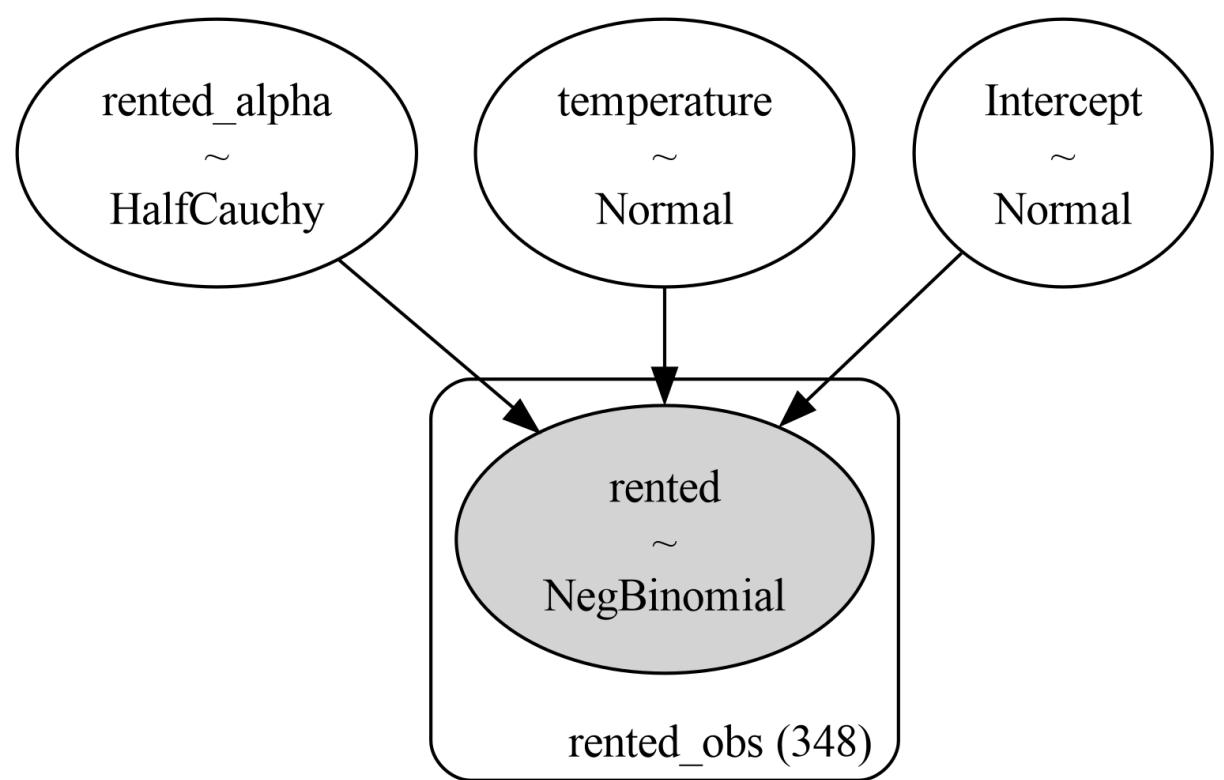

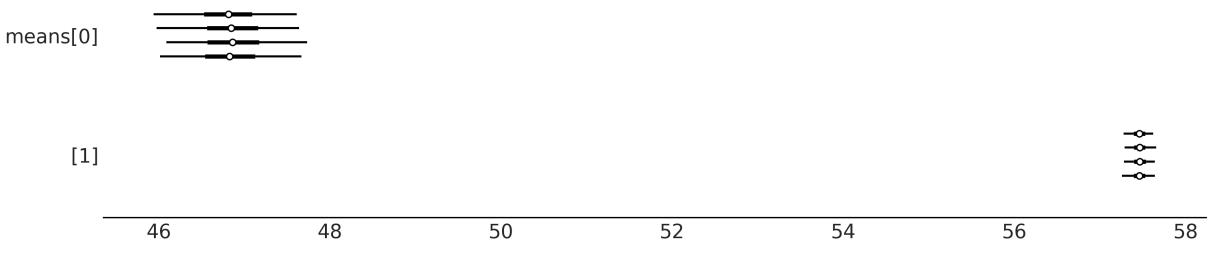

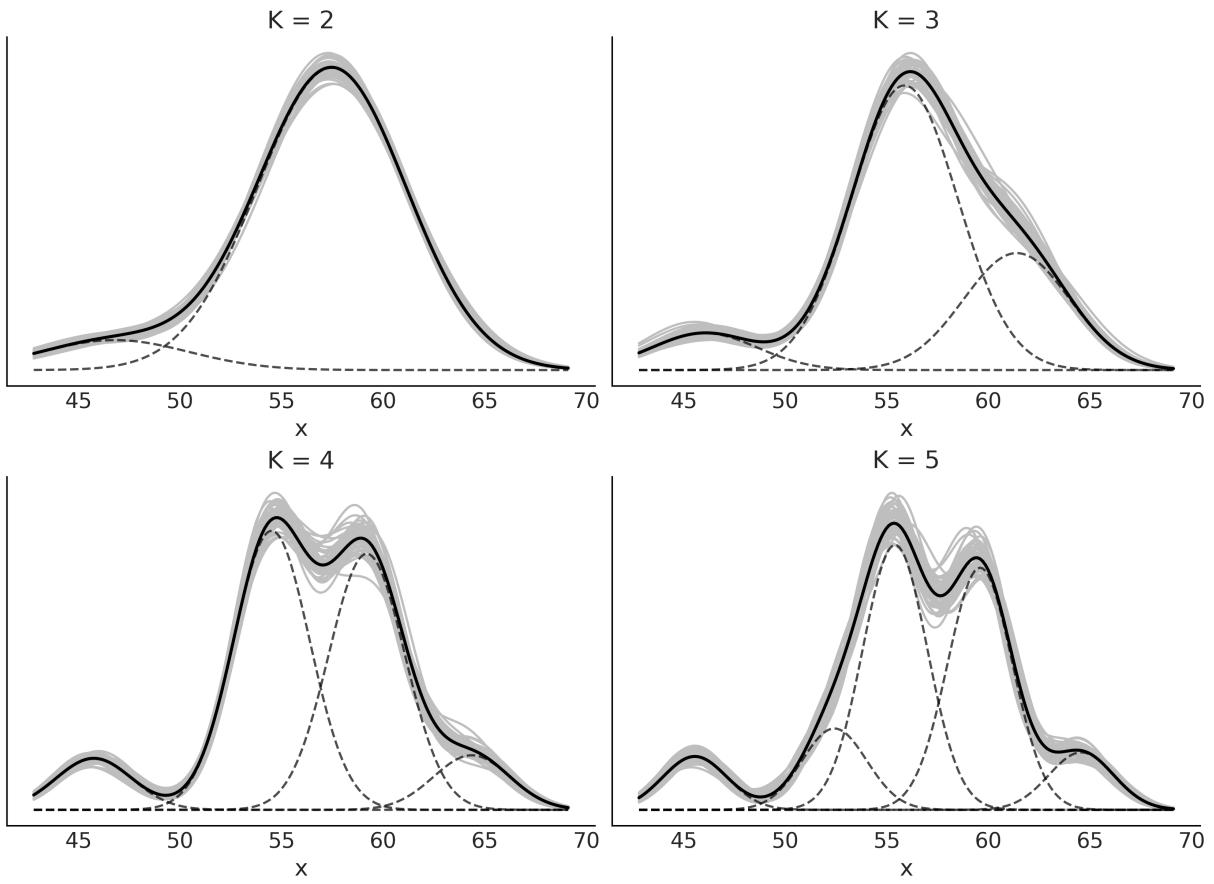

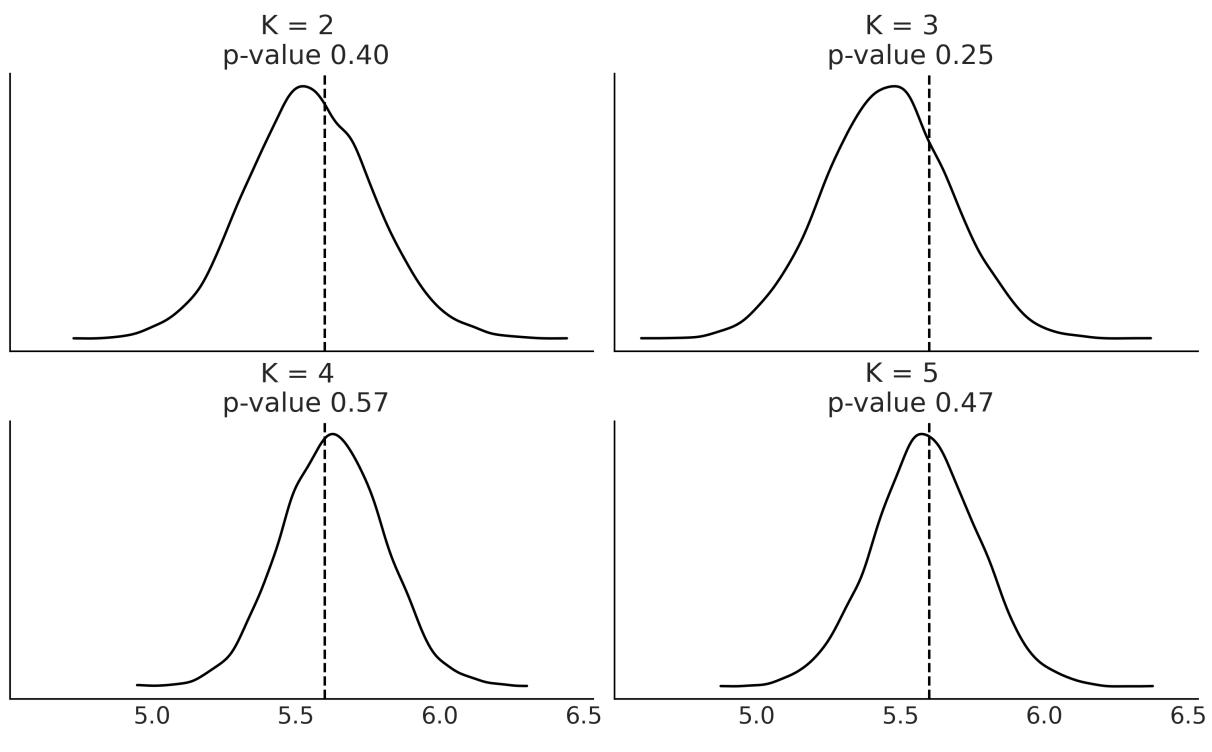

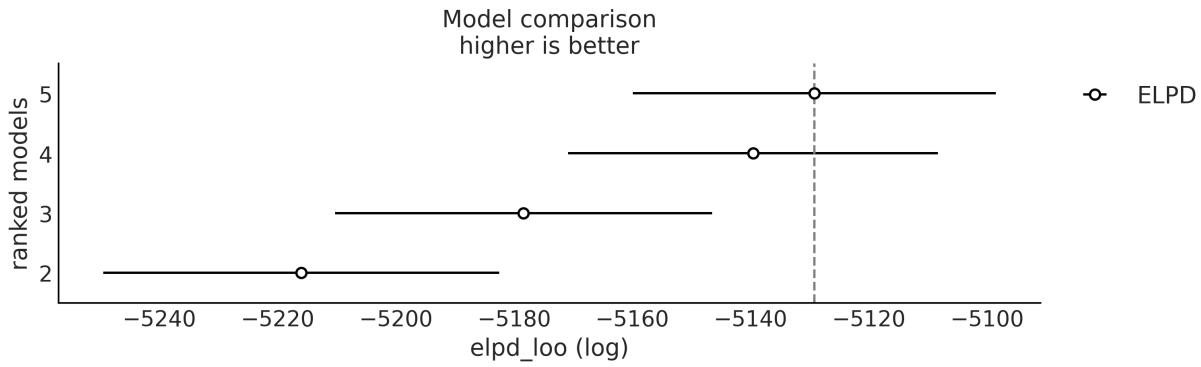

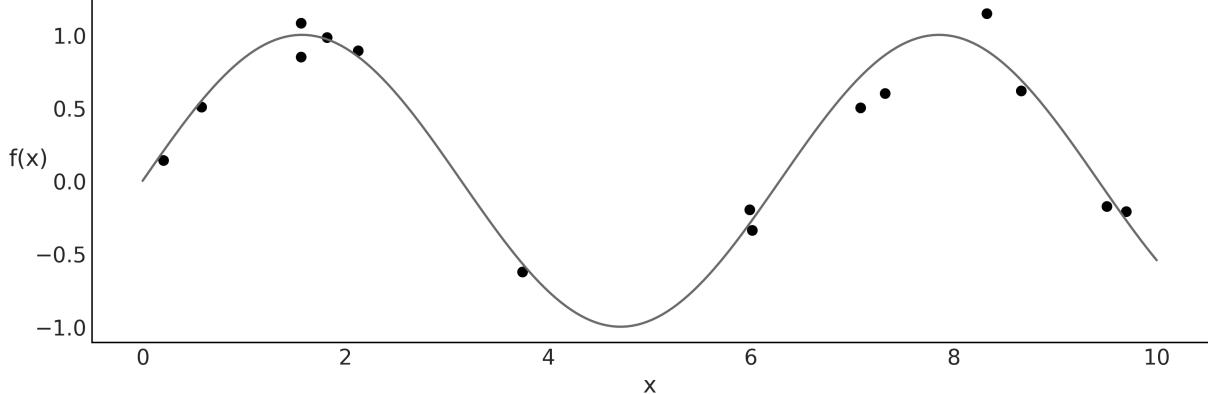

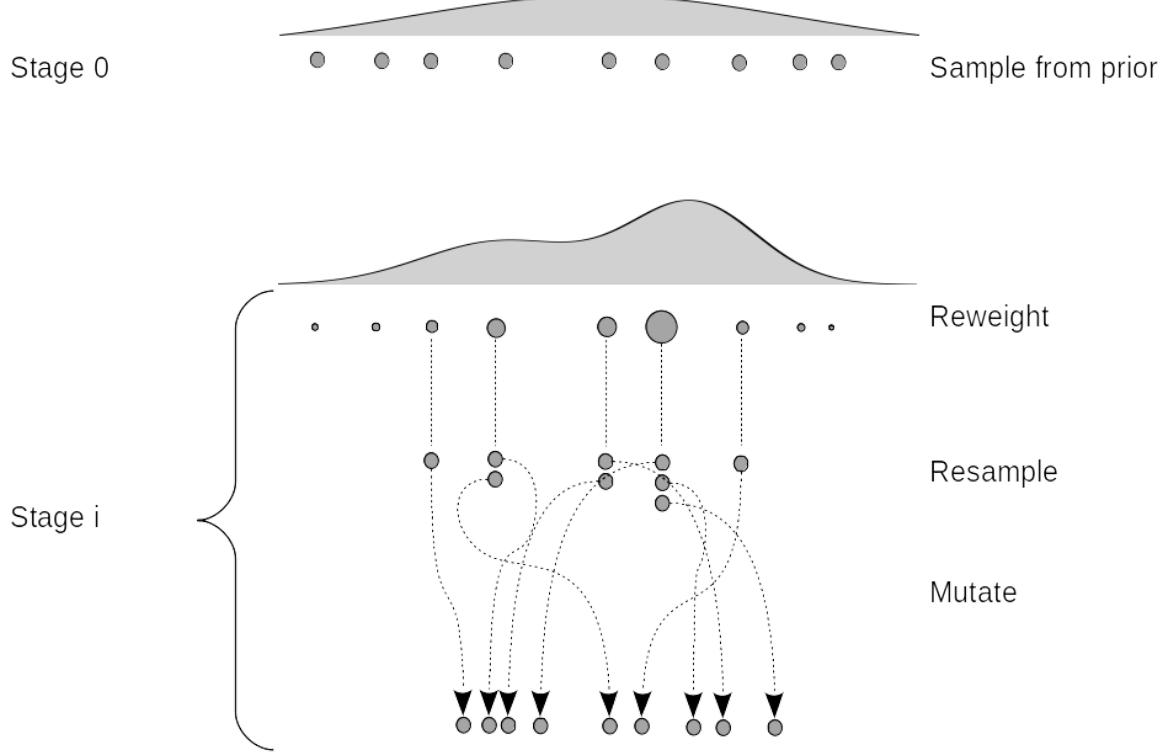

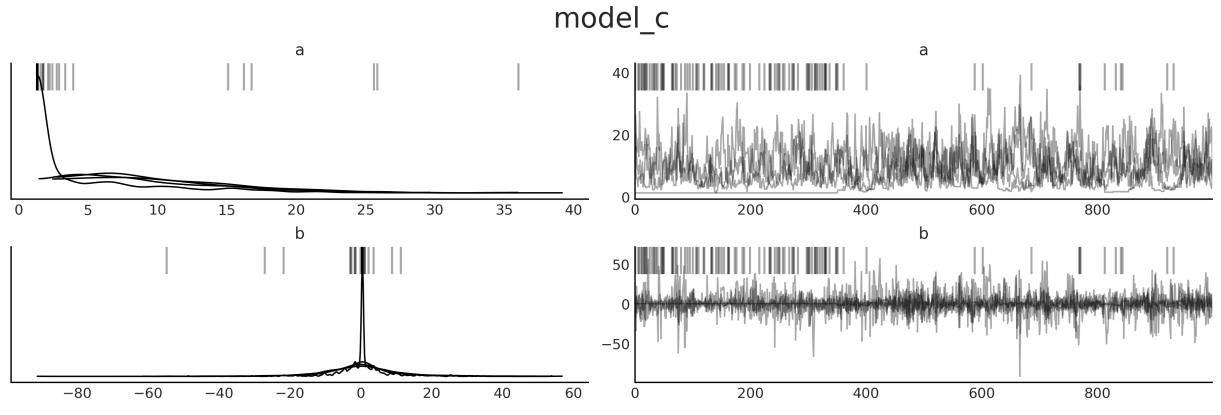

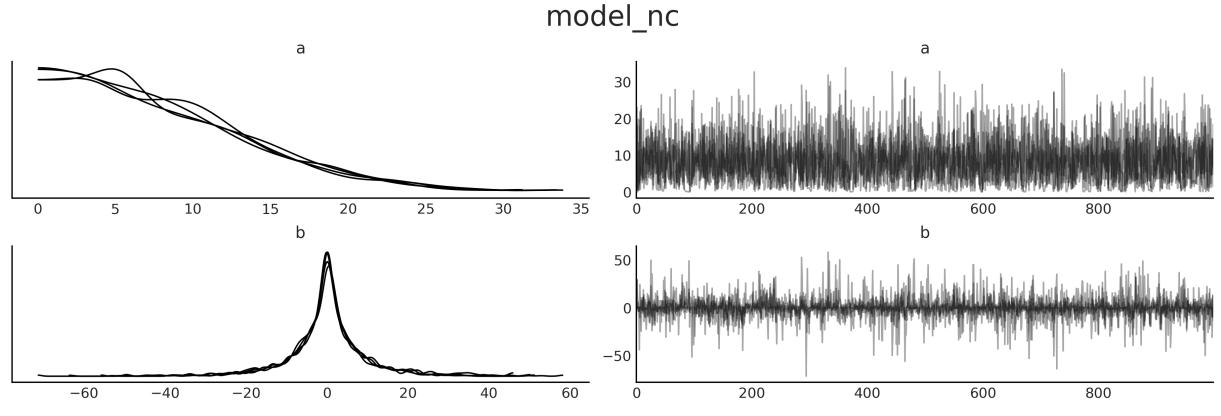

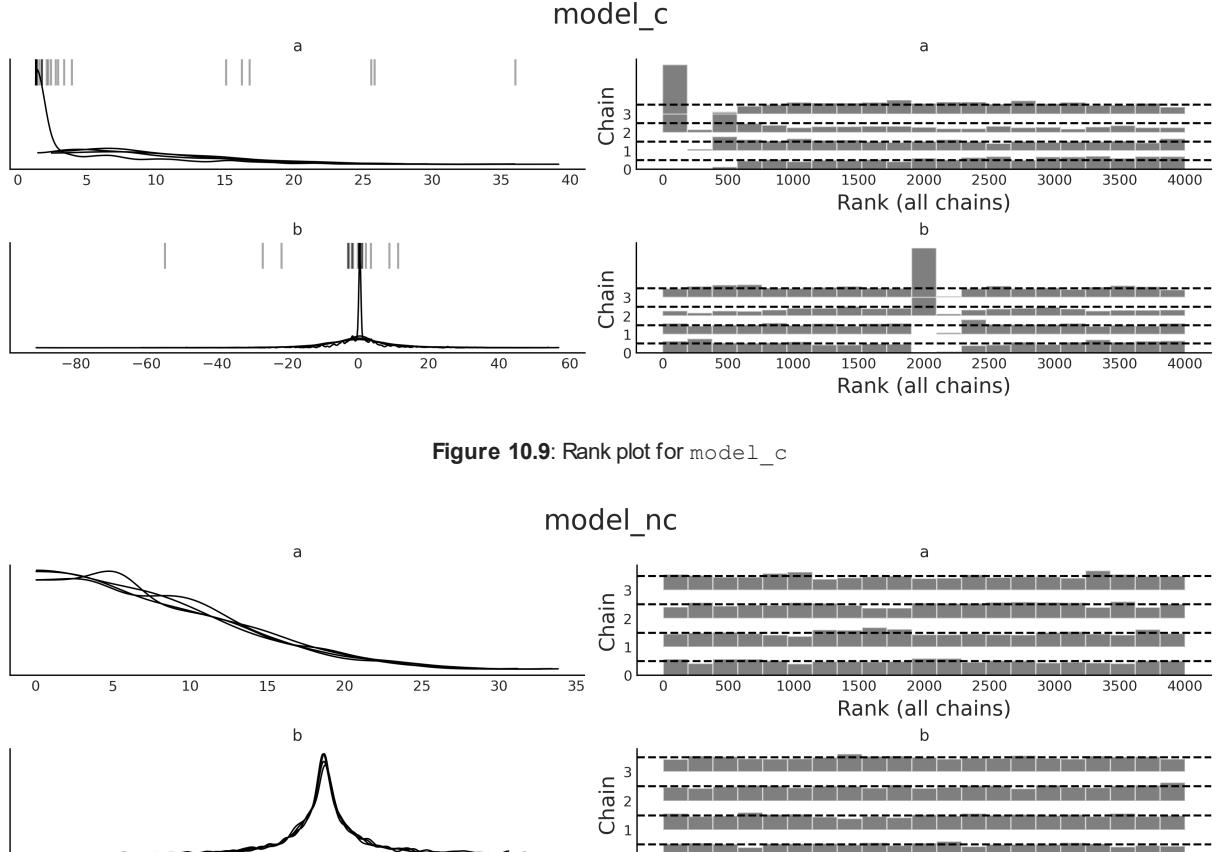

I like the Beta distribution and all the shapes we can get from it, but why are we using it for our model? There are many reasons to use a Beta distribution for this and other problems. One of them is that the Beta distribution is restricted to be between 0 and 1, in the same way our θ parameter is. In general, we use the Beta distribution when we want to model the proportions of a Binomial variable. Another reason is its versatility. As we can see in Figure 1.11, the distribution adopts several shapes (all restricted to the [0,1] interval), including a Uniform distribution, Gaussian-like distributions, and U-like distributions.
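
The three kinds of shapes mentioned above can be recognized from the density evaluated at a few points. In this sketch, the parameter values are picked by us to produce each shape, and they are not necessarily the ones used in Figure 1.11.

```python
from math import gamma

def beta_pdf(theta, alpha, beta):
    """Beta density evaluated at theta, for 0 < theta < 1."""
    norm_const = gamma(alpha + beta) / (gamma(alpha) * gamma(beta))
    return norm_const * theta ** (alpha - 1) * (1 - theta) ** (beta - 1)

points = [0.05, 0.25, 0.5, 0.75, 0.95]

# Illustrative parameters, one pair per shape
shapes = {
    "Uniform        Beta(1, 1)": (1, 1),
    "Gaussian-like  Beta(20, 20)": (20, 20),
    "U-like         Beta(0.5, 0.5)": (0.5, 0.5),
}

for label, (alpha, beta) in shapes.items():
    densities = [beta_pdf(t, alpha, beta) for t in points]
    print(label, [round(d, 3) for d in densities])
```

The first row is constant, the second peaks in the middle and vanishes near the edges, and the third is lowest in the middle and rises toward 0 and 1.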