Artificial Intelligence: A Modern Approach 4th Edition

III Knowledge, reasoning, and planning

Chapter 7 Logical Agents

In which we design agents that can form representations of a complex world, use a process of inference to derive new representations about the world, and use these new representations to deduce what to do.

Humans, it seems, know things; and what they know helps them do things. In AI, knowledge-based agents use a process of reasoning over an internal representation of knowledge to decide what actions to take.

The problem-solving agents of Chapters 3 and 4 know things, but only in a very limited, inflexible sense. They know what actions are available and what the result of performing a specific action from a specific state will be, but they don’t know general facts. A route-finding agent doesn’t know that it is impossible for a road to be a negative number of kilometers long. An 8-puzzle agent doesn’t know that two tiles cannot occupy the same space. The knowledge they have is very useful for finding a path from the start to a goal, but not for anything else.

The atomic representations used by problem-solving agents are also very limiting. In a partially observable environment, for example, a problem-solving agent’s only choice for representing what it knows about the current state is to list all possible concrete states. I could give a human the goal of driving to a U.S. town with population less than 10,000, but to say that to a problem-solving agent, I could formally describe the goal only as an explicit set of the 16,000 or so towns that satisfy the description.

Chapter 6 introduced our first factored representation, whereby states are represented as assignments of values to variables; this is a step in the right direction, enabling some parts of the agent to work in a domain-independent way and allowing for more efficient algorithms. In this chapter, we take this step to its logical conclusion, so to speak—we develop logic as a general class of representations to support knowledge-based agents. These agents can combine and recombine information to suit myriad purposes. This can be far removed from the needs of the moment—as when a mathematician proves a theorem or an astronomer calculates the Earth’s life expectancy. Knowledge-based agents can accept new tasks in the form of explicitly described goals; they can achieve competence quickly by being told or learning new knowledge about the environment; and they can adapt to changes in the environment by updating the relevant knowledge.

We begin in Section 7.1 with the overall agent design. Section 7.2 introduces a simple new environment, the wumpus world, and illustrates the operation of a knowledge-based agent without going into any technical detail. Then we explain the general principles of logic in Section 7.3 and the specifics of propositional logic in Section 7.4. Propositional logic is a factored representation; while less expressive than first-order logic (Chapter 8), which is the canonical structured representation, propositional logic illustrates all the basic concepts of logic. It also comes with well-developed inference technologies, which we describe in Sections 7.5 and 7.6. Finally, Section 7.7 combines the concept of knowledge-based agents with the technology of propositional logic to build some simple agents for the wumpus world.

7.1 Knowledge-Based Agents

The central component of a knowledge-based agent is its knowledge base, or KB. A knowledge base is a set of sentences. (Here “sentence” is used as a technical term. It is related but not identical to the sentences of English and other natural languages.) Each sentence is expressed in a language called a knowledge representation language and represents some assertion about the world. When the sentence is taken as being given without being derived from other sentences, we call it an axiom.

There must be a way to add new sentences to the knowledge base and a way to query what is known. The standard names for these operations are TELL and ASK, respectively. Both operations may involve inference—that is, deriving new sentences from old. Inference must obey the requirement that when one ASKs a question of the knowledge base, the answer should follow from what has been told (or TELLed) to the knowledge base previously. Later in this chapter, we will be more precise about the crucial word “follow.” For now, take it to mean that the inference process should not make things up as it goes along.

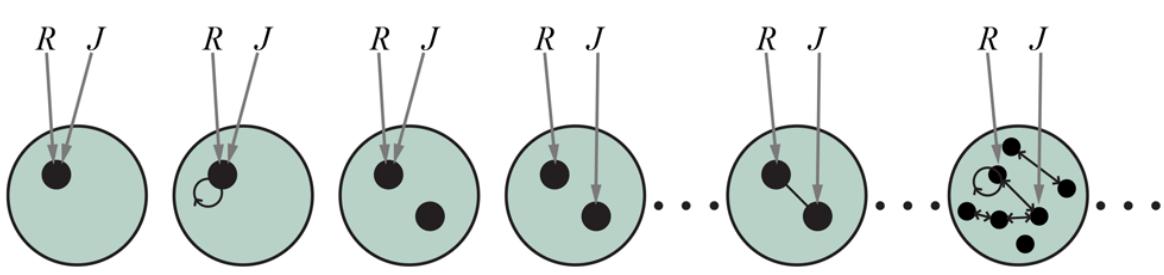

Figure 7.1 shows the outline of a knowledge-based agent program. Like all our agents, it takes a percept as input and returns an action. The agent maintains a knowledge base, KB, which may initially contain some background knowledge.

Figure 7.1

A generic knowledge-based agent. Given a percept, the agent adds the percept to its knowledge base, asks the knowledge base for the best action, and tells the knowledge base that it has in fact taken that action.

Each time the agent program is called, it does three things. First, it TELLs the knowledge base what it perceives. Second, it ASKs the knowledge base what action it should perform. In the process of answering this query, extensive reasoning may be done about the current state of the world, about the outcomes of possible action sequences, and so on. Third, the agent program TELLs the knowledge base which action was chosen, and returns the action so that it can be executed.

The details of the representation language are hidden inside three functions that implement the interface between the sensors and actuators on one side and the core representation and reasoning system on the other. MAKE-PERCEPT-SENTENCE constructs a sentence asserting that the agent perceived the given percept at the given time. MAKE-ACTION-QUERY constructs a sentence that asks what action should be done at the current time. Finally, MAKE-ACTION-SENTENCE constructs a sentence asserting that the chosen action was executed. The details of the inference mechanisms are hidden inside TELL and ASK. Later sections will reveal these details.
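The three-step loop of Figure 7.1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions: `KnowledgeBase`, `KBAgent`, and the three `make_*` helpers are hypothetical names mirroring TELL, ASK, MAKE-PERCEPT-SENTENCE, MAKE-ACTION-QUERY, and MAKE-ACTION-SENTENCE; sentences are plain tuples; and `ask` uses a trivial placeholder rule (grab if Glitter was perceived, otherwise move forward) where a real agent would run inference.

```python
from dataclasses import dataclass, field
from typing import Any, List, Tuple

Sentence = Tuple[Any, ...]  # hypothetical sentence encoding: a tagged tuple


@dataclass
class KnowledgeBase:
    """Hypothetical KB: stores told sentences; ask() is a placeholder, not inference."""
    sentences: List[Sentence] = field(default_factory=list)

    def tell(self, sentence: Sentence) -> None:
        self.sentences.append(sentence)

    def ask(self, query: Sentence) -> str:
        # Placeholder standing in for inference: grab if the percept
        # told at time t included Glitter, otherwise move Forward.
        _, t = query
        for s in reversed(self.sentences):
            if s[0] == "Percept" and s[2] == t:
                return "Grab" if "Glitter" in s[1] else "Forward"
        return "Forward"


def make_percept_sentence(percept, t) -> Sentence:
    return ("Percept", tuple(percept), t)


def make_action_query(t) -> Sentence:
    return ("ActionQuery", t)


def make_action_sentence(action, t) -> Sentence:
    return ("Action", action, t)


class KBAgent:
    def __init__(self, kb: KnowledgeBase):
        self.kb = kb  # may already contain background knowledge
        self.t = 0    # time counter, initially 0

    def __call__(self, percept) -> str:
        self.kb.tell(make_percept_sentence(percept, self.t))  # 1. TELL what it perceives
        action = self.kb.ask(make_action_query(self.t))       # 2. ASK what to do
        self.kb.tell(make_action_sentence(action, self.t))    # 3. TELL the chosen action
        self.t += 1
        return action
```

For example, `KBAgent(KnowledgeBase())` returns `"Forward"` for the percept `["None"] * 5`. Because the placeholder lives only in `ask`, swapping in a real inference procedure would change that one method, which is the point of hiding the representation behind TELL and ASK.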

The agent in Figure 7.1 appears quite similar to the agents with internal state described in Chapter 2. Because of the definitions of TELL and ASK, however, the knowledge-based agent is not an arbitrary program for calculating actions. It is amenable to a description at the knowledge level, where we need specify only what the agent knows and what its goals are, in order to determine its behavior.

For example, an automated taxi might have the goal of taking a passenger from San Francisco to Marin County and might know that the Golden Gate Bridge is the only link between the two locations. Then we can expect it to cross the Golden Gate Bridge because it knows that that will achieve its goal. Notice that this analysis is independent of how the taxi works at the implementation level. It doesn’t matter whether its geographical knowledge is implemented as linked lists or pixel maps, or whether it reasons by manipulating strings of symbols stored in registers or by propagating noisy signals in a network of neurons.

A knowledge-based agent can be built simply by TELLing it what it needs to know. Starting with an empty knowledge base, the agent designer can TELL sentences one by one until the agent knows how to operate in its environment. This is called the declarative approach to system building. In contrast, the procedural approach encodes desired behaviors directly as program code. In the 1970s and 1980s, advocates of the two approaches engaged in heated debates. We now understand that a successful agent often combines both declarative and procedural elements in its design, and that declarative knowledge can often be compiled into more efficient procedural code.

We can also provide a knowledge-based agent with mechanisms that allow it to learn for itself. These mechanisms, which are discussed in Chapter 19, create general knowledge about the environment from a series of percepts. A learning agent can be fully autonomous.

7.2 The Wumpus World

In this section we describe an environment in which knowledge-based agents can show their worth. The wumpus world is a cave consisting of rooms connected by passageways. Lurking somewhere in the cave is the terrible wumpus, a beast that eats anyone who enters its room. The wumpus can be shot by an agent, but the agent has only one arrow. Some rooms contain bottomless pits that will trap anyone who wanders into these rooms (except for the wumpus, which is too big to fall in). The only redeeming feature of this bleak environment is the possibility of finding a heap of gold. Although the wumpus world is rather tame by modern computer game standards, it illustrates some important points about intelligence.

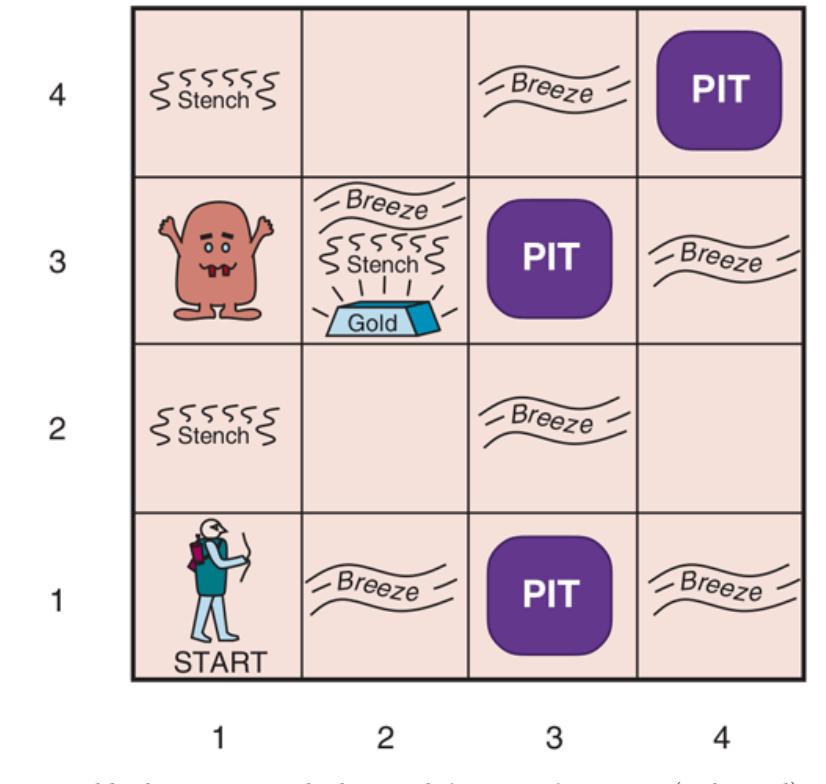

A sample wumpus world is shown in Figure 7.2. The precise definition of the task environment is given, as suggested in Section 2.3, by the PEAS description:

- PERFORMANCE MEASURE: +1000 for climbing out of the cave with the gold, −1000 for falling into a pit or being eaten by the wumpus, −1 for each action taken, and −10 for using up the arrow. The game ends either when the agent dies or when the agent climbs out of the cave.

- ENVIRONMENT: A 4×4 grid of rooms, with walls surrounding the grid. The agent always starts in the square labeled [1,1], facing to the east. The locations of the gold and the wumpus are chosen randomly, with a uniform distribution, from the squares other than the start square. In addition, each square other than the start can be a pit, with probability 0.2.

- ACTUATORS: The agent can move Forward, TurnLeft by 90°, or TurnRight by 90°. The agent dies a miserable death if it enters a square containing a pit or a live wumpus. (It is safe, albeit smelly, to enter a square with a dead wumpus.) If an agent tries to move forward and bumps into a wall, then the agent does not move. The action Grab can be used to pick up the gold if it is in the same square as the agent. The action Shoot can be used to fire an arrow in a straight line in the direction the agent is facing. The arrow continues until it either hits (and hence kills) the wumpus or hits a wall. The agent has only one arrow, so only the first Shoot action has any effect. Finally, the action Climb can be used to climb out of the cave, but only from square [1,1].

- SENSORS: The agent has five sensors, each of which gives a single bit of information:
  - In the squares directly (not diagonally) adjacent to the wumpus, the agent will perceive a Stench. 1
  - In the squares directly adjacent to a pit, the agent will perceive a Breeze.
  - In the square where the gold is, the agent will perceive a Glitter.
  - When an agent walks into a wall, it will perceive a Bump.
  - When the wumpus is killed, it emits a woeful Scream that can be perceived anywhere in the cave.

1 Presumably the square containing the wumpus also has a stench, but any agent entering that square is eaten before being able to perceive anything.

The percepts will be given to the agent program in the form of a list of five symbols; for example, if there is a stench and a breeze, but no glitter, bump, or scream, the agent program will get [Stench,Breeze,None,None,None].
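To make the sensor definitions concrete, here is a minimal sketch in Python that builds a random 4×4 world following the ENVIRONMENT rules (gold and wumpus placed uniformly among non-start squares, each non-start square a pit with probability 0.2) and computes the five-symbol percept list for a given square. The `World` record and the functions `neighbors`, `random_world`, and `percept` are hypothetical names; Bump and Scream depend on the agent's last action rather than on the grid, so they are passed in as flags.

```python
import random
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple

Square = Tuple[int, int]  # (x, y); [1,1] is the start square
N = 4  # grid size for the 4x4 world


@dataclass(frozen=True)
class World:
    """Hypothetical record of the hidden parts of a wumpus world."""
    wumpus: Square
    gold: Square
    pits: FrozenSet[Square]


def neighbors(sq: Square) -> List[Square]:
    """Squares directly (not diagonally) adjacent to sq, inside the grid."""
    x, y = sq
    candidates = [(x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)]
    return [(a, b) for a, b in candidates if 1 <= a <= N and 1 <= b <= N]


def random_world(rng: random.Random, p_pit: float = 0.2) -> World:
    """Gold and wumpus uniform over non-start squares; each non-start square is a pit w.p. p_pit."""
    others = [(x, y) for x in range(1, N + 1) for y in range(1, N + 1) if (x, y) != (1, 1)]
    pits = frozenset(sq for sq in others if rng.random() < p_pit)
    return World(wumpus=rng.choice(others), gold=rng.choice(others), pits=pits)


def percept(world: World, sq: Square, bumped: bool = False, screamed: bool = False) -> List[str]:
    """[Stench, Breeze, Glitter, Bump, Scream] as sensed by an agent standing in sq."""
    adjacent = neighbors(sq)
    return [
        "Stench" if world.wumpus in adjacent else "None",
        "Breeze" if any(p in world.pits for p in adjacent) else "None",
        "Glitter" if sq == world.gold else "None",
        "Bump" if bumped else "None",      # depends on the last action, not the grid
        "Scream" if screamed else "None",  # likewise
    ]
```

With a world consistent with the walkthrough later in this section (wumpus in [1,3], gold in [2,3], pits in [3,1] and [3,3]; an illustrative layout, not necessarily identical to Figure 7.2), `percept(w, (2, 3))` returns `["Stench", "Breeze", "Glitter", "None", "None"]`.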

Figure 7.2

We can characterize the wumpus environment along the various dimensions given in Chapter 2. Clearly, it is deterministic, discrete, static, and single-agent. (The wumpus doesn’t move, fortunately.) It is sequential, because rewards may come only after many actions are taken. It is partially observable, because some aspects of the state are not directly perceivable: the agent’s location, the wumpus’s state of health, and the availability of an arrow. As for the locations of the pits and the wumpus, we could treat them as unobserved parts of the state, in which case the transition model for the environment is completely known, and finding the locations of pits completes the agent’s knowledge of the state. Alternatively, we could say that the transition model itself is unknown because the agent doesn’t know which Forward actions are fatal, in which case discovering the locations of pits and wumpus completes the agent’s knowledge of the transition model.

For an agent in the environment, the main challenge is its initial ignorance of the configuration of the environment; overcoming this ignorance seems to require logical reasoning. In most instances of the wumpus world, it is possible for the agent to retrieve the gold safely. Occasionally, the agent must choose between going home empty-handed and risking death to find the gold. About 21% of the environments are utterly unfair, because the gold is in a pit or surrounded by pits.

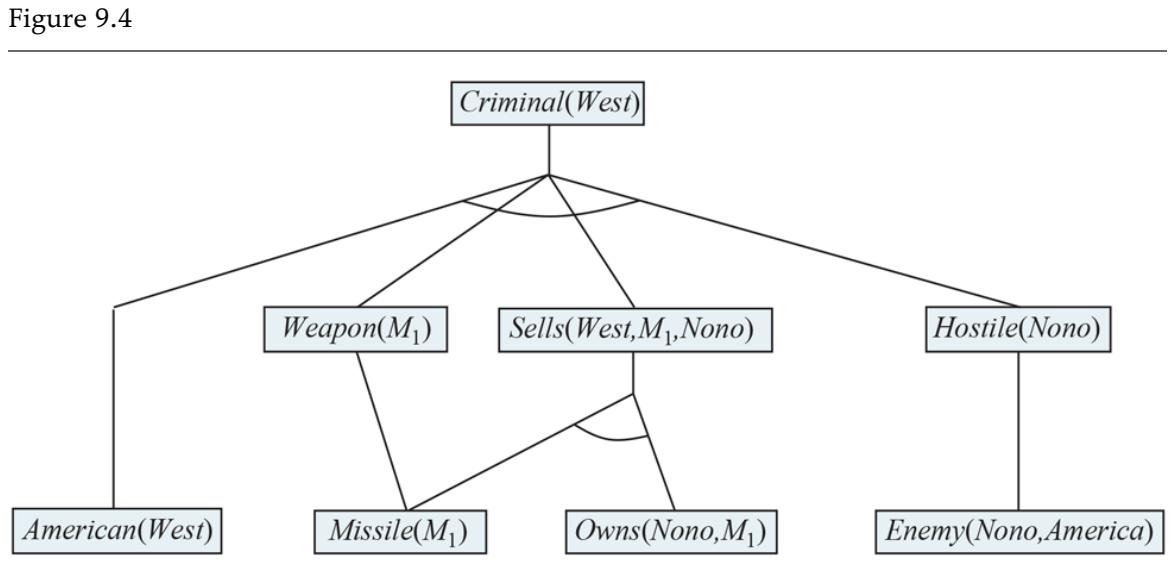

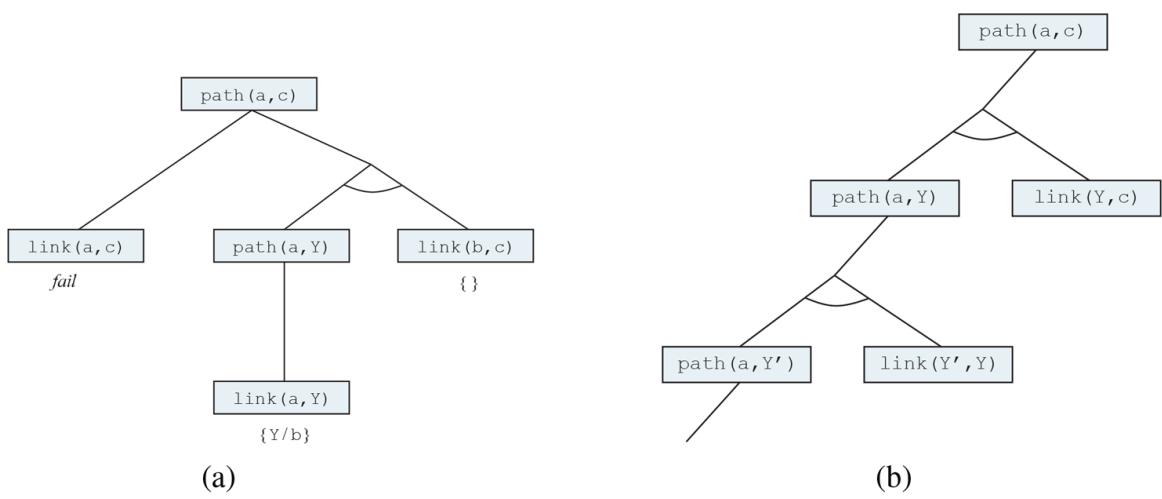

Let us watch a knowledge-based wumpus agent exploring the environment shown in Figure 7.2. We use an informal knowledge representation language consisting of writing down symbols in a grid (as in Figures 7.3 and 7.4).

(a)

| 1,4 | 2,4 | 3,4 | 4,4 |
|---|---|---|---|
| 1,3 | 2,3 | 3,3 | 4,3 |
| 1,2 OK | 2,2 | 3,2 | 4,2 |
| 1,1 A OK | 2,1 OK | 3,1 | 4,1 |

(b)

| 1,4 | 2,4 | 3,4 | 4,4 |
|---|---|---|---|
| 1,3 | 2,3 | 3,3 | 4,3 |
| 1,2 OK | 2,2 P? | 3,2 | 4,2 |
| 1,1 V OK | 2,1 A B OK | 3,1 P? | 4,1 |

Legend: A = Agent, B = Breeze, G = Glitter/Gold, OK = Safe square, P = Pit, S = Stench, V = Visited, W = Wumpus.

Figure 7.3

The first step taken by the agent in the wumpus world. (a) The initial situation, after percept [None,None,None,None,None]. (b) After moving to [2,1] and perceiving [None,Breeze,None,None,None].

Figure 7.4

(a)

| 1,4 | 2,4 | 3,4 | 4,4 |
|---|---|---|---|
| 1,3 W! | 2,3 | 3,3 | 4,3 |
| 1,2 A S OK | 2,2 OK | 3,2 | 4,2 |
| 1,1 V OK | 2,1 B V OK | 3,1 P! | 4,1 |

(b)

| 1,4 | 2,4 P? | 3,4 | 4,4 |
|---|---|---|---|
| 1,3 W! | 2,3 A S G B | 3,3 P? | 4,3 |
| 1,2 S V OK | 2,2 V OK | 3,2 | 4,2 |
| 1,1 V OK | 2,1 B V OK | 3,1 P! | 4,1 |

Legend: A = Agent, B = Breeze, G = Glitter/Gold, OK = Safe square, P = Pit, S = Stench, V = Visited, W = Wumpus.

Two later stages in the progress of the agent. (a) After moving to [1,1] and then [1,2], and perceiving [Stench,None,None,None,None]. (b) After moving to [2,2] and then [2,3], and perceiving [Stench,Breeze,Glitter,None,None].

The agent’s initial knowledge base contains the rules of the environment, as described previously; in particular, it knows that it is in [1,1] and that [1,1] is a safe square; we denote that with an “A” and “OK,” respectively, in square [1,1].

The first percept is [None,None,None,None,None], from which the agent can conclude that its neighboring squares, [1,2] and [2,1], are free of dangers—they are OK. Figure 7.3(a) shows the agent’s state of knowledge at this point.

A cautious agent will move only into a square that it knows to be OK. Let us suppose the agent decides to move forward to [2,1]. The agent perceives a breeze (denoted by “B”) in [2,1], so there must be a pit in a neighboring square. The pit cannot be in [1,1], by the rules of the game, so there must be a pit in [2,2] or [3,1] or both. The notation “P?” in Figure 7.3(b) indicates a possible pit in those squares. At this point, there is only one known square that is OK and that has not yet been visited. So the prudent agent will turn around, go back to [1,1], and then proceed to [1,2].

The agent perceives a stench in [1,2], resulting in the state of knowledge shown in Figure 7.4(a). The stench in [1,2] means that there must be a wumpus nearby. But the wumpus cannot be in [1,1], by the rules of the game, and it cannot be in [2,2] (or the agent would have detected a stench when it was in [2,1]). Therefore, the agent can infer that the wumpus is in [1,3]. The notation W! indicates this inference. Moreover, the lack of a breeze in [1,2] implies that there is no pit in [2,2]. Yet the agent has already inferred that there must be a pit in either [2,2] or [3,1], so this means it must be in [3,1]. This is a fairly difficult inference, because it combines knowledge gained at different times in different places and relies on the lack of a percept to make one crucial step.

The agent has now proved to itself that there is neither a pit nor a wumpus in [2,2], so it is OK to move there. We do not show the agent’s state of knowledge at [2,2]; we just assume that the agent turns and moves to [2,3], giving us Figure 7.4(b). In [2,3], the agent detects a glitter, so it should grab the gold and then return home.

Note that in each case for which the agent draws a conclusion from the available information, that conclusion is guaranteed to be correct if the available information is correct. This is a fundamental property of logical reasoning. In the rest of this chapter, we describe how to build logical agents that can represent information and draw conclusions such as those described in the preceding paragraphs.

7.3 Logic

This section summarizes the fundamental concepts of logical representation and reasoning. These beautiful ideas are independent of any of logic’s particular forms. We therefore postpone the technical details of those forms until the next section, using instead the familiar example of ordinary arithmetic.

In Section 7.1, we said that knowledge bases consist of sentences. These sentences are expressed according to the syntax of the representation language, which specifies all the sentences that are well formed. The notion of syntax is clear enough in ordinary arithmetic: “x + y = 4” is a well-formed sentence, whereas “x4y+ =” is not.

A logic must also define the semantics, or meaning, of sentences. The semantics defines the truth of each sentence with respect to each possible world. For example, the semantics for arithmetic specifies that the sentence “x + y = 4” is true in a world where x is 2 and y is 2, but false in a world where x is 1 and y is 1. In standard logics, every sentence must be either true or false in each possible world; there is no “in between.” 2

2 Fuzzy logic, discussed in Chapter 13, allows for degrees of truth.

When we need to be precise, we use the term model in place of “possible world.” Whereas possible worlds might be thought of as (potentially) real environments that the agent might or might not be in, models are mathematical abstractions, each of which has a fixed truth value (true or false) for every relevant sentence. Informally, we may think of a possible world as, for example, having x men and y women sitting at a table playing bridge, and the sentence x + y = 4 is true when there are four people in total. Formally, the possible models are just all possible assignments of nonnegative integers to the variables x and y. Each such assignment determines the truth of any sentence of arithmetic whose variables are x and y. If a sentence α is true in model m, we say that m satisfies α or sometimes m is a model of α. We use the notation M(α) to mean the set of all models of α.

Now that we have a notion of truth, we are ready to talk about logical reasoning. This involves the relation of logical entailment between sentences: the idea that a sentence follows logically from another sentence. In mathematical notation, we write

\[ \alpha \models \beta \]

to mean that the sentence α entails the sentence β. The formal definition of entailment is this: α ⊨ β if and only if, in every model in which α is true, β is also true. Using the notation just introduced, we can write

\[ \alpha \models \beta \text{ if and only if } M(\alpha) \subseteq M(\beta) \text{.} \]

(Note the direction of the ⊆ here: if α ⊨ β, then α is a stronger assertion than β: it rules out more possible worlds.) The relation of entailment is familiar from arithmetic; we are happy with the idea that the sentence x = 0 entails the sentence xy = 0. Obviously, in any model where x is zero, it is the case that xy is zero (regardless of the value of y).
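The arithmetic example can be checked mechanically on a finite slice of the model space. The sketch below assumes, purely for illustration, that x and y range over the integers 0 to 9 (the cutoff `DOMAIN` is arbitrary); it computes M(α) and M(β) as sets and tests M(α) ⊆ M(β). The helper names are hypothetical.

```python
from itertools import product

# Illustrative finite slice of the (infinite) model space: x, y in 0..9.
DOMAIN = range(10)
MODELS = [{"x": x, "y": y} for x, y in product(DOMAIN, repeat=2)]


def models_of(sentence):
    """M(sentence): the (x, y) pairs in the slice where the sentence is true."""
    return {(m["x"], m["y"]) for m in MODELS if sentence(m)}


def entails(alpha, beta):
    """alpha |= beta iff M(alpha) is a subset of M(beta), checked on the slice only."""
    return models_of(alpha) <= models_of(beta)


def x_is_zero(m):
    return m["x"] == 0


def xy_is_zero(m):
    return m["x"] * m["y"] == 0


print(entails(x_is_zero, xy_is_zero))  # True
print(entails(xy_is_zero, x_is_zero))  # False (x = 1, y = 0 is a counterexample)
print(len(models_of(x_is_zero)), len(models_of(xy_is_zero)))  # 10 19
```

The smaller model set for x = 0 (10 models versus 19) is the “stronger assertion” at work. A finite slice can only exhibit counterexamples; finding none on the slice does not by itself prove entailment over all integers.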

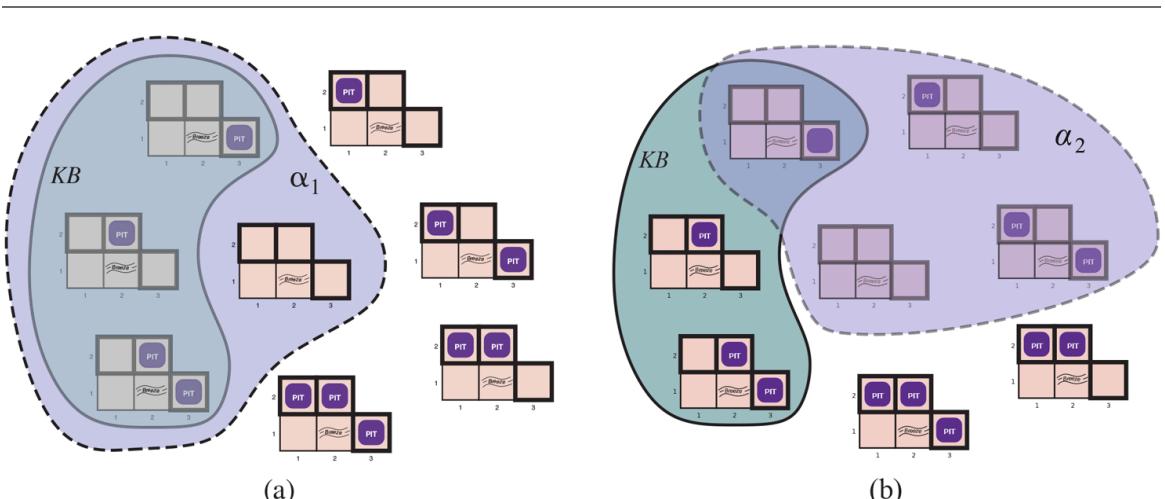

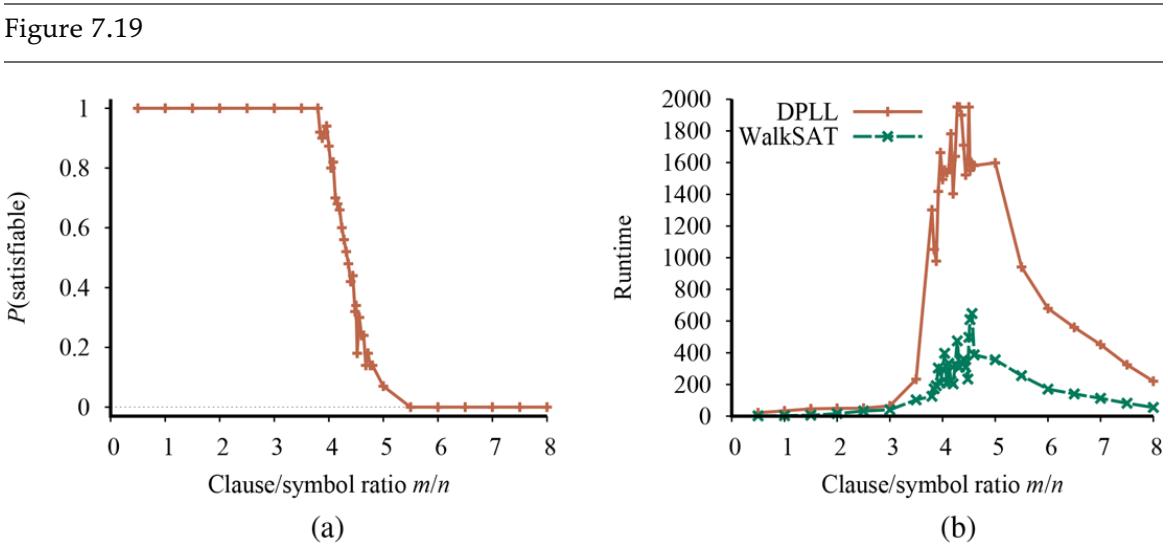

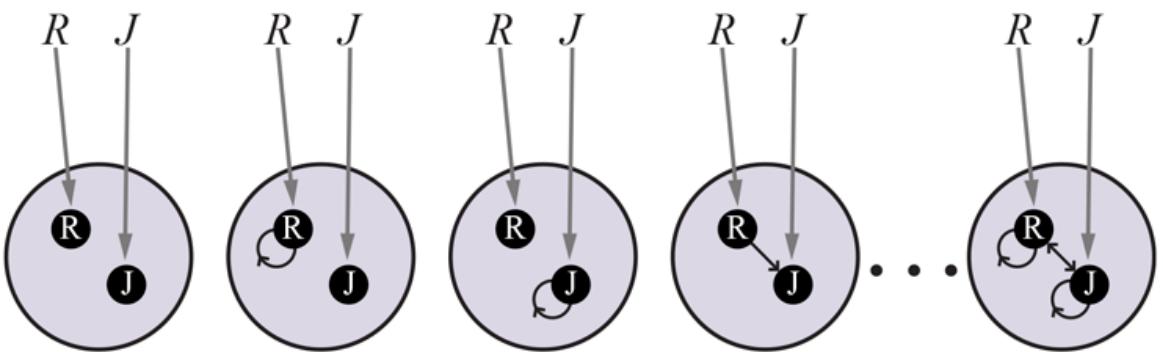

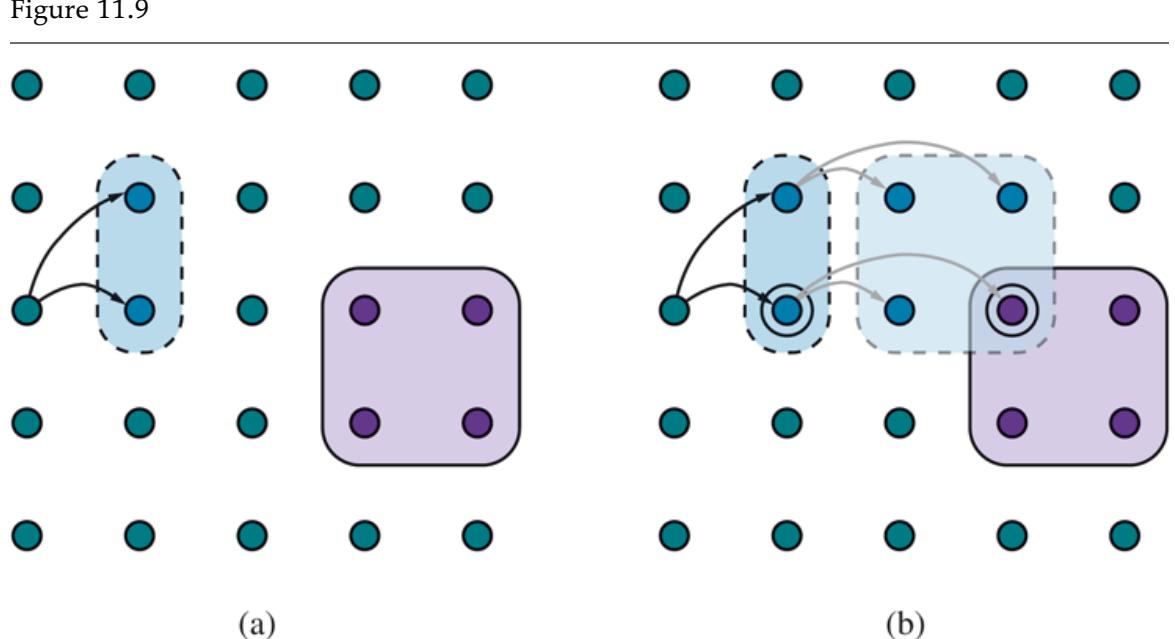

We can apply the same kind of analysis to the wumpus-world reasoning example given in the preceding section. Consider the situation in Figure 7.3(b): the agent has detected nothing in [1,1] and a breeze in [2,1]. These percepts, combined with the agent’s knowledge of the rules of the wumpus world, constitute the KB. The agent is interested in whether the adjacent squares [1,2], [2,2], and [3,1] contain pits. Each of the three squares might or might not contain a pit, so (ignoring other aspects of the world for now) there are 2³ = 8 possible models. These eight models are shown in Figure 7.5. 3

3 Although the figure shows the models as partial wumpus worlds, they are really nothing more than assignments of true and false to the sentences “there is a pit in [1,2]” etc. Models, in the mathematical sense, do not need to have ’orrible ’airy wumpuses in them.

Figure 7.5

Possible models for the presence of pits in squares [1,2], [2,2], and [3,1]. The KB corresponding to the observations of nothing in [1,1] and a breeze in [2,1] is shown by the solid line. (a) Dotted line shows models of α₁ (no pit in [1,2]). (b) Dotted line shows models of α₂ (no pit in [2,2]).

The KB can be thought of as a set of sentences or as a single sentence that asserts all the individual sentences. The KB is false in models that contradict what the agent knows; for example, the KB is false in any model in which [1,2] contains a pit, because there is no breeze in [1,1]. There are in fact just three models in which the KB is true, and these are shown surrounded by a solid line in Figure 7.5. Now let us consider two possible conclusions:

α₁ = “There is no pit in [1,2].”

α₂ = “There is no pit in [2,2].”

We have surrounded the models of α₁ and α₂ with dotted lines in Figures 7.5(a) and 7.5(b), respectively. By inspection, we see the following:

\[\text{in every model in which } KB \text{ is true, } \alpha\_1 \text{ is also true.}\]

Hence, KB ⊨ α₁: there is no pit in [1,2]. We can also see that

\[\text{in some models in which } KB \text{ is true, } \alpha\_2 \text{ is false.}\]

Hence, KB does not entail α₂: the agent cannot conclude that there is no pit in [2,2]. (Nor can it conclude that there is a pit in [2,2].) 4

4 The agent can calculate the probability that there is a pit in [2,2]; Chapter 12 shows how.

The preceding example not only illustrates entailment but also shows how the definition of entailment can be applied to derive conclusions, that is, to carry out logical inference. The inference algorithm illustrated in Figure 7.5 is called model checking, because it enumerates all possible models to check that α is true in all models in which KB is true, that is, that M(KB) ⊆ M(α).
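The model-checking procedure of Figure 7.5 can be reproduced in a few lines of Python. This is a minimal sketch, assuming the KB has already been reduced to the three pit symbols: the lack of a breeze in [1,1] rules out a pit in [1,2], and the breeze in [2,1] requires a pit in [2,2] or [3,1] (since [1,1] is pit-free by the rules). The symbol names `P12`, `P22`, `P31` and the encoding of sentences as Python predicates over a dictionary model are illustrative choices, not the book's representation; `alpha1` and `alpha2` stand for “no pit in [1,2]” and “no pit in [2,2].”

```python
from itertools import product

SYMBOLS = ("P12", "P22", "P31")  # "there is a pit in [1,2]", etc.


def all_models():
    """Enumerate all 2**3 = 8 assignments of true/false to the symbols."""
    for values in product([False, True], repeat=len(SYMBOLS)):
        yield dict(zip(SYMBOLS, values))


def kb(m):
    # No breeze in [1,1]: no pit in [1,2].
    # Breeze in [2,1]: a pit in [2,2] or [3,1] ([1,1] is pit-free by the rules).
    return (not m["P12"]) and (m["P22"] or m["P31"])


def alpha1(m):  # no pit in [1,2]
    return not m["P12"]


def alpha2(m):  # no pit in [2,2]
    return not m["P22"]


def entails(premises, alpha):
    """Model checking: alpha holds in every model in which the premises hold."""
    return all(alpha(m) for m in all_models() if premises(m))


kb_models = [m for m in all_models() if kb(m)]
print(len(kb_models))       # 3
print(entails(kb, alpha1))  # True
print(entails(kb, alpha2))  # False
```

The three surviving models match the solid-line region of Figure 7.5, and the model with a pit in [2,2] is what blocks the second entailment.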

In understanding entailment and inference, it might help to think of the set of all consequences of KB as a haystack and of α as a needle. Entailment is like the needle being in the haystack; inference is like finding it. This distinction is embodied in some formal notation: if an inference algorithm i can derive α from KB, we write

\[ KB \vdash\_i \alpha \text{,} \]

which is pronounced “α is derived from KB by i” or “i derives α from KB.”

An inference algorithm that derives only entailed sentences is called sound or truth-preserving. Soundness is a highly desirable property. An unsound inference procedure essentially makes things up as it goes along: it announces the discovery of nonexistent needles. It is easy to see that model checking, when it is applicable, is a sound procedure. 5

5 Model checking works if the space of models is finite, for example, in wumpus worlds of fixed size. For arithmetic, on the other hand, the space of models is infinite: even if we restrict ourselves to the integers, there are infinitely many pairs of values for x and y in the sentence x + y = 4.

The property of completeness is also desirable: an inference algorithm is complete if it can derive any sentence that is entailed. For real haystacks, which are finite in extent, it seems obvious that a systematic examination can always decide whether the needle is in the haystack. For many knowledge bases, however, the haystack of consequences is infinite, and completeness becomes an important issue. Fortunately, there are complete inference procedures for logics that are sufficiently expressive to handle many knowledge bases. 6

6 Compare with the case of infinite search spaces in Chapter 3, where depth-first search is not complete.

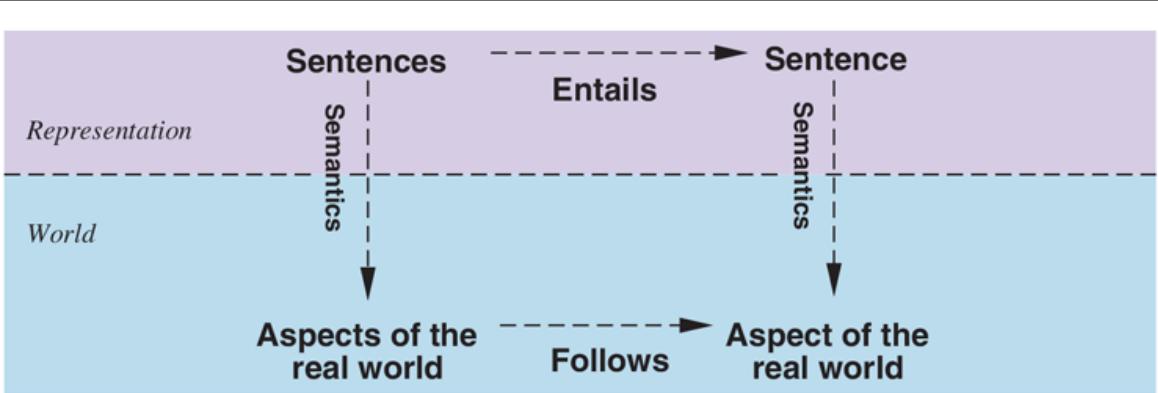

We have described a reasoning process whose conclusions are guaranteed to be true in any world in which the premises are true; in particular, if KB is true in the real world, then any sentence derived from KB by a sound inference procedure is also true in the real world. So, while an inference process operates on “syntax” (internal physical configurations such as bits in registers or patterns of electrical blips in brains), the process corresponds to the real-world relationship whereby some aspect of the real world is the case by virtue of other aspects of the real world being the case. This correspondence between world and representation is illustrated in Figure 7.6. 7

7 As Wittgenstein (1922) put it in his famous Tractatus: “The world is everything that is the case.”

Figure 7.6

Sentences are physical configurations of the agent, and reasoning is a process of constructing new physical configurations from old ones. Logical reasoning should ensure that the new configurations represent aspects of the world that actually follow from the aspects that the old configurations represent.

The final issue to consider is grounding: the connection between logical reasoning processes and the real environment in which the agent exists. In particular, how do we know that KB is true in the real world? (After all, KB is just “syntax” inside the agent’s head.) This is a philosophical question about which many, many books have been written. (See Chapter 27.) A simple answer is that the agent’s sensors create the connection. For example, our wumpus-world agent has a smell sensor. The agent program creates a suitable sentence whenever there is a smell. Then, whenever that sentence is in the knowledge base, it is true in the real world. Thus, the meaning and truth of percept sentences are defined by the processes of sensing and sentence construction that produce them. What about the rest of the agent’s knowledge, such as its belief that wumpuses cause smells in adjacent squares? This is not a direct representation of a single percept, but a general rule, derived perhaps from perceptual experience but not identical to a statement of that experience. General rules like this are produced by a sentence construction process called learning, which is the subject of Part V. Learning is fallible. It could be the case that wumpuses cause smells except on February 29 in leap years, which is when they take their baths. Thus, KB may not be true in the real world, but with good learning procedures, there is reason for optimism.

7.4 Propositional Logic: A Very Simple Logic

We now present propositional logic. We describe its syntax (the structure of sentences) and its semantics (the way in which the truth of sentences is determined). From these, we derive a simple, syntactic algorithm for logical inference that implements the semantic notion of entailment. Everything takes place, of course, in the wumpus world.

7.4.1 Syntax

The syntax of propositional logic defines the allowable sentences. The atomic sentences consist of a single proposition symbol. Each such symbol stands for a proposition that can be true or false. We use symbols that start with an uppercase letter and may contain other letters or subscripts, for example: P, Q, R, W₁,₃, and FacingEast. The names are arbitrary but are often chosen to have some mnemonic value; we use W₁,₃ to stand for the proposition that the wumpus is in [1,3]. (Remember that symbols such as W₁,₃ are atomic, i.e., W, 1, and 3 are not meaningful parts of the symbol.) There are two proposition symbols with fixed meanings: True is the always-true proposition and False is the always-false proposition. Complex sentences are constructed from simpler sentences, using parentheses and operators called logical connectives. There are five connectives in common use:

- ¬ (not). A sentence such as ¬W₁,₃ is called the negation of W₁,₃. A literal is either an atomic sentence (a positive literal) or a negated atomic sentence (a negative literal).

- ∧ (and). A sentence whose main connective is ∧, such as W₁,₃ ∧ P₃,₁, is called a conjunction; its parts are the conjuncts. (The ∧ looks like an “A” for “And.”)

- ∨ (or). A sentence whose main connective is ∨, such as (W₁,₃ ∧ P₃,₁) ∨ W₂,₂, is a disjunction; its parts are disjuncts, in this example (W₁,₃ ∧ P₃,₁) and W₂,₂.

- ⇒ (implies). A sentence such as (W₁,₃ ∧ P₃,₁) ⇒ ¬W₂,₂ is called an implication (or conditional). Its premise or antecedent is (W₁,₃ ∧ P₃,₁), and its conclusion or consequent is ¬W₂,₂. Implications are also known as rules or if–then statements. The implication symbol is sometimes written in other books as ⊃ or →.

- ⇔ (if and only if). The sentence W₁,₃ ⇔ ¬W₂,₂ is a biconditional.

Figure 7.7 gives a formal grammar of propositional logic. (BNF notation is explained on page 1030.) The BNF grammar is augmented with an operator precedence list to remove ambiguity when multiple operators are used. The “not” operator (¬) has the highest precedence, which means that in the sentence ¬A ∧ B the ¬ binds most tightly, giving us the equivalent of (¬A) ∧ B rather than ¬(A ∧ B). (The notation for ordinary arithmetic is the same: −2 + 4 is 2, not −6.) When appropriate, we also use parentheses and square brackets to clarify the intended sentence structure and improve readability.
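Python's boolean operators happen to follow the same relative precedence as ¬, ∧, and ∨ (`not` binds tighter than `and`, which binds tighter than `or`), so they offer a quick way to see what a precedence list does. The sketch below assumes the conventional ordering in which ∧ ranks above ∨; Python has no built-in operators for ⇒ or ⇔, so those are not covered, and `precedence_demo` is a hypothetical helper.

```python
def precedence_demo(a: bool, b: bool, c: bool) -> dict:
    """Compare implicit grouping with explicit parentheses."""
    return {
        # ¬A ∧ B groups as (¬A) ∧ B ...
        "not a and b": not a and b,
        "(not a) and b": (not a) and b,
        "not (a and b)": not (a and b),
        # ... and A ∨ B ∧ C groups as A ∨ (B ∧ C).
        "a or b and c": a or b and c,
        "a or (b and c)": a or (b and c),
        "(a or b) and c": (a or b) and c,
    }


for expr, value in precedence_demo(True, False, False).items():
    print(f"{expr:16} -> {value}")

print(-2 + 4)  # 2: unary minus binds tighter than +, just as ¬ binds tighter than ∧
```

With a = true, b = false, c = false, each unparenthesized form agrees with the first parenthesization and disagrees with the second, which is exactly what the precedence list is meant to guarantee.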

Figure 7.7

A BNF (Backus–Naur Form) grammar of sentences in propositional logic, along with operator precedences, from highest to lowest.

7.4.2 Semantics

Having specified the syntax of propositional logic, we now specify its semantics. The semantics defines the rules for determining the truth of a sentence with respect to a particular model. In propositional logic, a model simply sets the truth value (true or false) for every proposition symbol. For example, if the sentences in the knowledge base make use of the proposition symbols P₁,₂, P₂,₂, and P₃,₁, then one possible model is

\[m\_1 = \{P\_{1,2} = false, P\_{2,2} = false, P\_{3,1} = true\}.\]

With three proposition symbols, there are 2³ = 8 possible models, exactly those depicted in Figure 7.5. Notice, however, that the models are purely mathematical objects with no necessary connection to wumpus worlds. P₁,₂ is just a symbol; it might mean “there is a pit in [1,2]” or “I’m in Paris today and tomorrow.”

The semantics for propositional logic must specify how to compute the truth value of any sentence, given a model. This is done recursively. All sentences are constructed from atomic sentences and the five connectives; therefore, we need to specify how to compute the truth of atomic sentences and how to compute the truth of sentences formed with each of the five connectives. Atomic sentences are easy:

- True is true in every model and False is false in every model.

- The truth value of every other proposition symbol must be specified directly in the model. For example, in the model m₁ given earlier, P₁,₂ is false.

For complex sentences, we have five rules, which hold for any subsentences P and Q (atomic or complex) in any model m (here “iff” means “if and only if”):

- ¬P is true iff P is false in m.
- P ∧ Q is true iff both P and Q are true in m.
- P ∨ Q is true iff either P or Q is true in m.
- P ⇒ Q is true unless P is true and Q is false in m.
- P ⇔ Q is true iff P and Q are both true or both false in m.

The rules can also be expressed with truth tables that specify the truth value of a complex sentence for each possible assignment of truth values to its components. Truth tables for the five connectives are given in Figure 7.8. From these tables, the truth value of any sentence can be computed with respect to any model by a simple recursive evaluation. For example, the sentence ¬P₁,₂ ∧ (P₂,₂ ∨ P₃,₁), evaluated in m₁, gives true ∧ (false ∨ true) = true ∧ true = true. Exercise 7.TRUV asks you to write the algorithm PL-TRUE?(s, m), which computes the truth value of a propositional logic sentence s in a model m.
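The recursive evaluation can be sketched in Python as one possible answer in the spirit of PL-TRUE?; it is not the book's own code. It assumes a hypothetical encoding in which a proposition symbol is a string (with `"True"` and `"False"` reserved for the two constants) and a complex sentence is a tuple whose first element names the connective (`"not"`, `"and"`, `"or"`, `"implies"`, `"iff"`). The example evaluates ¬P₁,₂ ∧ (P₂,₂ ∨ P₃,₁) in the model m₁ defined above.

```python
from typing import Dict, Tuple, Union

Sentence = Union[str, Tuple]  # hypothetical encoding, see lead-in
Model = Dict[str, bool]


def pl_true(s: Sentence, model: Model) -> bool:
    """Truth value of sentence s in model, computed recursively from the five rules."""
    if isinstance(s, str):
        if s == "True":
            return True
        if s == "False":
            return False
        return model[s]  # every other symbol must be assigned by the model
    op, *args = s
    if op == "not":
        return not pl_true(args[0], model)
    if op == "and":
        return pl_true(args[0], model) and pl_true(args[1], model)
    if op == "or":
        return pl_true(args[0], model) or pl_true(args[1], model)
    if op == "implies":  # false only when the premise is true and the conclusion false
        return (not pl_true(args[0], model)) or pl_true(args[1], model)
    if op == "iff":      # true when both sides have the same truth value
        return pl_true(args[0], model) == pl_true(args[1], model)
    raise ValueError(f"unknown connective: {op!r}")


m1 = {"P12": False, "P22": False, "P31": True}
s = ("and", ("not", "P12"), ("or", "P22", "P31"))
print(pl_true(s, m1))  # True
```

Because `and`, `or`, and the `implies` branch short-circuit, a subsentence is skipped once its value cannot change the result; that does not affect the returned truth value.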

| P | Q | ¬P | P ∧ Q | P ∨ Q | P ⇒ Q | P ⇔ Q |
|---|---|---|---|---|---|---|
| false | false | true | false | false | true | true |
| false | true | true | false | true | true | false |
| true | false | false | false | true | false | false |
| true | true | false | true | true | true | true |

Truth tables for the five logical connectives. To use the table to compute, for example, the value of \(P \lor Q\) when \(P\) is true and \(Q\) is false, first look on the left for the row where \(P\) is true and \(Q\) is false (the third row). Then look in that row under the \(P \lor Q\) column to see the result: true.

The truth tables for “and,” “or,” and “not” are in close accord with our intuitions about the English words. The main point of possible confusion is that \(P \lor Q\) is true when \(P\) is true or \(Q\) is true or both. A different connective, called “exclusive or” (“xor” for short), yields false when both disjuncts are true. There is no consensus on the symbol for exclusive or; some choices are \(\dot{\lor}\), \(\neq\), or \(\oplus\).⁸

8 Latin uses two separate words: “vel” is inclusive or and “aut” is exclusive or.

The truth table for \(P \Rightarrow Q\) may not quite fit one’s intuitive understanding of “\(P\) implies \(Q\)” or “if \(P\) then \(Q\).” For one thing, propositional logic does not require any relation of causation or relevance between \(P\) and \(Q\). The sentence “5 is odd implies Tokyo is the capital of Japan” is a true sentence of propositional logic (under the normal interpretation), even though it is a decidedly odd sentence of English. Another point of confusion is that any implication is true whenever its antecedent is false. For example, “5 is even implies Sam is smart” is true, regardless of whether Sam is smart. This seems bizarre, but it makes sense if you think of “\(P \Rightarrow Q\)” as saying, “If \(P\) is true, then I am claiming that \(Q\) is true; otherwise I am making no claim.” The only way for this sentence to be false is if \(P\) is true but \(Q\) is false.

The biconditional, \(P \Leftrightarrow Q\), is true whenever both \(P \Rightarrow Q\) and \(Q \Rightarrow P\) are true. In English, this is often written as “\(P\) if and only if \(Q\).” Many of the rules of the wumpus world are best written using \(\Leftrightarrow\). For example, a square is breezy if a neighboring square has a pit, and a square is breezy only if a neighboring square has a pit. So we need a biconditional,

\[B\_{1,1} \Leftrightarrow (P\_{1,2} \lor P\_{2,1})\]

where \(B\_{1,1}\) means that there is a breeze in [1,1].

7.4.3 A simple knowledge base

Now that we have defined the semantics for propositional logic, we can construct a knowledge base for the wumpus world. We focus first on the immutable aspects of the wumpus world, leaving the mutable aspects for a later section. For now, we need the following symbols for each location \([x,y]\):

- \(P\_{x,y}\) is true if there is a pit in \([x,y]\).

- \(W\_{x,y}\) is true if there is a wumpus in \([x,y]\), dead or alive.

- \(B\_{x,y}\) is true if there is a breeze in \([x,y]\).

- \(S\_{x,y}\) is true if there is a stench in \([x,y]\).

- \(L\_{x,y}\) is true if the agent is in location \([x,y]\).

The sentences we write will suffice to derive \(\neg P\_{1,2}\) (there is no pit in [1,2]), as was done informally in Section 7.3. We label each sentence \(R\_i\) so that we can refer to them:

There is no pit in [1,1]:

\[R\_1: \quad \neg P\_{1,1} .\]

A square is breezy if and only if there is a pit in a neighboring square. This has to be stated for each square; for now, we include just the relevant squares:

\[\begin{aligned} R\_2: &\quad B\_{1,1} \Leftrightarrow \left(P\_{1,2} \vee P\_{2,1}\right). \\ R\_3: &\quad B\_{2,1} \Leftrightarrow \left(P\_{1,1} \vee P\_{2,2} \vee P\_{3,1}\right). \end{aligned}\]

The preceding sentences are true in all wumpus worlds. Now we include the breeze percepts for the first two squares visited in the specific world the agent is in, leading up to the situation in Figure 7.3(b):

\[\begin{aligned} R\_4: &\quad \neg B\_{1,1}. \\ R\_5: &\quad B\_{2,1}. \end{aligned}\]

7.4.4 A simple inference procedure

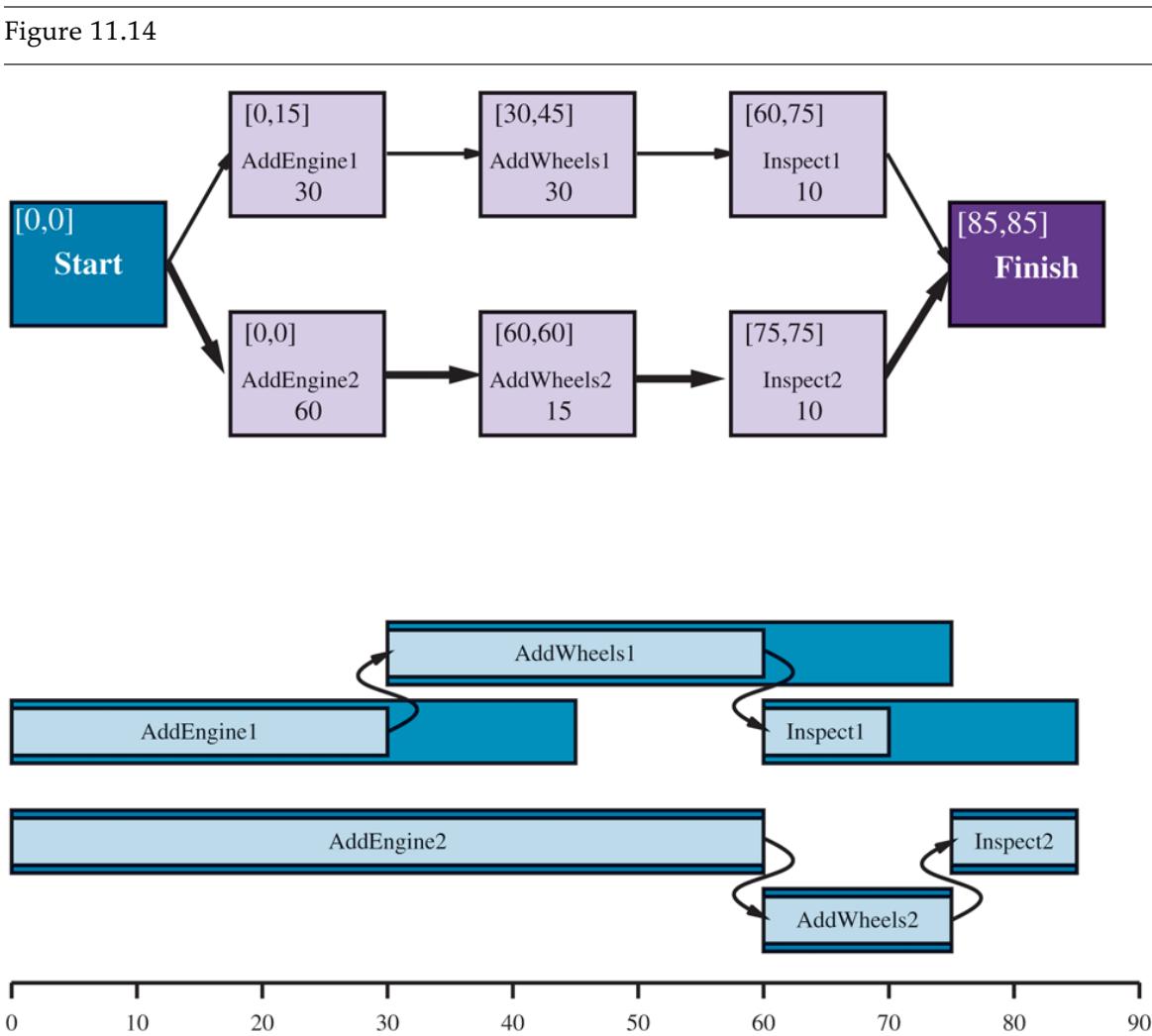

Our goal now is to decide whether \(KB \models \alpha\) for some sentence \(\alpha\). For example, is \(\neg P\_{1,2}\) entailed by our KB? Our first algorithm for inference is a model-checking approach that is a direct implementation of the definition of entailment: enumerate the models, and check that \(\alpha\) is true in every model in which KB is true. Models are assignments of true or false to every proposition symbol. Returning to our wumpus-world example, the relevant proposition symbols are \(B\_{1,1}\), \(B\_{2,1}\), \(P\_{1,1}\), \(P\_{1,2}\), \(P\_{2,1}\), \(P\_{2,2}\), and \(P\_{3,1}\). With seven symbols, there are \(2^7 = 128\) possible models; in three of these, KB is true (Figure 7.9). In those three models, \(\neg P\_{1,2}\) is true, hence there is no pit in [1,2]. On the other hand, \(P\_{2,2}\) is true in two of the three models and false in one, so we cannot yet tell whether there is a pit in [2,2].

Figure 7.9

| B1,1 | B2,1 | P1,1 | P1,2 | P2,1 | P2,2 | P3,1 | R1 | R2 | R3 | R4 | R5 | KB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| false | false | false | false | false | false | false | true | true | true | true | false | false |
| false | false | false | false | false | false | true | true | true | false | true | false | false |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| false | true | false | false | false | false | false | true | true | false | true | true | false |
| false | true | false | false | false | false | true | true | true | true | true | true | true |
| false | true | false | false | false | true | false | true | true | true | true | true | true |
| false | true | false | false | false | true | true | true | true | true | true | true | true |
| false | true | false | false | true | false | false | true | false | false | true | true | false |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| true | true | true | true | true | true | true | false | true | true | false | true | false |

A truth table constructed for the knowledge base given in the text. KB is true if \(R\_1\) through \(R\_5\) are true, which occurs in just 3 of the 128 rows (the rows with true in the right-hand KB column). In all 3 rows, \(P\_{1,2}\) is false, so there is no pit in [1,2]. On the other hand, there might (or might not) be a pit in [2,2].
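The 128-row enumeration summarized in Figure 7.9 is small enough to reproduce by brute force. The sketch below assumes the symbol names `B11`, `P12`, and so on, and writes \(R\_1\) through \(R\_5\) directly as Python boolean expressions rather than going through a general sentence evaluator.

```python
from itertools import product

SYMBOLS = ["B11", "B21", "P11", "P12", "P21", "P22", "P31"]


def kb_true(m):
    """R1 through R5 from the text, written as Python boolean expressions over model m."""
    r1 = not m["P11"]
    r2 = m["B11"] == (m["P12"] or m["P21"])
    r3 = m["B21"] == (m["P11"] or m["P22"] or m["P31"])
    r4 = not m["B11"]
    r5 = m["B21"]
    return r1 and r2 and r3 and r4 and r5


# Enumerate all 2**7 = 128 models, in the same row order as Figure 7.9
# (first symbol varies slowest, last symbol fastest).
models = [dict(zip(SYMBOLS, values)) for values in product([False, True], repeat=len(SYMBOLS))]
kb_models = [m for m in models if kb_true(m)]

print(len(models), len(kb_models))            # 128 3
print(all(not m["P12"] for m in kb_models))   # True: no pit in [1,2]
print(sorted(m["P22"] for m in kb_models))    # [False, True, True]: [2,2] undecided
```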

Figure 7.9 reproduces in a more precise form the reasoning illustrated in Figure 7.5. A general algorithm for deciding entailment in propositional logic is shown in Figure 7.10. Like the BACKTRACKING-SEARCH algorithm on page 192, TT-ENTAILS? performs a recursive enumeration of a finite space of assignments to symbols. The algorithm is sound because it implements directly the definition of entailment, and complete because it works for any KB and \(\alpha\) and always terminates: there are only finitely many models to examine.

A truth-table enumeration algorithm for deciding propositional entailment. (TT stands for truth table.) PL-TRUE? returns true if a sentence holds within a model. The variable model represents a partial model, that is, an assignment to some of the symbols. The keyword and here is an infix function symbol in the pseudocode programming language, not an operator in propositional logic; it takes two arguments and returns true or false.
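Since the pseudocode of Figure 7.10 is not reproduced here, the following is a minimal Python sketch of the same recursive, depth-first enumeration. The nested-tuple sentence encoding and the function names `tt_entails`, `tt_check_all`, and `symbols_in` are assumptions for this illustration; it is not a transcription of the figure.

```python
from functools import reduce

# Hypothetical encoding: symbols are strings; compound sentences are tuples
# ("not", s), ("and", a, b), ("or", a, b), ("implies", a, b), ("iff", a, b).


def pl_true(s, model):
    """Truth value of sentence s in a complete model (dict symbol -> bool)."""
    if isinstance(s, str):
        return model[s]
    op, *args = s
    vals = [pl_true(a, model) for a in args]
    if op == "not":
        return not vals[0]
    if op == "and":
        return vals[0] and vals[1]
    if op == "or":
        return vals[0] or vals[1]
    if op == "implies":
        return (not vals[0]) or vals[1]
    if op == "iff":
        return vals[0] == vals[1]
    raise ValueError(f"unknown connective: {op!r}")


def symbols_in(s):
    """Set of proposition symbols occurring in sentence s."""
    if isinstance(s, str):
        return {s}
    return set().union(*(symbols_in(a) for a in s[1:]))


def tt_entails(kb, alpha):
    """True iff alpha holds in every model of kb (truth-table enumeration)."""
    symbols = sorted(symbols_in(kb) | symbols_in(alpha))
    return tt_check_all(kb, alpha, symbols, {})


def tt_check_all(kb, alpha, symbols, model):
    if not symbols:                  # the model is complete
        if pl_true(kb, model):
            return pl_true(alpha, model)
        return True                  # KB is false here, so this model cannot refute entailment
    first, rest = symbols[0], symbols[1:]
    # Depth-first: extend the partial model with first = True, then with first = False.
    return (tt_check_all(kb, alpha, rest, {**model, first: True})
            and tt_check_all(kb, alpha, rest, {**model, first: False}))


# R1 through R5 from the text, conjoined into one KB sentence.
R = [
    ("not", "P11"),
    ("iff", "B11", ("or", "P12", "P21")),
    ("iff", "B21", ("or", "P11", ("or", "P22", "P31"))),
    ("not", "B11"),
    "B21",
]
KB = reduce(lambda a, b: ("and", a, b), R)

print(tt_entails(KB, ("not", "P12")))  # True: no pit in [1,2]
print(tt_entails(KB, "P22"))           # False
print(tt_entails(KB, ("not", "P22")))  # False: cannot yet tell
```

Only the current path of partial assignments is kept on the call stack, which is why the space cost stays linear in the number of symbols while the time cost is exponential.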

Of course, “finitely many” is not always the same as “few.” If KB and \(\alpha\) contain \(n\) symbols in all, then there are \(2^n\) models. Thus, the time complexity of the algorithm is \(O(2^n)\). (The space complexity is only \(O(n)\) because the enumeration is depth-first.) Later in this chapter we show algorithms that are much more efficient in many cases. Unfortunately, propositional entailment is co-NP-complete (i.e., probably no easier than NP-complete; see Appendix A), so every known inference algorithm for propositional logic has a worst-case complexity that is exponential in the size of the input.

7.5 Propositional Theorem Proving

So far, we have shown how to determine entailment by model checking: enumerating models and showing that the sentence must hold in all models. In this section, we show how entailment can be done by theorem proving—applying rules of inference directly to the sentences in our knowledge base to construct a proof of the desired sentence without consulting models. If the number of models is large but the length of the proof is short, then theorem proving can be more efficient than model checking.

Before we plunge into the details of theorem-proving algorithms, we will need some additional concepts related to entailment. The first concept is logical equivalence: two sentences \(\alpha\) and \(\beta\) are logically equivalent if they are true in the same set of models. We write this as \(\alpha \equiv \beta\). (Note that \(\equiv\) is used to make claims about sentences, while \(\Leftrightarrow\) is used as part of a sentence.) For example, we can easily show (using truth tables) that \(P \land Q\) and \(Q \land P\) are logically equivalent; other equivalences are shown in Figure 7.11. These equivalences play much the same role in logic as arithmetic identities do in ordinary mathematics. An alternative definition of equivalence is as follows: any two sentences \(\alpha\) and \(\beta\) are equivalent if and only if each of them entails the other:

\[ \alpha \equiv \beta \quad \text{if and only if} \quad \alpha \models \beta \text{ and } \beta \models \alpha \text{ .} \]

Figure 7.11

\[\begin{array}{rcll}
(\alpha \land \beta) & \equiv & (\beta \land \alpha) & \text{commutativity of } \land \\
(\alpha \lor \beta) & \equiv & (\beta \lor \alpha) & \text{commutativity of } \lor \\
((\alpha \land \beta) \land \gamma) & \equiv & (\alpha \land (\beta \land \gamma)) & \text{associativity of } \land \\
((\alpha \lor \beta) \lor \gamma) & \equiv & (\alpha \lor (\beta \lor \gamma)) & \text{associativity of } \lor \\
\neg(\neg \alpha) & \equiv & \alpha & \text{double-negation elimination} \\
(\alpha \Rightarrow \beta) & \equiv & (\neg \beta \Rightarrow \neg \alpha) & \text{contraposition} \\
(\alpha \Rightarrow \beta) & \equiv & (\neg \alpha \lor \beta) & \text{implication elimination} \\
(\alpha \Leftrightarrow \beta) & \equiv & ((\alpha \Rightarrow \beta) \land (\beta \Rightarrow \alpha)) & \text{biconditional elimination} \\
\neg(\alpha \land \beta) & \equiv & (\neg \alpha \lor \neg \beta) & \text{De Morgan} \\
\neg(\alpha \lor \beta) & \equiv & (\neg \alpha \land \neg \beta) & \text{De Morgan} \\
(\alpha \land (\beta \lor \gamma)) & \equiv & ((\alpha \land \beta) \lor (\alpha \land \gamma)) & \text{distributivity of } \land \text{ over } \lor \\
(\alpha \lor (\beta \land \gamma)) & \equiv & ((\alpha \lor \beta) \land (\alpha \lor \gamma)) & \text{distributivity of } \lor \text{ over } \land
\end{array}\]

Standard logical equivalences. The symbols \(\alpha\), \(\beta\), and \(\gamma\) stand for arbitrary sentences of propositional logic.
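As the text notes, each equivalence can be confirmed with truth tables. A minimal sketch, assuming we model \(\alpha\), \(\beta\), \(\gamma\) as Python booleans and each side of an equivalence as a function of them; the names `EQUIVALENCES`, `implies`, and `equivalent` are illustrative, not from the book.

```python
from itertools import product


def implies(p, q):
    """Truth-functional implication: false only when p is true and q is false."""
    return (not p) or q


# One entry per row of Figure 7.11: (left-hand side, right-hand side),
# each written as a Python function of the truth values of alpha, beta, gamma.
EQUIVALENCES = {
    "commutativity of and": (lambda a, b, c: a and b, lambda a, b, c: b and a),
    "commutativity of or": (lambda a, b, c: a or b, lambda a, b, c: b or a),
    "associativity of and": (lambda a, b, c: (a and b) and c, lambda a, b, c: a and (b and c)),
    "associativity of or": (lambda a, b, c: (a or b) or c, lambda a, b, c: a or (b or c)),
    "double-negation elimination": (lambda a, b, c: not (not a), lambda a, b, c: a),
    "contraposition": (lambda a, b, c: implies(a, b), lambda a, b, c: implies(not b, not a)),
    "implication elimination": (lambda a, b, c: implies(a, b), lambda a, b, c: (not a) or b),
    "biconditional elimination": (lambda a, b, c: a == b,
                                  lambda a, b, c: implies(a, b) and implies(b, a)),
    "De Morgan (and)": (lambda a, b, c: not (a and b), lambda a, b, c: (not a) or (not b)),
    "De Morgan (or)": (lambda a, b, c: not (a or b), lambda a, b, c: (not a) and (not b)),
    "distributivity of and over or": (lambda a, b, c: a and (b or c),
                                      lambda a, b, c: (a and b) or (a and c)),
    "distributivity of or over and": (lambda a, b, c: a or (b and c),
                                      lambda a, b, c: (a or b) and (a or c)),
}


def equivalent(lhs, rhs):
    """Equivalent iff the two sides agree under all 2**3 assignments to alpha, beta, gamma."""
    return all(lhs(*v) == rhs(*v) for v in product([False, True], repeat=3))


for name, (lhs, rhs) in EQUIVALENCES.items():
    print(f"{name}: {equivalent(lhs, rhs)}")   # every line prints True

# For contrast, an implication is NOT equivalent to its converse.
print(equivalent(lambda a, b, c: implies(a, b), lambda a, b, c: implies(b, a)))  # False
```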

The second concept we will need is validity. A sentence is valid if it is true in all models. For example, the sentence \(P \lor \neg P\) is valid. Valid sentences are also known as tautologies: they are necessarily true. Because the sentence True is true in all models, every valid sentence is logically equivalent to True. What good are valid sentences? From our definition of entailment, we can derive the deduction theorem, which was known to the ancient Greeks:

For any sentences \(\alpha\) and \(\beta\), \(\alpha \models \beta\) if and only if the sentence \((\alpha \Rightarrow \beta)\) is valid.

(Exercise 7.DEDU asks for a proof.) Hence, we can decide if \(\alpha \models \beta\) by checking that \((\alpha \Rightarrow \beta)\) is true in every model, which is essentially what the inference algorithm in Figure 7.10 does, or by proving that \((\alpha \Rightarrow \beta)\) is equivalent to True. Conversely, the deduction theorem states that every valid implication sentence describes a legitimate inference.

The final concept we will need is satisfiability. A sentence is satisfiable if it is true in, or satisfied by, some model. For example, the knowledge base given earlier, \((R\_1 \land R\_2 \land R\_3 \land R\_4 \land R\_5)\), is satisfiable because there are three models in which it is true, as shown in Figure 7.9. Satisfiability can be checked by enumerating the possible models until one is found that satisfies the sentence. The problem of determining the satisfiability of sentences in propositional logic (the SAT problem) was the first problem proved to be NP-complete. Many problems in computer science are really satisfiability problems. For example, all the constraint satisfaction problems in Chapter 6 ask whether the constraints are satisfiable by some assignment.

Validity and satisfiability are of course connected: \(\alpha\) is valid iff \(\neg\alpha\) is unsatisfiable; contrapositively, \(\alpha\) is satisfiable iff \(\neg\alpha\) is not valid. We also have the following useful result:

\(\alpha \models \beta\) if and only if the sentence \((\alpha \land \neg\beta)\) is unsatisfiable.

Proving \(\beta\) from \(\alpha\) by checking the unsatisfiability of \((\alpha \land \neg\beta)\) corresponds exactly to the standard mathematical proof technique of reductio ad absurdum (literally, “reduction to an absurd thing”). It is also called proof by refutation or proof by contradiction. One assumes a sentence \(\beta\) to be false and shows that this leads to a contradiction with known axioms \(\alpha\). This contradiction is exactly what is meant by saying that the sentence \((\alpha \land \neg\beta)\) is unsatisfiable.
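The three concepts and the two entailment criteria above can be tied together in a brute-force sketch. Here, as a simplifying assumption, a "sentence" is any Python function from a model (a dict of symbol truth values) to a boolean; the helper names are hypothetical. The example uses \(R\_2 \land R\_4\) and the query \(\neg P\_{1,2}\).

```python
from itertools import product

# In this sketch a sentence is any Python function from a model (dict symbol -> bool) to bool.


def all_models(symbols):
    """Yield every assignment of True/False to the given symbols."""
    for values in product([False, True], repeat=len(symbols)):
        yield dict(zip(symbols, values))


def valid(sentence, symbols):
    """True iff the sentence holds in every model (a tautology)."""
    return all(sentence(m) for m in all_models(symbols))


def satisfiable(sentence, symbols):
    """True iff at least one model satisfies the sentence."""
    return any(sentence(m) for m in all_models(symbols))


def entails_by_refutation(alpha, beta, symbols):
    """alpha |= beta iff (alpha and not beta) is unsatisfiable (reductio ad absurdum)."""
    return not satisfiable(lambda m: alpha(m) and not beta(m), symbols)


def entails_by_deduction(alpha, beta, symbols):
    """alpha |= beta iff (alpha => beta) is valid (deduction theorem)."""
    return valid(lambda m: (not alpha(m)) or beta(m), symbols)


def kb(m):            # R2 and R4 from the text
    return (m["B11"] == (m["P12"] or m["P21"])) and not m["B11"]


def no_pit_12(m):     # the query: not P12
    return not m["P12"]


symbols = ["B11", "P12", "P21"]
print(valid(lambda m: m["P12"] or not m["P12"], symbols))   # True
print(satisfiable(kb, symbols))                              # True
print(entails_by_refutation(kb, no_pit_12, symbols))         # True
print(entails_by_deduction(kb, no_pit_12, symbols))          # True
```

The two entailment functions always agree, which is exactly what the deduction theorem and the refutation result together predict.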

7.5.1 Inference and proofs

This section covers inference rules that can be applied to derive a proof, a chain of conclusions that leads to the desired goal. The best-known rule is called Modus Ponens (Latin for “mode that affirms”) and is written

\[\frac{\alpha \Rightarrow \beta, \quad \alpha}{\beta}\]

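The notation means that, whenever any sentences of the form \(\alpha \Rightarrow \beta\) and \(\alpha\) are given, then the sentence \(\beta\) can be inferred. For example, if \((WumpusAhead \land WumpusAlive) \Rightarrow Shoot\) and \((WumpusAhead \land WumpusAlive)\) are given, then Shoot can be inferred.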

Another useful inference rule is And-Elimination, which says that, from a conjunction, any of the conjuncts can be inferred:

\[\frac{\alpha \wedge \beta}{\alpha} \,.\]

For example, from \((WumpusAhead \land WumpusAlive)\), WumpusAlive can be inferred.

By considering the possible truth values of and , one can easily show once and for all that Modus Ponens and And-Elimination are sound. These rules can then be used in any particular instances where they apply, generating sound inferences without the need for enumerating models.

All of the logical equivalences in Figure 7.11 can be used as inference rules. For example, the equivalence for biconditional elimination yields the two inference rules

\[\begin{array}{c} \alpha \Leftrightarrow \beta\\ \hline (\alpha \Rightarrow \beta) \land (\beta \Rightarrow \alpha) \end{array} \quad \text{and} \quad \begin{array}{c} (\alpha \Rightarrow \beta) \land (\beta \Rightarrow \alpha) \\ \hline \alpha \Leftrightarrow \beta \end{array} .\]

Not all inference rules work in both directions like this. For example, we cannot run Modus Ponens in the opposite direction to obtain \(\alpha \Rightarrow \beta\) and \(\alpha\) from \(\beta\).

Let us see how these inference rules and equivalences can be used in the wumpus world. We start with the knowledge base containing \(R\_1\) through \(R\_5\) and show how to prove \(\neg P\_{1,2}\), that is, there is no pit in [1,2]:

1. Apply biconditional elimination to \(R\_2\) to obtain

\[R\_6: \quad \left(B\_{1,1} \Rightarrow \left(P\_{1,2} \lor P\_{2,1}\right)\right) \land \left(\left(P\_{1,2} \lor P\_{2,1}\right) \Rightarrow B\_{1,1}\right) .\]

2. Apply And-Elimination to \(R\_6\) to obtain

\[R\_7: \quad \left( (P\_{1,2} \lor P\_{2,1}) \Rightarrow B\_{1,1} \right).\]

3. Logical equivalence for contrapositives gives

\[R\_8: \quad \left(\neg B\_{1,1} \Rightarrow \neg (P\_{1,2} \lor P\_{2,1})\right) .\]

4. Apply Modus Ponens with \(R\_8\) and the percept \(R\_4\) (i.e., \(\neg B\_{1,1}\)), to obtain

\[R\_9: \quad \neg (P\_{1,2} \lor P\_{2,1}) .\]

5. Apply De Morgan’s rule, giving the conclusion

\[R\_{10}: \quad \neg P\_{1,2} \land \neg P\_{2,1} .\]

That is, neither [1,2] nor [2,1] contains a pit.

Any of the search algorithms in Chapter 3 can be used to find a sequence of steps that constitutes a proof like this. We just need to define a proof problem as follows:

- INITIAL STATE: the initial knowledge base.

- ACTIONS: the set of actions consists of all the inference rules applied to all the sentences that match the top half of the inference rule.

- RESULT: the result of an action is to add the sentence in the bottom half of the inference rule.

- GOAL: the goal is a state that contains the sentence we are trying to prove.

Thus, searching for proofs is an alternative to enumerating models. In many practical cases finding a proof can be more efficient because the proof can ignore irrelevant propositions, no matter how many of them there are. For example, the proof just given leading to \(\neg P\_{1,2} \land \neg P\_{2,1}\) does not mention the propositions \(B\_{2,1}\), \(P\_{1,1}\), \(P\_{2,2}\), or \(P\_{3,1}\). They can be ignored because the goal proposition, \(P\_{1,2}\), appears only in sentence \(R\_2\); the other propositions in \(R\_2\) appear only in \(R\_4\) and \(R\_2\); so \(R\_1\), \(R\_3\), and \(R\_5\) have no bearing on the proof. The same would hold even if we added a million more sentences to the knowledge base; the simple truth-table algorithm, on the other hand, would be overwhelmed by the exponential explosion of models.

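One final property of logical systems is monotonicity, which says that the set of entailed sentences can only increase as information is added to the knowledge base. For any sentences \(\alpha\) and \(\beta\),⁹

\[\text{if} \quad KB \models \alpha \quad \text{then} \quad KB \land \beta \models \alpha .\]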

9 Nonmonotonic logics, which violate the monotonicity property, capture a common property of human reasoning: changing one’s mind. They are discussed in Section 10.6 .

For example, suppose the knowledge base contains the additional assertion \(\beta\) stating that there are exactly eight pits in the world. This knowledge might help the agent draw additional conclusions, but it cannot invalidate any conclusion \(\alpha\) already inferred, such as the conclusion that there is no pit in [1,2]. Monotonicity means that inference rules can be applied whenever suitable premises are found in the knowledge base: the conclusion of the rule must follow regardless of what else is in the knowledge base.

7.5.2 Proof by resolution

We have argued that the inference rules covered so far are sound, but we have not discussed the question of completeness for the inference algorithms that use them. Search algorithms such as iterative deepening search (page 81) are complete in the sense that they will find any reachable goal, but if the available inference rules are inadequate, then the goal is not reachable: no proof exists that uses only those inference rules. For example, if we removed the biconditional elimination rule, the proof in the preceding section would not go through. The current section introduces a single inference rule, resolution, that yields a complete inference algorithm when coupled with any complete search algorithm.

We begin by using a simple version of the resolution rule in the wumpus world. Let us consider the steps leading up to Figure 7.4(a) : the agent returns from [2,1] to [1,1] and then goes to [1,2], where it perceives a stench, but no breeze. We add the following facts to the knowledge base:

\[\begin{aligned} R\_{11}: &\quad \neg B\_{1,2}.\\ R\_{12}: &\quad B\_{1,2} \Leftrightarrow (P\_{1,1} \lor P\_{2,2} \lor P\_{1,3}) \end{aligned}\]

By the same process that led to \(R\_{10}\) earlier, we can now derive the absence of pits in [2,2] and [1,3] (remember that [1,1] is already known to be pitless):

\[\begin{array}{rcl} R\_{13}: & \neg P\_{2,2}. \\ R\_{14}: & \neg P\_{1,3}. \end{array}\]

We can also apply biconditional elimination to \(R\_3\), followed by Modus Ponens with \(R\_5\), to obtain the fact that there is a pit in [1,1], [2,2], or [3,1]:

\[R\_{15}: \quad P\_{1,1} \lor P\_{2,2} \lor P\_{3,1} .\]

Now comes the first application of the resolution rule: the literal \(\neg P\_{2,2}\) in \(R\_{13}\) resolves with the literal \(P\_{2,2}\) in \(R\_{15}\) to give the resolvent

\[R\_{16}: \quad P\_{1,1} \lor P\_{3,1} .\]

In English: if there’s a pit in one of [1,1], [2,2], and [3,1] and it’s not in [2,2], then it’s in [1,1] or [3,1]. Similarly, the literal \(\neg P\_{1,1}\) in \(R\_1\) resolves with the literal \(P\_{1,1}\) in \(R\_{16}\) to give

\[R\_{17}: \quad P\_{3,1} .\]

In English: if there’s a pit in [1,1] or [3,1] and it’s not in [1,1], then it’s in [3,1]. These last two inference steps are examples of the unit resolution inference rule

\[\frac{\ell\_1 \vee \dots \vee \ell\_k, \qquad m}{\ell\_1 \vee \dots \vee \ell\_{i-1} \vee \ell\_{i+1} \vee \dots \vee \ell\_k}\]

where each \(\ell\) is a literal and \(\ell\_i\) and \(m\) are complementary literals (i.e., one is the negation of the other). Thus, the unit resolution rule takes a clause (a disjunction of literals) and a literal and produces a new clause. Note that a single literal can be viewed as a disjunction of one literal, also known as a unit clause.

The unit resolution rule can be generalized to the full resolution rule

\[\frac{\ell\_1 \lor \cdots \lor \ell\_k, \qquad m\_1 \lor \cdots \lor m\_n}{\ell\_1 \lor \cdots \lor \ell\_{i-1} \lor \ell\_{i+1} \lor \cdots \lor \ell\_k \lor m\_1 \lor \cdots \lor m\_{j-1} \lor m\_{j+1} \lor \cdots \lor m\_n}\]

where \(\ell\_i\) and \(m\_j\) are complementary literals. This says that resolution takes two clauses and produces a new clause containing all the literals of the two original clauses except the two complementary literals. For example, we have

\[\frac{P\_{1,1} \lor P\_{3,1}, \qquad \neg P\_{1,1} \lor \neg P\_{2,2}}{P\_{3,1} \lor \neg P\_{2,2}}\]

You can resolve only one pair of complementary literals at a time. For example, we can resolve \(P \lor \neg Q \lor R\) and \(\neg P \lor Q\) to deduce

\[ \frac{P \lor \neg Q \lor R, \qquad \neg P \lor Q}{\neg Q \lor Q \lor R}, \]

but you can’t resolve on both \(P\) and \(Q\) at once to infer \(R\). There is one more technical aspect of the resolution rule: the resulting clause should contain only one copy of each literal. The removal of multiple copies of literals is called factoring. For example, if we resolve \((A \lor B)\) with \((A \lor \neg B)\), we obtain \((A \lor A)\), which is reduced to just \(A\) by factoring.¹⁰
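Footnote 10’s suggestion of treating clauses as sets makes the rule easy to sketch. In this minimal Python illustration (the string encoding with `~` for negation and the helper names are assumptions), clauses are frozensets, so factoring happens automatically, and each resolvent removes exactly one complementary pair.

```python
# Hypothetical clause encoding for this sketch: a clause is a frozenset of literal
# strings, with "~" marking negation (e.g. frozenset({"P31", "~P22"})).


def negate(literal):
    """Return the complementary literal."""
    return literal[1:] if literal.startswith("~") else "~" + literal


def resolve(ci, cj):
    """Return the set of all resolvents of clauses ci and cj.

    Each resolvent removes exactly one complementary pair. Because clauses are sets,
    duplicate literals collapse automatically, which is the factoring step.
    """
    resolvents = set()
    for literal in ci:
        if negate(literal) in cj:
            resolvents.add((ci - {literal}) | (cj - {negate(literal)}))
    return resolvents


def clause(*literals):
    return frozenset(literals)


# The example from the text: resolve on P11 to get P31 or not P22.
print(resolve(clause("P11", "P31"), clause("~P11", "~P22")))

# Factoring: resolving (A or B) with (A or not B) gives just A.
print(resolve(clause("A", "B"), clause("A", "~B")))  # {frozenset({'A'})}

# Two separate resolvents, one per complementary pair; R alone is never produced.
print(resolve(clause("P", "~Q", "R"), clause("~P", "Q")))
```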

10 If a clause is viewed as a set of literals, then this restriction is automatically respected. Using set notation for clauses makes the resolution rule much cleaner, at the cost of introducing additional notation.

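The soundness of the resolution rule can be seen easily by considering the literal \(\ell\_i\) that is complementary to literal \(m\_j\) in the other clause. If \(\ell\_i\) is true, then \(m\_j\) is false, and hence \(m\_1 \lor \cdots \lor m\_{j-1} \lor m\_{j+1} \lor \cdots \lor m\_n\) must be true, because \(m\_1 \lor \cdots \lor m\_n\) is given. If \(\ell\_i\) is false, then \(\ell\_1 \lor \cdots \lor \ell\_{i-1} \lor \ell\_{i+1} \lor \cdots \lor \ell\_k\) must be true because \(\ell\_1 \lor \cdots \lor \ell\_k\) is given. Now \(\ell\_i\) is either true or false, so one or other of these conclusions holds, exactly as the resolution rule states.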

What is more surprising about the resolution rule is that it forms the basis for a family of complete inference procedures. A resolution-based theorem prover can, for any sentences \(\alpha\) and \(\beta\) in propositional logic, decide whether \(\alpha \models \beta\). The next two subsections explain how resolution accomplishes this.

Conjunctive normal form

The resolution rule applies only to clauses (that is, disjunctions of literals), so it would seem to be relevant only to knowledge bases and queries consisting of clauses. How, then, can it lead to a complete inference procedure for all of propositional logic? The answer is that every sentence of propositional logic is logically equivalent to a conjunction of clauses.

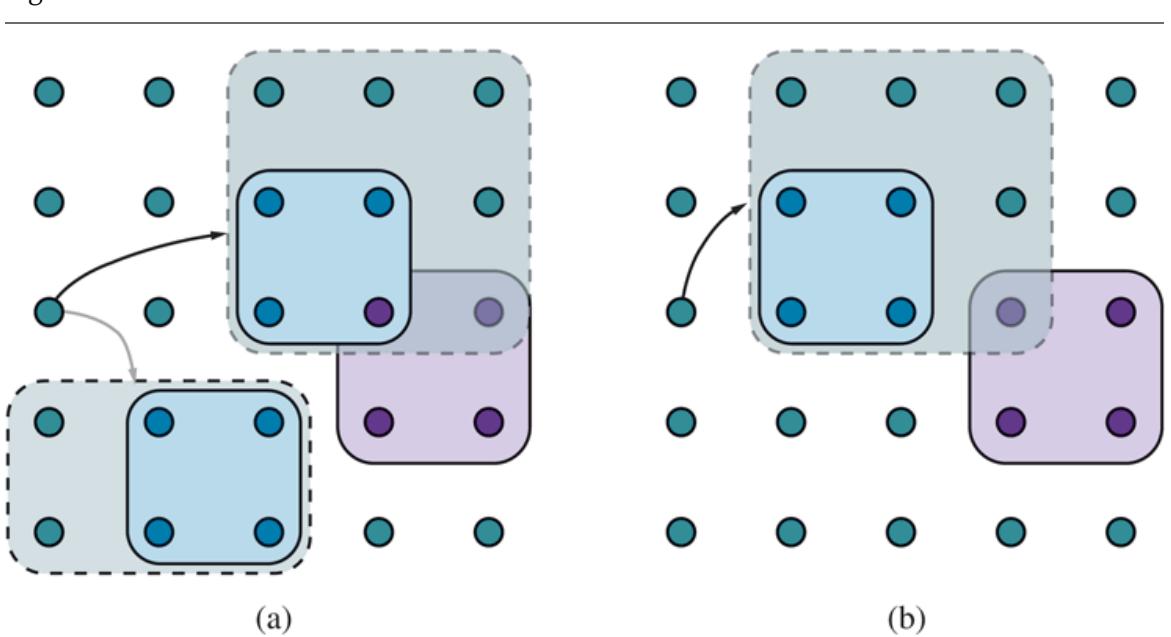

A sentence expressed as a conjunction of clauses is said to be in conjunctive normal form or CNF (see Figure 7.12). We now describe a procedure for converting to CNF. We illustrate the procedure by converting the sentence \(B\_{1,1} \Leftrightarrow (P\_{1,2} \lor P\_{2,1})\) into CNF. The steps are as follows:

1. Eliminate \(\Leftrightarrow\), replacing \(\alpha \Leftrightarrow \beta\) with \((\alpha \Rightarrow \beta) \land (\beta \Rightarrow \alpha)\):

\[(B\_{1,1} \Rightarrow (P\_{1,2} \lor P\_{2,1})) \land ((P\_{1,2} \lor P\_{2,1}) \Rightarrow B\_{1,1}) .\]

2. Eliminate \(\Rightarrow\), replacing \(\alpha \Rightarrow \beta\) with \(\neg\alpha \lor \beta\):

\[(\neg B\_{1,1} \lor P\_{1,2} \lor P\_{2,1}) \land (\neg (P\_{1,2} \lor P\_{2,1}) \lor B\_{1,1}) \; .\]

3. CNF requires \(\neg\) to appear only in literals, so we “move \(\neg\) inwards” by repeated application of the following equivalences from Figure 7.11:

\[\begin{array}{rcll} \neg(\neg\alpha) & \equiv & \alpha & \text{(double-negation elimination)} \\ \neg(\alpha \land \beta) & \equiv & (\neg\alpha \lor \neg\beta) & \text{(De Morgan)} \\ \neg(\alpha \lor \beta) & \equiv & (\neg\alpha \land \neg\beta) & \text{(De Morgan)} \end{array}\]

In the example, we require just one application of the last rule:

\[(\neg B\_{1,1} \lor P\_{1,2} \lor P\_{2,1}) \land ((\neg P\_{1,2} \land \neg P\_{2,1}) \lor B\_{1,1}) .\]

4. Now we have a sentence containing nested \(\land\) and \(\lor\) operators applied to literals. We apply the distributivity law from Figure 7.11, distributing \(\lor\) over \(\land\) wherever possible:

\[(\neg B\_{1,1} \lor P\_{1,2} \lor P\_{2,1}) \land (\neg P\_{1,2} \lor B\_{1,1}) \land (\neg P\_{2,1} \lor B\_{1,1}) \; .\]

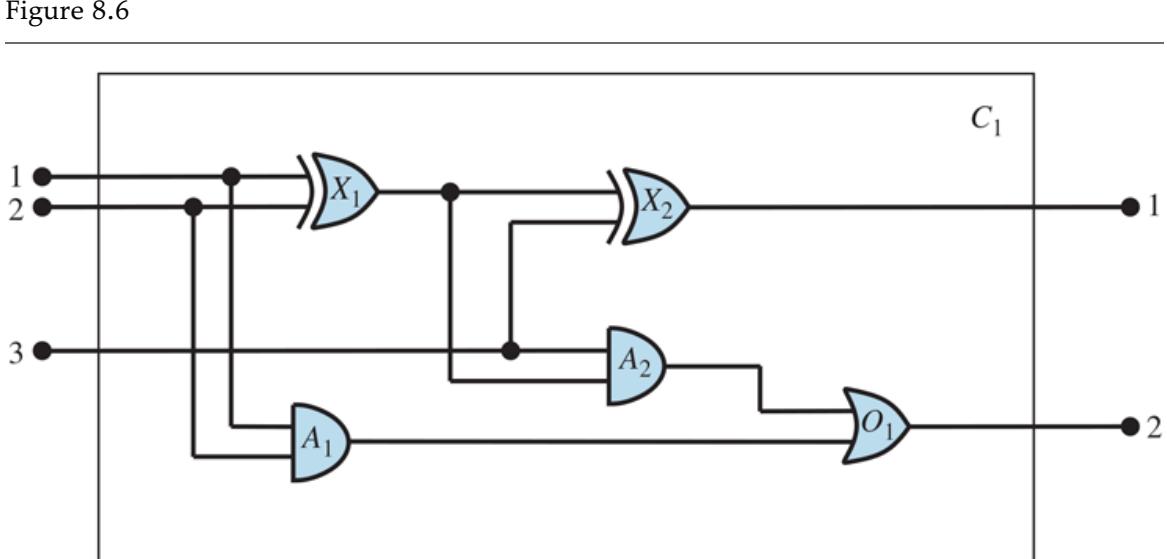

Figure 7.12

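\[\begin{array}{rcl}
\mathit{CNFSentence} & \rightarrow & \mathit{Clause}\_1 \land \cdots \land \mathit{Clause}\_n \\
\mathit{Clause} & \rightarrow & \mathit{Literal}\_1 \lor \cdots \lor \mathit{Literal}\_m \\
\mathit{Fact} & \rightarrow & \mathit{Symbol} \\
\mathit{Literal} & \rightarrow & \mathit{Symbol} \mid \neg \mathit{Symbol} \\
\mathit{Symbol} & \rightarrow & P \mid Q \mid R \mid \ldots \\
\mathit{HornClauseForm} & \rightarrow & \mathit{DefiniteClauseForm} \mid \mathit{GoalClauseForm} \\
\mathit{DefiniteClauseForm} & \rightarrow & \mathit{Fact} \mid (\mathit{Symbol}\_1 \land \cdots \land \mathit{Symbol}\_l) \Rightarrow \mathit{Symbol} \\
\mathit{GoalClauseForm} & \rightarrow & (\mathit{Symbol}\_1 \land \cdots \land \mathit{Symbol}\_l) \Rightarrow \mathit{False}
\end{array}\]

A grammar for conjunctive normal form, Horn clauses, and definite clauses. A CNF clause such as \(\neg A \lor \neg B \lor C\) can be written in definite clause form as \(A \land B \Rightarrow C\).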

The original sentence is now in CNF, as a conjunction of three clauses. It is much harder to read, but it can be used as input to a resolution procedure.
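The four conversion steps can be sketched as four small recursive passes. This is an illustrative Python version under stated assumptions: sentences are nested tuples with binary connectives only, the constants True and False are not handled, and the output is a set of clauses (frozensets of literal strings, `~` for negation). The function names are hypothetical.

```python
# Hypothetical encoding for this sketch: symbols are strings and compound sentences
# are tuples ("not", s), ("and", a, b), ("or", a, b), ("implies", a, b), ("iff", a, b).


def eliminate_iff(s):
    """Step 1: replace a <=> b with (a => b) and (b => a)."""
    if isinstance(s, str):
        return s
    op, *args = s
    args = [eliminate_iff(a) for a in args]
    if op == "iff":
        a, b = args
        return ("and", ("implies", a, b), ("implies", b, a))
    return (op, *args)


def eliminate_implies(s):
    """Step 2: replace a => b with (not a) or b."""
    if isinstance(s, str):
        return s
    op, *args = s
    args = [eliminate_implies(a) for a in args]
    if op == "implies":
        a, b = args
        return ("or", ("not", a), b)
    return (op, *args)


def move_not_inwards(s):
    """Step 3: push negations down to symbols (assumes steps 1 and 2 have been applied)."""
    if isinstance(s, str):
        return s
    op, *args = s
    if op == "not" and not isinstance(args[0], str):
        inner_op, *inner = args[0]
        if inner_op == "not":                              # double-negation elimination
            return move_not_inwards(inner[0])
        flipped = "or" if inner_op == "and" else "and"     # De Morgan
        return (flipped, *[move_not_inwards(("not", x)) for x in inner])
    return (op, *[move_not_inwards(a) for a in args])


def distribute(s):
    """Step 4: distribute or over and until the sentence is a conjunction of clauses."""
    if isinstance(s, str) or s[0] == "not":
        return s
    op, a, b = s
    a, b = distribute(a), distribute(b)
    if op == "or":
        if isinstance(a, tuple) and a[0] == "and":
            return ("and", distribute(("or", a[1], b)), distribute(("or", a[2], b)))
        if isinstance(b, tuple) and b[0] == "and":
            return ("and", distribute(("or", a, b[1])), distribute(("or", a, b[2])))
    return (op, a, b)


def literals(s):
    """Flatten a disjunction of literals into a list of literal strings."""
    if isinstance(s, tuple) and s[0] == "or":
        return literals(s[1]) + literals(s[2])
    if isinstance(s, tuple):                               # ("not", symbol)
        return ["~" + s[1]]
    return [s]


def to_clauses(s):
    """Flatten a CNF sentence into a set of clauses (frozensets of literals)."""
    if isinstance(s, tuple) and s[0] == "and":
        return to_clauses(s[1]) | to_clauses(s[2])
    return {frozenset(literals(s))}


def to_cnf(s):
    return to_clauses(distribute(move_not_inwards(eliminate_implies(eliminate_iff(s)))))


sentence = ("iff", "B11", ("or", "P12", "P21"))
for c in sorted(to_cnf(sentence), key=sorted):
    print(sorted(c))   # the three clauses from step 4, one per line
```

Naive distribution can blow up exponentially on some inputs; the sketch makes no attempt to avoid that.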

A resolution algorithm

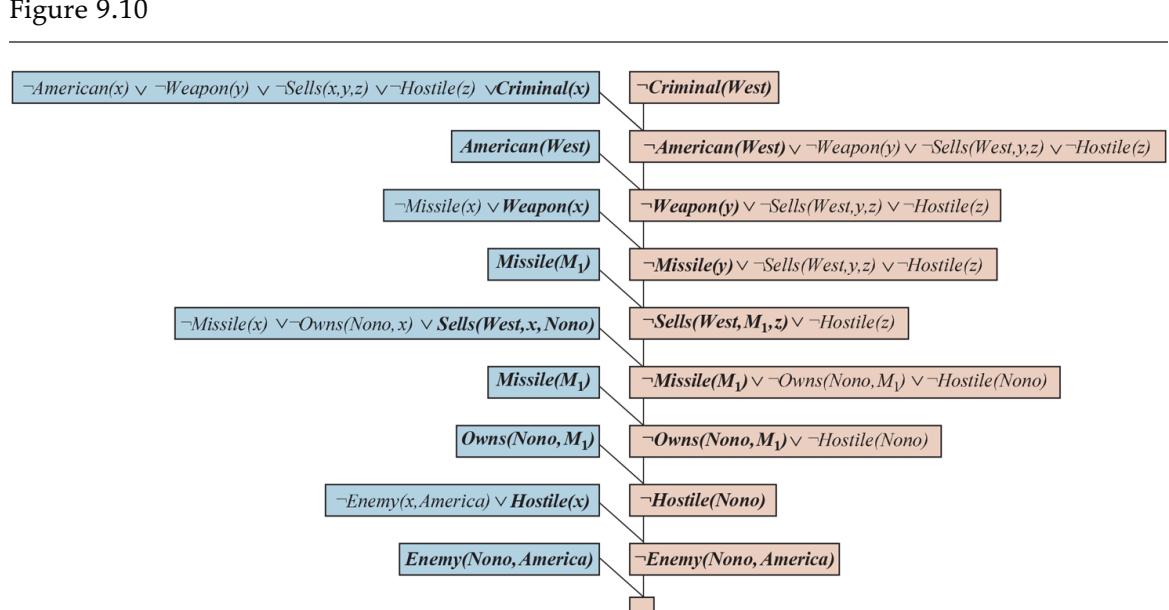

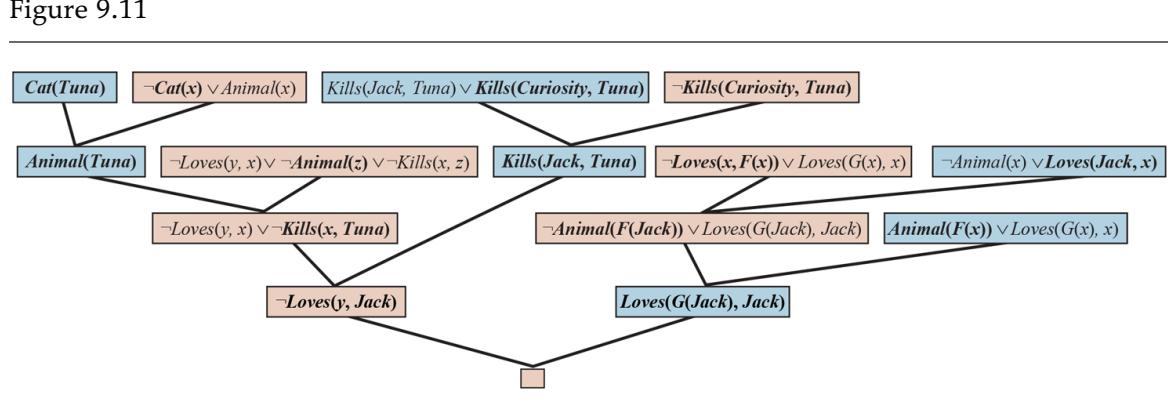

Inference procedures based on resolution work by using the principle of proof by contradiction introduced on page 223. That is, to show that \(KB \models \alpha\), we show that \((KB \land \neg\alpha)\) is unsatisfiable. We do this by proving a contradiction.

A resolution algorithm is shown in Figure 7.13. First, \((KB \land \neg\alpha)\) is converted into CNF. Then, the resolution rule is applied to the resulting clauses. Each pair that contains complementary literals is resolved to produce a new clause, which is added to the set if it is not already present. The process continues until one of two things happens:

- there are no new clauses that can be added, in which case KB does not entail \(\alpha\); or,

- two clauses resolve to yield the empty clause, in which case KB entails \(\alpha\).

Figure 7.13

A simple resolution algorithm for propositional logic. PL-RESOLVE returns the set of all possible clauses obtained by resolving its two inputs.
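A minimal Python sketch of the loop described above, under two stated simplifications: the caller supplies the clauses of KB and of \(\neg\alpha\) already in CNF (rather than the sketch converting \((KB \land \neg\alpha)\) itself), and resolvents containing a complementary pair are discarded, as the discussion of Figure 7.14 suggests. The clause encoding and function names are hypothetical.

```python
from itertools import combinations

# Hypothetical clause encoding: frozensets of literal strings, "~" marks negation.


def negate(literal):
    return literal[1:] if literal.startswith("~") else "~" + literal


def pl_resolve(ci, cj):
    """All clauses obtained by resolving ci and cj on one complementary pair."""
    return {(ci - {lit}) | (cj - {negate(lit)}) for lit in ci if negate(lit) in cj}


def is_tautology(c):
    """A clause containing a literal and its negation is equivalent to True."""
    return any(negate(lit) in c for lit in c)


def pl_resolution(kb_clauses, negated_query_clauses):
    """Return True iff KB entails alpha, given the CNF clauses of KB and of (not alpha)."""
    clauses = set(kb_clauses) | set(negated_query_clauses)
    while True:
        new = set()
        for ci, cj in combinations(clauses, 2):
            for resolvent in pl_resolve(ci, cj):
                if not resolvent:            # empty clause derived: contradiction
                    return True
                if not is_tautology(resolvent):
                    new.add(resolvent)
        if new <= clauses:                   # no new clauses: KB does not entail alpha
            return False
        clauses |= new


# KB = R2 and R4 in CNF (the three clauses from step 4 of the conversion, plus not B11).
kb = [frozenset({"~B11", "P12", "P21"}), frozenset({"~P12", "B11"}),
      frozenset({"~P21", "B11"}), frozenset({"~B11"})]
print(pl_resolution(kb, [frozenset({"P12"})]))   # True: KB entails not P12
print(pl_resolution(kb, [frozenset({"~P12"})]))  # False: KB does not entail P12
```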

The empty clause (a disjunction of no disjuncts) is equivalent to False because a disjunction is true only if at least one of its disjuncts is true. Moreover, the empty clause arises only from resolving two contradictory unit clauses such as \(P\) and \(\neg P\).

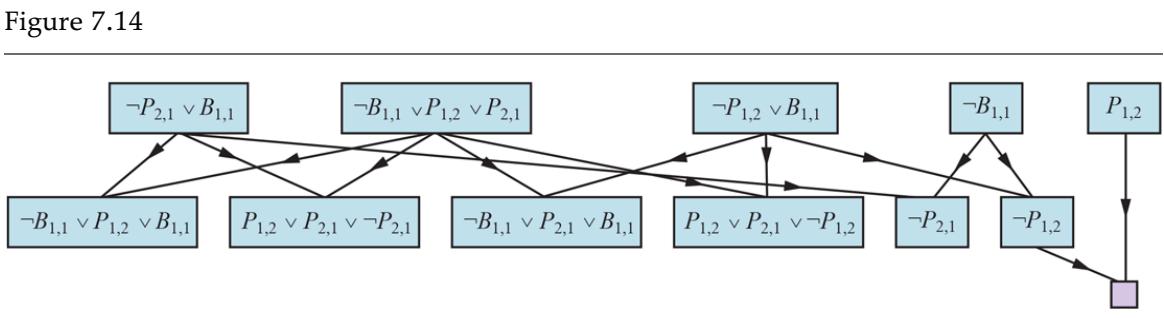

We can apply the resolution procedure to a very simple inference in the wumpus world. When the agent is in [1,1], there is no breeze, so there can be no pits in neighboring squares. The relevant knowledge base is

\[KB = R\_2 \land R\_4 = (B\_{1,1} \Leftrightarrow (P\_{1,2} \lor P\_{2,1})) \land \neg B\_{1,1}\]

and we wish to prove \(\alpha\), which is, say, \(\neg P\_{1,2}\). When we convert \((KB \land \neg\alpha)\) into CNF, we obtain the clauses shown at the top of Figure 7.14. The second row of the figure shows clauses obtained by resolving pairs in the first row. Then, when \(P\_{1,2}\) is resolved with \(\neg P\_{1,2}\), we obtain the empty clause, shown as a small square. Inspection of Figure 7.14 reveals that many resolution steps are pointless. For example, the clause \(B\_{1,1} \lor \neg B\_{1,1} \lor P\_{1,2}\) is equivalent to \(True \lor P\_{1,2}\), which is equivalent to True. Deducing that True is true is not very helpful. Therefore, any clause in which two complementary literals appear can be discarded.

Partial application of PL-RESOLUTION to a simple inference in the wumpus world to prove the query \(\neg P\_{1,2}\). Each of the leftmost four clauses in the top row is paired with each of the other three, and the resolution rule is applied to yield the clauses on the bottom row. We see that the third and fourth clauses on the top row combine to yield the clause \(\neg P\_{1,2}\), which is then resolved with \(P\_{1,2}\) to yield the empty clause, meaning that the query is proven.

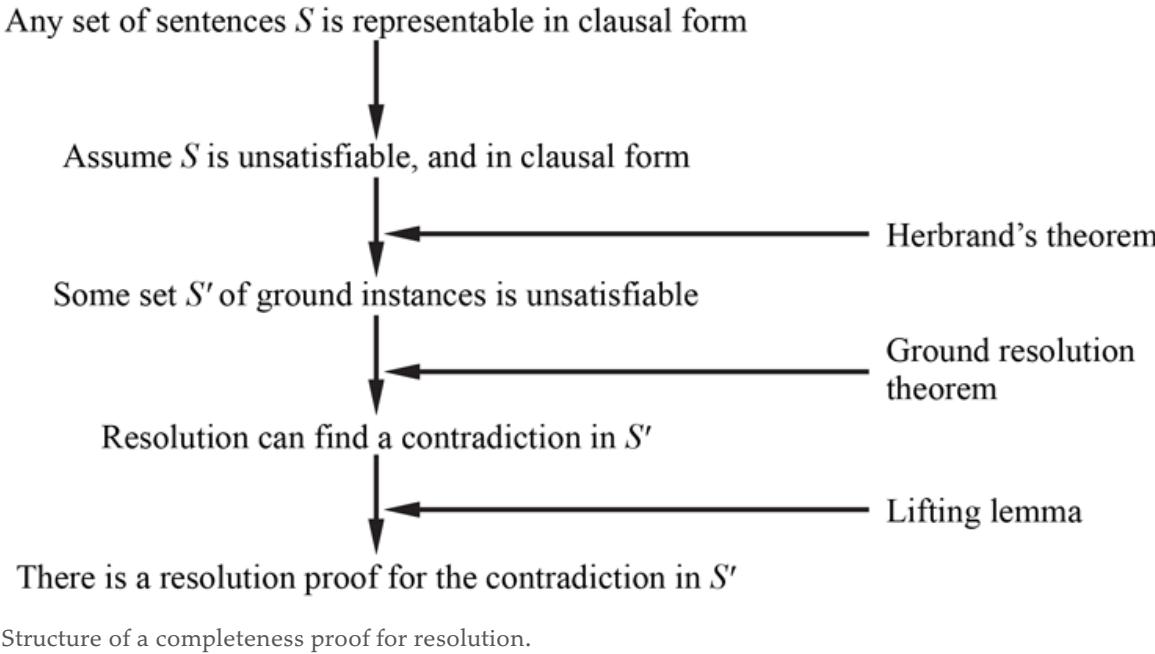

Completeness of resolution

To conclude our discussion of resolution, we now show why PL-RESOLUTION is complete. To do this, we introduce the resolution closure RC(S) of a set of clauses S, which is the set of all clauses derivable by repeated application of the resolution rule to clauses in S or their derivatives. The resolution closure is what PL-RESOLUTION computes as the final value of the variable clauses. It is easy to see that RC(S) must be finite: thanks to the factoring step, there are only finitely many distinct clauses that can be constructed out of the symbols \(P\_1, \ldots, P\_k\) that appear in S. Hence, PL-RESOLUTION always terminates.

The completeness theorem for resolution in propositional logic is called the ground resolution theorem:

If a set of clauses is unsatisfiable, then the resolution closure of those clauses contains the empty clause.

This theorem is proved by demonstrating its contrapositive: if the closure RC(S) does not contain the empty clause, then S is satisfiable. In fact, we can construct a model for S with suitable truth values for \(P\_1, \ldots, P\_k\). The construction procedure is as follows:

For \(i\) from 1 to \(k\),

- If a clause in RC(S) contains the literal \(\neg P\_i\) and all its other literals are false under the assignment chosen for \(P\_1, \ldots, P\_{i-1}\), then assign false to \(P\_i\).

- Otherwise, assign true to \(P\_i\).

This assignment to \(P\_1, \ldots, P\_k\) is a model of S. To see this, assume the opposite: that, at some stage \(i\) in the sequence, assigning symbol \(P\_i\) causes some clause \(C\) to become false. For this to happen, all the other literals in \(C\) must already have been falsified by assignments to \(P\_1, \ldots, P\_{i-1}\). Thus, \(C\) must now look like either \((false \lor false \lor \cdots \lor false \lor P\_i)\) or like \((false \lor false \lor \cdots \lor false \lor \neg P\_i)\). If just one of these two is in RC(S), then the algorithm will assign the appropriate truth value to \(P\_i\) to make \(C\) true, so \(C\) can only be falsified if both of these clauses are in RC(S).

Now, since RC(S) is closed under resolution, it will contain the resolvent of these two clauses, and that resolvent will have all of its literals already falsified by the assignments to \(P\_1, \ldots, P\_{i-1}\). This contradicts our assumption that the first falsified clause appears at stage \(i\). Hence, we have proved that the construction never falsifies a clause in RC(S); that is, it produces a model of RC(S). Finally, because S is contained in RC(S), any model of RC(S) is a model of S itself.
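The construction in the proof is itself an algorithm, so it can be run on a small example. The sketch below assumes a clause encoding (frozensets of strings, with `~` for negation) and hypothetical helper names; it computes RC(S) by brute force and then assigns \(P\_1, \ldots, P\_k\) in the given order as described above. It is only practical for tiny clause sets, since RC(S) can grow exponentially.

```python
from itertools import combinations

# Hypothetical clause encoding: frozensets of literal strings, "~" marks negation.


def negate(literal):
    return literal[1:] if literal.startswith("~") else "~" + literal


def resolution_closure(clauses):
    """RC(S): keep adding resolvents until nothing new appears (finite, so this terminates)."""
    closure = set(clauses)
    while True:
        new = {(ci - {lit}) | (cj - {negate(lit)})
               for ci, cj in combinations(closure, 2)
               for lit in ci if negate(lit) in cj}
        if new <= closure:
            return closure
        closure |= new


def construct_model(closure, symbols):
    """Assign P_1..P_k in order, following the construction in the proof."""
    model = {}

    def is_false(literal):          # false under the symbols assigned so far
        symbol = literal.lstrip("~")
        return symbol in model and model[symbol] == literal.startswith("~")

    for p in symbols:
        neg = "~" + p
        # P_i is forced false if some clause contains ~P_i and all its other literals are false.
        forced = any(neg in c and all(is_false(lit) for lit in c - {neg}) for c in closure)
        model[p] = not forced
    return model


S = [frozenset({"P", "Q"}), frozenset({"~P", "Q"}), frozenset({"~Q", "R"})]
rc = resolution_closure(S)
print(frozenset() in rc)                      # False: no empty clause, so S is satisfiable
print(construct_model(rc, ["P", "Q", "R"]))   # {'P': True, 'Q': True, 'R': True}

S2 = [frozenset({"~P"}), frozenset({"P", "Q"})]
print(construct_model(resolution_closure(S2), ["P", "Q"]))  # {'P': False, 'Q': True}
```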

7.5.3 Horn clauses and definite clauses

The completeness of resolution makes it a very important inference method. In many practical situations, however, the full power of resolution is not needed. Some real-world knowledge bases satisfy certain restrictions on the form of sentences they contain, which enables them to use a more restricted and efficient inference algorithm.

One such restricted form is the definite clause, which is a disjunction of literals of which exactly one is positive. For example, the clause \((\neg L\_{1,1} \lor \neg Breeze \lor B\_{1,1})\) is a definite clause, whereas \((\neg B\_{1,1} \lor P\_{1,2} \lor P\_{2,1})\) is not, because it has two positive literals.

Slightly more general is the Horn clause, which is a disjunction of literals of which at most one is positive. So all definite clauses are Horn clauses, as are clauses with no positive literals; these are called goal clauses. Horn clauses are closed under resolution: if you resolve two Horn clauses, you get back a Horn clause. One more class is the k-CNF sentence, which is a CNF sentence where each clause has at most k literals.

Knowledge bases containing only definite clauses are interesting for three reasons:

1. Every definite clause can be written as an implication whose premise is a conjunction of positive literals and whose conclusion is a single positive literal. (See Exercise 7.DISJ.) For example, the definite clause \((\neg L\_{1,1} \lor \neg Breeze \lor B\_{1,1})\) can be written as the implication \((L\_{1,1} \land Breeze) \Rightarrow B\_{1,1}\). In the implication form, the sentence is easier to understand: it says that if the agent is in [1,1] and there is a breeze percept, then [1,1] is breezy. In Horn form, the premise is called the body and the conclusion is called the head. A sentence consisting of a single positive literal, such as \(L\_{1,1}\), is called a fact. It too can be written in implication form as \(True \Rightarrow L\_{1,1}\), but it is simpler to write just \(L\_{1,1}\).

2. Inference with Horn clauses can be done through the forward-chaining and backward-chaining algorithms, which we explain next. Both of these algorithms are natural, in that the inference steps are obvious and easy for humans to follow. This type of inference is the basis for logic programming, which is discussed in Chapter 9 .

3. Deciding entailment with Horn clauses can be done in time that is linear in the size of the knowledge base—a pleasant surprise.

7.5.4 Forward and backward chaining

The forward-chaining algorithm PL-FC-ENTAILS? determines if a single proposition symbol \(q\) (the query) is entailed by a knowledge base of definite clauses. It begins from known facts (positive literals) in the knowledge base. If all the premises of an implication are known, then its conclusion is added to the set of known facts. For example, if \(L\_{1,1}\) and Breeze are known and \((L\_{1,1} \land Breeze) \Rightarrow B\_{1,1}\) is in the knowledge base, then \(B\_{1,1}\) can be added. This process continues until the query \(q\) is added or until no further inferences can be made. The algorithm is shown in Figure 7.15; the main point to remember is that it runs in linear time.

Figure 7.15

The forward-chaining algorithm for propositional logic. The agenda keeps track of symbols known to be true but not yet “processed.” The count table keeps track of how many premises of each implication are not yet proven. Whenever a new symbol $p$ from the agenda is processed, the count is reduced by one for each implication in whose premise $p$ appears (easily identified in constant time with appropriate indexing). If a count reaches zero, all the premises of the implication are known, so its conclusion can be added to the agenda. Finally, we need to keep track of which symbols have been processed; a symbol that is already in the set of inferred symbols need not be added to the agenda again. This avoids redundant work and prevents loops caused by implications such as $P \Rightarrow Q$ and $Q \Rightarrow P$.

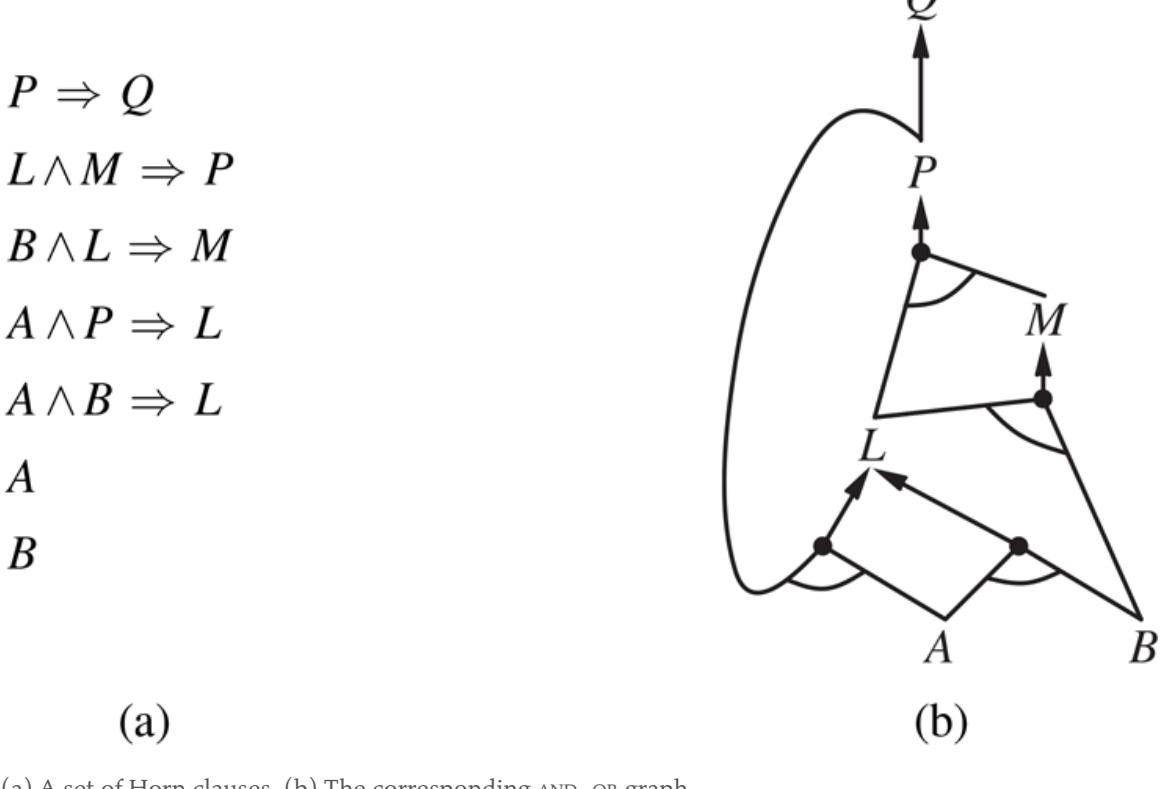

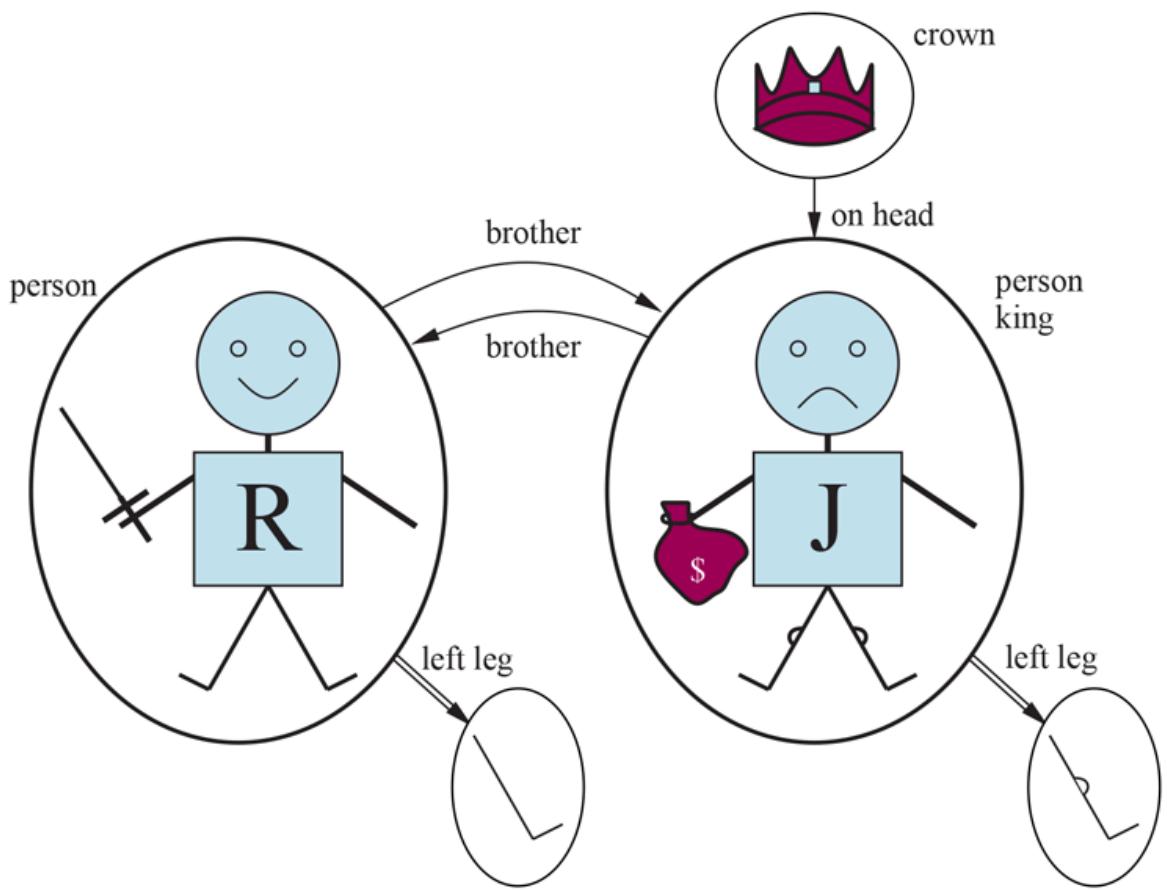

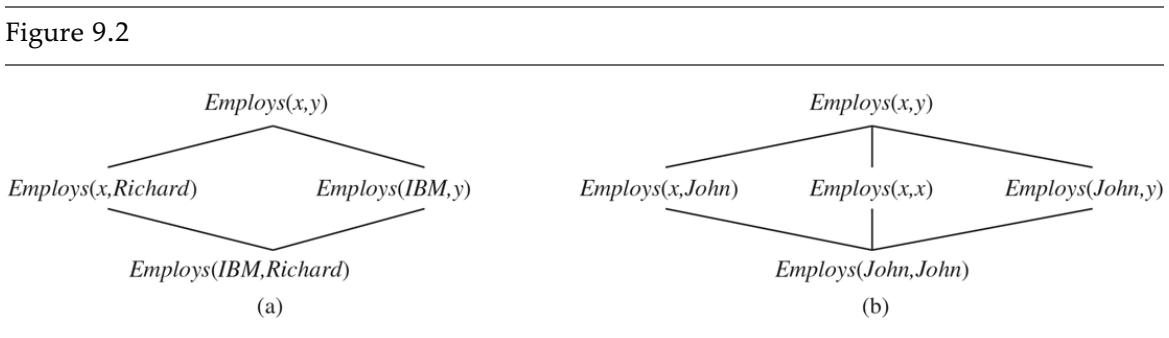

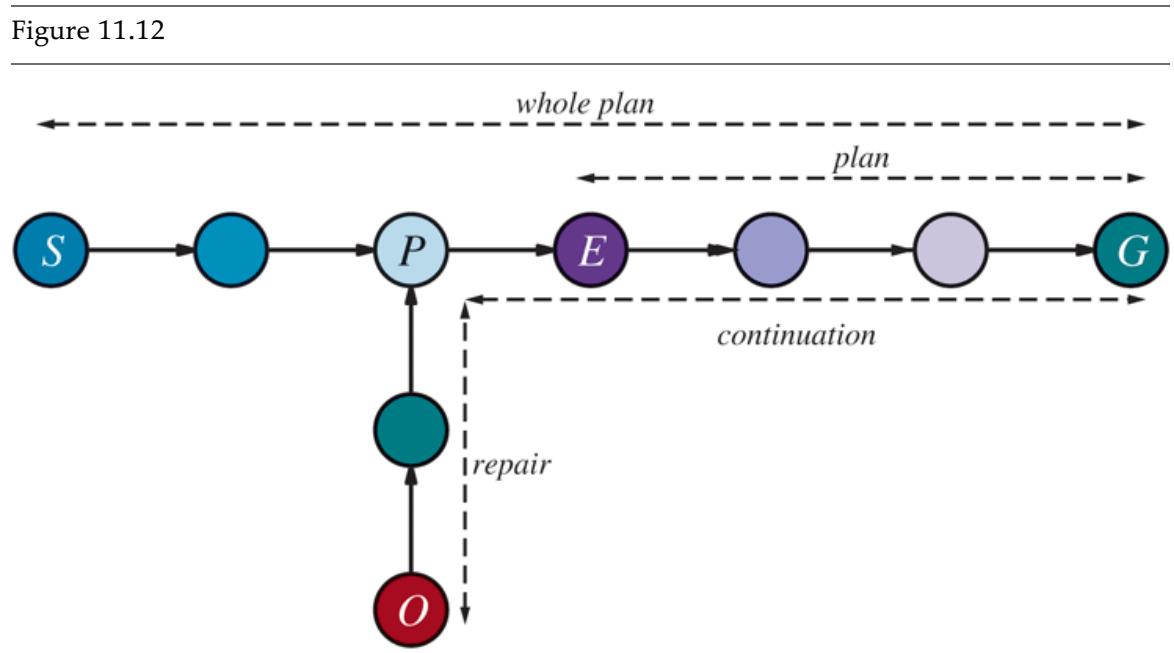

The best way to understand the algorithm is through an example and a picture. Figure 7.16(a) shows a simple knowledge base of Horn clauses with $A$ and $B$ as known facts. Figure 7.16(b) shows the same knowledge base drawn as an AND–OR graph (see Chapter 4). In AND–OR graphs, multiple edges joined by an arc indicate a conjunction (every edge must be proved), while multiple edges without an arc indicate a disjunction (any edge can be proved). It is easy to see how forward chaining works in the graph. The known leaves (here, $A$ and $B$) are set, and inference propagates up the graph as far as possible. Wherever a conjunction appears, the propagation waits until all the conjuncts are known before proceeding. The reader is encouraged to work through the example in detail.

Figure 7.16

(a) A set of Horn clauses. (b) The corresponding AND–OR graph.

It is easy to see that forward chaining is sound: every inference is essentially an application of Modus Ponens. Forward chaining is also complete: every entailed atomic sentence will be derived. The easiest way to see this is to consider the final state of the inferred table (after the algorithm reaches a fixed point where no new inferences are possible). The table contains true for each symbol inferred during the process, and false for all other symbols. We can view the table as a logical model; moreover, every definite clause in the original KB is true in this model.

To see this, assume the opposite, namely that some clause $a_1 \land \cdots \land a_k \Rightarrow b$ is false in the model. Then $a_1 \land \cdots \land a_k$ must be true in the model and $b$ must be false in the model. But this contradicts our assumption that the algorithm has reached a fixed point, because we would now be licensed to add $b$ to the KB. We can conclude, therefore, that the set of atomic sentences inferred at the fixed point defines a model of the original KB. Furthermore, any atomic sentence that is entailed by the KB must be true in all its models and in this model in particular. Hence, every entailed atomic sentence must be inferred by the algorithm.
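The completeness argument can be checked on a small case. This sketch computes the fixed point with a deliberately naive loop that repeats Modus Ponens until nothing changes. That loop is quadratic rather than linear, but it is simpler. The sketch then verifies that the model making exactly the inferred symbols true satisfies every clause. The (premises, conclusion) encoding and the example KB are illustrative assumptions.

```python
def fixed_point(clauses, facts):
    """Apply Modus Ponens repeatedly until no new symbol can be added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in clauses:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known

def is_model(clauses, facts, true_symbols):
    """Check the KB in the model where exactly true_symbols are true."""
    facts_hold = all(f in true_symbols for f in facts)
    # An implication fails only when every premise is true and the conclusion false.
    clauses_hold = all(conclusion in true_symbols
                       or not all(p in true_symbols for p in premises)
                       for premises, conclusion in clauses)
    return facts_hold and clauses_hold

kb = [(["P"], "Q"), (["L", "M"], "P"), (["B", "L"], "M"),
      (["A", "P"], "L"), (["A", "B"], "L")]
model = fixed_point(kb, ["A", "B"])
print(sorted(model))                         # ['A', 'B', 'L', 'M', 'P', 'Q']
print(is_model(kb, ["A", "B"], model))       # True
print(is_model(kb, ["A", "B"], {"A", "B"}))  # False: A ∧ B ⇒ L is violated
```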

Forward chaining is an example of the general concept of data-driven reasoning—that is, reasoning in which the focus of attention starts with the known data. It can be used within an agent to derive conclusions from incoming percepts, often without a specific query in mind. For example, the wumpus agent might TELL its percepts to the knowledge base using an incremental forward-chaining algorithm in which new facts can be added to the agenda to initiate new inferences. In humans, a certain amount of data-driven reasoning occurs as new information arrives. For example, if I am indoors and hear rain starting to fall, it might occur to me that the picnic will be canceled. Yet it will probably not occur to me that the seventeenth petal on the largest rose in my neighbor’s garden will get wet; humans keep forward chaining under careful control, lest they be swamped with irrelevant consequences.


The backward-chaining algorithm, as its name suggests, works backward from the query. If the query $q$ is known to be true, then no work is needed. Otherwise, the algorithm finds those implications in the knowledge base whose conclusion is $q$. If all the premises of one of those implications can be proved true (by backward chaining), then $q$ is true. When applied to the query $Q$ in Figure 7.16, it works back down the graph until it reaches a set of known facts, $A$ and $B$, that forms the basis for a proof. The algorithm is essentially identical to the AND-OR-GRAPH-SEARCH algorithm in Figure 4.11. As with forward chaining, an efficient implementation runs in linear time.

Backward chaining is a form of goal-directed reasoning. It is useful for answering specific questions such as “What shall I do now?” and “Where are my keys?” Often, the cost of backward chaining is much less than linear in the size of the knowledge base, because the process touches only relevant facts.
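Here is a recursive Python sketch of backward chaining over an assumed (premises, conclusion) encoding. The clauses concluding a goal act as OR branches, and their premises act as AND branches. The sketch refuses to reopen a goal that is already pending on the current branch, which prevents infinite regress on cycles such as P ⇒ Q and Q ⇒ P. It caches nothing, so it can repeat work on shared subgoals and does not achieve the linear bound of an efficient implementation. The names and the example KB are our own.

```python
def pl_bc_entails(clauses, facts, q):
    """Backward chaining over definite clauses (a simple recursive sketch).

    clauses: list of (premises, conclusion) pairs; facts: symbols known true.
    """
    facts = set(facts)

    def prove(goal, pending):
        if goal in facts:
            return True                 # known fact: no work needed
        if goal in pending:
            return False                # goal already open on this branch: cut the loop
        for premises, conclusion in clauses:
            # OR over clauses that conclude the goal, AND over their premises.
            if conclusion == goal and all(prove(p, pending | {goal}) for p in premises):
                return True
        return False

    return prove(q, frozenset())

kb = [(["P"], "Q"), (["L", "M"], "P"), (["B", "L"], "M"),
      (["A", "P"], "L"), (["A", "B"], "L")]
print(pl_bc_entails(kb, ["A", "B"], "Q"))  # True
print(pl_bc_entails(kb, ["A"], "Q"))       # False
```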


7.6 Effective Propositional Model Checking

In this section, we describe two families of efficient algorithms for general propositional inference based on model checking: one approach based on backtracking search, and one on local hill-climbing search. These algorithms are part of the “technology” of propositional logic. This section can be skimmed on a first reading of the chapter.

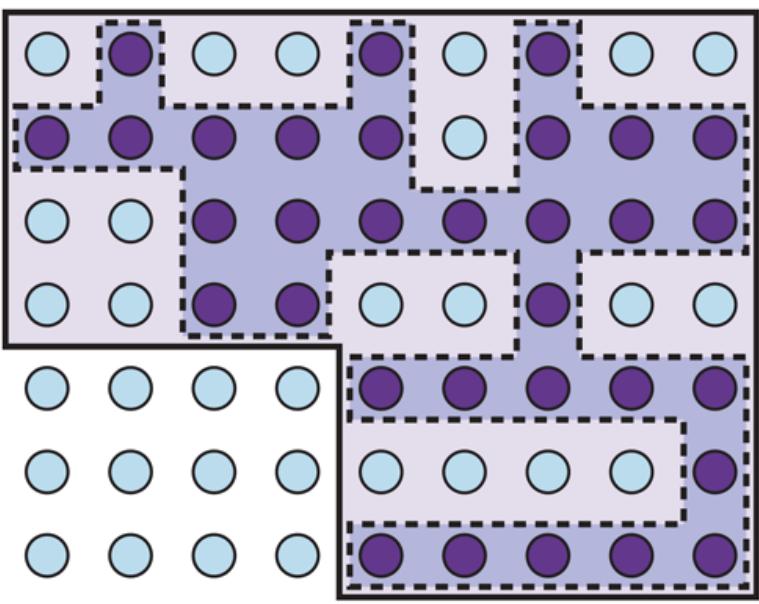

The algorithms we describe are for checking satisfiability: the SAT problem. (As noted in Section 7.5, testing entailment, $\alpha \models \beta$, can be done by testing unsatisfiability of $\alpha \land \neg \beta$.) We mentioned on page 223 the connection between finding a satisfying model for a logical sentence and finding a solution for a constraint satisfaction problem, so it is perhaps not surprising that the two families of propositional satisfiability algorithms closely resemble the backtracking algorithms of Section 6.3 and the local search algorithms of Section 6.4. They are, however, extremely important in their own right because so many combinatorial problems in computer science can be reduced to checking the satisfiability of a propositional sentence. Any improvement in satisfiability algorithms has huge consequences for our ability to handle complexity in general.
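A brute-force checker that enumerates all models makes the reduction of entailment to unsatisfiability concrete. This is only a sketch. It assumes the KB is already in CNF (lists of literal strings, "~" for negation). It also restricts the query to a single literal, so that its negation is one unit clause; a general query would first need conversion to CNF. Function names are hypothetical.

```python
from itertools import product

# Assumed encoding: a clause is a list of literal strings, "~" marks negation.

def literal_true(lit, model):
    return model[lit.lstrip("~")] != lit.startswith("~")

def satisfiable(clauses):
    """Brute-force SAT check: try every assignment to the symbols."""
    symbols = sorted({lit.lstrip("~") for c in clauses for lit in c})
    for values in product((True, False), repeat=len(symbols)):
        model = dict(zip(symbols, values))
        if all(any(literal_true(lit, model) for lit in c) for c in clauses):
            return True
    return False

def entails_literal(kb, alpha):
    """KB ⊨ alpha iff KB ∧ ¬alpha is unsatisfiable (alpha is a single literal here)."""
    not_alpha = alpha[1:] if alpha.startswith("~") else "~" + alpha
    return not satisfiable(kb + [[not_alpha]])

kb = [["~L11", "~Breeze", "B11"], ["L11"], ["Breeze"]]
print(entails_literal(kb, "B11"))  # True
print(entails_literal(kb, "P12"))  # False
```

Enumeration takes time exponential in the number of symbols, which is exactly the cost the algorithms in this section try to avoid.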

7.6.1 A complete backtracking algorithm

The first algorithm we consider is often called the Davis–Putnam algorithm, after the seminal paper by Martin Davis and Hilary Putnam (1960). The algorithm is in fact the version described by Davis, Logemann, and Loveland (1962), so we will call it DPLL after the initials of all four authors. DPLL takes as input a sentence in conjunctive normal form, that is, a set of clauses. Like BACKTRACKING-SEARCH and TT-ENTAILS?, it is essentially a recursive, depth-first enumeration of possible models. It embodies three improvements over the simple scheme of TT-ENTAILS?:


- EARLY TERMINATION: The algorithm detects whether the sentence must be true or false, even with a partially completed model. A clause is true if any literal is true, even if the other literals do not yet have truth values; hence, the sentence as a whole could be judged true even before the model is complete. For example, the sentence $(A \lor B) \land (A \lor C)$ is true if $A$ is true, regardless of the values of $B$ and $C$. Similarly, a sentence is false if any clause is false, which occurs when each of its literals is false. Again, this can occur long before the model is complete. Early termination avoids examination of entire subtrees in the search space.

- PURE SYMBOL HEURISTIC: A pure symbol is a symbol that always appears with the same “sign” in all clauses. For example, in the three clauses $(A \lor \neg B)$, $(\neg B \lor \neg C)$, and $(C \lor A)$, the symbol $A$ is pure because only the positive literal appears, $B$ is pure because only the negative literal appears, and $C$ is impure. It is easy to see that if a sentence has a model, then it has a model with the pure symbols assigned so as to make their literals true, because doing so can never make a clause false. Note that, in determining the purity of a symbol, the algorithm can ignore clauses that are already known to be true in the model constructed so far. For example, if the model contains $B = \mathit{false}$, then the clause $(\neg B \lor \neg C)$ is already true, and in the remaining clauses $C$ appears only as a positive literal; therefore $C$ becomes pure.


- UNIT CLAUSE HEURISTIC: A unit clause was defined earlier as a clause with just one literal. In the context of DPLL, it also means clauses in which all literals but one are already assigned false by the model. For example, if the model contains $B = \mathit{true}$, then $(\neg B \lor \neg C)$ simplifies to $\neg C$, which is a unit clause. Obviously, for this clause to be true, $C$ must be set to false. The unit clause heuristic assigns all such symbols before branching on the remainder. One important consequence of the heuristic is that any attempt to prove (by refutation) a literal that is already in the knowledge base will succeed immediately (Exercise 7.KNOW). Notice also that assigning one unit clause can create another unit clause; for example, when $C$ is set to false, $(C \lor A)$ becomes a unit clause, causing true to be assigned to $A$. This “cascade” of forced assignments is called unit propagation. It resembles the process of forward chaining with definite clauses, and indeed, if the CNF expression contains only definite clauses then DPLL essentially replicates forward chaining. (See Exercise 7.DPLL.)


The DPLL algorithm is shown in Figure 7.17, which gives the essential skeleton of the search process without the implementation details.

Figure 7.17

The DPLL algorithm for checking satisfiability of a sentence in propositional logic. The ideas behind FIND-PURE-SYMBOL and FIND-UNIT-CLAUSE are described in the text; each returns a symbol (or null) and the truth value to assign to that symbol. Like TT-ENTAILS?, DPLL operates over partial models.

What Figure 7.17 does not show are the tricks that enable SAT solvers to scale up to large problems. It is interesting that most of these tricks are in fact rather general, and we have seen them before in other guises:

1. Component analysis (as seen with Tasmania in CSPs): As DPLL assigns truth values to variables, the set of clauses may become separated into disjoint subsets, called components, that share no unassigned variables. Given an efficient way to detect when this occurs, a solver can gain considerable speed by working on each component separately.

2. Variable and value ordering (as seen in Section 6.3.1 for CSPs): Our simple implementation of DPLL uses an arbitrary variable ordering and always tries the value true before false. The degree heuristic (see page 193) suggests choosing the variable that appears most frequently over all remaining clauses.

3. Intelligent backtracking (as seen in Section 6.3.3 for CSPs): Many problems that cannot be solved in hours of run time with chronological backtracking can be solved in seconds with intelligent backtracking that backs up all the way to the relevant point of conflict. All SAT solvers that do intelligent backtracking use some form of conflict clause learning to record conflicts so that they won’t be repeated later in the search. Usually a limited-size set of conflicts is kept, and rarely used ones are dropped.

4. Random restarts (as seen on page 113 for hill climbing): Sometimes a run appears not to be making progress. In this case, we can start over from the top of the search tree, rather than trying to continue. After restarting, different random choices (in variable and value selection) are made. Clauses that are learned in the first run are retained after the restart and can help prune the search space. Restarting does not guarantee that a solution will be found faster, but it does reduce the variance of the time to solution.

5. Clever indexing (as seen in many algorithms): The speedup methods used in DPLL itself, as well as the tricks used in modern solvers, require fast indexing of such things as “the set of clauses in which variable $X_i$ appears as a positive literal.” This task is complicated by the fact that the algorithms are interested only in the clauses that have not yet been satisfied by previous assignments to variables, so the indexing structures must be updated dynamically as the computation proceeds.
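Two of these tricks are easy to sketch. The code below detects components with a union-find structure over the unassigned symbols of clauses that are not yet satisfied. It picks a branching variable by the degree heuristic, counting occurrences in those remaining clauses. Both the union-find approach and the function names are illustrative choices, not a description of how any particular solver works; real solvers maintain such information incrementally.

```python
from collections import Counter

# Assumed encoding: clauses are lists of literal strings, "~" marks negation;
# model is a partial assignment dict from symbol to bool.

def sym(lit):
    return lit.lstrip("~")

def live_clauses(clauses, model):
    """Clauses not yet satisfied that still contain an unassigned symbol."""
    live = []
    for c in clauses:
        satisfied = any(sym(l) in model and model[sym(l)] != l.startswith("~") for l in c)
        if not satisfied and any(sym(l) not in model for l in c):
            live.append(c)
    return live

def components(clauses, model):
    """Group live clauses so that different groups share no unassigned symbol."""
    parent = {}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]   # path halving
            x = parent[x]
        return x

    live = live_clauses(clauses, model)
    for c in live:
        free = [sym(l) for l in c if sym(l) not in model]
        for s in free:
            parent.setdefault(s, s)
        for s in free[1:]:
            parent[find(s)] = find(free[0])  # union all free symbols of the clause
    groups = {}
    for c in live:
        root = find(next(sym(l) for l in c if sym(l) not in model))
        groups.setdefault(root, []).append(c)
    return list(groups.values())

def degree_choice(clauses, model):
    """Unassigned symbol that occurs most often in the remaining clauses."""
    counts = Counter(sym(l) for c in live_clauses(clauses, model)
                     for l in c if sym(l) not in model)
    return counts.most_common(1)[0][0] if counts else None

clauses = [["A", "X"], ["~X", "B"], ["C", "X"], ["D", "~C"]]
print(len(components(clauses, {})))           # 1: X links every clause
print(len(components(clauses, {"X": True})))  # 2
print(degree_choice(clauses, {}))             # X
```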

With these enhancements, modern solvers can handle problems with tens of millions of variables. They have revolutionized areas such as hardware verification and security protocol verification, which previously required laborious, hand-guided proofs.

7.6.2 Local search algorithms

We have seen several local search algorithms so far in this book, including HILL-CLIMBING (page 111) and SIMULATED-ANNEALING (page 115). These algorithms can be applied directly to satisfiability problems, provided that we choose the right evaluation function. Because the goal is to find an assignment that satisfies every clause, an evaluation function that counts the number of unsatisfied clauses will do the job. In fact, this is exactly the measure used by the MIN-CONFLICTS algorithm for CSPs (page 198). All these algorithms take steps in the space of complete assignments, flipping the truth value of one symbol at a time. The space usually contains many local minima, to escape from which various forms of randomness are required. In recent years, there has been a great deal of experimentation to find a good balance between greediness and randomness.

One of the simplest and most effective algorithms to emerge from all this work is called WALKSAT (Figure 7.18). On every iteration, the algorithm picks an unsatisfied clause and picks a symbol in the clause to flip. It chooses randomly between two ways to pick which symbol to flip: (1) a “min-conflicts” step that minimizes the number of unsatisfied clauses in the new state and (2) a “random walk” step that picks the symbol randomly.
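A minimal Python sketch of the loop just described follows. Here p is the random-walk probability and max_flips is the flip budget, matching the inputs listed in Figure 7.18. The clause encoding, the optional rng parameter (added so runs can be seeded), and the use of None for failure are our assumptions. The min-conflicts step scores each candidate flip by the number of clauses it would leave unsatisfied, which is the evaluation function described in the previous paragraph.

```python
import random

# Assumed encoding: clauses are lists of literal strings, "~" marks negation.
# Assumes no empty clauses (an empty clause can never be satisfied).

def walksat(clauses, p=0.5, max_flips=10_000, rng=None):
    """Return a satisfying model (dict) or None after max_flips flips."""
    rng = rng or random.Random()
    symbols = sorted({lit.lstrip("~") for c in clauses for lit in c})
    model = {s: rng.choice((True, False)) for s in symbols}

    def satisfied(clause):
        return any(model[l.lstrip("~")] != l.startswith("~") for l in clause)

    def unsat_after_flip(s):
        """Number of unsatisfied clauses if s were flipped (min-conflicts score)."""
        model[s] = not model[s]
        n = sum(1 for c in clauses if not satisfied(c))
        model[s] = not model[s]          # undo the trial flip
        return n

    for _ in range(max_flips):
        unsatisfied = [c for c in clauses if not satisfied(c)]
        if not unsatisfied:
            return dict(model)
        clause = rng.choice(unsatisfied)
        if rng.random() < p:
            # Random walk step: flip a randomly chosen symbol from the clause.
            s = rng.choice(clause).lstrip("~")
        else:
            # Min-conflicts step: flip the symbol leaving the fewest unsatisfied clauses.
            s = min((l.lstrip("~") for l in clause), key=unsat_after_flip)
        model[s] = not model[s]
    return None

clauses = [["A", "~B"], ["~B", "~C"], ["C", "A"]]
print(walksat(clauses, rng=random.Random(0)))
print(walksat([["A"], ["~A"]], max_flips=100))  # None
```

A return value of None after max_flips does not prove that the clauses are unsatisfiable. Like other local search methods, WalkSAT can find a model, but it can never certify that none exists.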

Figure 7.18

function WALKSAT(clauses, p, max_flips) returns a satisfying model or failure

inputs: clauses, a set of clauses in propositional logic

p, the probability of choosing to do a “random walk” move, typically around 0.5

max_flips, number of value flips allowed before giving up